当前位置:网站首页>Anti attack based on conjugate gradient method

Anti attack based on conjugate gradient method

2022-07-23 09:05:00 【Ghost road 2022】

1 introduction

Deep learning models are vulnerable to attacks against samples , Although the existing methods based on the fastest descent have achieved a high attack success rate , But the ill conditioned problem of optimization will occasionally reduce their attack performance . To solve this problem , In this paper, the author draws lessons from the conjugate gradient method which is effective for such problems , A new anti attack algorithm based on conjugate gradient method is proposed . In fact, in the optimization course of the University , It will involve learning the fastest descent method , conjugate gradient method , And quasi Newton method . The author applies the conjugate gradient method to combat attacks very well . Experimental results show that , For most models , The method proposed in this paper is better than the existing SOTA The algorithm can find better confrontation samples with fewer iterations , Moreover, the more diversified search of the method proposed in this paper significantly improves the success rate of countering attacks .

Thesis link : https://arxiv.org/abs/2206.09628

Paper code : https://github.com/yamamura-k/ACG

2 Against attack

Let locally differentiable functions g : D ⊆ R m → R K g:\mathcal{D}\subseteq \mathbb{R}^m\rightarrow \mathbb{R}^K g:D⊆Rm→RK It's a K K K Class classifier , The category label is arg max k ( g k ( ⋅ ) ) \arg\max\limits_k (g_k(\cdot)) argkmax(gk(⋅)). sample x o r i g ∈ D \boldsymbol{x}_{\mathrm{orig}}\in \mathcal{D} xorig∈D By classifier g g g Classified as c c c. Given the distance function d ( ⋅ , ⋅ ) d(\cdot,\cdot) d(⋅,⋅) And disturbance size ε > 0 \varepsilon>0 ε>0, The feasible region for countering attacks is defined as S = { x ∈ D ∣ d ( x o r i g , x ) ≤ ε } \mathcal{S}=\{\boldsymbol{x}\in \mathcal{D}|d(\boldsymbol{x}_{\mathrm{orig}},\boldsymbol{x})\le \varepsilon\} S={ x∈D∣d(xorig,x)≤ε}, Counter samples x a d v \boldsymbol{x}_{\mathrm{adv}} xadv Defined as arg max k g k ( x a d v ) ≠ c , d ( x o r i g , x a d v ) ≤ ε \arg\max\limits_k g_k(\boldsymbol{x}_{\mathrm{adv}})\ne c,\text{ } d(\boldsymbol{x}_{\mathrm{orig}},\boldsymbol{x}_{\mathrm{adv}})\le \varepsilon argkmaxgk(xadv)=c, d(xorig,xadv)≤ε Make L L L To find the confrontation sample x a d v \boldsymbol{x}_{\mathrm{adv}} xadv The objective function of . The mathematical form of fighting attacks is as follows : max x ∈ D L ( g ( x ) , c ) s . t . d ( x o r i g , x ) ≤ ε \max\limits_{\boldsymbol{x}\in\mathcal{D}}L(g(\boldsymbol{x}),c)\quad \mathrm{s.t.}\text{ }d(\boldsymbol{x}_{\mathrm{orig}},\boldsymbol{x})\le \varepsilon x∈DmaxL(g(x),c)s.t. d(xorig,x)≤ε The above formula makes the sample x \boldsymbol{x} x For classifiers g g g Classify into c c c Class differentiation decreases . In general , There are two kinds of distances used against attacks , They are Euclidean distances d ( v , w ) : = ∥ v − w ∥ 2 d(\boldsymbol{v},\boldsymbol{w}):=\|\boldsymbol{v}-\boldsymbol{w}\|_2 d(v,w):=∥v−w∥2, Uniform distance d ( v , w ) : = ∥ v − w ∥ ∞ d(\boldsymbol{v},\boldsymbol{w}):=\|\boldsymbol{v}-\boldsymbol{w}\|_\infty d(v,w):=∥v−w∥∞ And D = [ 0 , 1 ] m \mathcal{D}=[0,1]^m D=[0,1]m.

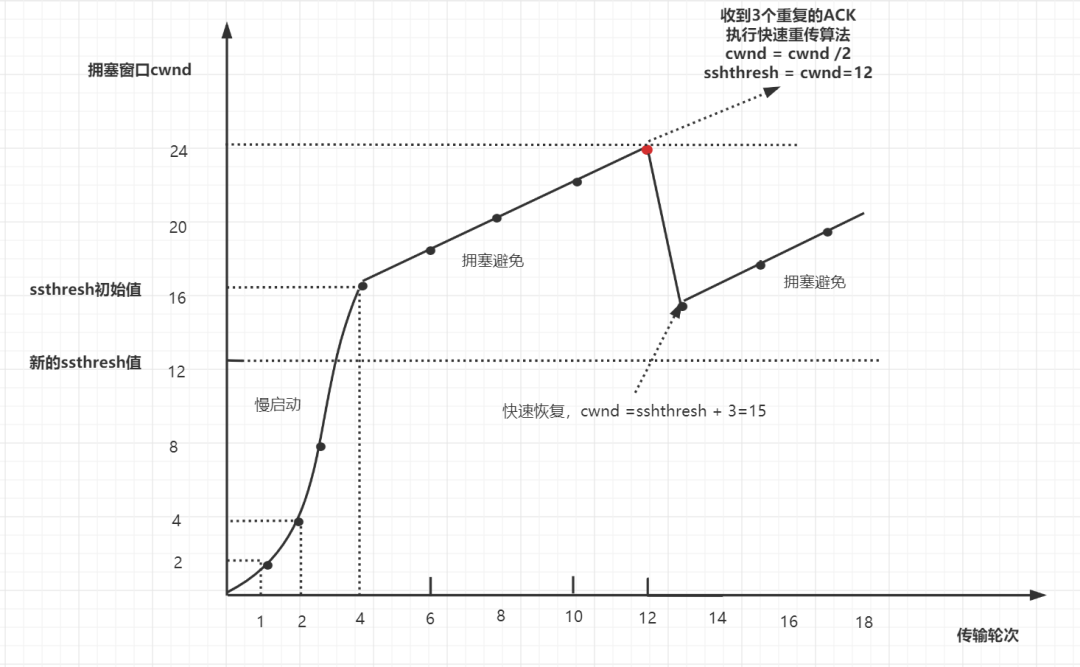

PGD Attack is a very important method to combat attack . Given classifier f : R m → R f:\mathbb{R}^m\rightarrow \mathbb{R} f:Rm→R,PGD The mathematical form of the generated countermeasure sample is x ( k + 1 ) = P S ( x ( k ) + η ( k ) ∇ f ( x ( k ) ) ) \boldsymbol{x}^{(k+1)}=P_\mathcal{S}(\boldsymbol{x}^{(k)}+\eta^{(k)}\nabla f(\boldsymbol{x}^{(k)})) x(k+1)=PS(x(k)+η(k)∇f(x(k))), among η ( k ) \eta^{(k)} η(k) The step size of resisting disturbance , P S P_\mathcal{S} PS Project the sample to the feasible region S \mathcal{S} S The operation of .APGD The attack was PGD An improved version of the attack , It introduces momentum operation in the iterative process . Make δ ( k ) \boldsymbol{\delta^{(k)}} δ(k) Iterate the direction of attack for each step ( namely ∇ f ( x k ) \nabla f({\bf{x}}^{k}) ∇f(xk)),APGD The specific form of attack is as follows :

z ′ ( k + 1 ) = x ( k ) + η ( k ) σ ( δ ( k ) ) z ( k + 1 ) = P S ( z ′ ( k ) ) x ( k + 1 ) = P S ( x ( k ) + α ( z ( k + 1 ) − x ( k ) ) + ( 1 − α ) ( x ( k ) − x ( k − 1 ) ) ) \begin{aligned}\boldsymbol{z}^{\prime(k+1)}&=\boldsymbol{x}^{(k)}+\eta^{(k)}\sigma(\boldsymbol{\delta}^{(k)})\\\boldsymbol{z}^{(k+1)}&=P_{\mathcal{S}}(\boldsymbol{z}^{\prime(k)})\\\boldsymbol{x}^{(k+1)}&=P_{\mathcal{S}}(\boldsymbol{x}^{(k)}+\alpha(\boldsymbol{z}^{(k+1)}-\boldsymbol{x}^{(k)})+(1-\alpha)(\boldsymbol{x}^{(k)}-\boldsymbol{x}^{(k-1)}))\end{aligned} z′(k+1)z(k+1)x(k+1)=x(k)+η(k)σ(δ(k))=PS(z′(k))=PS(x(k)+α(z(k+1)−x(k))+(1−α)(x(k)−x(k−1))) among σ \sigma σ Expressed as a way of regularization , α \alpha α Is the intensity coefficient of momentum , Generally, take α = 0.75 \alpha=0.75 α=0.75.

3 Conjugate gradient attack

Conjugate gradient method is generally used to solve linear problems , Then it is extended to solve convex quadratic minimization problems and general nonlinear problems . Conjugate gradient method can be used in unconstrained and projection constrained problems . Given an initial point x ( 0 ) \boldsymbol{x}^{(0)} x(0), Initial conjugate gradient s 0 \boldsymbol{s}^{0} s0 Set to 0 \boldsymbol{0} 0, The first k k k Search points x ( k ) \boldsymbol{x}^{(k)} x(k) And conjugate gradient s ( k ) \boldsymbol{s}^{(k)} s(k) Is updated to s ( k ) = − ∇ f ( x ( k ) ) + β ( k ) s ( k − 1 ) η ( k ) = arg min { f ( x ( k ) + η s ( k ) ) ∣ η ≥ 0 } x ( k + 1 ) = x ( k ) + η ( k ) s ( k ) \begin{aligned}\boldsymbol{s}^{(k)}&=-\nabla f(\boldsymbol{x}^{(k)})+\beta^{(k)}\boldsymbol{s}^{(k-1)}\\\eta^{(k)}&=\argmin\{f(\boldsymbol{x}^{(k)}+\eta \boldsymbol{s}^{(k)})|\eta \ge 0\}\\\boldsymbol{x}^{(k+1)}&=\boldsymbol{x}^{(k)}+\eta^{(k)}\boldsymbol{s}^{(k)}\end{aligned} s(k)η(k)x(k+1)=−∇f(x(k))+β(k)s(k−1)=argmin{ f(x(k)+ηs(k))∣η≥0}=x(k)+η(k)s(k) among k ≥ 1 k\ge 1 k≥1, β ( k ) \beta^{(k)} β(k) It is the parameter calculated from the past search information . η ( k ) \eta^{(k)} η(k) Usually satisfied by something similar to Wolfe Determined by conditional linear search , Because it is difficult to solve the following problems η ( k ) = arg min { f ( x ( k ) + η s ( k ) ) ∣ η ≥ 0 } \eta^{(k)}=\argmin\{f(\boldsymbol{x}^{(k)}+\eta \boldsymbol{s}^{(k)})|\eta \ge 0\} η(k)=argmin{ f(x(k)+ηs(k))∣η≥0} Consider minimizing a strictly quadratic convex function problem f ( x ) = x ⊤ A x + b ⊤ x f(\boldsymbol{x})=\boldsymbol{x}^\top A\boldsymbol{x}+\boldsymbol{b}^\top \boldsymbol{x} f(x)=x⊤Ax+b⊤x among A A A It's a positive definite matrix , x ∈ R n \boldsymbol{x}\in\mathbb{R}^n x∈Rn. under these circumstances , coefficient β ( k ) \beta^{(k)} β(k) The calculation formula of is β ( k ) = * A s ( k − 1 ) , − ∇ f ( x ( k ) ) * * A s ( k − 1 ) , s ( k − 1 ) * \beta^{(k)}=\frac{\langle A\boldsymbol{s}^{(k-1)},-\nabla f(\boldsymbol{x}^{(k)}) \rangle}{\langle A \boldsymbol{s}^{(k-1)},\boldsymbol{s}^{(k-1)} \rangle} β(k)=*As(k−1),s(k−1)**As(k−1),−∇f(x(k))* When the objective function is strictly convex , From the conjugate gradient method , Less than n n n The global optimal solution can be found in the next iteration .

For nonlinear problems , There are some calculation coefficients β ( k ) \beta^{(k)} β(k) The formula is proposed , In this paper, the author uses the coefficient calculation formula as follows : β H S ( k ) = * ∇ f ( x ( k ) , y ( k − 1 ) ) * * s ( k − 1 ) , y ( k − 1 ) * \beta^{(k)}_{HS}=\frac{\langle \nabla f(\boldsymbol{x}^{(k)},\boldsymbol{y}^{(k-1)})\rangle}{\langle\boldsymbol{s}^{(k-1)},\boldsymbol{y}^{(k-1)}\rangle} βHS(k)=*s(k−1),y(k−1)**∇f(x(k),y(k−1))* among y ( k − 1 ) = ∇ f ( x ( k − 1 ) ) − ∇ f ( x ( k ) ) \boldsymbol{y}^{(k-1)}=\nabla f(\boldsymbol{x}^{(k-1)})-\nabla f(\boldsymbol{x}^{(k)}) y(k−1)=∇f(x(k−1))−∇f(x(k)), And β ( k ) ≥ 0 \beta^{(k)}\ge 0 β(k)≥0, You can also use max { β ( k ) , 0 } \max\{\beta^{(k)},0\} max{ β(k),0} Instead of β ( k ) \beta^{(k)} β(k). Proposed by the paper ACG The specific form of the algorithm is as follows : y ( k − 1 ) = ∇ f ( x ( k − 1 ) ) − ∇ f ( x ( k ) ) β H S ( k ) = * − ∇ f ( x ( k ) ) , y ( k − 1 ) * * s ( k − 1 ) , y ( k − 1 ) * s ( k ) = ∇ f ( x ( k ) ) + β H S ( k ) s ( k − 1 ) x ( k + 1 ) = P S ( x ( k ) + η ( k ) ⋅ σ ( s ( k ) ) ) \begin{aligned}\boldsymbol{y}^{(k-1)}&=\nabla f(\boldsymbol{x}^{(k-1)})-\nabla f(\boldsymbol{x}^{(k)})\\\beta^{(k)}_{HS}&=\frac{\langle -\nabla f(\boldsymbol{x}^{(k)}),\boldsymbol{y}^{(k-1)}\rangle}{\langle \boldsymbol{s}^{(k-1)},\boldsymbol{y}^{(k-1)}\rangle }\\\boldsymbol{s}^{(k)}&=\nabla f(\boldsymbol{x}^{(k)})+\beta^{(k)}_{HS} \boldsymbol{s}^{(k-1)}\\\boldsymbol{x}^{(k+1)}&=P_{\mathcal{S}}\left(\boldsymbol{x}^{(k)}+\eta^{(k)}\cdot \sigma(\boldsymbol{s}^{(k)})\right)\end{aligned} y(k−1)βHS(k)s(k)x(k+1)=∇f(x(k−1))−∇f(x(k))=*s(k−1),y(k−1)**−∇f(x(k)),y(k−1)*=∇f(x(k))+βHS(k)s(k−1)=PS(x(k)+η(k)⋅σ(s(k))) The search algorithm of attack step size is as follows :

( I ) N i n c < ρ ⋅ ( w j − w j − 1 ) ( I I ) η ( w j − 1 ) = η ( w j ) a n d f max ( w j − 1 ) = f max ( w j ) \begin{aligned}(\mathrm{I})&\quad N_{\mathrm{inc}} <\rho\cdot(w_j-w_{j-1})\\(\mathrm{II})&\quad \eta^{(w_j-1)}=\eta^{(w_j)}\text{ }\mathrm{and} \text{ } f_{\max}^{({w}_{j-1})}=f_{\max}^{(w_j)} \end{aligned} (I)(II)Ninc<ρ⋅(wj−wj−1)η(wj−1)=η(wj) and fmax(wj−1)=fmax(wj)

- Conditions ( I ) (\mathrm{I}) (I) It means that the number of objective functions corresponding to the growth point is less than a certain threshold , Then the local maximum point has a high probability to occur in this interval . The details are shown in the following example :

- When the function value of a discontinuity rises and falls abruptly , Then the condition is ( I I ) (\mathrm{II}) (II) There will also be local maximum points , The specific examples are as follows :

in summary , The final algorithm flow chart is shown below :

4 Paper understanding

The automatic conjugate gradient counter attack proposed by the author of this paper is different from the original conjugate gradient method . Corresponding to nonlinear optimization problem , Given point x ( k ) \boldsymbol{x}^{(k)} x(k) And conjugate direction s ( k ) \boldsymbol{s}^{(k)} s(k), The original conjugate gradient method will first be at point x ( k ) \boldsymbol{x}^{(k)} x(k) Along the direction conjugate direction s ( k ) \boldsymbol{s}^{(k)} s(k) Search for the next iteration point that maximizes the objective function s ( k + 1 ) \boldsymbol{s}^{(k+1)} s(k+1), The iteration step is η ( k ) \eta^{(k)} η(k) For the problem of positive definite quadratic form , The above steps can be solved directly ; Nonlinear problems can be solved by linear search or other search algorithms . Then find the conjugate direction s ( k + 1 ) \boldsymbol{s}^{(k+1)} s(k+1). In this paper , The author directly gives the iteration step size and the set of search discontinuities , Then, as the iteration progresses, the iteration discontinuity is continuously reduced to resist disturbance η ( k ) \eta^{(k)} η(k) Size , Thus, the optimal anti disturbance is finally obtained . If according to the original conjugate gradient method , The algorithm flow of countering attack based on conjugate gradient method can be changed as follows :

- step 0: Given the initial point x ( 0 ) \boldsymbol{x}^{(0)} x(0), neural network f f f, The feasible region S \mathcal{S} S, Note that the initial conjugate gradient direction is s ( 0 ) = ∇ f ( x ( 0 ) ) \boldsymbol{s}^{(0)}=\nabla f(\boldsymbol{x}^{(0)}) s(0)=∇f(x(0))

- step 1: By linear search in the conjugate direction s ( k ) \boldsymbol{s}^{(k)} s(k) Search attack step on η ( k ) ∈ [ 0 , 1 ] \eta^{(k)}\in [0,1] η(k)∈[0,1], bring η ( k ) = arg min { f ( x ( k ) + η ( k ) ⋅ s ( k ) ) } \eta^{(k)}=\arg\min\{f(\boldsymbol{x}^{(k)}+\eta^{(k)}\cdot \boldsymbol{s}^{(k)} )\} η(k)=argmin{ f(x(k)+η(k)⋅s(k))}

- step 2: Get the second k + 1 k+1 k+1 Step confrontation sample x k + 1 \boldsymbol{x}^{k+1} xk+1 by x ( k + 1 ) = P S ( x ( k ) + η ( k ) ⋅ σ ( s ( k ) ) ) \boldsymbol{x}^{(k+1)}=P_{\mathcal{S}}\left(\boldsymbol{x}^{(k)}+\eta^{(k)}\cdot \sigma(\boldsymbol{s}^{(k)})\right) x(k+1)=PS(x(k)+η(k)⋅σ(s(k)))

- step 3: Make k = k + 1 k=k+1 k=k+1, And go to the step 1 In the middle .

5 experimental result

The following table shows the paper method ACG And other gradient based methods APGD stay CIFAR10 Attack success rate of data set . You can intuitively find ,ACG The attack success rate of is higher than APGD Attack success rate of . These results suggest that , Regardless of the data set or the architecture of the model used ,ACG Both show higher attack performance . Besides ,ACG The method does not rely on random numbers to select the initial point , Because the initial point is the center of the feasible region , Thus we can see that ,ACG Only in deterministic operation, it is better than APGD.

The image below shows APGD and ACG Between the last two consecutive search points 2 The transformation of norms . Can be observed ACG Search point ratio APGD Our search points move further . Besides , To study projection pairs APGD Influence , The author also calculates the ratio of travel distance between two search points , It represents the amount of update distance wasted by projection . And ACG comparison ,APGD It shows a higher projection ratio in the travel distance . Due to the introduction of conjugate direction ,ACG Than APGD Move more .

The following figure is a visual example of conjugate gradient attack search for multimodal functions . The initial search point is represented by a white star , The search ends with a white square . Circles represent search points . Black circles indicate search diversification , White circles indicate search intensity . In the left “A” To “F” Indicates local optimum ,“E” Represents the global optimum . The examples on the left and in the middle are failed searches that cannot find the global optimal value due to the lack of diversification or intensification . The example on the right shows the search that successfully finds the global optimal value due to the appropriate balance between diversification and intensification . The following figure shows enhanced search 、 Visualization of diversified search and appropriate search , Demonstrates the appropriate balance between diversification and reinforcement . Six local solutions can be observed from the contour of the function in the figure , among “E” The local solution of the position is the global optimal solution .

6 Core code example

The algorithm source code is provided in the paper , The code is a little complicated , The following code is a relatively simple core code rewritten according to the core algorithm of the paper .

class ACG_attack(object):

# Input_x shape : R^n

def __init__(self, input_x, input_y, model, N_iter, W_set, eta):

self.input_x = input_x

self.input_y = input_y

self.model = model

self.eta = eta

self.N_iter = N_iter

self.W_set = W_set

self.rho = rho

def judgement(self, k, x_new, x_old, eta_list, loss_list):

flag = False

CE = nn.CrossEntropyLoss()

count = 0

j = self.W_set.index(k)

for i in range(self.W_set[j-1], k-1):

y_new = self.model(x_new)

y_old = self.model(x_old)

if CE(y_new, self.input_y) > CE(y_old, self.input_y):

count += 1

if count < self.rho * (k - self.W_set[j-1]):

flag = True

if eta_list[k] == eta_list[self.W_set[j-1]] and loss_list[k] == loss_list[self.W_set[j-1]]:

flag = True

return flag

def attack(self):

eta_list = []

loss_list = []

nabla_list = []

s_list = []

input_shape = self.input_x.shape

self.input_x.requires_grad = True

CE = nn.CrossEntropyLoss()

x_adv = self.input_x.view(-1)

x_pre = self.input_x.view(-1)

x_adv.requires_grad = True

x_pre.requires_grad = True

beta = 0

eta_old = self.eta

# Compute the graident of f w.r.t x

y = model(x_pre)

loss = CE(y, self.input_y)

loss.backward()

s_0 = self.input_x.grad.detach().view(-1)

s_pre = s_0

# Auto conjugate gradient method

x_old = x_pre

s_old = s_pre

loss_list.append(loss)

eta_list.append(self.eta)

nabla_list.append(s_pre)

s_list.append(s_pre)

for k in range(self.N_iter):

x_new = torch.clamp( x_old + eta_pre * torch.sign(s_old) , 0, 1)

x_new.requires_grad = True

y_new = self.model(x_new)

y_adv = self.model(x_adv)

loss_new = CE(y_new, self.input_y)

loss_old = CE(y_adv, self.input_y)

loss_new.backward()

loss_list.append(loss_new)

y_list = []

if CE(y_new, self.input_y) > CE(y_adv, self.input_y):

x_adv = x_new

x_pre = x_old

s_pre = s_old

eta_new = eta_old

eta_list.append()

nabla_list.append(x_new.grad.data)

if k in self.W_set[1:]:

if self.judgement(k, x_new, x_old, eta_list, loss_list):

eta_new = eta_old / 2

eta_list[-1] = eta_new

x_new = x_adv

x_old = x_pre

s_old = s_pre

y_list.append(nabla_list[-1].view(-1)-nabla_list[-2].view(-1))

beta = torch.dot(-nabla_list[-1].view(-1), y_list[-1].view(-1))/(torch.dot(s_list[-1].view(-1)), y_list[-1].view(-1))

beta_list = beta

s_new = nabla_list[-1] + beta * s_list[-1]

s_list.append(s_new)

return x_adv

Summary of new words

diversified: diversify

renders: present

discriminative: Differentiated

边栏推荐

- Camera IQ: 76% of consumers have experienced AR, and 49% are willing to share ar advertisements

- 元宇宙并不是一个像互联网一样将流量看成是终极追求的存在

- SQL Server database design -- select statement 2

- Regular expression conversion to corresponding text gadget

- K3S - 轻量级Kubernetes集群

- [ctfshow-web入门]SSRF

- Unity3d learning note 9 - loading textures

- 50道经典计算机网络面试题,你答得上几个?(上)

- 驱动单片机硬件调试器的一些开源库总结(包含stlink调试器)

- 生成13位条形码Ean-13码规则:第十三位数字是前十二位数字经过计算得到的校验码。

猜你喜欢

Unity中实现判断Missing还是Null

疫情隔离区订餐系统的开发

![[concurrent programming] Chapter 2: go deep into the reentrantlock lock lock from the core source code](/img/df/f29eed667c2a7dc02d93ac3f424198.png)

[concurrent programming] Chapter 2: go deep into the reentrantlock lock lock from the core source code

![[ctfshow-web入门]SSRF](/img/eb/19c215fcacc0f101510a77c6d1edc3.png)

[ctfshow-web入门]SSRF

LiveQing直播点播流媒体OBS推流直播如何获得接口校验token视频校验streamToken及配置token有效期

In depth explanation of CAS is necessary for interview practice

50道经典计算机网络面试题,你答得上几个?(二)

50道经典计算机网络面试题,你答得上几个?(三)

50道经典计算机网络面试题,你答得上几个?(上)

宝塔安装hyperf

随机推荐

How many of the 50 classic computer network interview questions can you answer? (II)

LiveQing直播点播流媒体OBS推流直播如何获得接口校验token视频校验streamToken及配置token有效期

Day05 MySql的基础使用

Geely Xingrui: from product technology empowerment to cultural confidence

The most detailed explanation of the output of three numbers from large to small

白盒测试的概念及测试方法

Jmeter---Jmeter安装教程

深度讲解CAS,面试实践必备

触发器基础知识(下)

生成13位条形码Ean-13码规则:第十三位数字是前十二位数字经过计算得到的校验码。

[英雄星球七月集训LeetCode解题日报] 第22日 有序集合

Family fraud is prevalent, and Sogou number builds a security firewall

数论 —— 整除分块,常见经典例题。

亲情诈骗盛行,搜狗号码通筑安全防火墙

基于SSM的博客系统【带后台管理】

生成13位条形码

吉利星瑞:从产品技术赋能到文化自信

[ctfshow web getting started]ssrf

全新 IDEA 2022.2 正式发布,新特性很NICE

发现了一个好用到爆的数据分析利器