Li Hongyi Machine Learning (2017 Edition)_P14: Backpropagation

2022-07-27 01:12:00 【Although Beihai is on credit, Fuyao can take it】

Related information

Open source content: https://linklearner.com/datawhale-homepage/index.html#/learn/detail/13

Open source content: https://github.com/datawhalechina/leeml-notes

Open source content: https://gitee.com/datawhalechina/leeml-notes

Video address: https://www.bilibili.com/video/BV1Ht411g7Ef

Official address: http://speech.ee.ntu.edu.tw/~tlkagk/courses.html

Reference note address: https://datawhalechina.github.io/leeml-notes/#/chapter14/chapter14

1、 Gradient Descent

- Start from the network parameters $\theta$ (weights and biases)

- Choose an initial value $\theta^0$, then compute the partial derivative of the loss function with respect to each parameter at $\theta^0$

- These partial derivatives form a vector (the gradient), which is then used to update $\theta$

- A network can have millions of parameters

Backpropagation is an algorithm that computes this gradient vector efficiently, which matters when the network has millions of parameters.
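The update loop described above can be sketched in a few lines of Python. This is only an illustration: the quadratic loss, the learning rate, and the function names are assumptions made for the example and do not come from the course notes.

```python
# Minimal gradient descent sketch on a toy quadratic loss (illustrative only).

def loss(theta):
    # Hypothetical loss: L(w, b) = (w - 3)^2 + (b + 1)^2, minimized at (3, -1).
    w, b = theta
    return (w - 3.0) ** 2 + (b + 1.0) ** 2

def gradient(theta):
    # Vector of partial derivatives [dL/dw, dL/db] evaluated at theta.
    w, b = theta
    return [2.0 * (w - 3.0), 2.0 * (b + 1.0)]

def gradient_descent(theta0, learning_rate=0.1, steps=200):
    theta = list(theta0)
    for _ in range(steps):
        grad = gradient(theta)
        # Update rule: theta <- theta - learning_rate * gradient
        theta = [t - learning_rate * g for t, g in zip(theta, grad)]
    return theta

theta_final = gradient_descent([0.0, 0.0])
```

Here the gradient is written by hand because the loss is so simple. For a real network, producing that gradient vector is exactly the job that backpropagation does.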

2、 The Chain Rule (Single-Variable and Multivariable)

For a multivariable function, the chain rule requires taking the partial derivative through each inner function and summing the results (Case 2 in the figure).
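Written out, the two cases of the chain rule are usually stated as follows. The function names $g$, $h$, $k$ and the variable $s$ are labels assumed here for illustration, since the figure is not reproduced in these notes.

$$
\text{Case 1: } y = g(x),\ z = h(y) \quad\Rightarrow\quad \frac{dz}{dx} = \frac{dz}{dy}\frac{dy}{dx}
$$

$$
\text{Case 2: } x = g(s),\ y = h(s),\ z = k(x, y) \quad\Rightarrow\quad \frac{dz}{ds} = \frac{\partial z}{\partial x}\frac{dx}{ds} + \frac{\partial z}{\partial y}\frac{dy}{ds}
$$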

3、 Backpropagation

3.1、 Loss Function Calculation

The neural network (model) is structured as in the figure. The task is to compute the partial derivatives of the outputs $y_1$, $y_2$ with respect to the parameters $w_1$, $w_2$.

The total loss function is the sum of the loss on each individual training example, so its gradient is the sum of the per-example gradients.
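In symbols, this can be written as below. The names $L$ for the total loss, $l^n$ for the loss on the $n$-th example, and $N$ for the number of examples are assumptions chosen to match the lowercase $l$ used in the rest of these notes.

$$
L(\theta) = \sum_{n=1}^{N} l^n(\theta) \quad\Rightarrow\quad \frac{\partial L(\theta)}{\partial w} = \sum_{n=1}^{N} \frac{\partial l^n(\theta)}{\partial w}
$$

So it is enough to work out $\frac{\partial l}{\partial w}$ for a single example and then sum over the data.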

3.2、 Gradient (Partial Derivative) Calculation

Apply the chain rule to split the derivative into two factors:

$$\frac{\partial l}{\partial w}= \frac{\partial z}{\partial w}\frac{\partial l}{\partial z}$$

Here $\frac{\partial z}{\partial w}$ is obtained in the forward pass; its value is the input $x$ on the connection that $w$ belongs to.

$\frac{\partial l}{\partial z}$ is obtained in the backward pass and has to be worked out case by case:

Take a single neuron and analyze it:

Let $a = \sigma(z)$ be the output of the activation function, and let $z^{\prime}$, $z^{\prime \prime}$ be the inputs of the neurons in the next layer. Applying the chain rule:

$$\frac{\partial l}{\partial z}= \frac{\partial a}{\partial z}\frac{\partial l}{\partial a} = \sigma^{\prime}(z)\frac{\partial l}{\partial a}, \qquad \frac{\partial l}{\partial a}= \frac{\partial z^{\prime}}{\partial a}\frac{\partial l}{\partial z^{\prime}}+ \frac{\partial z^{\prime \prime}}{\partial a}\frac{\partial l}{\partial z^{\prime \prime}}$$

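In the network diagram, the terms of the formula above are marked in bold.

Viewed from another angle, this looks like a different kind of neuron that runs the forward process in reverse: it takes $\frac{\partial l}{\partial z^{\prime}}$ and $\frac{\partial l}{\partial z^{\prime \prime}}$ as inputs, weights them by the connecting weights, and scales the sum by $\sigma^{\prime}(z)$. Here $\sigma^{\prime}(z)$ is a constant, because $z$ was already determined during the forward pass.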
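The "reversed neuron" can be sketched as a small Python function and checked numerically. The names `w3` and `w4` for the outgoing weights, the sigmoid activation, and the toy loss are all assumptions made for this example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_prime(z):
    s = sigmoid(z)
    return s * (1.0 - s)

def backward_through_neuron(z, w3, w4, dl_dz1, dl_dz2):
    # dl/dz = sigma'(z) * (w3 * dl/dz' + w4 * dl/dz'')
    # w3 = dz'/da and w4 = dz''/da are hypothetical names for the outgoing weights.
    return sigmoid_prime(z) * (w3 * dl_dz1 + w4 * dl_dz2)

def toy_loss(z, w3, w4):
    # Toy loss l = z' + 2 * z'' with z' = w3 * a and z'' = w4 * a,
    # so that dl/dz' = 1 and dl/dz'' = 2 are known exactly.
    a = sigmoid(z)
    return (w3 * a) + 2.0 * (w4 * a)

z, w3, w4 = 0.5, 0.8, -0.3
analytic = backward_through_neuron(z, w3, w4, dl_dz1=1.0, dl_dz2=2.0)

# Central finite difference on z, used only as an independent check.
eps = 1e-6
numeric = (toy_loss(z + eps, w3, w4) - toy_loss(z - eps, w3, w4)) / (2 * eps)
```

The two values agree up to finite-difference error, which suggests the formula is being applied correctly in this toy setting.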

3.3、 Case Analysis on the Output Layer

3.3.1、 The Next Layer Is the Output Layer

Suppose $z'$ and $z''$ feed the output layer, that is, the current neuron sits in the last hidden layer and $y_1$ and $y_2$ are the output values. Then $\frac{\partial l}{\partial z'}$ and $\frac{\partial l}{\partial z''}$ can be computed directly, and $\frac{\partial l}{\partial z}$ follows from them.
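Assuming that $y_1$ depends only on $z'$ and $y_2$ only on $z''$ (an element-wise output activation, which is an assumption of this sketch and would not hold for a softmax output), the two derivatives in this case can be written as:

$$
\frac{\partial l}{\partial z'} = \frac{\partial y_1}{\partial z'}\frac{\partial l}{\partial y_1}, \qquad \frac{\partial l}{\partial z''} = \frac{\partial y_2}{\partial z''}\frac{\partial l}{\partial y_2}
$$

The first factor in each product depends on the output activation function, and the second on the choice of loss.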

3.3.2、 The Next Layer Is Not the Output Layer (i.e. It Is a Hidden Layer)

In this case, first compute $\frac{\partial l}{\partial z_a}$ and $\frac{\partial l}{\partial z_b}$ for the following layer (shown in green in the figure), then weight them by $w_5$ and $w_6$ and scale by $\sigma'(z')$, as in section 3.2, to obtain $\frac{\partial l}{\partial z'}$. If $\frac{\partial l}{\partial z_a}$ and $\frac{\partial l}{\partial z_b}$ are not known either, keep moving on to later layers until the output layer is reached. Once the values at the output layer are available, work backward from there, layer by layer.

In practice, the backward pass requires about the same amount of computation as the forward pass.
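The whole procedure can be sketched end to end on a tiny network. The 2-2-2 layout, the sigmoid activations, the squared-error loss, and all variable names below are assumptions chosen for illustration. Bias gradients are left out to keep the sketch short.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, W1, b1, W2, b2):
    # Forward pass: keep every z and a, since the backward pass reuses them.
    z1 = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(W1, b1)]
    a1 = [sigmoid(z) for z in z1]
    z2 = [sum(w * ai for w, ai in zip(row, a1)) + b for row, b in zip(W2, b2)]
    y = [sigmoid(z) for z in z2]
    return z1, a1, z2, y

def loss(y, target):
    # Squared error, an assumed choice for the per-example loss l.
    return 0.5 * sum((yi - ti) ** 2 for yi, ti in zip(y, target))

def backward(x, target, W1, b1, W2, b2):
    z1, a1, z2, y = forward(x, W1, b1, W2, b2)
    # Output layer: dl/dz = dy/dz * dl/dy, with sigma'(z) = y * (1 - y).
    delta2 = [yi * (1.0 - yi) * (yi - ti) for yi, ti in zip(y, target)]
    # Hidden layer: dl/dz = sigma'(z) * sum_k w_k * dl/dz_k (the reversed neuron).
    delta1 = [
        a1[j] * (1.0 - a1[j]) * sum(W2[k][j] * delta2[k] for k in range(len(delta2)))
        for j in range(len(a1))
    ]
    # dl/dw = dz/dw * dl/dz, where dz/dw is the input on that connection.
    grad_W2 = [[d * a for a in a1] for d in delta2]
    grad_W1 = [[d * xi for xi in x] for d in delta1]
    return grad_W1, grad_W2

x, target = [1.0, -1.0], [1.0, 0.0]
W1, b1 = [[0.1, -0.2], [0.4, 0.3]], [0.0, 0.1]
W2, b2 = [[0.5, -0.6], [-0.1, 0.2]], [0.05, -0.05]
grad_W1, grad_W2 = backward(x, target, W1, b1, W2, b2)

def numeric_grad_W1(i, j, eps=1e-6):
    # Central finite difference on W1[i][j], used only to check the result.
    def loss_with(value):
        W = [row[:] for row in W1]
        W[i][j] = value
        return loss(forward(x, W, b1, W2, b2)[3], target)
    return (loss_with(W1[i][j] + eps) - loss_with(W1[i][j] - eps)) / (2 * eps)
```

One backward sweep yields the gradient for every weight, whereas the finite-difference check needs two extra forward passes per weight. That gap is why backpropagation is preferred once the parameter count grows.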

4、 Summary

$$\frac{\partial l}{\partial w}= \frac{\partial z}{\partial w}\frac{\partial l}{\partial z}$$

The goal is to compute $\frac{\partial z}{\partial w}$ (the forward pass) and $\frac{\partial l}{\partial z}$ (the backward pass), then multiply the two to obtain $\frac{\partial l}{\partial w}$, the partial derivative of the loss with respect to every parameter in the neural network. Gradient descent then uses these derivatives to update the parameters repeatedly, searching for the function with the smallest loss.

边栏推荐

- Spark On YARN的作业提交流程

- SQL learning (1) - table related operations

- 6. 世界杯来了

- 并发编程之生产者消费者模式

- Channel shutdown: channel error; protocol method: #method<channel.close>(reply-code=406, reply-text=

- 数据仓库知识点

- Tencent upgrades the live broadcast function of video Number applet. Tencent's foundation for continuous promotion of live broadcast is this technology called visual cube (mlvb)

- Small programs related to a large number of digital collections off the shelves of wechat: is NFT products the future or a trap?

- Flink based real-time project: user behavior analysis (II: real-time traffic statistics)

- Based on Flink real-time project: user behavior analysis (III: Statistics of total website views (PV))

猜你喜欢

Naive Bayes Multiclass训练模型

Game project export AAB package upload Google tips more than 150m solution

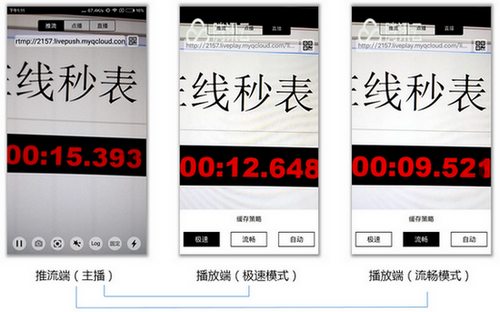

Zhimi Tencent cloud live mlvb plug-in optimization tutorial: six steps to improve streaming speed + reduce live delay

Redis -- cache avalanche, cache penetration, cache breakdown

深入理解Pod对象:基本管理

The basic concept of how Tencent cloud mlvb technology can highlight the siege in mobile live broadcasting services

腾讯升级视频号小程序直播功能,腾讯持续推广直播的底气是这项叫视立方(MLVB)的技术

One of the Flink requirements - processfunction (requirement: alarm if the temperature rises continuously within 30 seconds)

What are the necessary functions of short video app development?

网站日志采集和分析流程

随机推荐

李宏毅机器学习(2021版)_P7-9:训练技巧

Android——LitePal数据库框架的基本用法

Understanding of Flink interval join source code

SparkSql之编程方式

SQL学习(3)——表的复杂查询与函数操作

进入2022年,移动互联网的小程序和短视频直播赛道还有机会吗?

Spark on yarn's job submission process

深度学习笔记

DataNode Decommision

MYSQL 使用及实现排名函数RANK、DENSE_RANK和ROW_NUMBER

Uni-app 小程序 App 的广告变现之路:Banner 信息流广告

李宏毅机器学习(2017版)_P1-2:机器学习介绍

MySQL split table DDL operation (stored procedure)

ADB shell screen capture command

MYSQL数据库事务的隔离级别(详解)

FaceNet

Compile Darknet under vscode2015 to generate darknet Ext error msb3721: XXX has exited with a return code of 1.

pytorch张量数据基础操作

Tencent upgrades the live broadcast function of video Number applet. Tencent's foundation for continuous promotion of live broadcast is this technology called visual cube (mlvb)

Zhimi Tencent cloud live mlvb plug-in optimization tutorial: six steps to improve streaming speed + reduce live delay