Application of Mobile Face Stylization Technology

2022-07-28 11:55:00 【Alibaba Taobao Technology team official blog】

This article walks through the full pipeline of our face stylization technology and how it is applied in live streaming, short video and other scenarios. The technology can be used to liven up the atmosphere and enhance appearance, and in image-and-text scenarios such as buyer shows it can also protect facial privacy and add fun.

Preface

With the explosion of concepts such as the metaverse, digital humans and virtual avatars, all kinds of collaborative, interactive digital pan-entertainment applications keep landing. For example, in some games, players become virtual artists and take part in a faithful recreation of a real artist's daily work, and in certain situations their facial expressions are strongly mapped onto the virtual artist to enhance the sense of participation. Likewise, the hyper-realistic digital human AYAYI, launched by Alibaba's Tmall, teamed up with Jing Boran to "walk into" the magazine MO Magazine, breaking the traditional flat reading experience and giving readers an immersive experience that combines the virtual and the real.

In these pan-entertainment scenarios, "people" must come first. Manually designed digital and animated characters, however, tend to be too "abstract", expensive to produce, and lacking in personalization. For face digitization, we therefore developed a face stylization technology with good controllability, a strong sense of identity (ID) and an adjustable degree of stylization, which switches a face image into a customized style. The technology can be used in entertainment scenarios such as live streaming and short video to liven up the atmosphere and enhance appearance, and in image-and-text scenarios such as buyer shows it can also protect facial privacy and add fun. Imagine further: if different users gathered in a digital community and chatted and socialized using digital avatars in that community's style (for example, "Battle of Two Cities" fans talking to each other in the metaverse through avatars stylized after the show), the experience would be highly immersive.

The "Battle of Two Cities" animation

Left: the original AYAYI image. Right: the stylized image.

To bring face stylization to our different pan-entertainment business scenarios, such as live streaming, buyer shows and seller shows, we achieved the following:

Low-cost production of different face stylization editing models (all effects shown in this article were achieved without any design resources);

Appropriate editing of styles to match the style choices of the design, product and operations teams;

An adjustable balance between face ID preservation and degree of stylization;

Good model generalization, so that the model applies to different faces, angles and scene environments;

Lower compute requirements while maintaining sharpness and other quality criteria.

Next, let's look at a demo first, and then walk through our whole technical pipeline. Thanks to our product colleague, Adolphe.

Overall scheme

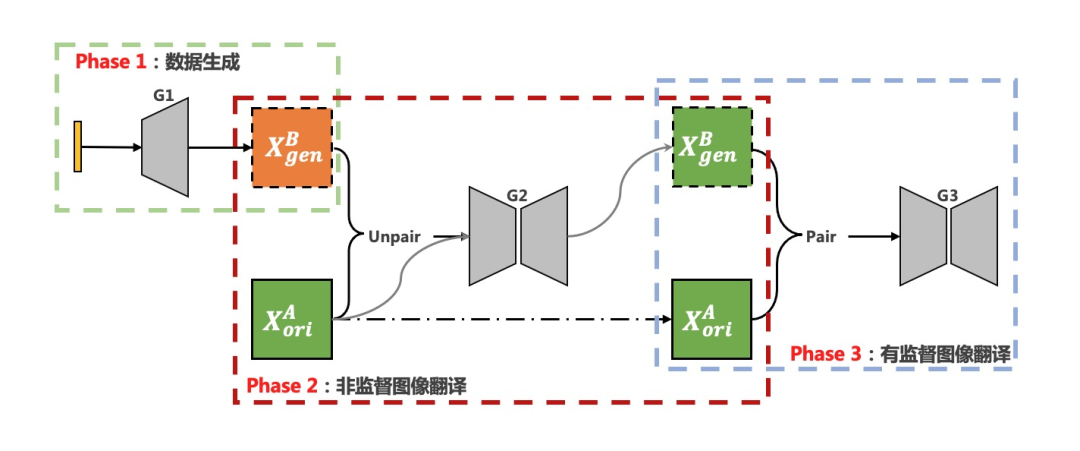

Our overall algorithm scheme has three stages:

Stage 1: StyleGAN-based stylized data generation;

Stage 2: unsupervised image translation to generate paired images;

Stage 3: training a supervised image translation model for mobile devices on the paired images.

Overall algorithm scheme for face stylization editing

Of course, a two-stage scheme is also possible: use StyleGAN to produce image pairs, then train the supervised small model on them directly. Adding the unsupervised image translation stage, however, decouples the two tasks of stylized data production and paired data production. By improving the algorithms within each stage and the data passed between stages, and combining this with supervised training of a small mobile model, we ultimately address low-cost stylized model production, style editing and selection, the trade-off between ID preservation and stylization, and lightweight deployment.

StyleGAN-based data generation

We use StyleGAN for data generation mainly to solve three problems:

Improving the richness and degree of stylization of the generated data: for example, generated CG faces should look more like CG, and should cover a richer range of angles, expressions, hairstyles and so on;

Improving data generation efficiency: a higher yield of usable generated data and a more controllable distribution;

Style editing and selection: for example, changing the eye size of CG faces.

We discuss these three aspects in turn.

▐ Richness and stylization

The first important issue in transfer learning based on StyleGAN2-ADA is the trade-off between the richness of the model and its degree of stylization. When transfer learning is performed on the stylized training set, the richness of the transferred model in facial expressions, face angles and facial elements is limited by the richness of the training data. At the same time, as the number of transfer-training iterations grows and the degree of stylization/FID improves, the richness of the model drops further. This makes the distribution of the stylized dataset generated later too monotonous, which is bad for U-GAT-IT training.


To enhance the richness of the model, we made the following improvements:

Adjusting and optimizing the data distribution of the training dataset;

Model fusion: because the source model is trained on a large amount of data, its generation space is very rich. If the weights of the low-resolution layers of the transferred model are replaced with those of the corresponding layers of the source model to obtain a fused model, the distribution of large-scale elements/features in the new model's generated images stays consistent with the source model, so the fused model inherits the source model's richness at the low-resolution feature level;

Fusion method: layer swapping, which directly exchanges the parameters of different layers, tends to produce inharmonious images and bad cases in the details, whereas smooth model interpolation gives better results (the illustrations that follow were generated by a model fused via interpolation);

Constraining, optimizing and adjusting the learning rates and features of different layers;

Iterative optimization: manually screen newly generated data and add it to the original stylized dataset to increase richness, then repeat training until the model reaches a satisfactory richness and degree of stylization.
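The difference between hard layer swapping and smooth interpolation can be sketched in a few lines. This is only an illustration under stated assumptions: each model is reduced to a toy dictionary mapping a layer's resolution to a flat list of weights, and the sigmoid blending schedule (`center`, `width`) is a hypothetical choice, not the schedule used by the authors.

```python
import math
from typing import Dict, List

# Toy stand-in for a generator: resolution -> flattened layer weights (assumption).
Weights = Dict[int, List[float]]


def swap_layers(source: Weights, transferred: Weights, swap_below: int) -> Weights:
    """Hard swap: source weights below `swap_below`, transferred weights otherwise."""
    return {
        res: list(source[res]) if res < swap_below else list(transferred[res])
        for res in transferred
    }


def blend_factor(res: int, center: float, width: float) -> float:
    """Share of the transferred model in [0, 1], rising smoothly with log2(resolution)."""
    x = (math.log2(res) - center) / width
    return 1.0 / (1.0 + math.exp(-x))


def interpolate_layers(
    source: Weights, transferred: Weights, center: float, width: float
) -> Weights:
    """Smooth fusion: per-layer linear interpolation between the two models."""
    fused: Weights = {}
    for res in transferred:
        a = blend_factor(res, center, width)
        fused[res] = [
            (1.0 - a) * s + a * t for s, t in zip(source[res], transferred[res])
        ]
    return fused
```

With `center=5.0` (that is, log2 of 32) the 32×32 layer is an even mix of both models, lower resolutions lean towards the source model and higher resolutions towards the transferred one, so there is no abrupt boundary of the kind a hard swap introduces.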

Original image, transferred model, fused model

▐ Data generation efficiency

Given a rich StyleGAN2 model, how do we generate a style dataset with a rich distribution? There are two approaches:

Randomly sample latent variables to generate a random style dataset;

Use StyleGAN inversion: feed in face data that follows a given distribution to produce the corresponding style dataset.

Approach 1 provides richer stylized data (especially richer backgrounds), while approach 2 improves the yield of usable generated data and offers some control over the distribution, which improves the production efficiency of stylized data.

Original image; images obtained by feeding the latent vector from StyleGAN inversion into the "advanced face style" and "animation style" StyleGAN2 generators

▐ Style editing and selection

Is the original style unusable because it does not look good enough?

Is the style of a model fixed once transfer training is done?

No, no, no. Every model can do more than generate data: it can also be consolidated into a basic component, a basic capability. Not only can the original style be fine-tuned and optimized, it is even possible to create an entirely new style:

Model fusion: by fusing multiple models, setting different fusion parameters or numbers of layers, and using different fusion methods, a poor style model can be improved and the style itself can be adjusted;

Model nesting ("nesting dolls"): chain models of different styles so that the final output style carries some style features of the intermediate models, such as facial features and color tone.

Fine-grained adjustment of a comic style (pupil color, lips, skin tone, etc.) achieved during fusion

Through style creation and fine-tuning, models of different styles can be obtained, which in turn enables the production of face data in different styles.

With StyleGAN-based transfer learning, style editing and optimization, and data generation, we obtain our first pot of gold: a stylized dataset with high richness, 1024×1024 resolution, and a selected style.

Paired data production based on unsupervised image translation

Unsupervised image translation learns the mapping between two domains and can convert images from one domain to the other, which makes it possible to produce image pairs. For example, the well-known CycleGAN has the following structure:

Main framework of CycleGAN
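For reference, the cycle-consistency term at the heart of CycleGAN can be written out. The symbols are assumptions introduced here for illustration: $X$ and $Y$ denote the two image domains, $G: X \to Y$ and $F: Y \to X$ the two generators.

```latex
\mathcal{L}_{\mathrm{cyc}}(G, F)
  = \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\big[\lVert F(G(x)) - x \rVert_1\big]
  + \mathbb{E}_{y \sim p_{\mathrm{data}}(y)}\big[\lVert G(F(y)) - y \rVert_1\big]
```

The term is small only when $F$ can reconstruct $x$ from $G(x)$ and $G$ can reconstruct $y$ from $F(y)$. If information present in one domain has no counterpart in the other, it cannot be carried through the round trip, which is why the framework works best when the two domains are close to a one-to-one correspondence.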

When discussing model richness above, we said:

This (low richness) makes the distribution of the stylized dataset generated later too monotonous, which is bad for U-GAT-IT training.

Why is that? The CycleGAN framework requires the data of the two domains to be roughly in a bijective relationship; otherwise, semantics are easily lost when an image is translated from one domain to the other. Images generated by StyleGAN2 inversion have a problem here: most of the background information is lost and replaced by a simple, blurry background (of course, some recent papers have greatly alleviated this problem, for example High-Fidelity GAN Inversion from Tencent AI Lab). If the generated stylized dataset and a real face dataset are used to train U-GAT-IT directly, the corresponding translated images tend to lose a lot of semantic information in the background, which makes it difficult to form effective image pairs.

We therefore propose two improvements to U-GAT-IT that keep the background fixed: Region-based U-GAT-IT, which adds a background constraint, and Mask U-GAT-IT, which adds a mask branch. The two approaches differ in how strongly they favor ID preservation versus stylization; combined with hyperparameter tuning, they give us room to control that balance. We also further improve generation quality through an improved network structure, model EMA, edge enhancement and other techniques.
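The article does not give the exact loss or network definitions, so the following is only a sketch of what a background constraint and a mask-based blend could look like. It assumes grayscale images stored as nested lists and a face mask with value 1 inside the face region and 0 in the background; `background_l1` and `composite` are hypothetical helper names.

```python
from typing import List

# Toy grayscale image, H x W nested lists (assumption; real code would use tensors).
Image = List[List[float]]


def background_l1(src: Image, out: Image, face_mask: Image) -> float:
    """Mean absolute difference between source and output over background pixels."""
    total, weight = 0.0, 0.0
    for src_row, out_row, mask_row in zip(src, out, face_mask):
        for s, o, m in zip(src_row, out_row, mask_row):
            bg = 1.0 - m  # 1 in the background, 0 inside the face region
            total += bg * abs(o - s)
            weight += bg
    return total / weight if weight > 0 else 0.0


def composite(src: Image, stylized: Image, face_mask: Image) -> Image:
    """Mask-style blend: stylized pixels inside the mask, source pixels outside."""
    return [
        [m * t + (1.0 - m) * s for s, t, m in zip(src_row, sty_row, mask_row)]
        for src_row, sty_row, mask_row in zip(src, stylized, face_mask)
    ]
```

Used as an extra penalty during training, a term like `background_l1` pushes the generator to leave the background alone; a blend like `composite` enforces the same property by construction. How strongly either is applied is one plausible knob for trading ID preservation against stylization.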

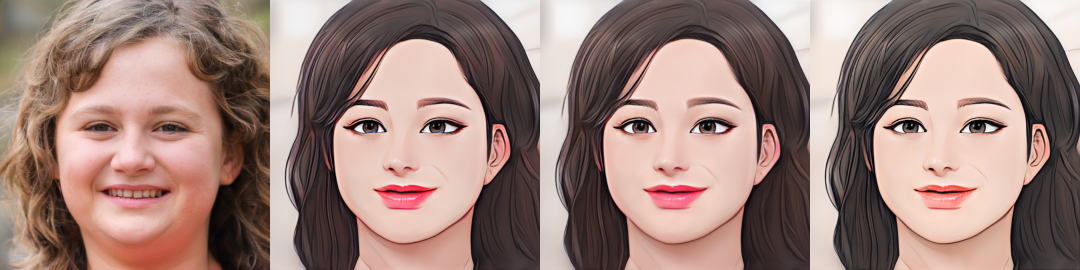

Left: the original image. Middle and right: results of unsupervised image translation, which differ in how the algorithm balances ID preservation and stylization.

Finally, we run inference with the trained generator on the real portrait dataset to obtain the corresponding paired stylized dataset.

Supervised image translation

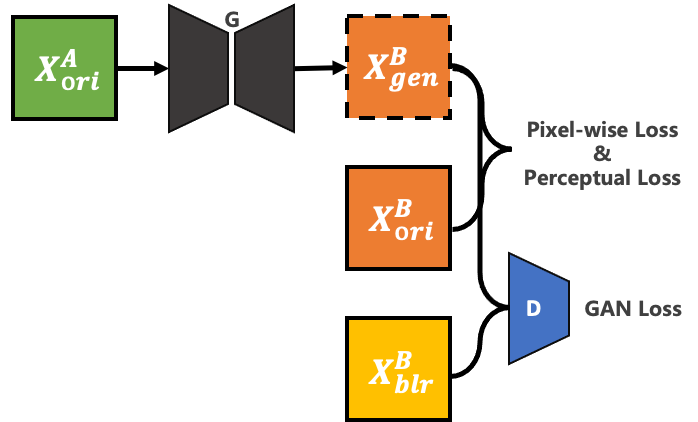

Based on a study of the computational efficiency of different operators and modules on mobile devices with MNN, we designed the structure of the mobile model and tiered the models by compute cost. Combining ideas from CartoonGAN, AnimeGAN, pix2pix and similar methods, we finally obtained a lightweight, high-definition, highly stylized mobile model:

| Model | Clarity ↑ | FID ↓ |
| --- | --- | --- |
| Pixel-wise loss | 3.44 | 32.53 |
| + Perceptual loss + GAN loss | 6.03 | 8.36 |
| + Edge-promoting | 6.24 | 8.09 |
| + Data augmentation | 6.57 | 8.26 |

* Clarity is measured as the sum of the Laplacian gradient values.
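A clarity score of this kind can be sketched as follows. The article only says that Laplacian gradient values are summed, so the details here are assumptions: a 4-neighbour Laplacian kernel, absolute values, interior pixels only, and no normalization. The scale of the numbers in the table therefore cannot be reproduced from this sketch.

```python
from typing import List

# Toy grayscale image, H x W nested lists (assumption).
Image = List[List[float]]


def laplacian_sharpness(img: Image) -> float:
    """Sum of absolute 4-neighbour Laplacian responses over interior pixels."""
    height, width = len(img), len(img[0])
    total = 0.0
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            lap = (
                img[y - 1][x] + img[y + 1][x]
                + img[y][x - 1] + img[y][x + 1]
                - 4.0 * img[y][x]
            )
            total += abs(lap)
    return total
```

A flat image scores zero, and blurring an image lowers its score, which matches the "higher is better" arrow in the table.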

Overall training framework of the supervised image translation model

This achieves a real-time face-changing effect on mobile devices.

Outlook

Dataset optimization: image data from different angles, and quality optimization;

Optimizing, improving and redesigning the overall pipeline;

Better data generation: StyleGAN3, inversion algorithms, model fusion, style editing/creation, few-shot;

Unsupervised two-domain translation: use well-matched generated data for semi-supervision; optimize the generator structure (for example, by introducing Fourier convolution);

Supervised two-domain translation: vid2vid, improved inter-frame stability, optimization for extreme scenes, stability of details;

Full-image stylization / digital creation: Disco Diffusion, DALL·E 2, style transfer.

References

Karras, Tero, Miika Aittala, Janne Hellsten, Samuli Laine, Jaakko Lehtinen, and Timo Aila. "Training generative adversarial networks with limited data." arXiv preprint arXiv:2006.06676 (2020).

Kim, Junho, Minjae Kim, Hyeonwoo Kang, and Kwanghee Lee. "U-gat-it: Unsupervised generative attentional networks with adaptive layer-instance normalization for image-to-image translation." arXiv preprint arXiv:1907.10830 (2019).

Pinkney, Justin NM, and Doron Adler. "Resolution Dependent GAN Interpolation for Controllable Image Synthesis Between Domains." arXiv preprint arXiv:2010.05334 (2020).

Tov, Omer, Yuval Alaluf, Yotam Nitzan, Or Patashnik, and Daniel Cohen-Or. "Designing an encoder for stylegan image manipulation." ACM Transactions on Graphics (TOG) 40, no. 4 (2021): 1-14.

Song, Guoxian, Linjie Luo, Jing Liu, Wan-Chun Ma, Chunpong Lai, Chuanxia Zheng, and Tat-Jen Cham. "AgileGAN: stylizing portraits by inversion-consistent transfer learning." ACM Transactions on Graphics (TOG) 40, no. 4 (2021): 1-13.

zllrunning. face-parsing.PyTorch. https://github.com/zllrunning/face-parsing.PyTorch, 2019.

Roy, Abhijit Guha, Nassir Navab, and Christian Wachinger. "Recalibrating fully convolutional networks with spatial and channel “squeeze and excitation” blocks." IEEE transactions on medical imaging 38, no. 2 (2018): 540-549.

Chen, Yang, Yu-Kun Lai, and Yong-Jin Liu. "Cartoongan: Generative adversarial networks for photo cartoonization." In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 9465-9474. 2018.

Zhang, Lingzhi, Tarmily Wen, and Jianbo Shi. "Deep image blending." In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pp. 231-240. 2020.

Wang, Xintao, Ke Yu, Shixiang Wu, Jinjin Gu, Yihao Liu, Chao Dong, Yu Qiao, and Chen Change Loy. "Esrgan: Enhanced super-resolution generative adversarial networks." In Proceedings of the European conference on computer vision (ECCV) workshops. 2018.

Wang, Xintao, Liangbin Xie, Chao Dong, and Ying Shan. "Real-esrgan: Training real-world blind super-resolution with pure synthetic data." In Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 1905-1914. 2021.

Siddique, Nahian, Sidike Paheding, Colin P. Elkin, and Vijay Devabhaktuni. "U-net and its variants for medical image segmentation: A review of theory and applications." IEEE Access (2021).

Wang, Tengfei, et al. "High-fidelity gan inversion for image attribute editing." arXiv preprint arXiv:2109.06590 (2021).

Team introduction

We are the Multimedia Production & Video Content Understanding algorithm team of Taobao Technology. Building on the billions of videos and images on Taobao and Tmall, we are committed to providing full-pipeline visual algorithm solutions, from multimedia production that highlights products to front-end video understanding and recommendation. We continue to explore and drive product and business innovation in cloud-integrated image/video processing, cross-modal video content understanding, AR live streaming, 3D digitization, and intelligent content production, review, retrieval and high-level semantic understanding. While supporting Tmall and Taobao content businesses such as Taobao Live, Guangguang and Diantao, we also provide visual algorithm capabilities to other Alibaba Group content businesses such as DingTalk, Xianyu and Youku through our self-developed content middle platform. We continue to recruit and welcome talent in machine learning, visual algorithms, NLP algorithms, on-device intelligence and related fields. Welcome to contact [email protected]


author | Zheng Yuwei ( Guan Liang )

edit | Orange King
