
Combat Tactics for Ceph Object Storage

2022-06-12 09:34:00 【YoungerChina】

1. Know your enemy and know yourself, and you will never be in danger in a hundred battles

Dissecting the business I/O model

First, understand the basic storage model of the business:

Maximum concurrency and maximum read/write bandwidth requirements.

Once you know the maximum concurrency a single RGW instance can handle, the total concurrency you must support determines how many RGW instances you need.

The maximum read/write bandwidth determines how many OSDs you need to sustain it. Also check whether the bandwidth at the endpoint entrance can meet this demand.
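The arithmetic in the two paragraphs above can be written out as a small planning helper. This is a sketch under stated assumptions: `plan_capacity` is a hypothetical name, the per-RGW concurrency and per-OSD bandwidth figures are placeholders you must benchmark yourself, and the replication factor (which multiplies write traffic on the OSDs of a replicated pool) is an addition the original text does not discuss.

```python
import math

def plan_capacity(peak_concurrency, per_rgw_concurrency,
                  peak_read_mb_s, peak_write_mb_s, replicas,
                  per_osd_mb_s, endpoint_mb_s):
    """Rough RGW/OSD counts; every per-instance figure must come from your own benchmarks."""
    rgw = math.ceil(peak_concurrency / per_rgw_concurrency)
    # Reads are served by one (primary) OSD; in a replicated pool each written
    # byte is stored on `replicas` OSDs, so writes are multiplied on the backend.
    backend_mb_s = peak_read_mb_s + peak_write_mb_s * replicas
    osd = math.ceil(backend_mb_s / per_osd_mb_s)
    # The endpoint only carries client traffic, not replication traffic.
    endpoint_ok = endpoint_mb_s >= peak_read_mb_s + peak_write_mb_s
    return {"rgw": rgw, "osd": osd, "endpoint_ok": endpoint_ok}

print(plan_capacity(3000, 800, 1200, 400, 3, 50, 2000))
# {'rgw': 4, 'osd': 48, 'endpoint_ok': True}
```

With 3,000 concurrent requests and an assumed 800 per RGW, you need 4 RGW instances; 1,200 MB/s of reads plus 400 MB/s of writes at 3 replicas puts 2,400 MB/s on the OSDs, or 48 OSDs at an assumed 50 MB/s each.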

Whether the clients are on the internal network or the public Internet.

If clients are mainly on the Internet, their reads and writes travel through an environment as complex as the public network and are affected by factors you cannot control. That is why public-cloud object storage providers today do not dare to promise you specific bandwidth, latency, or concurrency figures.

If clients are on the intranet, minimize the number of routing hops between the endpoint entrance and the clients to guarantee bandwidth.

Read/write ratio and average request size.

If reads dominate, as in a CDN scenario, consider adding a read-cache component at the endpoint entrance.

If writes dominate, as with data backup, consider rate-limiting the ingress bandwidth so that multiple services do not compete for write bandwidth during peak periods and degrade the overall quality of service.

The average request size determines whether the object storage service as a whole should be optimized for large files or for small files.

Veteran's tip: often we don't know the details of a business's I/O model. In that case, onboard the service at a small scale first and ship the access logs of the front-end endpoint (for example, nginx, if the front end uses it) into a log analysis system such as ELK, which makes it easy to analyze the business's I/O model.
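To illustrate what such an analysis extracts, here is a sketch that computes the read/write ratio and average request size straight from nginx access logs, for example before ELK is in place. It assumes a hypothetical log format: nginx's standard `combined` format with `$request_length` appended, since `combined` alone records only response sizes, not upload sizes. GET counts as a read and PUT/POST as a write; other methods are ignored.

```python
import re
from collections import Counter

# Assumed format: combined + $request_length, e.g.
# log_format s3 '$remote_addr - $remote_user [$time_local] "$request" '
#               '$status $body_bytes_sent "$http_referer" "$http_user_agent" $request_length';
LINE_RE = re.compile(
    r'^(?P<addr>\S+) \S+ \S+ \[[^\]]+\] "(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<sent>\d+|-) "[^"]*" "[^"]*" (?P<req_len>\d+)$'
)
READ_METHODS = {"GET"}
WRITE_METHODS = {"PUT", "POST"}

def summarize(lines):
    """Return read/write counts, read ratio and average object sizes from log lines."""
    counts = Counter()
    read_bytes = write_bytes = 0
    for line in lines:
        m = LINE_RE.match(line.strip())
        if not m:
            continue  # skip lines that do not match the assumed format
        if m["method"] in READ_METHODS:
            counts["read"] += 1
            read_bytes += 0 if m["sent"] == "-" else int(m["sent"])  # bytes sent to client
        elif m["method"] in WRITE_METHODS:
            counts["write"] += 1
            write_bytes += int(m["req_len"])  # bytes received, including headers
    reads, writes = counts["read"], counts["write"]
    return {
        "reads": reads,
        "writes": writes,
        "read_ratio": reads / (reads + writes) if reads + writes else 0.0,
        "avg_read_bytes": read_bytes / reads if reads else 0.0,
        "avg_write_bytes": write_bytes / writes if writes else 0.0,
    }
```

In a Logstash pipeline, the same fields would be extracted with a GROK pattern instead.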

2. Before the troops move, the provisions go first

Every business plan must ultimately be built on a hardware platform. While the business is small in the early stage, you may not pay much attention to hardware selection, but as it grows, choosing a reliable and cost-effective hardware platform becomes particularly important.
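The per-node rules of thumb in the subsections below can be turned into a quick pre-purchase check. This is a hypothetical helper, not part of any Ceph tool. The thresholds (3 to 5 MONs, at least 1 CPU core and 2 GB of memory per OSD, at least 40 MB/s of network bandwidth per OSD) are the author's guidelines. The backfill estimate assumes you supply an aggregate recovery rate measured on your own cluster.

```python
MB_PER_GBIT = 125  # 1 Gbit/s = 1000 Mbit/s / 8 = 125 MB/s (decimal units)

def check_mon_count(mon_count):
    """The author's guideline: at least 3 MONs, at most 5."""
    return 3 <= mon_count <= 5

def check_osd_node(osd_count, cpu_cores, mem_gb, nic_gbit):
    """Return warnings for an OSD node that misses the per-OSD rules of thumb."""
    warnings = []
    if cpu_cores < osd_count:  # at least 1 core per OSD
        warnings.append(f"CPU: {cpu_cores} cores < {osd_count} needed")
    if mem_gb < 2 * osd_count:  # at least 2 GB per OSD
        warnings.append(f"memory: {mem_gb} GB < {2 * osd_count} GB needed")
    nic_mb_s = nic_gbit * MB_PER_GBIT
    if nic_mb_s < 40 * osd_count:  # at least 40 MB/s of network per OSD
        warnings.append(f"network: {nic_mb_s:.0f} MB/s < {40 * osd_count} MB/s needed")
    return warnings

def backfill_hours(disk_tb, used_fraction, recovery_mb_s):
    """Rough time to re-replicate a failed disk's data at a given aggregate recovery rate."""
    data_bytes = disk_tb * 1e12 * used_fraction
    return data_bytes / (recovery_mb_s * 1e6) / 3600
```

For example, 12 OSDs behind a 2 x 1 GbE bond get about 250 MB/s, well short of the 480 MB/s that a 40 MB/s-per-OSD floor calls for. An 8 TB disk that was 80% full holds 6.4 TB, which at an assumed 100 MB/s of aggregate recovery takes roughly 17.8 hours to backfill.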

Hardware experience at small scale

Small scale generally means a cluster of fewer than 20 machines or no more than 200 OSDs. At this stage the goal is to save money, so MON nodes can run on virtual machines, provided two conditions are met:

* Run at least 3 MONs and at most 5 (more is a waste of money), each with at least 2 CPU cores and 4 GB of memory.

* MONs must be spread across different physical machines and kept in sync with NTP.

As for OSD nodes, the following conditions should be met:

* Don't run OSDs on virtual machines. Replacing disks and troubleshooting become troublesome, and because the VM adds another layer to the I/O stack, data safety suffers badly.

* Take network bandwidth limits into account. If you only have Gigabit Ethernet, bonding is the only option; try to give each OSD 40 to 60 MB/s of network bandwidth. From experience, if the network can reach 10 Gigabit, don't bother with bonded Gigabit links: a later network upgrade requires stopping OSDs, which is another big pitfall.

* No matter how tight the budget, give each OSD at least 1 CPU core and 2 GB of memory; otherwise, when something goes wrong, memory will not be able to cope.

* If you don't care about performance, you can do without SSD journals.

* Put the index pool on SSDs if at all possible; if you really can't, so be it.

RGW nodes are much simpler:

* RGW can run on virtual machines or even in Docker, because running more instances improves the overall availability and concurrency of the service.

* Give each RGW at least 2 CPU cores and 4 GB of memory, because every request is held in memory until it has been written to RADOS.

* Two RGW service nodes are about enough; put nginx in front of them as a reverse proxy to improve concurrency.

* Without tuning, civetweb's concurrency is much worse than fastcgi's.

Hardware experience at medium scale

Medium scale generally means a cluster of 20 to 40 machines or no more than 400 OSDs. At this stage the business has basically taken shape, and MON nodes are no longer well suited to virtual machines. Also pay attention to the following:

* Run at least 3 MONs and at most 5, each with at least 4 CPU cores and 8 GB of memory, and if possible store the MON metadata on SSDs. Once the cluster reaches a certain size, the MONs' LevelDB becomes a performance bottleneck, especially during compaction.

* MONs must be spread across different physical machines, and across multiple racks if possible, but avoid spanning multiple IP subnets: network fluctuations between subnets can easily trigger frequent MON elections (although the election parameters can be tuned).

As for OSD nodes, the following conditions should be met:

* Design crushmap failure-domain isolation properly and use 3 replicas. Never save money with 2 replicas: later, when a batch of disks reaches the end of its life, this becomes a major hidden risk.

* Don't put too many OSD disks in each physical node, and don't make individual disks too large. If you use 8 TB SATA disks and a disk that is more than 80% full fails, the data backfill will be a long wait. You can limit backfill concurrency, of course, but that also affects the business, so make your own trade-offs.

* Every OSD should ideally be backed by an SSD journal; at this scale, saving money on SSDs no longer makes sense.

* The index pool must go on SSDs; the performance gain is a qualitative leap.

RGW nodes are much simpler:

* RGW can still run on virtual machines or even in Docker.

* Put a load-balancing solution at the front-end entrance, such as LVS or an nginx reverse proxy; you need both high availability and load balancing.

* Set rgw_override_bucket_index_max_shards according to the number of SSDs and the failure-domain design, to tune bucket index performance.

* You can consider deploying one RGW service on every OSD node, but make sure those nodes have enough CPU and memory.

Hardware experience at medium-to-large scale

Medium-to-large scale generally means a cluster of fewer than 50 machines or no more than 500 OSDs. At this stage the business has reached a considerable size, and MON nodes must not run on virtual machines. Also pay attention to the following:

* Run at least 3 MONs and at most 5, on SSDs.

* MONs must be spread across different physical machines and must be deployed across multiple racks, but avoid spanning IP subnets; a 10 Gigabit network is best.

* 8 CPU cores and 16 GB of memory per MON is basically enough.

As for OSD nodes, the following conditions should be met:

* Design crushmap failure-domain isolation in advance; 3 replicas are recommended. Whether erasure coding (EC) is an option depends on whether you can handle EC data recovery when a batch of servers loses power.

* Configure OSD disks uniformly. Don't mix 4 TB and 8 TB disks, because weight management becomes cumbersome. Also tune the PG distribution across OSDs to avoid uneven performance pressure and capacity usage; PG distribution tuning will be covered later.

* SSD journals and an SSD index pool are a must.

RGW nodes are much simpler:

* For RGW, stop cutting corners and do it properly: at this scale, the money you save today will be paid back one day as tuition, in tears.

* The front-end load-balancing layer can be optimized further, for example by increasing front-end bandwidth and concurrency, adding a read cache, or even adopting DPDK.

* Using civetweb instead of fastcgi greatly simplifies deployment; combined with Docker, you can scale concurrency up quickly and elastically.

* RGW can be deployed centrally on a few dedicated physical nodes, or co-located with OSDs.

3. Prepare in advance and you succeed; fail to prepare and you fail

Hard disks have a price, but data is priceless, and a storage system that handles data deserves a little more awe. Be careful with high-risk operations. Don't get into the habit of casually restarting services and deleting data in the test environment; once you go live, that habit may cause an incident you will never forget. Also, do thorough testing before launch; waiting until something breaks in production and then cramming may leave you beyond help. The following tests are a must.

Failure drills and recovery: while Cosbench generates read/write load, simulate pulled disks, network outages, rack power failures and so on, to test your crushmap failure-domain design and the basic skills of your operations staff. If you can't pass this test, once the system is live your operations staff can only pray.
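During a drill, the figure that matters is how long the cluster takes to return to `HEALTH_OK` after each injected fault. The sketch below polls `ceph health`, whose output begins with `HEALTH_OK`, `HEALTH_WARN` or `HEALTH_ERR`, and returns the elapsed time. The function names are hypothetical, and the sleep and clock functions are injectable so the logic can be tested without a cluster.

```python
import subprocess
import time

def ceph_health():
    """Run `ceph health` on an admin node and return its text output."""
    return subprocess.run(["ceph", "health"], capture_output=True, text=True, check=True).stdout

def parse_health(output):
    """Return the leading status token, e.g. HEALTH_OK, HEALTH_WARN or HEALTH_ERR."""
    tokens = output.split()
    return tokens[0] if tokens else "UNKNOWN"

def wait_for_health_ok(get_health=ceph_health, timeout_s=3600, interval_s=10,
                       sleep=time.sleep, clock=time.monotonic):
    """Poll until HEALTH_OK and return the seconds it took; raise TimeoutError otherwise."""
    start = clock()
    while True:
        status = parse_health(get_health())
        elapsed = clock() - start
        if status == "HEALTH_OK":
            return elapsed
        if elapsed >= timeout_s:
            raise TimeoutError(f"cluster still {status} after {elapsed:.0f}s")
        sleep(interval_s)
```

In a drill you might call `wait_for_health_ok()` right after pulling a disk or cutting power to a rack, and record the returned time for each scenario.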

Performance stress tests must use dedicated client machines. Avoid running clients on the servers, and isolate all network traffic generated by the tests so that it does not affect the production environment.

NTP is important enough to deserve a mention of its own. Be sure to check the clocks of all nodes before going live; raising mon_clock_drift_allowed to put the problem off only fools yourself. Finally, after any hardware replacement or maintenance (for example, replacing a motherboard, memory, a CPU or a RAID card after a shutdown), be sure to verify the clock before resuming service, or you will be in for a world of trouble.
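The pre-launch clock check can be scripted. This sketch assumes chrony is the NTP client and parses the `System time` line of `chronyc tracking` output (for example `System time : 0.000012 seconds fast of NTP time`). The function names are hypothetical. Each node is compared with the median offset against 0.05 s, the default value of `mon_clock_drift_allowed`, instead of raising that option.

```python
import re
import statistics

DRIFT_ALLOWED_S = 0.05  # default value of mon_clock_drift_allowed

SYSTEM_TIME_RE = re.compile(r"System time\s*:\s*([\d.]+) seconds (fast|slow) of NTP time")

def parse_chrony_offset(tracking_output):
    """Return the local clock offset in seconds (positive = fast) from `chronyc tracking` text."""
    m = SYSTEM_TIME_RE.search(tracking_output)
    if m is None:
        raise ValueError("no 'System time' line in chronyc output")
    offset = float(m.group(1))
    return offset if m.group(2) == "fast" else -offset

def skewed_nodes(offsets, allowed_s=DRIFT_ALLOWED_S):
    """Return the nodes whose offset differs from the median offset by more than allowed_s."""
    median = statistics.median(offsets.values())
    return sorted(node for node, off in offsets.items() if abs(off - median) > allowed_s)
```

Collect each node's `chronyc tracking` output (over SSH or with Ansible), parse it, and refuse to start services while `skewed_nodes` returns anything.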

Functional coverage testing depends on the strength of your QA team. Frankly, neither the official test cases nor Cosbench is likely to meet your expectations, so write your own test cases; if you can, cover the SDK of every language you support, because any of them may hit a pitfall one day. Before going live, compile an API compatibility list so that you don't end up verifying interface availability after the fact.
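An API compatibility list can be produced by running one small check per API and per SDK and tabulating the results. In this sketch each check is any callable that raises on failure, for example a wrapper around a boto3 call such as `put_object`. The function names and the tab-separated output are illustrative choices, not an existing tool.

```python
def build_compat_matrix(sdk_checks):
    """sdk_checks: {sdk_name: {api_name: callable}}; each callable raises on failure."""
    matrix = {}
    for sdk, checks in sdk_checks.items():
        for api, check in checks.items():
            try:
                check()
                result = "OK"
            except Exception as exc:  # record any failure instead of aborting the run
                result = f"FAIL: {type(exc).__name__}"
            matrix.setdefault(api, {})[sdk] = result
    return matrix

def render(matrix):
    """Render the matrix as tab-separated text: one row per API, one column per SDK."""
    sdks = sorted({sdk for row in matrix.values() for sdk in row})
    lines = ["API\t" + "\t".join(sdks)]
    for api in sorted(matrix):
        lines.append(api + "\t" + "\t".join(matrix[api].get(sdk, "-") for sdk in sdks))
    return "\n".join(lines)
```

A `-` in the output marks an API that was never tested with that SDK, which is itself worth listing before launch.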

4. Plan strategy inside the tent, win battles a thousand miles away

Once the system is live, making operations easy is an art in itself. Smart operations depend seventy percent on tools and thirty percent on experience. With so many operations tools available, reinventing the wheel with home-grown scripts is clearly unwise; scripts alone cannot keep up with large scale, and as staff change over, the cost of script-based management keeps rising. I therefore recommend an operations framework suited to small and medium-sized operations teams, outlined below.

Deployment tools

I recommend Ansible. Why not Puppet, and why not SaltStack? Honestly, Puppet's Ruby syntax is unfriendly to operations engineers, and Puppet is too heavy: a client agent has to be deployed and maintained separately. Although it is widely used in production, using it to operate Ceph feels like using a sledgehammer to crack a nut. As for SaltStack, although most operations engineers are familiar with Python, Ceph's Calamari team and SaltStack fell out over version compatibility, neither side came out well, and the Calamari project ended up as an unfinished building, so I am reserved about SaltStack. Ansible, like Ceph, was acquired by Red Hat, and Ansible is also an official Ceph deployment tool. It is agentless over SSH, similar to ceph-deploy, but it leans more toward engineering practice: ceph-deploy is fine for small-scale use, but for standardized operations Ansible is the more reliable choice.

Log collection and management

First, ELK. I won't introduce its basic features; it is the go-to open-source log management solution. If I had to complain about one shortcoming, it is that learning GROK regular expressions is a bit painful, but you get used to it. Once the MON/OSD/RGW/MDS logs are shipped to ELK, the next step depends on the Ceph operations experience you accumulate: get familiar with Ceph's logs, keep refining the conditions that trigger anomaly detection and alerts, and integrate the logs of common hardware faults such as disks and RAID cards. Then you can quickly diagnose OSD disk failures from the logs, instead of being left clueless when an OSD goes down, and simply ask the data center to replace the disk for you.
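The kind of alert rule described above, normally written as a GROK pattern in Logstash, can be illustrated in Python. The patterns below are examples only: the kernel's `I/O error, dev sdX` and SCSI `Medium Error` messages, and the `(5) Input/output error` (EIO) text that can appear in Ceph OSD logs. Check them against what your kernel and Ceph release actually log before relying on them.

```python
import re

# Example rules (name, pattern); adapt them to the exact messages in your logs.
RULES = [
    ("disk_io_error", re.compile(r"I/O error, dev (?P<dev>sd[a-z]+)")),
    ("disk_medium_error", re.compile(r"\[(?P<dev>sd[a-z]+)\].*Medium Error")),
    ("osd_eio", re.compile(r"\(5\) Input/output error")),
]

def classify(line):
    """Return the first matching alert with the device name (if captured), else None."""
    for name, pattern in RULES:
        m = pattern.search(line)
        if m:
            return {"alert": name, "dev": m.groupdict().get("dev")}
    return None
```

Each match would then raise an alert carrying the host and device, which is exactly the input the fault-handling step in the next section needs.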

Asynchronous task scheduling

Why do we need asynchronous task scheduling? Although ELK can diagnose the cause of a fault, someone still has to log in to the affected machine to deal with it. After introducing Celery, a distributed task-scheduling middleware, the operations staff can wrap the corresponding fault-handling operations into Ansible playbooks. For example, when ELK detects a disk failure, the operations playbook is invoked to mark the corresponding OSD out, unmount the disk, light the disk's fault LED with a tool such as MegaCli, and finally send an email saying that the machine with IP XX in rack XX of data center XX needs a hard disk replacement. All that remains is to restore the data after the data center replaces the disk.
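The disk-failure workflow above could be sketched as follows. In the author's setup these steps live in an Ansible playbook triggered by a Celery task; this plain-Python version only builds the command list and the notification email, so it can be reviewed in dry-run mode. Several details are assumptions: a systemd-managed OSD is stopped before unmounting (a step the original does not list), the OSD data directory is at the default `/var/lib/ceph/osd/ceph-<id>` path, the MegaCli binary is named `MegaCli64`, and the enclosure and slot of the failed disk are already known.

```python
import subprocess
from email.message import EmailMessage

def disk_failure_commands(osd_id, enclosure, slot, adapter=0):
    """Commands for one failed disk, in the order described in the text (plus a stop step)."""
    return [
        ["ceph", "osd", "out", str(osd_id)],               # mark the OSD out
        ["systemctl", "stop", f"ceph-osd@{osd_id}"],       # assumption: systemd-managed OSD
        ["umount", f"/var/lib/ceph/osd/ceph-{osd_id}"],    # assumption: default data path
        ["MegaCli64", "-PdLocate", "-start",               # light the disk's locate LED
         f"-physdrv[{enclosure}:{slot}]", f"-a{adapter}"],
    ]

def run_commands(commands, dry_run=True):
    """Print the commands in dry-run mode; otherwise run them and stop at the first failure."""
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)

def replacement_email(datacenter, rack, host_ip, osd_id, sender, recipient):
    """Build the notification asking the data center to swap the disk."""
    msg = EmailMessage()
    msg["Subject"] = f"Disk replacement needed: {host_ip} (osd.{osd_id})"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content(
        f"Data center {datacenter}, rack {rack}: the machine at {host_ip} "
        f"needs a hard disk replacement (osd.{osd_id}). The locate LED is on."
    )
    return msg
```

The message can be sent with the standard library's `smtplib.SMTP(host).send_message(msg)`.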

Message notifications

Messaging tools such as WeChat make internal communication much easier. Especially when you are off duty, being able to handle a fault by having a chat robot trigger an Ansible playbook gives you a real taste of what operations can be. Once the data center staff have replaced the disk, they send a completion message to the WeChat robot; the robot creates an OSD recovery task and sends an execution request to the operations staff, who only need to confirm it with the robot. From then on, the robot keeps you posted on recovery progress. Flirting and handling a fault at the same time is no longer a pipe dream.
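The confirmation flow above can be modeled as a small state machine that is independent of any chat SDK. Everything here is hypothetical: the message formats (`disk replaced osd.<id>`, `confirm <task>`) and the `RecoveryBot` class are illustrations. In a real deployment the returned strings would be sent through the chat robot (for example one built with wxpy), and a confirmed task would trigger the recovery playbook.

```python
import re
from dataclasses import dataclass, field

DONE_RE = re.compile(r"disk replaced\s+osd\.(\d+)", re.IGNORECASE)
CONFIRM_RE = re.compile(r"confirm (\d+)")

@dataclass
class RecoveryBot:
    pending: dict = field(default_factory=dict)  # task id -> OSD id awaiting confirmation
    next_id: int = 1

    def on_message(self, sender, text):
        """Return the bot's reply to a chat message, or None if it should stay silent."""
        m = DONE_RE.search(text)
        if m:  # data center reports the disk swap: create a task and ask ops to confirm
            task_id = self.next_id
            self.next_id += 1
            self.pending[task_id] = int(m.group(1))
            return (f"@ops recovery task #{task_id} for osd.{m.group(1)} created by {sender}; "
                    f"reply 'confirm {task_id}' to run it")
        m = CONFIRM_RE.fullmatch(text.strip())
        if m and int(m.group(1)) in self.pending:
            osd_id = self.pending.pop(int(m.group(1)))
            # A real bot would start the recovery playbook here and report progress later.
            return f"running recovery for osd.{osd_id}"
        return None
```

Keeping an explicit confirmation step means a mistyped or spoofed message can at most create a pending task, never start a recovery on its own.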

Finally, here are the recommended tools:

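* ceph-ansible: https://github.com/ceph/ceph-ansible

* Elastic Stack (ELK): https://www.elastic.co/cn/products

* Celery: http://docs.celeryproject.org/en/latest/index.html

* wxpy (WeChat robot library): http://wxpy.readthedocs.io/zh/latest/index.html

* Ansible documentation (Chinese translation): http://ansible-tran.readthedocs.io/en/latest/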

Author: Qin Yangmu


边栏推荐

- SAP Hana error message sys_ XSA authentication failed SQLSTATE - 28000

- 电脑启动快捷键一览表

- Latex common symbols summary

- C#入门系列(十二) -- 字符串

- 数据库常见面试题都给你准备好了

- 小程序的介绍

- Dragon Boat Festival Ankang - - les Yankees dans mon cœur de plus en plus de zongzi

- QQ, wechat chat depends on it (socket)?

- Mysql database ignores case

- Basic exercise decomposing prime factors

猜你喜欢

SAP Hana error message sys_ XSA authentication failed SQLSTATE - 28000

电脑启动快捷键一览表

榜样访谈——董宇航:在俱乐部中收获爱情

数据库常见面试题都给你准备好了

ADB命令集锦,一起来学吧

Distributed transaction solution 2: message queue to achieve final consistency

Principle analysis of mongodb storage engine wiredtiger

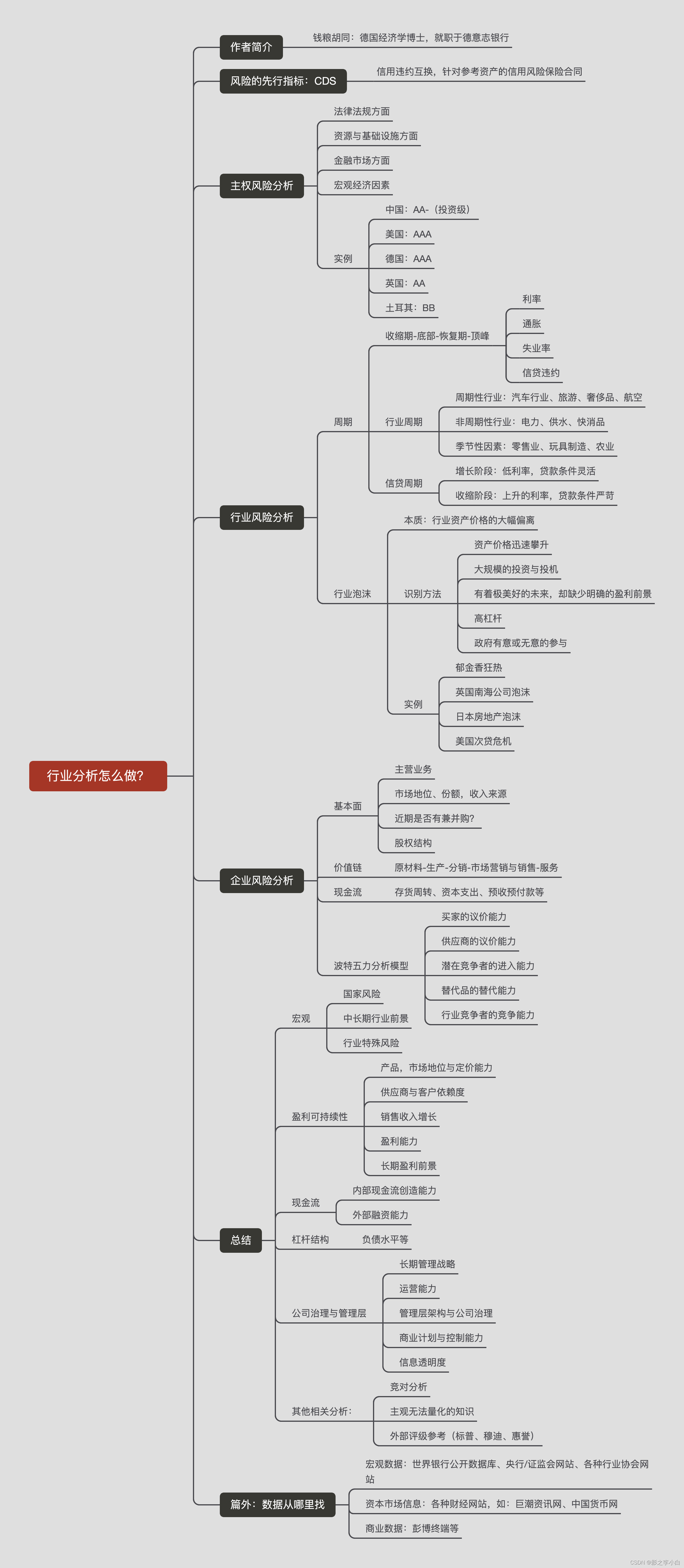

行业分析怎么做

![[cloud native] establishment of Eureka service registration](/img/da/0a700081be767db91edd5f3d49b5d0.png)

[cloud native] establishment of Eureka service registration

Black screen solution for computer boot

随机推荐

L1-019 who goes first

L1-002 print Hourglass (20 points)

NiO principle

Swagger documentation details

科创人·神州数码集团CIO沈旸:最佳实践模式正在失灵,开源加速分布式创新

小程序介绍

数据库常见面试题都给你准备好了

MySQL优化之慢日志查询

端午節安康--諸佬在我心裏越來越粽要了

软件测试面试官问这些问题的背后意义你知道吗?

DNA数字信息存储的研究进展

价值投资.

Thread deadlock and its solution

软件测试需求分析方法有哪些,一起来看看吧

电阻的作用有哪些?(超全)

测试用例如何编写?

网络层IP协议 ARP&ICMP&IGMP NAT

QQ, wechat chat depends on it (socket)?

Definition of polar angle and its code implementation

MySQL index