How much computing power does a Transformer have?

2022-07-04 05:38:00 【Oriental Golden wood】

https://jishuin.proginn.com/p/763bfbd4ca4f

I ran into this question in my recent research, and after checking I found that someone had indeed written about it.

The paper indirectly suggests that the attention sitting inside the residual branch may not be necessary.

So:

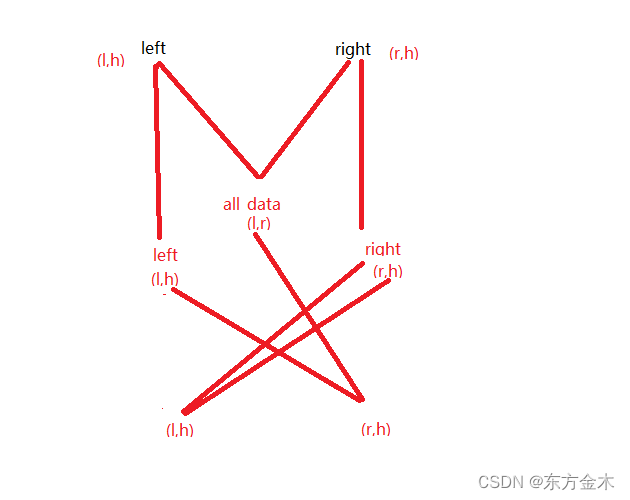

I built one transformer that uses a linear layer in place of this part (keeping the parts where the two sides decode each other).

I designed a second one that uses only the mutual decoding; everything else is a plain linear layer, and there is no residual connection after decoding.
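The two variants can be sketched roughly as follows. This is a minimal NumPy illustration under my own reading of the description: "the parts that decode each other" is assumed to mean single-head cross-attention between a target and a source sequence, and every name here (`linear`, `cross_attention`, `variant_a`, `variant_b`, `params`) and every shape is hypothetical. It is not the code from the linked blog.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 8  # model width (hypothetical)

def linear(x, w, b):
    """Position-wise linear layer: x @ w + b."""
    return x @ w + b

def cross_attention(q_in, kv_in, wq, wk, wv):
    """Single-head scaled dot-product cross-attention (assumed reading)."""
    q, k, v = q_in @ wq, kv_in @ wk, kv_in @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v

def init(shape):
    """Small random weights, for illustration only."""
    return rng.normal(scale=0.1, size=shape)

params = {
    "w_lin": init((d, d)), "b_lin": np.zeros(d),
    "wq": init((d, d)), "wk": init((d, d)), "wv": init((d, d)),
    "w_out": init((d, d)), "b_out": np.zeros(d),
}

def variant_a(tgt, src, p):
    """A linear layer replaces self-attention; residual connections are kept."""
    h = tgt + linear(tgt, p["w_lin"], p["b_lin"])
    h = h + cross_attention(h, src, p["wq"], p["wk"], p["wv"])
    return h + linear(h, p["w_out"], p["b_out"])

def variant_b(tgt, src, p):
    """Only cross-attention followed by a linear layer; no residuals."""
    h = cross_attention(tgt, src, p["wq"], p["wk"], p["wv"])
    return linear(h, p["w_out"], p["b_out"])

src = rng.normal(size=(5, d))  # 5 source tokens
tgt = rng.normal(size=(3, d))  # 3 target tokens
out_a = variant_a(tgt, src, params)
out_b = variant_b(tgt, src, params)
print(out_a.shape, out_b.shape)
```

Both variants map a target sequence of 3 tokens to an output of the same shape; the only structural differences are the extra linear layer and the residual additions in `variant_a`.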

The result: the second variant performs as well as or better than the first, although on the same task it is still not as efficient as an MLP-only model.

In other words, the residual connections have little effect.

The key component is still the MLP.

Two outputs also work better than a single output.

Softmax does not help either. Self-attention is essentially a relational dictionary, like the Xinhua Dictionary.
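One way to read the dictionary analogy: keys play the role of headwords, values are the entries, and a query retrieves the entry whose key it matches. The toy NumPy sketch below uses raw dot-product scores with no softmax. The one-hot keys, the values, and the `lookup` function are all made up for illustration; this only shows the lookup mechanism and does not demonstrate that softmax is unnecessary in a trained model.

```python
import numpy as np

# Three "headwords"; one-hot rows, so the keys are mutually orthogonal.
keys = np.eye(3)

# The "entries" stored under each headword.
values = np.array([[1.0, 0.0],
                   [0.0, 1.0],
                   [2.0, 2.0]])

def lookup(query, keys, values):
    """Attention without softmax: raw dot-product scores weight the values."""
    scores = query @ keys.T
    return scores @ values

# A query equal to the third key retrieves exactly the third entry.
exact = lookup(keys[2], keys, values)

# A query halfway between the first two keys retrieves the average of their entries.
blend = lookup(0.5 * keys[0] + 0.5 * keys[1], keys, values)

print(exact, blend)
```

With orthogonal keys the lookup is exact; with learned, non-orthogonal keys the scores would overlap and the result would be a weighted mix of several entries.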

You can refer to the following code (it is a little messy):

https://blog.csdn.net/weixin_32759777/category_11446474.html

Mask one side at inference time; that way the model can be used for translation.
