Andrew Ng Machine Learning Course Notes 07: Regularization

2022-07-29 12:06:00 【3077491278】

7 Regularization

7.1 The Problem of Overfitting

What overfitting means

Underfitting occurs when the learning algorithm has high bias: the hypothesis fits the training data poorly.

Overfitting occurs when the learning algorithm has high variance: the hypothesis fits the training data almost perfectly, but it has so many parameters that there is not enough data to constrain them, so it fails to generalize to new examples.

If there are very many features and very few training examples, the learned model may fit the training set very well (the cost function may be almost 0) but fail to generalize to new data.
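As a rough illustration with made-up toy data: when a model has as many parameters as there are training examples, least squares can drive the training cost to exactly 0. A degree-4 polynomial has 5 coefficients, so it can pass through any 5 points. For brevity this sketch evaluates that interpolating polynomial in Lagrange form rather than solving for θ; the function name `lagrange_predict` is hypothetical.

```python
def lagrange_predict(xs, ys, x):
    """Evaluate the unique degree-(m-1) polynomial through the m points (xs, ys) at x."""
    total = 0.0
    for k, (xk, yk) in enumerate(zip(xs, ys)):
        basis = 1.0
        for j, xj in enumerate(xs):
            if j != k:
                basis *= (x - xj) / (xk - xj)
        total += yk * basis
    return total


# Made-up training data: roughly y = x plus a little noise.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [0.0, 1.2, 1.9, 3.1, 4.0]

# Training cost (1/2m) * sum of squared errors: the curve hits every training point.
m = len(xs)
train_cost = sum((lagrange_predict(xs, ys, x) - y) ** 2 for x, y in zip(xs, ys)) / (2 * m)
print(f"training cost: {train_cost:.2e}")

# A new input outside the training range; the overall trend suggests a value near 8.
print(f"prediction at x=8: {lagrange_predict(xs, ys, 8.0):.1f}")
```

The training cost is 0, yet the prediction at x = 8 comes out at about -74.4, nowhere near the value of roughly 8 that the data suggest. This is the "fits the training set, fails on new data" behaviour described above.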

Ways to address overfitting

- Reduce the number of features: manually choose which features to keep, or use a model selection algorithm (covered later) to choose them automatically. The drawback is that discarding features also discards the information they carry.

- Regularization: keep all the features, but reduce the magnitude of the parameters.

- Plot the hypothesis and choose a suitable polynomial degree based on whether the curve looks distorted. In most problems, however, there are many features and the hypothesis cannot be visualized, so this approach does not work.

Summary

Overfitting occurs when the learning algorithm has high variance: it fits the training data well but generalizes poorly.

Overfitting can generally be addressed by reducing the number of features or by regularization.

7.2 Cost Function

Regularization

Overfitting is often caused by a hypothesis whose polynomial degree is too high. If the coefficients of the higher-order terms are pushed close to 0, the hypothesis behaves like a lower-degree, smoother function, which mitigates overfitting.
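As an illustration of this idea (the fourth-degree hypothesis and the weight 1000 are example choices, not values from a particular dataset), suppose $h_\theta(x)=\theta_0+\theta_1x+\theta_2x^2+\theta_3x^3+\theta_4x^4$ overfits. Adding large penalties on the two highest-order coefficients,

$$\min_{\theta}\ \frac{1}{2m}\sum_{i=1}^{m}\left(h_{\theta}\left(x^{(i)}\right)-y^{(i)}\right)^{2}+1000\,\theta_{3}^{2}+1000\,\theta_{4}^{2}$$

makes any sizeable $\theta_3$ or $\theta_4$ very expensive, so the minimizer chooses $\theta_3\approx 0$ and $\theta_4\approx 0$ and the fitted curve is close to a quadratic.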

When there are many features, we do not know in advance which ones (for example, which high-order terms) should be shrunk, so we modify the cost function to penalize all of the parameters. Concretely, we add a regularization term to the original cost function:

$$J(\theta)=\frac{1}{2m}\left[\sum_{i=1}^{m}\left(h_{\theta}\left(x^{(i)}\right)-y^{(i)}\right)^{2}+\lambda \sum_{j=1}^{n} \theta_{j}^{2}\right]$$

Note that $\theta_0$ is not penalized. This is a convention; in practice, whether or not it is included makes little difference to the result.
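The cost function above can be sketched in plain Python as follows. This is a minimal illustration, assuming each row of `X` is a list of features that already starts with the bias feature $x_0 = 1$; the name `regularized_cost` is hypothetical.

```python
def regularized_cost(theta, X, y, lam):
    """J(theta) for linear regression with an L2 penalty that skips theta[0].

    Each row of X is assumed to start with the bias feature x0 = 1.
    """
    m = len(y)
    squared_errors = 0.0
    for row, target in zip(X, y):
        prediction = sum(t * x for t, x in zip(theta, row))  # h_theta(x) = theta^T x
        squared_errors += (prediction - target) ** 2
    penalty = lam * sum(t ** 2 for t in theta[1:])  # j = 1..n; theta_0 is excluded
    return (squared_errors + penalty) / (2 * m)


X = [[1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]
y = [2.0, 4.0, 6.0]
print(regularized_cost([0.0, 2.0], X, y, lam=0.0))  # perfect fit, no penalty: 0.0
print(regularized_cost([0.0, 2.0], X, y, lam=3.0))  # only the penalty 3 * 2^2 / (2*3) = 2.0
```

With $\theta = (0, 2)$ the data are fitted exactly, so the first call gives 0.0 and the second gives only the penalty term, 2.0.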

$\lambda \sum_{j=1}^{n} \theta_{j}^{2}$ is the regularization term and $\lambda$ is the regularization parameter. $\lambda$ controls the trade-off between two goals: fitting the training set well, and keeping the parameters small (to avoid overfitting).

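The software that minimizes the cost function then decides how strongly each parameter is shrunk. The result is a simpler hypothesis that is less prone to overfitting.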

Choosing the regularization parameter

If $\lambda$ is too large, then to make the cost function as small as possible, all $\theta_j$ (excluding $\theta_0$) are driven toward 0. The hypothesis reduces to $h_\theta(x) \approx \theta_0$, a straight line parallel to the $x$-axis, which underfits the data.
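The effect of a very large $\lambda$ can be seen in the simplest case of one feature. Setting both partial derivatives of $\sum_i(\theta_0+\theta_1x^{(i)}-y^{(i)})^2+\lambda\theta_1^2$ to zero gives $\theta_1 = S_{xy}/(S_{xx}+\lambda)$ and $\theta_0 = \bar{y}-\theta_1\bar{x}$, where $S_{xx}$ and $S_{xy}$ are the centered sums of squares and cross-products. The 1/(2m) factor in $J(\theta)$ does not change the minimizer. The sketch below uses this closed form on made-up data; the name `fit_one_feature` is hypothetical.

```python
def fit_one_feature(xs, ys, lam):
    """Exact minimiser of sum((theta0 + theta1*x - y)^2) + lam * theta1^2.

    theta0 is not penalised, matching the regularized cost function above.
    """
    m = len(xs)
    x_mean = sum(xs) / m
    y_mean = sum(ys) / m
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    theta1 = sxy / (sxx + lam)          # shrinks toward 0 as lam grows
    theta0 = y_mean - theta1 * x_mean   # tends to mean(y): a horizontal line
    return theta0, theta1


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
for lam in (0.0, 5.0, 1e6):
    theta0, theta1 = fit_one_feature(xs, ys, lam)
    print(f"lambda={lam:g}: theta0={theta0:.4f}, theta1={theta1:.4f}")
```

As $\lambda$ goes from 0 to 5 to $10^6$, $\theta_1$ falls from 2 to 1 to nearly 0, while $\theta_0$ moves toward the mean of $y$ (here 5). The fit degenerates into the horizontal, underfitting line described above.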

Regularization therefore only works well when $\lambda$ is set to a reasonable value.

Summary

The regularization term keeps the parameters small, which helps prevent overfitting.

7.3 Regularized Linear Regression

Gradient descent for regularized linear regression

The cost function of regularized linear regression is

$$J(\theta)=\frac{1}{2m}\left[\sum_{i=1}^{m}\left(h_{\theta}\left(x^{(i)}\right)-y^{(i)}\right)^{2}+\lambda \sum_{j=1}^{n} \theta_{j}^{2}\right]$$

Gradient descent repeats the following updates until convergence, updating all $\theta_j$ simultaneously ($j = 1, \dots, n$ in the second line):

$$\begin{aligned}
\theta_{0} &:= \theta_{0}-\alpha \frac{1}{m} \sum_{i=1}^{m}\left(h_{\theta}\left(x^{(i)}\right)-y^{(i)}\right) x_{0}^{(i)} \\
\theta_{j} &:= \theta_{j}-\alpha\left[\frac{1}{m} \sum_{i=1}^{m}\left(h_{\theta}\left(x^{(i)}\right)-y^{(i)}\right) x_{j}^{(i)}+\frac{\lambda}{m} \theta_{j}\right]
\end{aligned}$$

where $\alpha$ is the learning rate.
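The two update rules above can be sketched in plain Python as batch gradient descent. This is a minimal sketch, assuming each row of `X` starts with $x_0 = 1$. The function names `gradient_descent_step` and `gradient_descent` are hypothetical, and the learning rate and iteration count in the example are illustrative.

```python
def gradient_descent_step(theta, X, y, alpha, lam):
    """One simultaneous update of every theta_j for regularized linear regression.

    Each row of X is assumed to start with the bias feature x0 = 1.
    """
    m = len(y)
    # h_theta(x^(i)) - y^(i) for every training example, computed with the old theta
    errors = [sum(t * x for t, x in zip(theta, row)) - target for row, target in zip(X, y)]
    new_theta = []
    for j in range(len(theta)):
        grad = sum(e * row[j] for e, row in zip(errors, X)) / m
        if j > 0:  # theta_0 gets no regularization term
            grad += (lam / m) * theta[j]
        new_theta.append(theta[j] - alpha * grad)
    return new_theta


def gradient_descent(X, y, alpha, lam, iterations):
    theta = [0.0] * len(X[0])
    for _ in range(iterations):
        theta = gradient_descent_step(theta, X, y, alpha, lam)
    return theta


X = [[1.0, 1.0], [1.0, 2.0], [1.0, 3.0], [1.0, 4.0]]
y = [2.0, 4.0, 6.0, 8.0]
print(gradient_descent(X, y, alpha=0.1, lam=5.0, iterations=2000))
```

For this toy data the exact regularized minimizer with $\lambda = 5$ is $\theta = (2.5, 1.0)$, and the iterates converge to it. With $\lambda = 0$ they converge to the unregularized fit $(0, 2)$ instead.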

Rearranging the second update gives

$$\theta_{j}:=\theta_{j}\left(1-\alpha \frac{\lambda}{m}\right)-\alpha \frac{1}{m} \sum_{i=1}^{m}\left(h_{\theta}\left(x^{(i)}\right)-y^{(i)}\right) x_{j}^{(i)}$$

where $1-\alpha\frac{\lambda}{m}$ is a value slightly less than 1. In other words, the only change from unregularized gradient descent is that each iteration first shrinks $\theta_j$ by this factor and then applies the usual update.
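To get a feel for the numbers, take the hypothetical values $\alpha = 0.01$, $\lambda = 1$ and $m = 100$ (chosen only for illustration):

$$1-\alpha\frac{\lambda}{m} = 1 - \frac{0.01 \times 1}{100} = 1 - 0.0001 = 0.9999$$

Each iteration therefore multiplies $\theta_j$ by $0.9999$ before subtracting the usual gradient term.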

Normal equation for regularized linear regression

For ordinary linear regression, the normal equation gives

$$\theta=\left(X^{T} X\right)^{-1} X^{T} y$$

For regularized linear regression, it becomes

$$\theta=\left(X^{T} X+\lambda\left[\begin{array}{lllll}0 & & & & \\ & 1 & & & \\ & & 1 & & \\ & & & \ddots & \\ & & & & 1\end{array}\right]\right)^{-1} X^{T} y$$

where the matrix multiplied by $\lambda$ is $(n+1)\times(n+1)$, with 0 in the top-left entry (so $\theta_0$ is not penalized) and 1 in every other diagonal entry.
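The regularized normal equation can be sketched in plain Python. This is a minimal sketch, assuming each row of `X` starts with $x_0 = 1$. It solves the linear system $(X^TX+\lambda L)\,\theta = X^Ty$ by Gaussian elimination instead of forming the inverse explicitly, which is a common numerical choice and not something the notes prescribe. The helper names `solve` and `regularized_normal_equation` are hypothetical.

```python
def solve(A, b):
    """Solve A v = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [bi] for row, bi in zip(A, b)]  # augmented matrix [A | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    v = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        v[r] = (M[r][n] - sum(M[r][c] * v[c] for c in range(r + 1, n))) / M[r][r]
    return v


def regularized_normal_equation(X, y, lam):
    """theta = (X^T X + lam * L)^(-1) X^T y with L = diag(0, 1, ..., 1).

    Rows of X start with x0 = 1.
    """
    n = len(X[0])
    A = [[sum(row[i] * row[j] for row in X) for j in range(n)] for i in range(n)]
    for i in range(1, n):  # the top-left entry stays unpenalised (theta_0)
        A[i][i] += lam
    b = [sum(row[i] * target for row, target in zip(X, y)) for i in range(n)]
    return solve(A, b)


X = [[1.0, 1.0], [1.0, 2.0], [1.0, 3.0], [1.0, 4.0]]
y = [2.0, 4.0, 6.0, 8.0]
print(regularized_normal_equation(X, y, lam=5.0))
```

On this toy data the result is $\theta \approx (2.5, 1.0)$, the same minimizer that gradient descent approaches. With $\lambda > 0$ and $x_0 = 1$, the matrix $X^TX+\lambda L$ is invertible even when there are fewer examples than features, so the solve also succeeds for a single row such as `[[1.0, 1.0, 2.0]]`.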

Summary

This section extends both gradient descent and the normal equation to regularized linear regression.

7.4 Regularized Logistic Regression

As with regularized linear regression, we add a regularization term to the logistic regression cost function:

$$J(\theta)=\frac{1}{m} \sum_{i=1}^{m}\left[-y^{(i)} \log \left(h_{\theta}\left(x^{(i)}\right)\right)-\left(1-y^{(i)}\right) \log \left(1-h_{\theta}\left(x^{(i)}\right)\right)\right]+\frac{\lambda}{2 m} \sum_{j=1}^{n} \theta_{j}^{2}$$
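A minimal plain-Python sketch of this cost function follows, assuming rows of `X` start with $x_0 = 1$ and labels are 0 or 1. The names `sigmoid` and `logistic_cost` are hypothetical. There is no guard against `log(0)`, so the sketch assumes $\theta^Tx$ stays moderate.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def logistic_cost(theta, X, y, lam):
    """Regularized logistic regression cost J(theta); theta_0 is not penalised.

    Rows of X start with x0 = 1 and labels are 0 or 1. No guard against log(0).
    """
    m = len(y)
    total = 0.0
    for row, target in zip(X, y):
        h = sigmoid(sum(t * x for t, x in zip(theta, row)))
        total += -target * math.log(h) - (1 - target) * math.log(1 - h)
    penalty = (lam / (2 * m)) * sum(t ** 2 for t in theta[1:])
    return total / m + penalty


X = [[1.0, -2.0], [1.0, -1.0], [1.0, 1.0], [1.0, 2.0]]
y = [0, 0, 1, 1]
print(logistic_cost([0.0, 0.0], X, y, lam=1.0))  # h = 0.5 everywhere: log(2), about 0.6931
print(logistic_cost([0.0, 1.0], X, y, lam=1.0))
```

With $\theta = 0$ every prediction is 0.5, so the cost is $\log 2$ whatever the labels. For a fixed $\theta$, raising $\lambda$ adds exactly $\frac{\lambda}{2m}\sum_{j\ge1}\theta_j^2$.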

The resulting gradient descent updates are:

$$\theta_{0}:=\theta_{0}-\alpha \frac{1}{m} \sum_{i=1}^{m}\left(h_{\theta}\left(x^{(i)}\right)-y^{(i)}\right) x_{0}^{(i)}$$

$$\theta_{j}:=\theta_{j}-\alpha\left[\frac{1}{m} \sum_{i=1}^{m}\left(h_{\theta}\left(x^{(i)}\right)-y^{(i)}\right) x_{j}^{(i)}+\frac{\lambda}{m} \theta_{j}\right]$$

Although these look identical to the updates for regularized linear regression, here $h_\theta(x)=\frac{1}{1+e^{-\theta^{T}x}}$ is the sigmoid function, so the algorithm is different.
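The updates above can be sketched as batch gradient descent in plain Python. This is a minimal sketch, assuming rows of `X` start with $x_0 = 1$. The function name `logistic_gradient_descent` is hypothetical, and the learning rate, iteration count and toy data are illustrative choices.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def logistic_gradient_descent(X, y, alpha, lam, iterations):
    """Batch gradient descent for regularized logistic regression.

    Same update form as linear regression, but h_theta is the sigmoid.
    Rows of X start with x0 = 1; theta_0 is not regularized.
    """
    m, n = len(y), len(X[0])
    theta = [0.0] * n
    for _ in range(iterations):
        errors = [sigmoid(sum(t * x for t, x in zip(theta, row))) - target
                  for row, target in zip(X, y)]
        grads = [sum(e * row[j] for e, row in zip(errors, X)) / m for j in range(n)]
        for j in range(1, n):
            grads[j] += (lam / m) * theta[j]
        theta = [t - alpha * g for t, g in zip(theta, grads)]  # simultaneous update
    return theta


X = [[1.0, -2.0], [1.0, -1.0], [1.0, 1.0], [1.0, 2.0]]
y = [0, 0, 1, 1]
weak = logistic_gradient_descent(X, y, alpha=0.1, lam=1.0, iterations=3000)
strong = logistic_gradient_descent(X, y, alpha=0.1, lam=10.0, iterations=3000)
print(weak, strong)
```

This toy data is linearly separable, so without regularization $\theta_1$ would keep growing. With $\lambda = 1$ it settles at roughly 1.0, and with $\lambda = 10$ at roughly 0.24. $\theta_0$ stays near 0 because the data are symmetric about $x = 0$.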

Summary

This section extends gradient descent to regularized logistic regression. Unlike linear regression, logistic regression has no normal-equation solution.

边栏推荐

- DAY 25 daily SQL clock 】 【 丨 different sex daily score a total difficulty moderate 】 【

- 路径依赖 - 偶然决策导致的依赖。

- Pangolin库链接库问题

- 游戏合作伙伴专题:BreederDAO 与《王国联盟》结成联盟

- DAY 24 daily SQL clock 】 【 丨 find the beginning and end of the continuum digital difficulty moderate 】 【

- 微信怎么知道别人删除了你?批量检测方法(建群)

- 微信发红包测试用例

- 爱可可AI前沿推介(7.29)

- 第二章总结

- LMO·3rd - 报名通知

猜你喜欢

Paddle frame experience evaluation and exchange meeting, the use experience of the product is up to you!

AI cocoa AI frontier introduction (7.29)

【第三次自考】——总结

Recursion - Eight Queens Problem

Codeforces Round #797 (Div. 3)个人题解

Learning with Recoverable Forgetting阅读心得

JVM内存模型如何分配的?

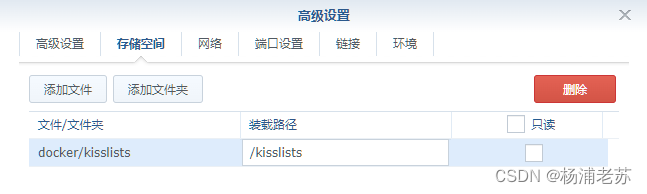

最简单的共享列表服务器KissLists

Codeforces Round # 797 (Div. 3) personal answer key

mapbox 地图 生成矢量数据圆

随机推荐

第二章总结

Learn weekly - 64 - a v2ex style source BBS program

【day04】IDEA, method

飞桨框架体验评测交流会,产品的使用体验由你来决定!

QCon Guangzhou Station is here!Exclusive custom backpacks are waiting for you!

Network layer and transport layer restrictions

2.2 Selection sort

2.2选择排序

路径依赖 - 偶然决策导致的依赖。

【每日SQL打卡】DAY 21丨报告系统状态的连续日期【难度困难】

WordPress 固定链接设置

MarkDown高阶语法手册

金仓数据库KingbaseES客户端编程接口指南-JDBC(4. JDBC 创建语句对象)

1.4, stack

Collections.singletonList(T o)

Basic knowledge of redis database learning - basic, commonly used

WordPress 编辑用户

MFC学习备忘

【每日SQL打卡】DAY 26丨餐馆营业额变化增长【难度中等】

AMH6.X升级到AMH7.0后,登录后台提示MySQL连接出错怎么解决?