
Google Hacking: Search Engine Attacks and Prevention

2022-06-24 12:28:00 【Tiancun information】

Google Hacking, sometimes called Google dorking, is a technique that uses Google's advanced search features to collect information. The concept was proposed and popularized in the early 2000s by the hacker Johnny Long. He documented a series of Google Hacking techniques in his book "Google Hacking for Penetration Testers", which drew the attention of the media and the public. At DEFCON 13, Johnny coined the term "Googledork", meaning "a foolish or inept person whose information has been exposed by Google". His point was that it is not Google's fault that this information can be found. The exposure is caused by users, or by misconfigurations they unknowingly make when installing programs. Over time, "dork" became shorthand for the act of searching for sensitive information.

Attackers can use Google's advanced operators to do the following:

- search for vulnerable web applications or specific file types (.pwd, .sql, ...);
- look for security vulnerabilities in web applications;
- collect information about a target;
- discover leaked sensitive information or error messages;
- find files containing credentials and other sensitive data.

Although Google is not directly accessible in mainland China, as technical practitioners we should find a proper way to reach it. Also, although the technique is called "Google Hacking", the same ideas and similar search techniques work with other search engines. This article is therefore only an introduction, and the techniques can be applied flexibly in other search scenarios. Just watch for the small differences in how each search engine handles search operators.

I. Search Basics

- Use double quotes (" ") to search for an exact phrase;

- Keywords are not case-sensitive;

- You can use the wildcard (*);

- Some words are ignored in searches. These are called stop words, for example "how" and "where";

- A query can contain at most 32 words, but Google does not count the wildcard (*) toward this limit, so wildcards can be used to stretch a query;

- Boolean operators and special characters:

  - Plus sign + (AND): forces the word that follows it to be included in the search, with no space after the plus sign. It also makes words that Google ignores by default searchable;
  - Minus sign - (NOT): forces the word that follows it to be excluded, with no space after the minus sign;
  - Pipe | (OR): matches any of the keywords separated by the pipe.
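The rules above can be sketched as a small query builder. This is a minimal illustration in Python. `build_query` and `word_count` are hypothetical helper names. The word count approximates the 32-word limit by skipping `*`, as described above, and, as an added assumption, by also skipping the `|` operator; it is not Google's documented algorithm.

```python
def build_query(terms=(), required=(), excluded=(), any_of=()):
    """Compose a query from the basic building blocks (hypothetical helper)."""
    parts = []
    for t in terms:
        # Multi-word terms are wrapped in double quotes so they match as a phrase.
        parts.append(f'"{t}"' if " " in t else t)
    parts += [f"+{w}" for w in required]  # no space between + and the word
    parts += [f"-{w}" for w in excluded]  # no space between - and the word
    if any_of:
        parts.append(" | ".join(any_of))  # | acts as OR
    return " ".join(parts)


def word_count(query):
    """Approximate the 32-word count: bare * and | are not counted (assumption)."""
    words = query.replace('"', " ").split()
    return sum(1 for w in words if w not in ("*", "|"))


q = build_query(terms=["index of"], required=["how"], excluded=["html"], any_of=["sql", "bak"])
print(q, word_count(q))
```

Keeping the word count separate from the builder makes it easy to check a query against the limit before sending it.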

II. Advanced Operators

In Google Hacking, advanced operators narrow down the search results until you get the information you need. They are easy to use, but they follow a strict syntax.

1. Things to know

- The basic syntax is operator:search_term, with no space on either side of the colon;

- Boolean operators and advanced operators can be combined;

- Multiple advanced operators can be used together in a single search;

- Operators that begin with "all" can be used only once per search and cannot be combined with other advanced operators.
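These syntax rules can be turned into a rough checker. The sketch below uses a hypothetical helper, `check_query`, that only recognizes the operators described in this article and applies the rules listed above literally. Google itself may be more or less forgiving.

```python
import re

# Operators described in this article; any other "word:" (e.g. "http:") is ignored.
OPERATORS = {
    "intitle", "allintitle", "allintext", "inurl", "allinurl", "site",
    "filetype", "link", "inanchor", "cache", "numrange", "daterange",
    "info", "related", "stocks", "define",
}


def check_query(query: str) -> list[str]:
    """Return a list of rule violations found in query (empty list = looks fine)."""
    problems = []
    ops = []
    # Capture the operator name and an optional whitespace character after the colon.
    for m in re.finditer(r"\b([a-z]+):(\s?)", query, re.IGNORECASE):
        name = m.group(1).lower()
        if name not in OPERATORS:
            continue
        ops.append(name)
        if m.group(2):
            problems.append(f"space after '{name}:'")
    all_ops = [op for op in ops if op.startswith("all")]
    if len(all_ops) > 1:
        problems.append("more than one all* operator")
    if all_ops and len(ops) > len(all_ops):
        problems.append("all* operator combined with other advanced operators")
    return problems
```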

2. Basic operators

· intitle & allintitle ·

Use intitle to search page titles, that is, the content of the HTML title tag. For example, searching

intitle:"Index of"

returns all results whose title tag contains the phrase "Index of". allintitle works like intitle, but it can be followed by several terms. For example,

allintitle:"Index of" "backup files"

returns all results whose title tag contains both "Index of" and "backup files". However, allintitle comes with significant restrictions: the search is confined to the title, and it cannot be combined with other advanced operators. In practice it is better to use several intitle operators than a single allintitle.
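Following the advice to prefer several intitle operators over one allintitle, a hypothetical helper can expand a list of phrases into repeated single-phrase operators. The result can then be combined freely with other operators such as site. The domain example.com is a placeholder.

```python
def repeat_operator(op: str, phrases: list[str]) -> str:
    """Expand allintitle-style input into op:"p1" op:"p2" ... (hypothetical helper)."""
    return " ".join(f'{op}:"{p}"' for p in phrases)


# Unlike allintitle, the expanded form can be mixed with other operators.
query = repeat_operator("intitle", ["Index of", "backup files"]) + " site:example.com"
print(query)
```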

· allintext ·

This is the easiest operator to understand: it returns pages whose text contains all of the search terms. Like the other "all" operators, allintext cannot be combined with other advanced operators.

· inurl & allinurl ·

Once intitle is clear, inurl is easy to understand: it searches the content of a page's URL. In practice, however, inurl does not always behave as expected, for the following reasons:

- Google does not search the protocol part of a URL (such as http://) very effectively;
- URLs usually contain many special characters, and to accommodate them, the results are less precise than expected;
- Other advanced operators (such as site and filetype) search specific parts of the URL, and they do so far more efficiently than inurl.

So inurl is not as convenient as intitle. Still, despite its problems, inurl is indispensable in Google Hacking.

Like intitle, inurl has a corresponding operator, allinurl. Because allinurl cannot be combined with other advanced operators, it is best to use several inurl operators when you want to search for multiple keywords in a URL.

· site ·

The site operator restricts the search to a specific website. For example, searching

site:apple.com

returns only content under the apple.com domain or its subdomains, such as www.apple.com. Note that Google "reads" domain names from right to left, the opposite of how people read them. If you search

site:aa

Google looks for domains ending in .aa, not domains beginning with aa.
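To make the right-to-left reading concrete, here is a rough emulation of how a site: filter matches host names label by label, starting from the top-level domain. It is only an illustration (`site_matches` is a hypothetical name), not Google's actual matching code.

```python
def site_matches(host: str, site: str) -> bool:
    """Approximate site: matching by comparing domain labels right to left."""
    host_labels = host.lower().rstrip(".").split(".")
    site_labels = site.lower().rstrip(".").split(".")
    if len(site_labels) > len(host_labels):
        return False
    # The rightmost labels of the host must equal the site's labels exactly.
    return host_labels[-len(site_labels):] == site_labels


print(site_matches("www.apple.com", "apple.com"))       # subdomain of apple.com
print(site_matches("aa.example.com", "aa"))             # starts with aa, does not end with .aa
```

Comparing whole labels rather than raw string suffixes keeps notapple.com from matching site:apple.com.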

· filetype ·

The filetype operator searches for a given file type, that is, files with the specified suffix. For example, searching

filetype:php

returns URLs ending in .php. This operator is often combined with other advanced operators to get more precise results.
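A rough offline emulation of filetype: is useful for filtering URLs you have already collected. It assumes the operator keys off the extension of the URL path and ignores query strings. `has_filetype` is a hypothetical helper.

```python
from pathlib import PurePosixPath
from urllib.parse import urlparse


def has_filetype(url: str, ext: str) -> bool:
    """True if the URL path ends in .ext (case-insensitive); the query string is ignored."""
    suffix = PurePosixPath(urlparse(url).path).suffix
    return suffix.lower() == "." + ext.lower().lstrip(".")


urls = ["https://example.com/admin/login.php?id=1", "https://example.com/php/"]
print([u for u in urls if has_filetype(u, "php")])
```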

· link ·

The link operator finds pages that link to the specified URL. Its argument can be a simple URL or a complex, complete one. link cannot be combined with other advanced operators or keywords.

· inanchor ·

The inanchor operator searches the anchor text of HTML link tags. Anchor text is the visible text that describes a hyperlink on a web page, as in the following HTML:

<a href="http://en.wikipedia.org/wiki/Main_Page">Wikipedia</a>

Here, "Wikipedia" is the anchor text of the link.
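To see exactly what inanchor matches, you can extract anchor text with Python's standard `html.parser`. The class name `AnchorTextParser` is hypothetical. The sketch records the href and visible text of every <a> tag that has an href.

```python
from html.parser import HTMLParser


class AnchorTextParser(HTMLParser):
    """Collect (href, anchor text) pairs from <a href=...> tags."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None   # href of the <a> tag we are currently inside, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


p = AnchorTextParser()
p.feed('<a href="http://en.wikipedia.org/wiki/Main_Page">Wikipedia</a>')
p.close()
print(p.links)
```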

· cache ·

When Google crawls a website, it saves a snapshot of each page and creates a link to it; this is also known as the web cache. The cache operator retrieves the snapshot of a specified URL. The snapshot is not immediately affected when the original page disappears or changes.

· numrange ·

The numrange operator takes two numbers separated by "-" to specify a range, for example:

numrange:1234-1235

Google also offers a simpler syntax for number ranges, 1234..1235, which searches the same range without the numrange operator.

· daterange ·

The daterange operator restricts results to pages that Google indexed within a specified time range. The dates after the operator are written as Julian Day numbers; see the relevant documentation for an explanation of Julian dates. An online conversion tool can give you the Julian Day number you need, for example: www.onlineconversion.com/julian_date.htm
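The Julian Day number can also be computed locally instead of with an online tool. In Python, `date.toordinal()` counts days from 0001-01-01 (ordinal 1). The Julian Day Number of a Gregorian date is that ordinal plus 1721425. The helper names below are hypothetical.

```python
from datetime import date

# date.toordinal() gives 1 for 0001-01-01; adding this offset yields the
# Julian Day Number (the Julian Date at noon on that Gregorian day).
JDN_OFFSET = 1721425


def julian_day(d: date) -> int:
    return d.toordinal() + JDN_OFFSET


def daterange(start: date, end: date) -> str:
    """Build a daterange: term covering start..end (inclusive)."""
    return f"daterange:{julian_day(start)}-{julian_day(end)}"


print(julian_day(date(2000, 1, 1)))  # 2451545
print(daterange(date(2000, 1, 1), date(2000, 1, 31)))
```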

· info ·

The info operator returns summary information about a site. Its argument must be a complete site name; otherwise the correct results are not returned. info cannot be combined with other operators.

· related ·

The related operator finds pages that are related or similar to the given URL. related cannot be combined with other operators.

· stocks ·

The stocks operator searches for stock information. stocks cannot be combined with other operators.

· define ·

The define operator searches for definitions of a keyword. define cannot be combined with other operators.

III. Simple Applications

1. Harvesting email addresses

When testing a target, Google Hacking can help us find plenty of information. Collecting related email addresses, which are often also the site's usernames, is a simple yet powerful example of Google Hacking in practice.

First, we search Google for "@gmail.com". The results are not well organized, but they do contain what we need.

Next, we use Lynx, a text-mode web browser for Linux, to dump all the results to a file:

lynx --dump 'http://www.google.com/search?q=@gmail.com' > test.html

Finally, use grep with a regular expression to extract all the email addresses:

grep -Eo '[A-Za-z0-9+._-]+@([A-Za-z0-9-]+\.)+[A-Za-z]{2,6}' test.html | sort -u

There are, of course, more "complete" regular expressions that cover more email address formats (see emailregex, for example). This is only an illustration of how Google search alone can gather basic information.
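The same extraction can also be done without grep. This sketch applies an equivalent regular expression in Python and removes duplicates. It is no more complete than a simple grep pattern, and the helper name is hypothetical.

```python
import re

# Local part, "@", one or more domain labels ending in ".", then a 2-6 letter TLD.
EMAIL_RE = re.compile(r"[A-Za-z0-9+._-]+@(?:[A-Za-z0-9-]+\.)+[A-Za-z]{2,6}")


def extract_emails(text: str) -> list[str]:
    """Unique addresses in order of first appearance, lower-cased for de-duplication."""
    seen = {}
    for m in EMAIL_RE.finditer(text):
        seen.setdefault(m.group(0).lower(), None)
    return list(seen)


sample = "Contact alice@gmail.com or Bob.Smith+news@gmail.com; alice@gmail.com again"
print(extract_emails(sample))
```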

2. Basic website crawling

As security testers, when we need to collect information about a specific website, we can use the site operator to target a site, domain, or subdomain.

The search returns many results, and Google ranks the most prominent ones first. What we usually want, though, are not the common pages but results we would not normally see. We can use - to filter the results.

After excluding several sites that dominated the first results, we search for:

site:microsoft.com -site:www.microsoft.com -site:translator.microsoft.com -site:appsource.microsoft.com -site:bingads.microsoft.com -site:imagine.microsoft.com


The results no longer include the sites that appeared in the first search. To dig further you have to keep repeating the filtering, and the query will eventually hit Google's 32-word limit. Still, this approach makes domain collection easy, if somewhat tedious.
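The repeated filtering is easy to script. The sketch below uses hypothetical helper names. It appends one -site: term per host already collected and refuses to exceed the 32-word limit, assuming each -site: term counts as one word. It then URL-encodes the query in the same q/num form used by the lynx command that follows.

```python
from urllib.parse import urlencode

MAX_WORDS = 32  # Google's query-length limit; one word per term is an assumption


def exclusion_query(domain: str, seen_hosts: list[str]) -> str:
    """site:domain followed by -site:host for every host already collected."""
    parts = [f"site:{domain}"] + [f"-site:{h}" for h in seen_hosts]
    if len(parts) > MAX_WORDS:
        raise ValueError(f"query has {len(parts)} words; the limit is {MAX_WORDS}")
    return " ".join(parts)


def search_url(query: str, num: int = 100) -> str:
    # urlencode percent-encodes ":" and turns spaces into "+".
    return "https://www.google.com/search?" + urlencode({"q": query, "num": num})


q = exclusion_query("microsoft.com", ["www.microsoft.com"])
print(search_url(q))
```

Each round, add the newly found hosts to `seen_hosts` and rebuild the query until the limit is reached.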

As before, Lynx can simplify the process a little:

lynx --dump 'https://www.google.com/search?q=site:microsoft.com+-site:www.microsoft.com&num=100' > test.html

grep -Eo '([a-z0-9]([a-z0-9-]{0,61}[a-z0-9])?\.)+[a-z0-9][a-z0-9-]{0,61}[a-z0-9]' test.html | sort -u

【Advantages】

Collecting site and domain names is nothing new, but doing it through Google has several advantages:

- Low profile: no packets are sent directly to the target, so the target does not detect the activity;

- Simple: Google returns results in a ranked order, and the more useful information often sits further "down", so simply filtering the results can surface what you need;

- Directional: Google searches yield not only site and domain names but also email addresses, usernames, and other useful information, which often points to the next step of the test.
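Once the results are dumped to a file, the host names can also be pulled out in Python. This sketch uses a hypothetical function name. Unlike a generic hostname grep, it keeps only names that end in the target domain, and it de-duplicates them case-insensitively.

```python
import re


def find_subdomains(text: str, domain: str) -> list[str]:
    """Sorted, unique host names that end in `domain` (the bare domain itself excluded)."""
    label = r"[a-z0-9](?:[a-z0-9-]{0,61}[a-z0-9])?"  # one DNS label, 1-63 chars
    pattern = re.compile(rf"\b(?:{label}\.)+{re.escape(domain)}\b", re.IGNORECASE)
    return sorted({m.group(0).lower() for m in pattern.finditer(text)})


dump = ("https://docs.microsoft.com/en-us https://www.microsoft.com/ "
        "learn.microsoft.com microsoft.com example.org DOCS.Microsoft.com")
print(find_subdomains(dump, "microsoft.com"))
```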

IV. Advanced Applications

1. Google Hacking Database

www.exploit-db.com/google-hacking-database

The Google Hacking Database (GHDB) is an index of Internet search engine queries designed to uncover sensitive information that is publicly exposed on the Internet. In most cases such information should not be public, but for one reason or another search engines have crawled it and placed it on the open web. GHDB contains a large number of Google Hacking queries. If you want to improve your search skills or broaden your horizons, it is well worth a visit.

GHDB divides its queries into the following 13 categories:

- Footholds

- Files Containing Usernames

- Sensitive Directories

- Web Server Detection

- Vulnerable Files

- Error Messages

- Files Containing Juicy Info (valuable files)

- Files Containing Passwords

- Sensitive Online Shopping Info (e-commerce information)

- Network or Vulnerability Data (security-related data)

- Pages Containing Login Portals

- Various Online Devices

- Advisories and Vulnerabilities

As this shows, there is little Google Hacking cannot do; the main limit is imagination. Getting good at it takes a long period of study.

2. Using scripts

As mentioned earlier, Lynx and related command-line tools make it fairly easy to process Google data into the results we want. Google also provides a number of APIs that are easy to call, so scripts can gather the information we need more quickly and efficiently. The two scripts below use Google search and show how powerful and flexible scripting can be.
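As one possible scripting route, the sketch below assumes Google's Custom Search JSON API; the article does not say which API it means. The API needs an API key and a Programmable Search Engine ID, both placeholders here. The endpoint, the `key`, `cx`, `q`, and `start` parameters, and the `items[].link` response field are those of that API. The helper names are hypothetical.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

API_ENDPOINT = "https://www.googleapis.com/customsearch/v1"


def build_request(query: str, api_key: str, engine_id: str, start: int = 1) -> str:
    # key = API key, cx = search engine ID, q = query,
    # start = 1-based index of the first result (the API pages results in batches of at most 10).
    params = {"key": api_key, "cx": engine_id, "q": query, "start": start}
    return f"{API_ENDPOINT}?{urlencode(params)}"


def result_links(response: dict) -> list[str]:
    # Hits are listed under "items"; the key is absent when nothing matched.
    return [item["link"] for item in response.get("items", [])]


def search(query: str, api_key: str, engine_id: str) -> list[str]:
    """Perform one live request (needs network access and valid credentials)."""
    with urlopen(build_request(query, api_key, engine_id), timeout=10) as resp:
        return result_links(json.load(resp))
```

Separating URL construction and response parsing from the network call makes each part easy to test offline.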

· dns-mine ·

github.com/sensepost/SP-DNS-mine

dns-mine.pl achieves the site-crawling goal described earlier more quickly.

· bile ·

github.com/sensepost/BiLE-suite

The BiLE scripts use HTTrack and Google to find sites associated with the target site, weight each result with an algorithm, and output the results in ranked order.

V. Prevention

Given so many Google Hacking techniques, how can website operators defend against this seemingly pervasive attack?

1. Disable directory listings

An .htaccess file can usually prevent unauthorized access to the contents of a site's directories. On an Apache web server, you can also edit httpd.conf and set Options -Indexes -FollowSymLinks -MultiViews to prevent directory listings on the site.
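To verify that listings are really disabled, you can request a directory URL and look for the "Index of /" title. Apache's mod_autoindex and nginx's autoindex put this title on generated listings, and it is the same title the intitle:"Index of" search targets. A minimal sketch with hypothetical helper names:

```python
import re
from urllib.error import HTTPError
from urllib.request import urlopen

# Auto-generated listings are titled "Index of /some/path".
LISTING_TITLE = re.compile(r"<title>\s*Index of\s+/", re.IGNORECASE)


def looks_like_directory_listing(html: str) -> bool:
    return LISTING_TITLE.search(html) is not None


def check_url(url: str) -> bool:
    """Fetch url and report whether the server answers with a directory listing."""
    try:
        with urlopen(url, timeout=10) as resp:
            body = resp.read(65536).decode("utf-8", errors="replace")
    except HTTPError:
        # An error status such as 403 (typical after Options -Indexes) means no listing.
        return False
    return looks_like_directory_listing(body)
```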

2. Configure robots.txt properly

A /robots.txt file gives web robots instructions about your site; this is known as the Robots Exclusion Protocol.

Create robots.txt in the site root, for example:

User-agent: Baiduspider
Disallow: /

User-agent: Sosospider
Disallow: /

User-agent: sogou spider
Disallow: /

User-agent: YodaoBot
Disallow: /

User-agent: *
Disallow: /bin/
Disallow: /cgi-bin/

User-agent specifies which crawler a group of rules applies to, and Disallow specifies directories that crawler may not access. The example above blocks the Baidu, Soso, Sogou, and Youdao robots from crawling the site, and forbids all robots from crawling the /bin/ and /cgi-bin/ directories.
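Python's standard `urllib.robotparser` can check how a robots.txt file is interpreted. The sketch uses a shortened version of the example, with only two named crawlers, and places the /bin/ and /cgi-bin/ rules in a `User-agent: *` group. www.example.com is a placeholder host.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: Baiduspider
Disallow: /

User-agent: YodaoBot
Disallow: /

User-agent: *
Disallow: /bin/
Disallow: /cgi-bin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# A crawler named in its own group follows only that group; others fall back to "*".
for agent, path in [("Baiduspider", "/index.html"),
                    ("Googlebot", "/bin/tool"),
                    ("Googlebot", "/index.html")]:
    print(agent, path, parser.can_fetch(agent, "https://www.example.com" + path))
```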

3. Set the NOARCHIVE meta tag on pages

robots.txt can limit crawler access to your site, but it does not work as well for individual pages. Google and other search engines may still fetch a page and generate a snapshot of it. To handle this case, use a META tag:

<META NAME="ROBOTS" CONTENT="NOARCHIVE">

Adding this META tag to a page's head effectively prevents crawlers from generating a snapshot of that page.

4. Set the NOSNIPPET meta tag on pages

To stop search engines from generating a summary (snippet) of a page, you can also add a META tag:

<META NAME="BAIDUSPIDER" CONTENT="NOSNIPPET">

This prevents search engines from generating a summary of the page after crawling it, and NOSNIPPET also keeps them from generating a snapshot. The example addresses Baidu's crawler; NAME="ROBOTS" addresses all crawlers.
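A page's robots meta tags can be audited with `html.parser`. This hypothetical checker collects directives from meta tags addressed to "robots" or to a few named crawlers; the crawler list is an assumption. It reports values it does not recognize, which would catch a misspelled directive such as NOCARCHIVE.

```python
from html.parser import HTMLParser

CRAWLER_NAMES = {"robots", "googlebot", "baiduspider"}  # illustrative, extend as needed
KNOWN_DIRECTIVES = {"all", "none", "index", "noindex", "follow", "nofollow",
                    "noarchive", "nosnippet"}


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name=CRAWLER content=...>, keyed by lower-case name."""

    def __init__(self):
        super().__init__()
        self.directives: dict[str, set[str]] = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {k: (v or "") for k, v in attrs}  # attribute names arrive lower-cased
        name = attr.get("name", "").lower()
        if name not in CRAWLER_NAMES or "content" not in attr:
            return
        values = {v.strip().lower() for v in attr["content"].split(",") if v.strip()}
        self.directives.setdefault(name, set()).update(values)


def unknown_directives(html: str) -> dict[str, set[str]]:
    """Map each crawler name to the directives in html that are not recognized."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return {name: vals - KNOWN_DIRECTIVES
            for name, vals in parser.directives.items()
            if vals - KNOWN_DIRECTIVES}
```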

VI. Further Resources

Finally, here are two recommended sites that, compared with Google, focus more on collecting network security information.

1. ZoomEye (Zhong Kui's Eye)

www.zoomeye.org

ZoomEye is a cyberspace search engine. It records information about devices and websites on the Internet and the services or components they use.

ZoomEye has two detection engines, Xmap and Wmap. They probe devices and websites in cyberspace around the clock and identify the services and components they use. Researchers can use ZoomEye to see how widely a component is deployed and how far the impact of a vulnerability reaches.

Searchable information includes:

- Website component fingerprints: operating system, web server, server-side language, web development framework, web application, front-end libraries, third-party components, and so on;

- Host device fingerprints: built by integrating the results of large-scale NMAP scans.

2. Shodan

www.shodan.io

Shodan is a search engine that lets users find specific types of Internet-connected computers (webcams, routers, servers, and so on) using various filters. Some describe it as a search engine for service banners. A service banner is metadata that a server sends back to the client. It may include information about the server software, the options the service supports, a welcome message, or anything else the client can learn before interacting with the server.
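To see what a service banner looks like, you can read the first bytes a TCP service sends after a connection opens. This is roughly what banner-collecting engines record at scale. The sketch below is minimal, and the helper name is hypothetical. Services such as HTTP wait for the client to speak first, so for them it returns an empty string after the timeout.

```python
import socket


def grab_banner(host: str, port: int, timeout: float = 3.0, max_bytes: int = 1024) -> str:
    """Connect to host:port and return whatever the service sends first."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        try:
            data = sock.recv(max_bytes)
        except socket.timeout:
            return ""  # the service expects the client to send something first
    return data.decode("utf-8", errors="replace").strip()
```

An SSH server, for instance, typically announces itself with a line such as "SSH-2.0-..." as soon as the connection opens.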

A final reminder: treat privacy-related data with care when searching. Don't abuse the technology, or you may cause disputes and break the rules. (Huang Miaohua | Tiancun Information)

References

- J. Long - Google Hacking for Penetration Testers

- J. Long - Using Google as a Security Testing Tool

- Google Search Help

- Anchor text (Anchor_text)

- robots.txt explained

- ZoomEye (Zhong Kui's Eye)

- Shodan

- Julian date

边栏推荐

- New progress in the construction of meituan's Flink based real-time data warehouse platform

- Jenkins pipeline syntax

- Getting started with scrapy

- GTest从入门到入门

- 怎么可以打新债 开户是安全的吗

- [5 minutes to play lighthouse] create an immersive markdown writing environment

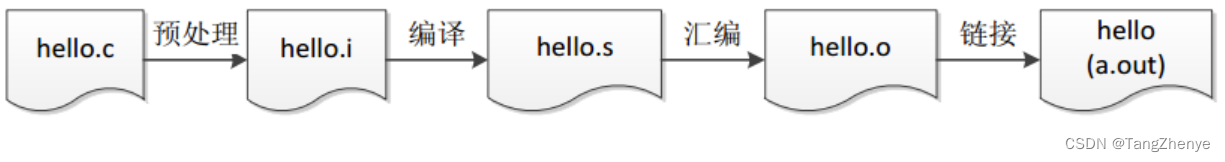

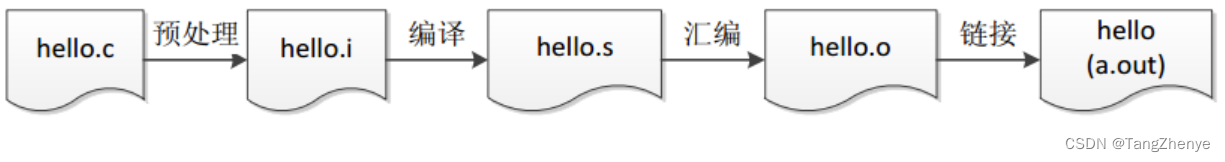

- 链接器 --- Linker

- As one of the bat, what open source projects does Tencent have?

- [mysql_16] variables, process control and cursors

- 9+!通过深度学习从结直肠癌的组织学中预测淋巴结状态

猜你喜欢

How to write controller layer code gracefully?

链接器 --- Linker

Installation and operation of libuv

GTEST from getting started to getting started

如何优雅的写 Controller 层代码?

Linker --- linker

【直播回顾】战码先锋第七期:三方应用开发者如何为开源做贡献

How is the e-commerce red envelope realized? For interview (typical high concurrency)

Opencv learning notes - regions of interest (ROI) and image blending

Deep parsing and implementation of redis pub/sub publish subscribe mode message queue

随机推荐

Conceptual analysis of DDD Domain Driven Design

"Meng Hua Lu" is about to have a grand finale. It's better to learn it first than to look ahead!

Introduction to C language circular statements (foe, while, do... While)

Installation and operation of libuv

Continuous testing | making testing more free: practicing automated execution of use cases in coding

Cryptography series: collision defense and collision attack

Can Tencent's tendis take the place of redis?

Chenglixin research group of Shenzhen People's hospital proposed a new method of multi group data in the diagnosis and prognosis analysis of hepatocellular carcinoma megps

Identification of new prognostic DNA methylation features in uveal melanoma by 11+ based on methylation group and transcriptome analysis~

How to configure the national standard platform easygbs neutral version?

2022年有什么低门槛的理财产品?钱不多

5 points + single gene pan cancer pure Shengxin idea!

Deep learning ~11+ a new perspective on disease-related miRNA research

Opencv learning notes - Discrete Fourier transform

Automatic reconstruction of pod after modifying resource object

Opencv learning notes -- Separation of color channels and multi-channel mixing

基于AM335X开发板 ARM Cortex-A8——Acontis EtherCAT主站开发案例

GTEST from getting started to getting started

Axi low power interface

10 zeros of D