
Watermelon Book: Chapter 5, Neural Networks

2022-07-02 09:11:00 【Qigui】

Personal motto: The most important part of a building is its foundation; if the foundation is unstable, the whole structure shakes.

Learning a technology likewise starts with a solid foundation, and this series aims to firm up the fundamentals of each topic.


Artificial neural network (ANN): an artificial network system, made up of a large number of simple, widely interconnected processing units, that simulates the structure and function of the human brain's nervous system.

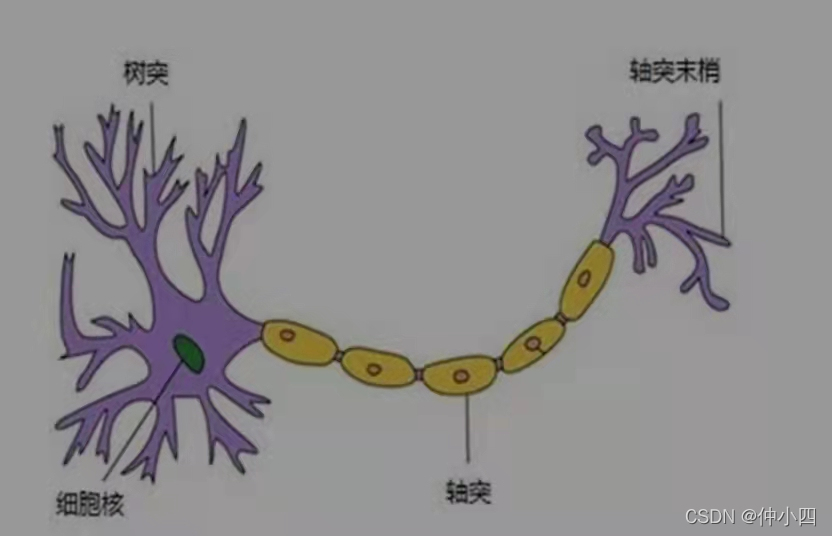

I. Neurons

A neuron usually has multiple dendrites, which mainly receive incoming information, but only one axon. The axon can transmit information to many other neurons: its terminals connect to the dendrites of other neurons to pass on a signal. Each such connection carries a weight, and the weight values are what training has to learn. In other words, each connecting line has its own weight.

A neuron model is a model containing inputs, an output, and a computing function. The inputs can be likened to a neuron's dendrites, the output to its axon, and the computation to the cell nucleus. The neuron model is the most basic component of a neural network.

To repeat, connections are the most important thing in a neuron, and each connection has a weight.

Training a neural network means adjusting the weight values so that the prediction performance of the whole network is as good as possible.

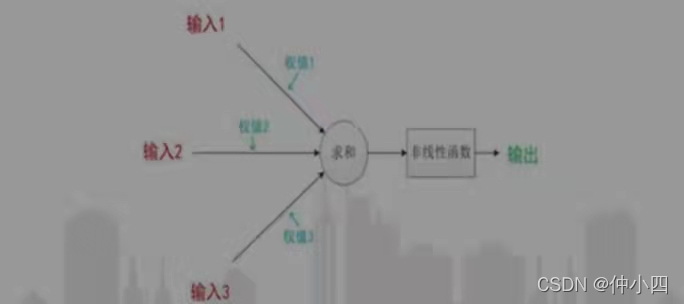

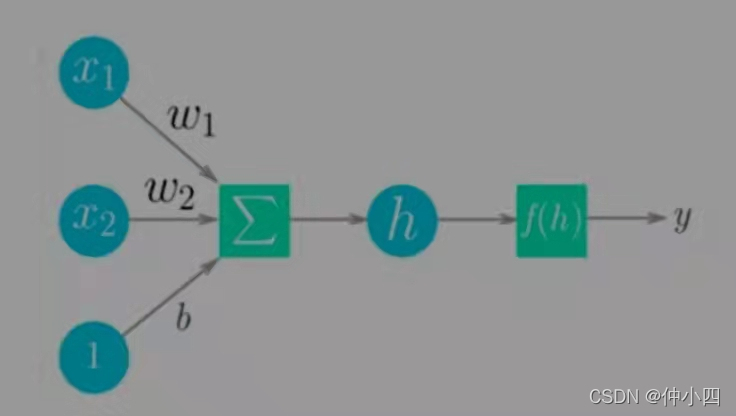

The model still in use today is the M-P neuron model. In this model, a neuron receives input signals from n other neurons. These signals are transmitted over weighted connections, the total input received by the neuron is compared with the neuron's threshold, and the result is passed through an activation function to produce the neuron's output.
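In symbols, the M-P description above is commonly written as follows. The symbol names here ($x_i$, $w_i$, $\theta$, $f$) are an assumption chosen for illustration; they follow the usual textbook convention rather than anything stated in this post.

```latex
y = f\left( \sum_{i=1}^{n} w_i x_i - \theta \right)
```

Here $x_i$ is the input from the $i$-th neuron, $w_i$ is the weight of that connection, $\theta$ is the neuron's threshold, and $f$ is the activation function. Setting $b = -\theta$ gives the equivalent bias form $y = f\left(\sum_{i} w_i x_i + b\right)$.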

Next is the computation performed by a neuron. The input goes through three mathematical steps:

1. Multiply each input by its weight: x1 --> x1 * w1; x2 --> x2 * w2

2. Sum the weighted inputs and add the bias b: (x1 * w1) + (x2 * w2) + b

3. Pass the sum through the activation function to get the output: y = f((x1 * w1) + (x2 * w2) + b)
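The three steps above can be sketched in a few lines of NumPy. This is a minimal illustration, assuming the sigmoid function as f; the function name `neuron_output` and the example inputs, weights, and bias are hypothetical values picked only to show the arithmetic.

```python
import numpy as np


def sigmoid(x):
    """Logistic activation: maps any real input into (0, 1)."""
    return 1 / (1 + np.exp(-x))


def neuron_output(x, w, b, f=sigmoid):
    """Compute y = f(w . x + b) for a single neuron."""
    weighted = x * w              # step 1: multiply each input by its weight
    total = weighted.sum() + b    # step 2: sum the products and add the bias
    return f(total)               # step 3: apply the activation function


# Hypothetical example values, chosen only for illustration.
x = np.array([2.0, 3.0])   # inputs x1, x2
w = np.array([0.0, 1.0])   # weights w1, w2
b = 4.0                    # bias

y = neuron_output(x, w, b)
print(y)  # sigmoid(0*2 + 1*3 + 4) = sigmoid(7), roughly 0.999
```

Passing `f` as a parameter keeps the neuron independent of the particular activation function, so a different one can be swapped in without touching the three steps.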

Activation function :

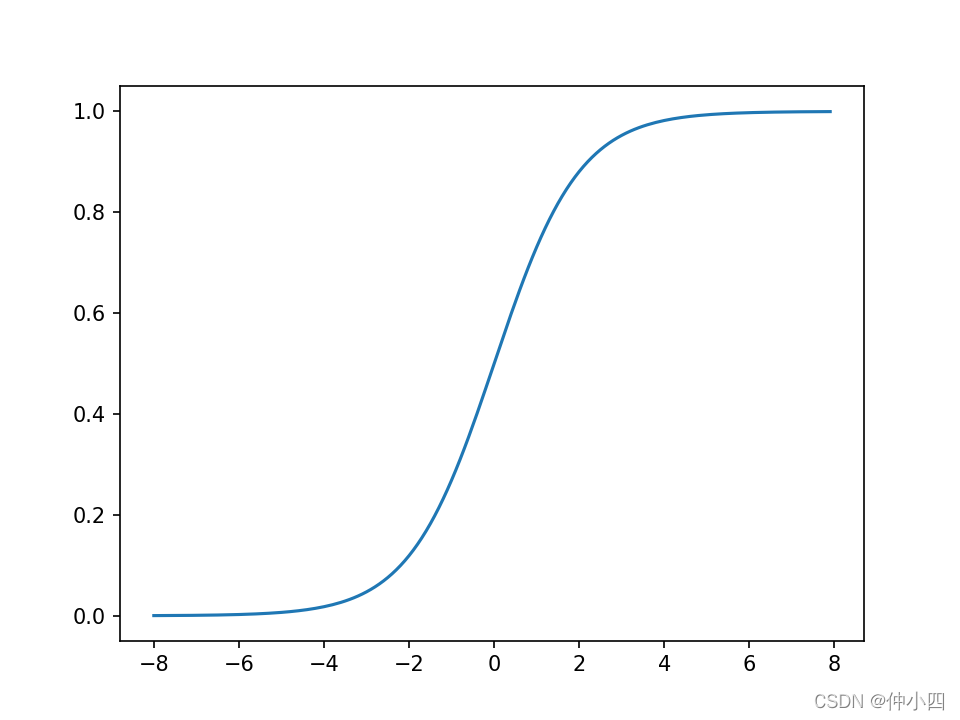

Introducing a nonlinear function into the neural network as the activation function means the output is no longer just a linear combination of the inputs, so the network can approximate almost any function.

The role of the activation function: it converts an unbounded input into an output within a predictable range. The most commonly used activation function is the sigmoid function.

The sigmoid function squeezes input values that may vary over a wide range into the output range (0, 1), so it is sometimes called a "squashing function".

    import matplotlib.pyplot as plt
    import numpy as np


    def sigmoid(x):
        # Return the value of the sigmoid function directly
        return 1 / (1 + np.exp(-x))


    def plot_sigmoid():
        # np.arange parameters: start, stop (exclusive), step
        x = np.arange(-8, 8, 0.1)
        y = sigmoid(x)
        plt.plot(x, y)
        plt.show()


    if __name__ == '__main__':
        plot_sigmoid()

II. Neural networks

For a multilayer neural network, more layers is not necessarily better.

How to build a neural network:

1. Building a neural network means connecting multiple neurons together.

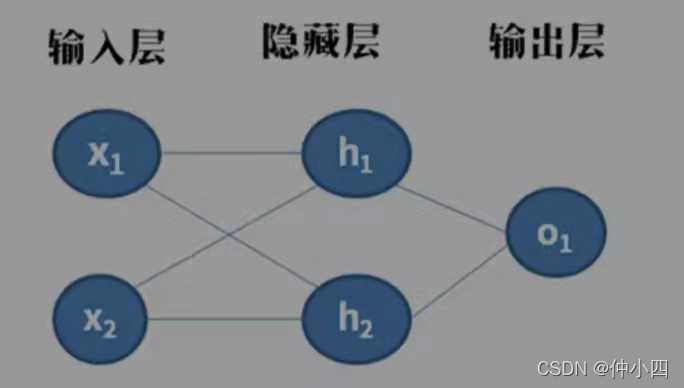

2. This network has 2 inputs, a hidden layer containing 2 neurons (h1 and h2), and an output layer containing 1 neuron (o1).

3. The hidden layer is the part sandwiched between the input layer and the output layer. A neural network can have multiple hidden layers.
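The network described in the list above (2 inputs, hidden neurons h1 and h2, output neuron o1) can be sketched as a forward pass. This is only an illustration: the function name `feedforward`, the sigmoid activation, and all weights and biases below are assumptions, not values given in this post.

```python
import numpy as np


def sigmoid(x):
    """Logistic activation: maps any real input into (0, 1)."""
    return 1 / (1 + np.exp(-x))


def feedforward(x, W_hidden, b_hidden, w_out, b_out):
    """Forward pass: 2 inputs -> hidden layer (h1, h2) -> output o1."""
    h = sigmoid(W_hidden @ x + b_hidden)   # h[0] is h1, h[1] is h2
    o1 = sigmoid(w_out @ h + b_out)        # single output neuron
    return o1


# Hypothetical parameters: every neuron uses weights [0, 1] and bias 0.
W_hidden = np.array([[0.0, 1.0],    # weights into h1
                     [0.0, 1.0]])   # weights into h2
b_hidden = np.zeros(2)
w_out = np.array([0.0, 1.0])        # weights from (h1, h2) into o1
b_out = 0.0

x = np.array([2.0, 3.0])
out = feedforward(x, W_hidden, b_hidden, w_out, b_out)
print(out)
```

Each layer is one matrix-vector product followed by the activation, so adding another hidden layer would just mean repeating the first line of `feedforward` with another weight matrix.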

III. Perceptrons (see "Statistical Learning Methods") and multilayer networks

A perceptron consists of two layers of neurons. The input layer receives external input signals and passes them to the output layer; the output layer is an M-P neuron, also known as a "threshold logic unit".

The perceptron is a linear model for binary classification. A single-layer perceptron can only handle linearly separable problems; it cannot handle nonlinear ones. Only its output-layer neurons apply an activation function, that is, it has just one layer of functional neurons. To solve nonlinear problems, we need multiple layers of functional neurons. The layer of neurons between the input layer and the output layer is called the hidden layer; hidden-layer and output-layer neurons are both functional neurons with activation functions.
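The limitation described above can be seen in a small experiment. The sketch below assumes the standard perceptron learning rule, w <- w + lr * (y - y_hat) * x, with a step activation; the function names, learning rate, and epoch count are hypothetical choices. It trains one perceptron on AND (linearly separable) and one on XOR (not linearly separable).

```python
import numpy as np


def step(z):
    """Threshold activation: 1 if z >= 0, else 0."""
    return np.where(z >= 0, 1, 0)


def train_perceptron(X, y, lr=1.0, epochs=100):
    """Perceptron rule: w <- w + lr * (y - y_hat) * x, same for the bias."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            y_hat = step(w @ xi + b)
            w += lr * (yi - y_hat) * xi   # no change when the prediction is right
            b += lr * (yi - y_hat)
    return w, b


X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y_and = np.array([0, 0, 0, 1])   # linearly separable
y_xor = np.array([0, 1, 1, 0])   # not linearly separable

w_and, b_and = train_perceptron(X, y_and)
pred_and = step(X @ w_and + b_and)

w_xor, b_xor = train_perceptron(X, y_xor)
pred_xor = step(X @ w_xor + b_xor)

print("AND predictions:", pred_and)
print("XOR predictions:", pred_xor)
```

The AND predictions match the targets, while the XOR predictions cannot match all four targets no matter how long training runs, because no single line separates the two XOR classes.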

Each layer of neurons is fully connected to the next layer, with no connections within a layer and no connections that skip layers. Such a network structure is usually called a "multilayer feedforward neural network".

Input-layer neurons only receive input and perform no function processing, while the hidden layer and the output layer contain functional neurons. A network with one hidden layer and one output layer is therefore called a "two-layer network"; any network that contains a hidden layer can be called a "multilayer network".

IV. Error backpropagation algorithm (BP algorithm)

BP is an iterative learning algorithm. In each iteration it updates the parameters using the generalized perceptron learning rule.
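As a rough illustration of such an iterative update, here is a minimal sketch of backpropagation for a network with one hidden layer. Several things are assumptions rather than details from this post: full-batch gradient descent on mean squared error, sigmoid activations in both layers, and the hypothetical names and settings (`train_bp`, `n_hidden`, `lr`, `epochs`, `seed`) as well as the XOR training data.

```python
import numpy as np


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


def mse_loss(y_true, y_pred):
    return ((y_true - y_pred) ** 2).mean()


def train_bp(X, y, n_hidden=2, lr=0.5, epochs=2000, seed=0):
    """Train a single-hidden-layer sigmoid network with gradient descent."""
    rng = np.random.default_rng(seed)
    W1 = rng.normal(size=(X.shape[1], n_hidden))  # input -> hidden weights
    b1 = np.zeros(n_hidden)
    W2 = rng.normal(size=n_hidden)                # hidden -> output weights
    b2 = 0.0
    losses = []
    for _ in range(epochs):
        # Forward pass
        h = sigmoid(X @ W1 + b1)      # shape (n_samples, n_hidden)
        out = sigmoid(h @ W2 + b2)    # shape (n_samples,)
        losses.append(mse_loss(y, out))

        # Backward pass: propagate the error from the output layer back
        d_out = 2 * (out - y) / len(y) * out * (1 - out)  # grad at output pre-activation
        d_h = np.outer(d_out, W2) * h * (1 - h)           # grad at hidden pre-activation

        # Gradient-descent update of every weight and bias
        W2 -= lr * (h.T @ d_out)
        b2 -= lr * d_out.sum()
        W1 -= lr * (X.T @ d_h)
        b1 -= lr * d_h.sum(axis=0)
    return losses


# Hypothetical training data: the XOR problem.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 1, 1, 0], dtype=float)

losses = train_bp(X, y)
print("first loss:", losses[0], "last loss:", losses[-1])
```

The hidden-layer gradient `d_h` is computed before `W2` is updated, so both layers are updated from gradients of the same forward pass. Whether the network fully solves XOR depends on the random initialization; the sketch only shows how the error flows backwards and drives the updates.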

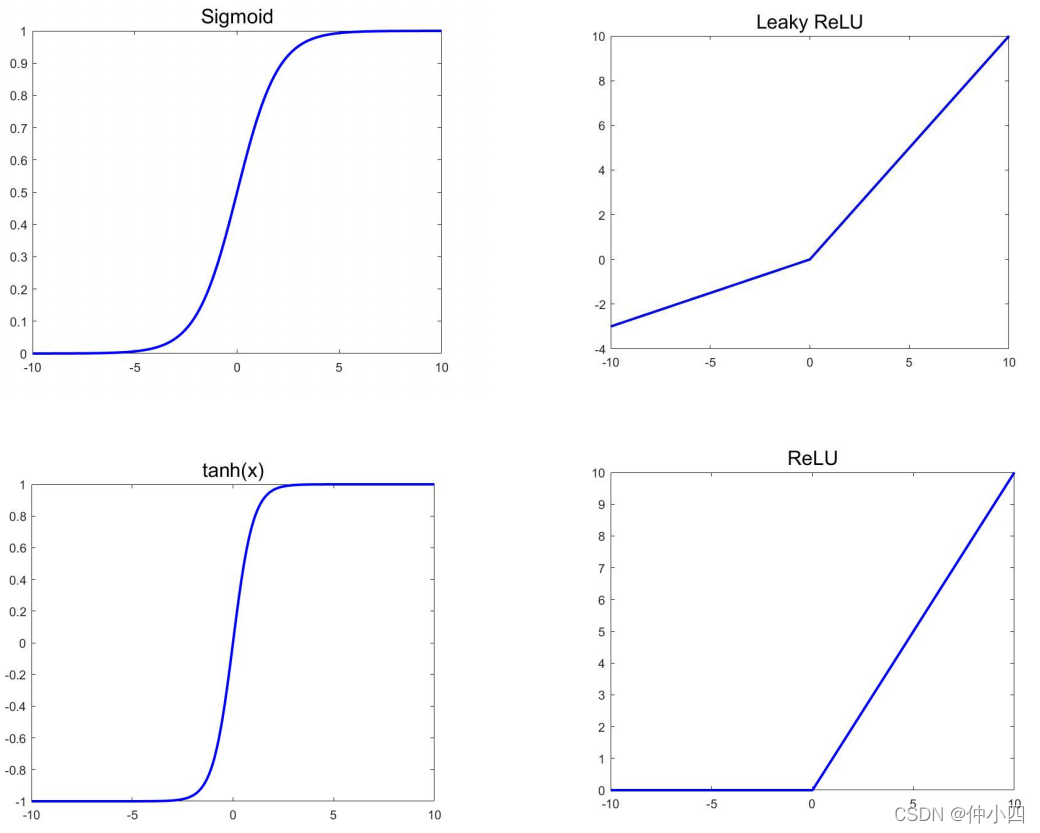

Commonly used activation functions: the sigmoid function, the tanh function, the ReLU function, and the Leaky ReLU function.
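For reference, the four functions listed above can be written in NumPy roughly as follows. The negative-side slope `alpha=0.01` for Leaky ReLU is an assumption (a common default, not a value from this post), and the sample input is hypothetical.

```python
import numpy as np


def sigmoid(x):
    # Squeezes any real input into (0, 1)
    return 1 / (1 + np.exp(-x))


def tanh(x):
    # Squeezes any real input into (-1, 1)
    return np.tanh(x)


def relu(x):
    # Keeps positive inputs, replaces negative inputs with 0
    return np.maximum(0, x)


def leaky_relu(x, alpha=0.01):
    # Like ReLU, but negative inputs are scaled by alpha instead of zeroed
    return np.where(x > 0, x, alpha * x)


x = np.array([-2.0, 0.0, 2.0])
for f in (sigmoid, tanh, relu, leaky_relu):
    print(f.__name__, f(x))
```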

Algorithm flow :

    import numpy as np


    def mse_loss(y_true, y_pre):
        # y_true and y_pre are NumPy arrays of the same length
        return ((y_true - y_pre) ** 2).mean()

Test code:

    y_true = np.array([1, 0, 0, 1])
    y_pre = np.array([0, 0, 0, 0])
    print(mse_loss(y_true, y_pre))  # 0.5

Chapter 6 - Support vector machine (SVM): http://t.csdn.cn/q6o2F

Chapter 4 - Decision tree: http://t.csdn.cn/3Tme3

Chapter 3 - Linear model: http://t.csdn.cn/4S6Y6
