
FlagAI (Feizhi): an open-source AI foundation model project that supports one-click calls to OPT and other models

2022-06-23 19:21:00 [Zhiyuan Community]

1. Background

Pre-trained models such as GPT-3, the OPT series, and WuDao ("Enlightenment") have achieved remarkable results in NLP. However, different code repositories implement them in different styles, and the techniques used to pre-train large models also differ, which creates a technical barrier. To make it fast to load, train, and run inference with different large models, to use the latest and fastest model-parallel techniques, and to make training and using models more convenient, the Beijing Academy of Artificial Intelligence (BAAI, known in Chinese as Zhiyuan) launched FlagAI (Feizhi), an open-source foundation model project that provides features such as one-click model scaling.

2. FlagAI features

FlagAI (Feizhi) is a fast, easy-to-use, and extensible toolkit for AI foundation models. It supports one-click calls to a variety of mainstream foundation models and is adapted to a wide range of downstream tasks in both Chinese and English.

- FlagAI supports the WuDao GLM models with up to 10 billion parameters (see the GLM introduction), as well as BERT, RoBERTa, GPT-2, T5, Meta's OPT models, and models from Hugging Face Transformers.

- FlagAI provides APIs to quickly download these pre-trained models and use them on given Chinese or English text. You can fine-tune them on your own datasets or apply prompt learning (prompt-tuning).

- FlagAI provides rich downstream-task support for foundation models, such as text classification, information extraction, question answering, summarization, and text generation, with good support for both Chinese and English.

- FlagAI is backed by three of the most popular data/model-parallel libraries (PyTorch, DeepSpeed, and Megatron-LM), with seamless integration between them. With FlagAI, you can parallelize your training and testing workflows in fewer than ten lines of code and easily apply various model acceleration techniques.

Open-source repository: https://github.com/BAAI-Open/FlagAI

3. Application examples

One-click Pipeline calls

For example, the GLM pre-trained model can be called directly to perform Chinese question answering and phrase completion.

Only three lines of code are needed to load the GLM-large-ch model and its corresponding tokenizer.

In addition, we also support one-click calls for:

- Title generation

- General-purpose named entity recognition (NER)

- Text continuation

- Semantic similarity matching, and more

Training examples

Besides convenient one-click calls for different tasks, FlagAI also provides a wealth of training examples. Each training example comes with sample data, which makes the training process easier to understand.

For example, when a BERT model is trained for title generation, the training-related files are organized as follows (see the examples directory of the open-source repository for details: https://github.com/BAAI-Open/FlagAI/tree/master/examples):

The data directory holds sample data, with news.tsv illustrating the expected data format; train.py is the training script; and generate.py is the inference script.
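The exact column layout of news.tsv should be checked in the repository itself. As a minimal sketch, assuming each line holds an article and its title separated by a single tab (an assumption this article does not confirm), a training script could load the pairs roughly as follows. `read_pairs` and `read_tsv_file` are hypothetical helper names, not FlagAI APIs.

```python
from typing import Iterable, List, Tuple


def read_pairs(lines: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Split tab-separated lines into parallel lists of sources and targets.

    Hypothetical layout per line: <article> TAB <title>.
    Blank lines and lines without a tab are skipped.
    """
    sources: List[str] = []
    targets: List[str] = []
    for line in lines:
        # Strip both Unix and Windows line endings before splitting.
        fields = line.rstrip("\r\n").split("\t")
        if len(fields) < 2:
            continue  # blank or malformed row
        sources.append(fields[0])
        targets.append(fields[1])
    return sources, targets


def read_tsv_file(path: str) -> Tuple[List[str], List[str]]:
    """Read a UTF-8 TSV file such as data/news.tsv (column order assumed)."""
    with open(path, encoding="utf-8") as f:
        return read_pairs(f)


if __name__ == "__main__":
    sample = ["First article text\tFirst title\n", "\n", "Second article text\tSecond title\n"]
    src, tgt = read_pairs(sample)
    print(src)  # ['First article text', 'Second article text']
    print(tgt)  # ['First title', 'Second title']
```

Under this assumption, switching to your own dataset only requires producing a file in the same two-column layout.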

FlagAI provides a rich set of Chinese and English training examples. You can download the relevant data and train directly, or switch flexibly to your own datasets, with support for the whole workflow from training to inference.

4. Support for multiple data-parallel and model-parallel training modes

FlagAI supports multiple parallel training strategies, including:

- pytorch: conventional single-GPU PyTorch training

- pytorchDDP: conventional PyTorch data parallelism (DistributedDataParallel) for multi-GPU training

- deepspeed: Microsoft's open-source library for efficient deep learning training optimization, which makes better use of GPU memory in multi-GPU training. For details, see: https://github.com/microsoft/DeepSpeed

- deepspeed+mpu: mpu is the model-parallel method from NVIDIA's open-source Megatron-LM. For details, see: https://github.com/NVIDIA/Megatron-LM
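The idea behind the pytorchDDP mode can be illustrated without any GPUs. The sketch below is a toy, pure-Python simulation, not FlagAI or PyTorch code: each simulated worker ("rank") computes the mean-squared-error gradient of a one-variable linear model on its own equal-sized shard of the batch, and averaging those gradients, which is what DistributedDataParallel's all-reduce achieves, reproduces the full-batch gradient. The model, the data, and the function names `mse_grad` and `data_parallel_grad` are illustrative assumptions.

```python
from typing import List, Sequence, Tuple


def mse_grad(w: float, b: float, xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Gradient of mean((w*x + b - y)^2) with respect to (w, b) over one batch."""
    n = len(xs)
    residuals = [w * x + b - y for x, y in zip(xs, ys)]
    dw = sum(2 * r * x for r, x in zip(residuals, xs)) / n
    db = sum(2 * r for r in residuals) / n
    return dw, db


def data_parallel_grad(w: float, b: float, xs: Sequence[float], ys: Sequence[float],
                       world_size: int) -> Tuple[float, float]:
    """Simulate data parallelism: shard the batch, compute per-rank gradients, then average."""
    if len(xs) % world_size != 0:
        raise ValueError("batch size must be divisible by world_size for equal shards")
    shard = len(xs) // world_size
    per_rank: List[Tuple[float, float]] = []
    for rank in range(world_size):
        lo, hi = rank * shard, (rank + 1) * shard
        per_rank.append(mse_grad(w, b, xs[lo:hi], ys[lo:hi]))
    # All-reduce (mean): every rank would end up holding this same averaged gradient.
    dw = sum(g[0] for g in per_rank) / world_size
    db = sum(g[1] for g in per_rank) / world_size
    return dw, db


if __name__ == "__main__":
    xs = [1.0, 2.0, 3.0, 4.0]
    ys = [2.0, 4.1, 5.9, 8.2]
    # Both lines print the same gradient, up to floating-point rounding.
    print(mse_grad(0.5, 0.1, xs, ys))
    print(data_parallel_grad(0.5, 0.1, xs, ys, world_size=2))
```

Equal shard sizes matter in this sketch: with unequal shards, a plain average of per-shard means would weight some samples more heavily than others.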

In FlagAI, you can choose a training method according to the size of the model. FlagAI also integrates many practical techniques, such as fp16 half precision, gradient accumulation, gradient recomputation (checkpointing), CPU offload, and various parallel computing strategies. For BERT-base-scale models, combining these techniques can reduce GPU memory usage by more than 50% and greatly improve training speed; even a single V100 GPU is enough to fine-tune a model with ten billion parameters. Give it a try.
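To see why fp16 and CPU offload matter at the ten-billion-parameter scale, a rough count of model-state memory helps. The sketch below assumes the accounting used in the DeepSpeed ZeRO paper for mixed-precision Adam training (2 bytes per parameter for fp16 weights, 2 for fp16 gradients, and 12 for the fp32 master weights plus the two Adam moments), and models CPU offload as keeping only the fp16 weights on the GPU, in the spirit of ZeRO-Offload. It ignores activations (the target of gradient recomputation), temporary buffers, and fragmentation, and it is not FlagAI's own memory estimator; `model_state_bytes` is a hypothetical helper.

```python
from typing import Tuple

BYTES_FP16 = 2
BYTES_FP32 = 4


def model_state_bytes(num_params: int, cpu_offload: bool = False) -> Tuple[int, int]:
    """Return (gpu_bytes, cpu_bytes) for mixed-precision Adam model states.

    Assumed ZeRO-style accounting: fp16 weights, fp16 gradients, and
    fp32 master weights plus Adam momentum and variance. Activations
    are not included.
    """
    fp16_weights = BYTES_FP16 * num_params
    fp16_grads = BYTES_FP16 * num_params
    optimizer_states = 3 * BYTES_FP32 * num_params  # master weights + 2 Adam moments
    if cpu_offload:
        # Offload-style split: only the fp16 weights stay on the GPU.
        return fp16_weights, fp16_grads + optimizer_states
    return fp16_weights + fp16_grads + optimizer_states, 0


if __name__ == "__main__":
    for offload in (False, True):
        gpu, cpu = model_state_bytes(10_000_000_000, cpu_offload=offload)
        print(f"offload={offload}: GPU {gpu / 1e9:.0f} GB, CPU {cpu / 1e9:.0f} GB")
```

Under these assumptions, a 10-billion-parameter model needs about 160 GB of GPU memory for model states without offload, versus about 20 GB on the GPU (and 140 GB on the CPU) with offload, which suggests why offload is what makes single-GPU fine-tuning at that scale plausible.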

5. Future plans

At present, FlagAI mostly supports common NLP models. In later versions, FlagAI will continue to integrate pre-trained models for computer vision and multimodal tasks, such as ViT, Swin Transformer, and PVT, to make FlagAI more general-purpose. You are welcome to try it and share your feedback, or to join us in various ways to discuss large-model technology together.

Project repository: https://github.com/BAAI-Open/FlagAI


边栏推荐

- 游戏资产复用:更快找到所需游戏资产的新方法

- 20set introduction and API

- 函数的定义和函数的参数

- How to make a list sort according to the order of another list

- 如何避免基因领域“黑天鹅”事件:一场预防性“召回”背后的安全保卫战

- 获取设备信息相关

- Borui data attends Alibaba cloud observable technology summit, and digital experience management drives sustainable development

- 基于微信小程序的婚纱影楼小程序开发笔记

- Docker builds redis cluster

- 【One by One系列】IdentityServer4(八)使用EntityFramework Core对数据进行持久化

猜你喜欢

好用的人事管理软件有哪些?人事管理系统软件排名!

Basic knowledge of penetration test

Helix QAC更新至2022.1版本,将持续提供高标准合规覆盖率

IOT platform construction equipment, with source code

Programmable, protocol independent software switch (read the paper)

19 classic cases of generator functions

从零开发小程序和公众号【第一期】

Database migration tool flyway vs liquibase (I)

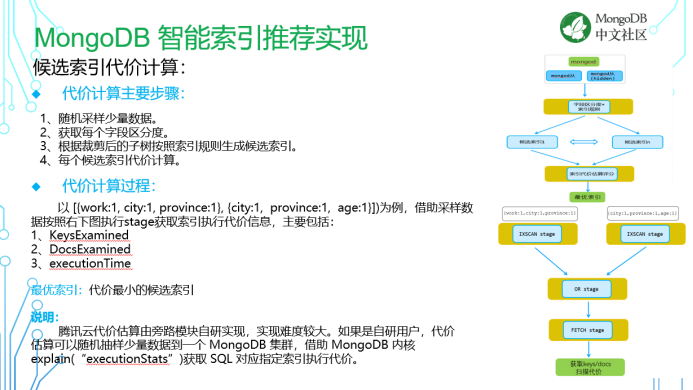

直播分享| 腾讯云 MongoDB 智能诊断及性能优化实践

游戏资产复用:更快找到所需游戏资产的新方法

随机推荐

Advanced network accounting notes (VIII)

Docker搭建redis集群

【NOI2014】15. Difficult to get up syndrome [binary]

Develop small programs and official account from zero [phase I]

Design of hardware switch with programmable full function rate limiter

【NOI2014】15.起床困難綜合症【二進制】

Matrix analysis notes (III-1)

区块哈希竞猜游戏系统开发(dapp)

Learn the basic principles of BLDC in Simulink during a meal

基于微信小程序的婚纱影楼小程序开发笔记

How can enterprises do business monitoring well?

Jericho Forced upgrade [chapter]

Cloud security daily 220623: the red hat database management system has found an arbitrary code execution vulnerability and needs to be upgraded as soon as possible

Dataease template market officially released

Basic knowledge of penetration test

从零开发小程序和公众号【第一期】

Pisces: a programmable, protocol independent software switch (summary)

sed replace \tPrintf to \t//Printf

Netseer: stream event telemetry notes for programmable data plane

准备好迁移上云了?请收下这份迁移步骤清单