当前位置:网站首页>[Bayesian classification 2] naive Bayesian classifier

[Bayesian classification 2] naive Bayesian classifier

2022-06-26 20:38:00 【NoBug ㅤ】

List of articles

1. Review of Bayesian decision theory

1.1 Classification principle

Classification principle . Y = { c 1 , c 2 , . . . , c N } Y = \{c_1, c_2, ..., c_N\} Y={ c1,c2,...,cN} Yes N Species marker , λ i j \lambda_{ij} λij It's marking a reality as c j c_j cj The samples were misclassified as c i c_i ci The loss caused . R ( c i ∣ x ) R(c_i|x) R(ci∣x) Yes sample x x x Classified as c i c_i ci The expected loss incurred ( Also called sample x x x The conditional risk of ). We know the wrong classification marks c i c_i ci, Our aim is to find the expected loss , And find the smallest . Define the formula : R ( c i ∣ x ) = ∑ j = 1 N λ i j P ( c j ∣ x ) . R(c_i|x)=\sum_{j=1}^N\lambda_{ij}P(c_j|x). R(ci∣x)=∑j=1NλijP(cj∣x). for example : λ i j \lambda_{ij} λij They are samples { x 1 , x 2 , . . . , x j } \{x_1, x_2, ..., x_j\} { x1,x2,...,xj} Misclassification as c i c_i ci The loss caused . P ( c j ∣ x ) P(c_j|x) P(cj∣x) They are samples { x 1 , x 2 , . . . , x j } \{x_1, x_2, ..., x_j\} { x1,x2,...,xj} A probability corresponding to the occurrence of an event .

1.2 Bayesian classifier

Bayesian classifier . We use the above principle , Yes N There are three kinds of marks N The category can be divided into N class , { c 1 , c 2 , . . . , c N } \{c_1, c_2,..., c_N\} { c1,c2,...,cN} Collectively referred to as c c c .“ As long as each sample x x x The conditional risk is minimal , Just select the one on each sample that makes the conditional risk R ( c ∣ x ) R(c|x) R(c∣x) The smallest category mark , This is the Bayesian criterion ”. Bias optimal classifier : h ∗ ( x ) = a r g m i n c ∈ Y R ( c ∣ x ) h^*(x)=arg\ min_{c\in Y}R(c|x) h∗(x)=arg minc∈YR(c∣x).

1.3 P(c|x)

h ∗ ( x ) = a r g m a x c ∈ Y P ( c ∣ x ) h^*(x)=arg\ max_{c\in Y}P(c|x) h∗(x)=arg maxc∈YP(c∣x). prove : Miscalculation of loss λ i j = { 0 , i = j 1 , Its He \lambda_{ij} = \left\{ \begin{array}{lr} 0 & ,i=j\\[6pt] 1 & , other \end{array} \right. λij={ 01,i=j, Its He , R ( c i ∣ x ) = ∑ j = 1 N λ i j P ( c j ∣ x ) R(c_i|x)=\sum_{j=1}^N\lambda_{ij}P(c_j|x) R(ci∣x)=∑j=1NλijP(cj∣x), R ( c i ∣ x ) = ∑ j = 1 N P ( c j ∣ x ) And i ≠ j R(c_i|x)=\sum_{j=1}^NP(c_j|x) And i\neq j R(ci∣x)=∑j=1NP(cj∣x) And i=j, ∑ j = 1 N P ( c j ∣ x ) = 1 \sum_{j=1}^NP(c_j|x)=1 ∑j=1NP(cj∣x)=1,… …, In the end R ( c ∣ x ) = 1 − P ( c ∣ x ) R(c_|x)=1-P(c|x) R(c∣x)=1−P(c∣x).

1.4 Calculation formula

Calculation formula . P ( c ∣ x ) = P ( c ) P ( x ∣ c ) P ( x ) P(c|x)=\frac{P(c)P(x|c)}{P(x)} P(c∣x)=P(x)P(c)P(x∣c), P ( c ) P(c) P(c) Is a class of prior probabilities , P ( x ∣ c ) P(x|c) P(x∣c) Is the sample x x x Relative to class tags c c c The class conditional probability of ( Also known as :“ likelihood ”), P ( x ) P(x) P(x) The tag is the same for all classes . transcendental P ( c ) P(c) P(c) Is the proportion of various samples in the sample space . likelihood P ( x ∣ c ) P(x|c) P(x∣c) Calculate by maximum likelihood estimation .

1.5 Maximum likelihood estimation

Maximum likelihood estimation . We have to solve P ( x ∣ c ) P(x|c) P(x∣c), D c D_c Dc Represents a training set D pass the civil examinations c Set of class samples , These samples are independent and identically distributed . There is a likelihood formula : P ( D c ∣ c ) = ∏ x ∈ D c P ( x ∣ c ) P(D_c|c)=\prod_{x\in D_c}P(x|c) P(Dc∣c)=∏x∈DcP(x∣c), Consecutive operation is easy to cause underflow , Generally, logarithm is used for processing . l o g P ( D c ∣ c ) logP(D_c|c) logP(Dc∣c). What is required is its maximum value .

2. Naive Bayesian classifier learning notes

2.1 introduction

h ∗ ( x ) = a r g m a x c ∈ Y P ( c ∣ x ) h^*(x)=arg\ max_{c\in Y}P(c|x) h∗(x)=arg maxc∈YP(c∣x), P ( c ∣ x ) = P ( c ) P ( x ∣ c ) P ( x ) P(c|x)=\frac{P(c)P(x|c)}{P(x)} P(c∣x)=P(x)P(c)P(x∣c), The main difficulty is to find the class conditional probability P ( x ∣ c ) P(x|c) P(x∣c), It is the joint probability of all attributes ( The calculation will encounter the problem of combined explosion ; Data will encounter missing values ). Naive Bayes classifier :“ For known categories , Suppose all attributes are independent of each other .( Assumption of independence of attribute condition )”

2.2 Knowledge cards

1. Naive Bayes classifier :Naive Bayes classifier

2. also called :NB Algorithm

2.3 Naive Bayes classifier

- Calculate the class conditional probability P ( x ∣ c ) P(x|c) P(x∣c)

d For the number of attributes

x i x_i xi by x x x In the i i i Values on attributes

P ( x ∣ c ) = ∏ i = 1 d P ( x i ∣ c ) P(x|c)=\prod_{i=1}^dP(x_i|c) P(x∣c)=i=1∏dP(xi∣c)

- Expression of naive Bayesian classifier

In the knowledge review , For all categories ,P(x) identical

h n b ( x ) = a r g m a x c ∈ Y P ( c ) ∏ i = 1 d P ( x i ∣ c ) h_{nb}(x)=arg\ max_{c\in Y}\ P(c)\prod_{i=1}^dP(x_i|c) hnb(x)=arg maxc∈Y P(c)i=1∏dP(xi∣c)

- Calculate the prior probability P ( c ) P(c) P(c)

P ( c ) = ∣ D c ∣ ∣ D ∣ P(c)=\frac{|D_c|}{|D|} P(c)=∣D∣∣Dc∣

- Calculate the class conditional probability P ( x i ∣ c ) P(x_i|c) P(xi∣c)

( discrete )

P ( x i ∣ c ) = ∣ D c , x i ∣ ∣ D c ∣ P(x_i|c)=\frac{|D_{c, x_i}|}{|D_c|} P(xi∣c)=∣Dc∣∣Dc,xi∣

( Continuity )

Yes On even To continue Belong to sex can Examination Consideration General rate The secret degree Letter Count \ \ \\ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ {} For continuous attributes, the probability density function can be considered Yes On even To continue Belong to sex can Examination Consideration General rate The secret degree Letter Count

two spot 、 A few What 、 Two term 、 finger Count 、 Berth pine 、 just state branch cloth etc. \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ \ {} At two o 'clock 、 The geometric 、 Binomial 、 Index 、 Poisson 、 Normal distribution, etc two spot 、 A few What 、 Two term 、 finger Count 、 Berth pine 、 just state branch cloth etc.

- Example

【 Data sets D】

【 Prior probability P ( c ) P(c) P(c)】

P ( good melon = yes ) = 8 17 ≈ 0.471 P( Good melon = yes )=\frac{8}{17}\approx0.471 P( good melon = yes )=178≈0.471

P ( good melon = no ) = 9 17 ≈ 0.529 P( Good melon = no )=\frac{9}{17}\approx0.529 P( good melon = no )=179≈0.529

【 Class conditional probability P ( x i ∣ c ) P(x_i|c) P(xi∣c)】

【 Naive Bayes classifier 】

because , 0.038 0.038 0.038 > 6.80 × 1 0 − 5 6.80\times10^{-5} 6.80×10−5

Park plain shellfish leaf Si branch class device take measuring try sample Ben " measuring 1 " sentence other by " good melon " Naive Bayes classifier will test samples " measuring 1" Judge as " Good melon " Park plain shellfish leaf Si branch class device take measuring try sample Ben " measuring 1" sentence other by " good melon "

2.4 Laplacian smoothing

Question elicitation

In practice, we will definitely encounter , such as : The attribute is “ Knock sound = Crisp ” There are 8 Samples , But this 8 The categories of samples are “ Good melon ” No . So there is : P clear brittle ∣ yes = P ( knock The sound = clear brittle ∣ good melon = yes ) = 0 8 = 0 P_{ Crisp | yes }=P( Knock sound = Crisp | Good melon = yes )=\frac{0}{8}=0 P clear brittle ∣ yes =P( knock The sound = clear brittle ∣ good melon = yes )=80=0, When it is treated as a tandem , Whatever other attributes of the sample are , Even on other attributes, it looks good , The result of classification will be “ Good melon = no ”, It's obviously not reasonable .Laplacian smoothing

Molecule plus one , Add to the denominator K,K Represents the number of categories .Example

3. Naive Bayesian classifier extension

3.1 Data processing

There are many ways to use naive Bayes classifier in real tasks .

for example , If the task requires high prediction speed , For a given training set , All probability estimates involved in naive Bayesian classifier can be calculated and stored in advance , In this way, the prediction can be made only by " Look up the table " Then we can judge ;

If task data changes frequently , You can use “ Lazy study ” (lazy learning) The way , No training at first , When the prediction request is received, the probability estimation is performed according to the current data set ;

If the data keeps growing , On the basis of the existing valuation , Incremental learning can be achieved only by counting and modifying the probability estimates involved in the attribute values of new samples .

3.2 Collect other information

【 The core of naive Bayesian classifier 】

h ∗ ( x ) = a r g m a x c ∈ Y P ( c ∣ x ) , P ( c ∣ x ) = P ( c ) P ( x ∣ c ) P ( x ) h^*(x)=arg\ max_{c\in Y}P(c|x),P(c|x)=\frac{P(c)P(x|c)}{P(x)} h∗(x)=arg maxc∈YP(c∣x),P(c∣x)=P(x)P(c)P(x∣c)

P ( class other ∣ , sign ) = P ( class other ) P ( , sign ∣ class other ) P ( , sign ) P( Category | features )=\frac{P( Category )P( features | Category )}{P( features )} P( class other ∣ , sign )=P( , sign )P( class other )P( , sign ∣ class other )

【 Advantages and disadvantages of naive Bayesian classifier 】

- " advantage :"

1. The algorithm logic is simple , Easy to implement

2. The cost of time and space is small in the process of classification

- " shortcoming :"

1. Because naive Bayesian models assume that attributes are independent of each other , This assumption is often not true in practical application .

2. When the number of attributes is large or the correlation between attributes is large , The classification effect is not good .

- " solve :"

1. about nb Disadvantages of the algorithm , There are algorithms like semi naive Bayes that are moderately improved by considering some correlations .

【 Bayesian network 】

边栏推荐

- 30. 串联所有单词的子串

- 0 basic C language (1)

- Stringutils judge whether the string is empty

- mysql存储过程

- Super VRT

- find_ path、find_ Library memo

- 【贝叶斯分类3】半朴素贝叶斯分类器

- Tiktok practice ~ sharing module ~ short video download (save to photo album)

- Swagger: how to generate beautiful static document description pages

- Separate save file for debug symbols after strip

猜你喜欢

Detailed explanation of stored procedures in MySQL

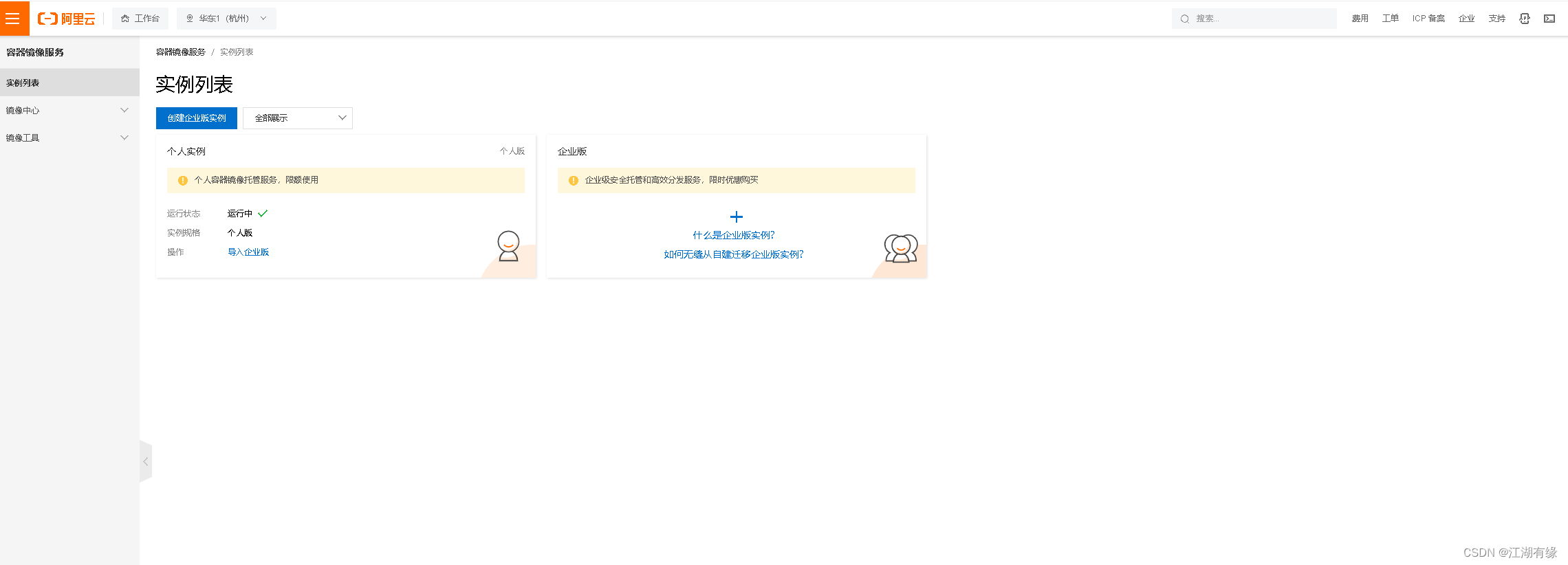

阿里云个人镜像仓库日常基本使用

Idea error: process terminated

抖音实战~分享模块~短视频下载(保存到相册)

QT两种方法实现定时器

Flutter TextField详解

Introduction to single chip microcomputer one-on-one learning strategy, independent development program immediately after reading

超分之VRT

好物推荐:移动端开发安全工具

Guomingyu: Apple's AR / MR head mounted display is the most complicated product in its history and will be released in January 2023

随机推荐

0基础学c语言(2)

QT两种方法实现定时器

慕课11、微服务的用户认证与授权

[recommended collection] these 8 common missing value filling skills must be mastered

C primer plus learning notes - 3. Character IO (input / output)

西瓜书重温(七): 贝叶斯分类器(手推+代码demo)

460million zongzi were sold in half a year. How big is the "imagination space" of time-honored brands?

C# 练习。类列表加记录,显示记录和清空记录

Boot指标监测

BOM and DOM operations

Fixed length memory pool

回溯思路详解

Six necessary threat tracking tools for threat hunters

mysql的充值问题

0基础c语言(0)

windows系統下怎麼安裝mysql8.0數據庫?(圖文教程)

Muke 8. Service fault tolerance Sentinel

On the origin of the dispute between the tradition and the future of database -- AWS series column

What are the specific steps for opening a stock account? Is it safe to open an account online?

c语言99乘法表