
One line of code speeds up sklearn by up to thousands of times

2022-06-21 10:16:00 [AI Technology Base Camp]

Author | Fevrey

Source | Python Big Data Analysis


1. Introduction

scikit-learn is a classic machine learning framework that has been developed for more than ten years, but its computing speed has long been criticized by users. Anyone familiar with scikit-learn knows that its built-in acceleration, which relies on the joblib library, has limited effect and cannot make full use of the available computing power.
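For context, the joblib-based parallelism mentioned here is mostly exposed through the `n_jobs` parameter of certain estimators, such as `RandomForestClassifier`, which can build its trees in parallel. The sketch below simply times the same forest with `n_jobs=1` and `n_jobs=-1` (all cores). The dataset size and `n_estimators` value are arbitrary assumptions, and the actual gain depends on how many cores your machine has.

```python
import time

from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic classification data; the size is an arbitrary choice for illustration
X, y = make_classification(n_samples=5_000, n_features=20, random_state=0)

timings = {}
for n_jobs in (1, -1):  # 1 = single worker, -1 = all available cores via joblib
    clf = RandomForestClassifier(n_estimators=50, n_jobs=n_jobs, random_state=0)
    start = time.perf_counter()
    clf.fit(X, y)
    timings[n_jobs] = time.perf_counter() - start

for n_jobs, seconds in timings.items():
    print(f"n_jobs={n_jobs}: {seconds:.2f} s")
```

This kind of parallelism only distributes independent pieces of work (here, trees) across cores; it does not make the underlying numeric routines themselves faster. sklearnex takes a different route and substitutes implementations built on Intel's oneDAL library.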

The tool I want to introduce today can speed up scikit-learn by tens or even thousands of times without changing your existing code. Let's go!

2. Speeding up scikit-learn with sklearnex

To speed up computation, all we need to do is additionally install the extension library sklearnex, which can greatly improve performance on machines with Intel processors.
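Before or after installing, it can help to confirm what you are working with. The sketch below reports the Python version, the CPU architecture, and whether `scikit-learn` and `scikit-learn-intelex` are installed, using only the standard library (`platform` and `importlib.metadata`, available from Python 3.8). Treating "x86-64" as the precondition is an assumption of this sketch, a rough heuristic rather than an official compatibility check, and the helper name `installed_version` is hypothetical.

```python
import platform
from importlib.metadata import PackageNotFoundError, version


def installed_version(dist_name):
    """Return the installed version string of a distribution, or None if absent."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


# Rough heuristic: sklearnex targets x86-64 CPUs (reported as AMD64 on Windows)
is_x86_64 = platform.machine().lower() in {"x86_64", "amd64"}

report = {
    "python": platform.python_version(),
    "machine": platform.machine(),
    "x86_64": is_x86_64,
    "scikit-learn": installed_version("scikit-learn"),
    "scikit-learn-intelex": installed_version("scikit-learn-intelex"),
}
for key, value in report.items():
    print(f"{key}: {value}")
```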

To try this new tool cautiously, we can experiment in a separate conda virtual environment. All the commands are listed below; we also install jupyterlab to use as our IDE:

conda create -n scikit-learn-intelex-demo python=3.8 -c https://mirrors.sjtug.sjtu.edu.cn/anaconda/pkgs/main -y

conda activate scikit-learn-intelex-demo

pip install scikit-learn scikit-learn-intelex jupyterlab -i https://pypi.douban.com/simple/

Once the environment is ready, we write test code in JupyterLab to see how much speedup we get. Usage is simple: before importing any scikit-learn modules in your code, just run the following:

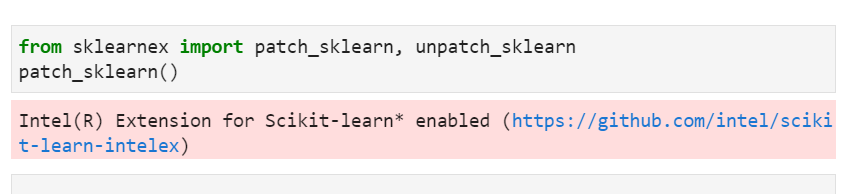

from sklearnex import patch_sklearn, unpatch_sklearn

# Run this before importing any scikit-learn modules
patch_sklearn()

Once acceleration is enabled successfully, sklearnex prints a short confirmation message.
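If you want to confirm that the patch actually took effect, one option (an assumption of this sketch, not an official sklearnex check) is to look at which module the `LinearRegression` class comes from before and after patching; the helper name `linear_regression_module` is hypothetical. Importing inside the helper also shows why scikit-learn modules have to be re-imported: a name bound before `patch_sklearn()` or `unpatch_sklearn()` keeps pointing at the old class.

```python
def linear_regression_module():
    """Return the module defining the LinearRegression that sklearn currently exposes."""
    # Importing here picks up whatever class sklearn.linear_model holds right now,
    # i.e. after any patch_sklearn()/unpatch_sklearn() call.
    from sklearn.linear_model import LinearRegression
    return LinearRegression.__module__


stock = linear_regression_module()
patched = restored = None
try:
    from sklearnex import patch_sklearn, unpatch_sklearn
except ImportError:
    print("sklearnex is not installed; only stock scikit-learn is available")
else:
    patch_sklearn()
    patched = linear_regression_module()  # expected to live outside the sklearn package
    unpatch_sklearn()
    restored = linear_regression_module()  # expected to match the stock module again

print("stock:   ", stock)
print("patched: ", patched)
print("restored:", restored)
```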

After that, just run your existing scikit-learn code as usual. I ran a simple test on my trusty old laptop, the one I normally use for everyday coding and open-source development.

Take linear regression as an example: on a sample dataset with millions of samples and hundreds of features, training took only 0.21 seconds with acceleration enabled. After forcibly disabling acceleration with unpatch_sklearn() (note that the relevant scikit-learn modules must then be re-imported), training time rose to 11.28 seconds. In other words, sklearnex gave us a speedup of more than 50 times!
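A comparison like this can be sketched as follows. This is not the author's benchmark script: the data is random, its size (100,000 samples by 50 features) is an assumption chosen to run quickly, and the helper name `fit_seconds` is hypothetical. Each mode is timed once, so treat the numbers as rough. The estimator is imported inside the helper so that each call picks up whichever `LinearRegression` is active after `patch_sklearn()` or `unpatch_sklearn()`.

```python
import time

import numpy as np


def fit_seconds(X, y):
    """Time one LinearRegression fit using the class sklearn currently exposes."""
    # Import here rather than at the top: after patch_sklearn()/unpatch_sklearn()
    # a previously imported name would still point at the old class.
    from sklearn.linear_model import LinearRegression
    start = time.perf_counter()
    LinearRegression().fit(X, y)
    return time.perf_counter() - start


# Synthetic regression data, much smaller than the article's test set
rng = np.random.default_rng(0)
X = rng.standard_normal((100_000, 50))
y = X @ rng.standard_normal(50) + 0.1 * rng.standard_normal(100_000)

results = {}
try:
    from sklearnex import patch_sklearn, unpatch_sklearn
except ImportError:
    pass  # sklearnex unavailable: only stock scikit-learn is timed
else:
    patch_sklearn()
    results["patched"] = fit_seconds(X, y)
    unpatch_sklearn()
results["stock"] = fit_seconds(X, y)

for name, seconds in results.items():
    print(f"{name}: {seconds:.3f} s")
if "patched" in results:
    print(f"speedup: {results['stock'] / results['patched']:.1f}x")
```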

According to the official documentation, more powerful CPUs see larger performance gains. The figure below shows Intel's official results for a series of algorithms tested on an Intel Xeon Platinum 8275CL processor. sklearnex speeds up not only training but also model inference and prediction, and in some scenarios the speedup reaches thousands of times.
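To look at the inference side yourself, you can time `fit` and `predict` separately. The sketch below uses `KNeighborsClassifier`, chosen here (an assumption, not taken from the official benchmark) because most of its cost sits in `predict` rather than `fit`. The random data, its sizes, and the helper name `time_knn` are all hypothetical choices for a quick run, and results from a single run are only indicative.

```python
import time

import numpy as np


def time_knn(X_train, y_train, X_test):
    """Return (fit_seconds, predict_seconds) for the currently exposed KNeighborsClassifier."""
    # Re-import so the patched or stock class, whichever is active now, is used
    from sklearn.neighbors import KNeighborsClassifier
    model = KNeighborsClassifier(n_neighbors=5)
    t0 = time.perf_counter()
    model.fit(X_train, y_train)
    t1 = time.perf_counter()
    model.predict(X_test)
    t2 = time.perf_counter()
    return t1 - t0, t2 - t1


# Random synthetic data; sizes are arbitrary assumptions
rng = np.random.default_rng(0)
X_train = rng.standard_normal((20_000, 20))
y_train = rng.integers(0, 2, size=20_000)
X_test = rng.standard_normal((2_000, 20))

timings = {}
try:
    from sklearnex import patch_sklearn, unpatch_sklearn
except ImportError:
    pass  # sklearnex unavailable: only the stock timings are collected
else:
    patch_sklearn()
    timings["patched"] = time_knn(X_train, y_train, X_test)
    unpatch_sklearn()
timings["stock"] = time_knn(X_train, y_train, X_test)

for name, (fit_s, predict_s) in timings.items():
    print(f"{name}: fit {fit_s:.3f} s, predict {predict_s:.3f} s")
```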

Intel also provides some example notebooks (https://github.com/intel/scikit-learn-intelex/tree/master/examples/notebooks) covering common algorithms such as K-means, DBSCAN, random forests, logistic regression, and ridge regression. Interested readers can download them and work through them on their own.
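As a quick smoke test before opening those notebooks, the sketch below patches scikit-learn once and then fits the algorithms listed above through the ordinary scikit-learn API. It is not taken from the official notebooks: the synthetic datasets, their sizes, and hyperparameters such as `eps=3.0` for DBSCAN are assumptions picked so the script finishes quickly, and it falls back to stock scikit-learn if sklearnex is not installed.

```python
try:
    from sklearnex import patch_sklearn
    patch_sklearn()  # must run before the scikit-learn estimator imports below
except ImportError:
    pass  # fall back to stock scikit-learn

from sklearn.cluster import DBSCAN, KMeans
from sklearn.datasets import make_blobs, make_classification, make_regression
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression, Ridge

# Small synthetic datasets, one per task type
X_blobs, _ = make_blobs(n_samples=5_000, centers=4, n_features=10, random_state=0)
X_clf, y_clf = make_classification(n_samples=5_000, n_features=20, random_state=0)
X_reg, y_reg = make_regression(n_samples=5_000, n_features=20, noise=0.1, random_state=0)

# Same scikit-learn calls as usual; patching changes only the implementation underneath
kmeans = KMeans(n_clusters=4, n_init=10, random_state=0).fit(X_blobs)
dbscan = DBSCAN(eps=3.0, min_samples=10).fit(X_blobs)
forest = RandomForestClassifier(n_estimators=50, random_state=0).fit(X_clf, y_clf)
logreg = LogisticRegression(max_iter=1000).fit(X_clf, y_clf)
ridge = Ridge(alpha=1.0).fit(X_reg, y_reg)

print("KMeans inertia:", kmeans.inertia_)
print("DBSCAN clusters (excluding noise):", len(set(dbscan.labels_) - {-1}))
print("RandomForest train accuracy:", forest.score(X_clf, y_clf))
print("LogisticRegression train accuracy:", logreg.score(X_clf, y_clf))
print("Ridge train R^2:", ridge.score(X_reg, y_reg))
```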

That's all for this article. Feel free to discuss it with me in the comments section~

Looking back

Google AI Be exposed to self Awakening ?

13 individual python Necessary knowledge , Recommended collection !

Interesting Python Visualization techniques !

Low code out of half a lifetime , Come back or " cancer "!

Share

Point collection

A little bit of praise

Click to see 边栏推荐

- WCF RestFul+JWT身份验证

- 聊聊大火的多模态项目

- 123. deep and shallow copy of JS implementation -- code text explanation

- Unity中的地平面简介

- 简易的安卓天气app(三)——城市管理、数据库操作

- leetcode:715. Range 模块【无脑segmentTree】

- Mid 2022 Summary - step by step, step by step

- 触摸按键控制器TTP229-BSF使用心得[原创cnblogs.com/helesheng]

- [practice] stm32mp157 development tutorial FreeRTOS system 3: FreeRTOS counting semaphore

- Telecommuting Market Research Report

猜你喜欢

Where is the cow in Shannon's information theory?

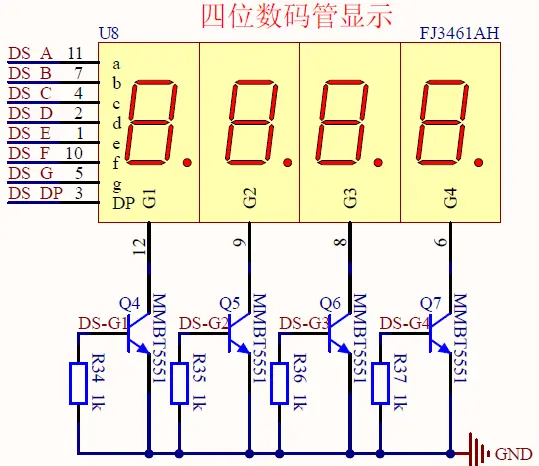

Stm32mp1 cortex M4 development part 10: expansion board nixie tube control

111. solve the problem of prohibiting scripts from running on vs code. For more information, see error reporting

燎原之势 阿里云数据库“百城聚力”助中小企业数智化转型

Talk about the multimodal project of fire

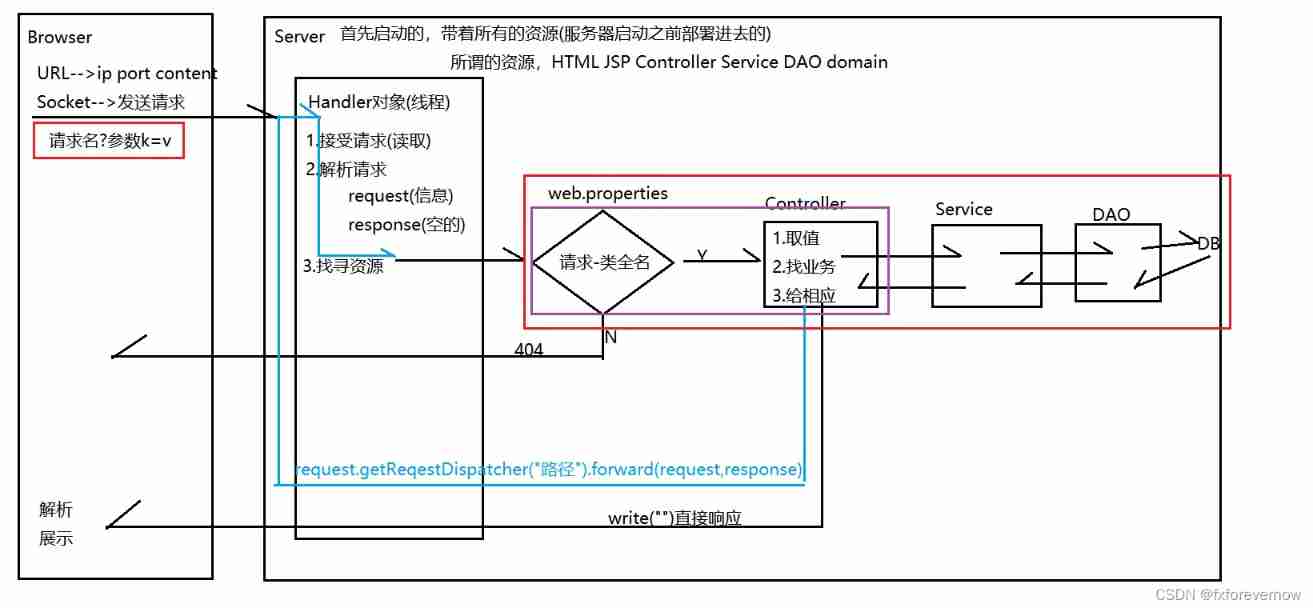

Request and response must know

108. detailed use of Redux (case)

Introduction and template of segment tree Foundation (I)

js正则-梳理

Stm32mp1 cortex M4 development part 12: expansion board vibration motor control

随机推荐

TC软件详细设计文档(手机群控)

Optional classes, convenience functions, creating options, optional object operations, and optional streams

程序员新人周一优化一行代码,周三被劝退?

110. JS event loop and setimmediate, process.nexttick

Stream programming: stream support, creation, intermediate operation and terminal operation

西电AI专业排名超清北,南大蝉联全国第一 | 2022软科中国大学专业排名

Unity中的地平面简介

[actual combat] STM32 FreeRTOS migration series tutorial 7: FreeRTOS event flag group

信号功率谱估计

The memory allocation of the program, the storage of local const and global const in the system memory, and the perception of pointers~

Talk about the multimodal project of fire

ENGRAIL THERAPEUTICS公布ENX-101临床1b研究正面结果

开课报名|「Takin开源特训营」第一期来啦!手把手教你搞定全链路压测!

WCF restful+jwt authentication

聊聊大火的多模态项目

JWT与Session的比较

AI越进化越跟人类大脑像!Meta找到了机器的“前额叶皮层”,AI学者和神经科学家都惊了...

stm32mp1 Cortex M4开发篇8:扩展板LED灯控制实验

WCF RestFul+JWT身份验证

Stm32mp1 cortex M4 Development Part 9: expansion board air temperature and humidity sensor control