当前位置:网站首页>Cat installation and use

Cat installation and use

2022-07-26 09:16:00 【Or turn around】

I introduced it in the previous blog apm System selection and elastic APM, This article will continue cat.

CAT(Central Application Tracking) The original public comment is based on eBay Of CAL Improved distributed service link monitoring platform .CAT since 2004 Open source since , In Ctrip 、 Lu Jin Su 、 Ping An Bank 、 A lot of spelling 、OPPO、 Cooperated network 、 Find steel mesh, etc 100 Several companies 、 Application in the production environment of the enterprise .

With 2016 Take a set of data in :

US group review ,2016 year ,3000+ Application service ,7000+ The server ,100+TB/ God , Single machine peak QPS 16w

Ctrip ,2016 year ,4500+ Application service ,10000+ The server ,140TB/ God , Single machine peak QPS 10w.

at present ,CAT( As the basic component of the server project , Provides Java, C/C++, Node.js, Python, Go Wait for multilingual clients , We have commented on the infrastructure middleware framework in meituan (MVC frame ,RPC frame , Database framework , Caching framework, etc , Message queue , Configuration system, etc ) Deep integration , Provide rich performance indicators for each business line of meituan reviews 、 health 、 Real time alarm, etc .

In front of blog Distributed tracking system and Elastic APM Installation and use , This article will continue Cat Installation and use .

Server deployment

Cat The deployment document for is in github It's written in detail , Portal :https://github.com/dianping/cat/wiki/readme_server.

The following will be explained according to the actual deployment process of bloggers .

Environmental Science :tomcat8,mysql,jdk8,cat Source code (https://github.com/dianping/cat).

(1) establish cat Initial Directory

Cat The initial directory cannot be changed ( The official deployment document says it can be defined CAT_HOME Create a custom directory for , But in faq The document says that it does not support modifying the directory , If you want to modify , You need to read the source code yourself ),linux Create directories and modify permissions in the environment :

mkdir /data

chmod -R 777 /data

mkdir -p /data/appdatas/cat

If it is windows Environmental Science , for example cat Service running on e Discoid tomcat in , You need to e:/data/appdatas/cat and e:/data/applogs/cat Read and write permission .

(2) Deploy tomcat

stay tomcat Of bin New under the directory setenv.sh Script , Add environment variables :

export CAT_HOME=/data/appdatas/cat/

CATALINA_OPTS="$CATALINA_OPTS -server -DCAT_HOME=$CAT_HOME -Djava.awt.headless=true -Xms25G -Xmx25G -XX:PermSize=256m -XX:MaxPermSize=256m -XX:NewSize=10144m -XX:MaxNewSize=10144m -XX:SurvivorRatio=10 -XX:+UseParNewGC -XX:ParallelGCThreads=4 -XX:MaxTenuringThreshold=13 -XX:+UseConcMarkSweepGC -XX:+DisableExplicitGC -XX:+UseCMSInitiatingOccupancyOnly -XX:+ScavengeBeforeFullGC -XX:+UseCMSCompactAtFullCollection -XX:+CMSParallelRemarkEnabled -XX:CMSFullGCsBeforeCompaction=9 -XX:CMSInitiatingOccupancyFraction=60 -XX:+CMSClassUnloadingEnabled -XX:SoftRefLRUPolicyMSPerMB=0 -XX:-ReduceInitialCardMarks -XX:+CMSPermGenSweepingEnabled -XX:CMSInitiatingPermOccupancyFraction=70 -XX:+ExplicitGCInvokesConcurrent -Djava.nio.channels.spi.SelectorProvider=sun.nio.ch.EPollSelectorProvider -Djava.util.logging.manager=org.apache.juli.ClassLoaderLogManager -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+PrintGCApplicationConcurrentTime -XX:+PrintHeapAtGC -Xloggc:/data/applogs/heap_trace.txt -XX:-HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/data/applogs/HeapDumpOnOutOfMemoryError -Djava.util.Arrays.useLegacyMergeSort=true"

Tomcat The environment variables of the script will be automatically loaded when starting , Please note that : Please adjust the heap size and Cenozoic size by yourself , The heap size in the development environment is 2G that will do .

modify tomcat Of conf In the catalog server.xml, Prevent Chinese miscoding :

<Connector port="8080" protocol="HTTP/1.1"

URIEncoding="utf-8" connectionTimeout="20000"

redirectPort="8443" /> <!-- increase URIEncoding="utf-8" -->

(3) Configure what the client points to cat server Address

stay /data/appdatas/cat Create under directory client.xml file , hypothesis cat The server address is 10.1.1.1,10.1.1.2,10.1.1.3, The configuration is :

<?xml version="1.0" encoding="utf-8"?>

<config mode="client">

<servers>

<server ip="10.1.1.1" port="2280" http-port="8080"/>

<server ip="10.1.1.2" port="2280" http-port="8080"/>

<server ip="10.1.1.3" port="2280" http-port="8080"/>

</servers>

</config>

(4) Install the database and configure

Create database cat( The library name cannot be changed ), from cat Source code script Get the database script file under the directory :CatApplication.sql, Execute script file generation cat The watch you need .

To configure /data/appdatas/cat/datasources.xml file :

<?xml version="1.0" encoding="utf-8"?>

<data-sources>

<data-source id="cat">

<maximum-pool-size>3</maximum-pool-size>

<connection-timeout>1s</connection-timeout>

<idle-timeout>10m</idle-timeout>

<statement-cache-size>1000</statement-cache-size>

<properties>

<driver>com.mysql.jdbc.Driver</driver>

<url><![CDATA[jdbc:mysql://127.0.0.1:3306/cat]]></url> <!-- Please replace with a real database URL And Port -->

<user>root</user> <!-- Please replace with the real database user name -->

<password>root</password> <!-- Please replace with the real database password -->

<connectionProperties><![CDATA[useUnicode=true&characterEncoding=UTF-8&autoReconnect=true&socketTimeout=120000]]></connectionProperties>

</properties>

</data-source>

</data-sources>

If it's cluster mode , Each cat This file needs to be deployed on the server .

(5)war Packaging and deployment

There are two ways , One is self built through the source code , perform mvn clean install -DskipTests, stay cat_home Lower generation war newspaper , Renamed as cat.war.

The other is to download the official package ( Corresponding jdk8):http://unidal.org/nexus/service/local/repositories/releases/content/com/dianping/cat/cat-home/3.0.0/cat-home-3.0.0.war

It also needs to be renamed cat.war.

take war Put the bag in tomcat Of webapps Under the table of contents , start-up tomcat.

If it is a local development environment , stay IDE Can be used in tomcat Plug in Launch cat-home modular ,application context Set to /cat.

(6) The system configuration

visit http://localhost:8080/cat/s/config?op=projects, Enter the global system configuration menu .

Server configuration

If it's a cluster environment , Then configure sample as follows :

<?xml version="1.0" encoding="utf-8"?>

<server-config>

<server id="default">

<properties>

<property name="local-mode" value="false"/>

<property name="job-machine" value="false"/>

<property name="send-machine" value="false"/>

<property name="alarm-machine" value="false"/>

<property name="hdfs-enabled" value="false"/>

<property name="remote-servers" value="10.1.1.1:8080,10.1.1.2:8080,10.1.1.3:8080"/>

</properties>

<storage local-base-dir="/data/appdatas/cat/bucket/" max-hdfs-storage-time="15" local-report-storage-time="7" local-logivew-storage-time="7">

<hdfs id="logview" max-size="128M" server-uri="hdfs://10.1.77.86/" base-dir="user/cat/logview"/>

<hdfs id="dump" max-size="128M" server-uri="hdfs://10.1.77.86/" base-dir="user/cat/dump"/>

<hdfs id="remote" max-size="128M" server-uri="hdfs://10.1.77.86/" base-dir="user/cat/remote"/>

</storage>

<consumer>

<long-config default-url-threshold="1000" default-sql-threshold="100" default-service-threshold="50">

<domain name="cat" url-threshold="500" sql-threshold="500"/>

<domain name="OpenPlatformWeb" url-threshold="100" sql-threshold="500"/>

</long-config>

</consumer>

</server>

<server id="10.1.1.1">

<properties>

<property name="job-machine" value="true"/>

<property name="alarm-machine" value="true"/>

<property name="send-machine" value="true"/>

</properties>

</server>

</server-config>

among , id="default" Is the default configuration information ,server id=“10.1.1.1” The following configuration represents 10.1.1.1 The node configuration of this server overrides default Configuration information , Like the following job-machine,alarm-machine,send-machine by true.

Cat Node responsibilities

- Console : Provide data for business personnel to view 【 Default owned cat Nodes can be used as a console , Not configurable 】

- Consumer machines : Receive business data in real time , real-time processing , Provide real-time analysis report 【 Default owned cat Nodes can be used as consumer machines , Not configurable 】

- Alarm terminal : Start the alarm thread , Do rule matching , Send alarm ( Currently, only single point deployment is supported )【 You can configure the 】

- Mission machine : Do some offline tasks , Merge days 、 Zhou 、 Monthly reports 【 You can configure the 】

If it is a local stand-alone environment , The configuration should look like this :

<?xml version="1.0" encoding="utf-8"?>

<server-config>

<server id="default">

<properties>

<property name="local-mode" value="false"/>

<property name="job-machine" value="false"/>

<property name="send-machine" value="false"/>

<property name="alarm-machine" value="false"/>

<property name="hdfs-enabled" value="false"/>

<property name="remote-servers" value="127.0.0.1:8080"/>

</properties>

<storage local-base-dir="/data/appdatas/cat/bucket/" max-hdfs-storage-time="15" local-report-storage-time="2" local-logivew-storage-time="1" har-mode="true" upload-thread="5">

<hdfs id="dump" max-size="128M" server-uri="hdfs://127.0.0.1/" base-dir="/user/cat/dump"/>

<harfs id="dump" max-size="128M" server-uri="har://127.0.0.1/" base-dir="/user/cat/dump"/>

<properties>

<property name="hadoop.security.authentication" value="false"/>

<property name="dfs.namenode.kerberos.principal" value="hadoop/[email protected]"/>

<property name="dfs.cat.kerberos.principal" value="[email protected]"/>

<property name="dfs.cat.keytab.file" value="/data/appdatas/cat/cat.keytab"/>

<property name="java.security.krb5.realm" value="value1"/>

<property name="java.security.krb5.kdc" value="value2"/>

</properties>

</storage>

<consumer>

<long-config default-url-threshold="1000" default-sql-threshold="100" default-service-threshold="50">

<domain name="cat" url-threshold="500" sql-threshold="500"/>

<domain name="OpenPlatformWeb" url-threshold="100" sql-threshold="500"/>

</long-config>

</consumer>

</server>

<server id="127.0.0.1">

<properties>

<property name="job-machine" value="true"/>

<property name="send-machine" value="true"/>

<property name="alarm-machine" value="true"/>

</properties>

</server>

</server-config>

Client routing

The client routing configuration in the cluster environment is as follows :

<?xml version="1.0" encoding="utf-8"?>

<router-config backup-server="10.1.1.1" backup-server-port="2280">

<default-server id="10.1.1.1" weight="1.0" port="2280" enable="false"/>

<default-server id="10.1.1.2" weight="1.0" port="2280" enable="true"/>

<default-server id="10.1.1.3" weight="1.0" port="2280" enable="true"/>

<network-policy id="default" title="default" block="false" server-group="default_group">

</network-policy>

<server-group id="default_group" title="default-group">

<group-server id="10.1.1.2"/>

<group-server id="10.1.1.3"/>

</server-group>

<domain id="cat">

<group id="default">

<server id="10.1.1.2" port="2280" weight="1.0"/>

<server id="10.1.1.3" port="2280" weight="1.0"/>

</group>

</domain>

</router-config>

The configuration in the local stand-alone environment is as follows :

<?xml version="1.0" encoding="utf-8"?>

<router-config backup-server="127.0.0.1" backup-server-port="2280">

<default-server id="127.0.0.1" weight="1.0" port="2280" enable="true"/>

<network-policy id="default" title=" Default " block="false" server-group="default_group">

</network-policy>

<server-group id="default_group" title="default-group">

<group-server id="127.0.0.1"/>

</server-group>

<domain id="cat">

<group id="default">

<server id="127.0.0.1" port="2280" weight="1.0"/>

</group>

</domain>

</router-config>

The server and client routing configuration only needs to be configured once , Will be saved in the database , When starting other nodes, it will automatically read from the database .

Cat Use

Deploy well cat After the service side , You only need to introduce the client library into the business system , Bury points in the code to report data .

Here we use python Code, for example : introduce cat-sdk(3.1.2) package , Test code :

import cat

import time

cat.init('appkey', sampling=True, debug=True)

while True:

with cat.Transaction("foo", "bar") as t:

try:

t.add_data("a=1")

cat.log_event("hook", "before")

# do something

except Exception as e:

cat.log_exception(e)

finally:

cat.metric("api-count").count()

cat.metric("api-duration").duration(100)

cat.log_event("hook", "after")

time.sleep(2000)

stay cat Console configuration item name :

After running the code , By application name appkey View the reported data :

Suppose that by /test/hello This url To access an interface :

class HelloWorldHandler(tornado.web.RequestHandler):

def get(self):

with cat.Transaction(self.request.path, "test") as t:

# Call other systems

cat.log_event("call service1", "before")

self.func1()

cat.log_event("call service1", "after")

time.sleep(3)

cat.log_event("call service2", "before")

self.func2()

cat.log_event("call service2", "after")

cat.log_event("get data from db", "before")

self.func3()

cat.log_event("get data from db", "after")

self.write("Hello, world")

def func1(self):

time.sleep(1)

def func2(self):

time.sleep(2)

def func3(self):

time.sleep(1)

During the interface processing , Called other services , Accessed the local database . adopt cat The embedding point can report the call event .

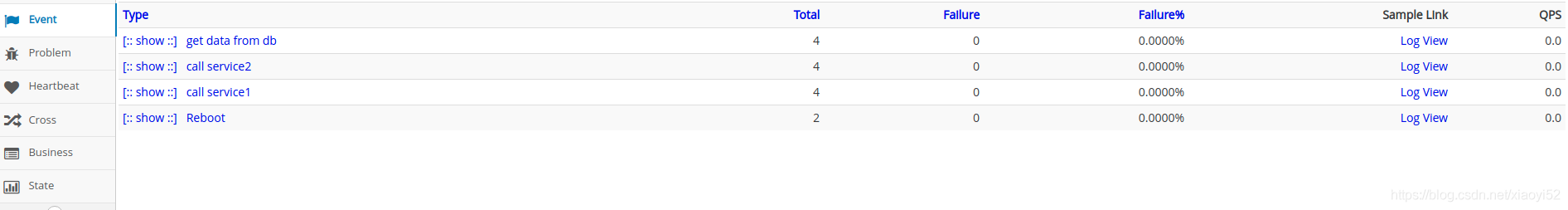

Event as follows :

Transaction as follows :

Click on Transaction perhaps event Of logview, You can see the call chain :

python Inside cat It can also be used by decorators , But in this case, the transaction object cannot be obtained .

In the example above Transaction The page looks normal , Shows each api The number of calls and request time of the interface .

But in fact, this is wrong , because Transaction Of the two parameters of , The first parameter should be type , The second parameter is the value corresponding to the type .

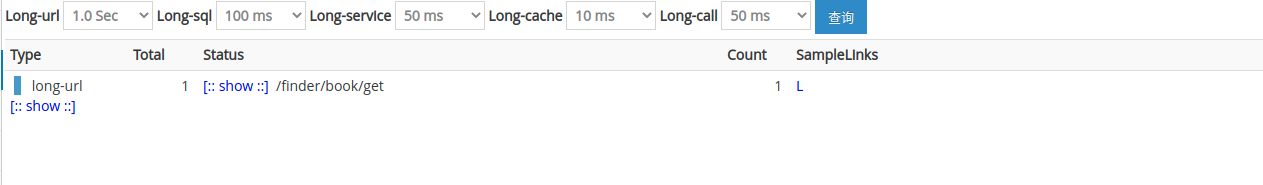

stay Problem The page will filter out the problematic buried point data according to the type and set parameters :

- Long-url, Express Transaction Dot URL Slow request of type

- Long-sql, Express Transaction Dot SQL Slow request of type

- Long-service, Express Transaction Dot Service perhaps PigeonService Slow request of type

- Long-cache, Express Transaction Dot Cache. Slow request at the beginning

- Long-call, Express Transaction Dot Call perhaps PigeonCall Slow request of type

If you don't dot by type , Can not be in Problem Page view request with problem . Therefore, the management of affairs is changed to :

with cat.Transaction("URL", self.request.path) as t:

problem The page is as follows :

The following will continue Zipkin Installation and use .

Reference material

[1]. https://github.com/dianping/cat/wiki/readme_server

[2].https://github.com/dianping/cat/wiki/cat_faq

[3].https://tech.meituan.com/2018/11/01/cat-in-depth-java-application-monitoring.html

边栏推荐

- Vertical search

- Zipkin安装和使用

- 深度学习常用激活函数总结

- Original root and NTT 5000 word explanation

- js闭包:函数和其词法环境的绑定

- NFT与数字藏品到底有何区别?

- Flask project learning (I) -- sayhello

- 巴比特 | 元宇宙每日必读:元宇宙的未来是属于大型科技公司,还是属于分散的Web3世界?...

- [leetcode database 1050] actors and directors who have cooperated at least three times (simple question)

- Babbitt | metauniverse daily must read: does the future of metauniverse belong to large technology companies or to the decentralized Web3 world

猜你喜欢

Babbitt | metauniverse daily must read: does the future of metauniverse belong to large technology companies or to the decentralized Web3 world

Introduction to excellent verilog/fpga open source project (30) - brute force MD5

李沐d2l(五)---多层感知机

【线上死锁分析】由index_merge引发的死锁事件

Clean the label folder

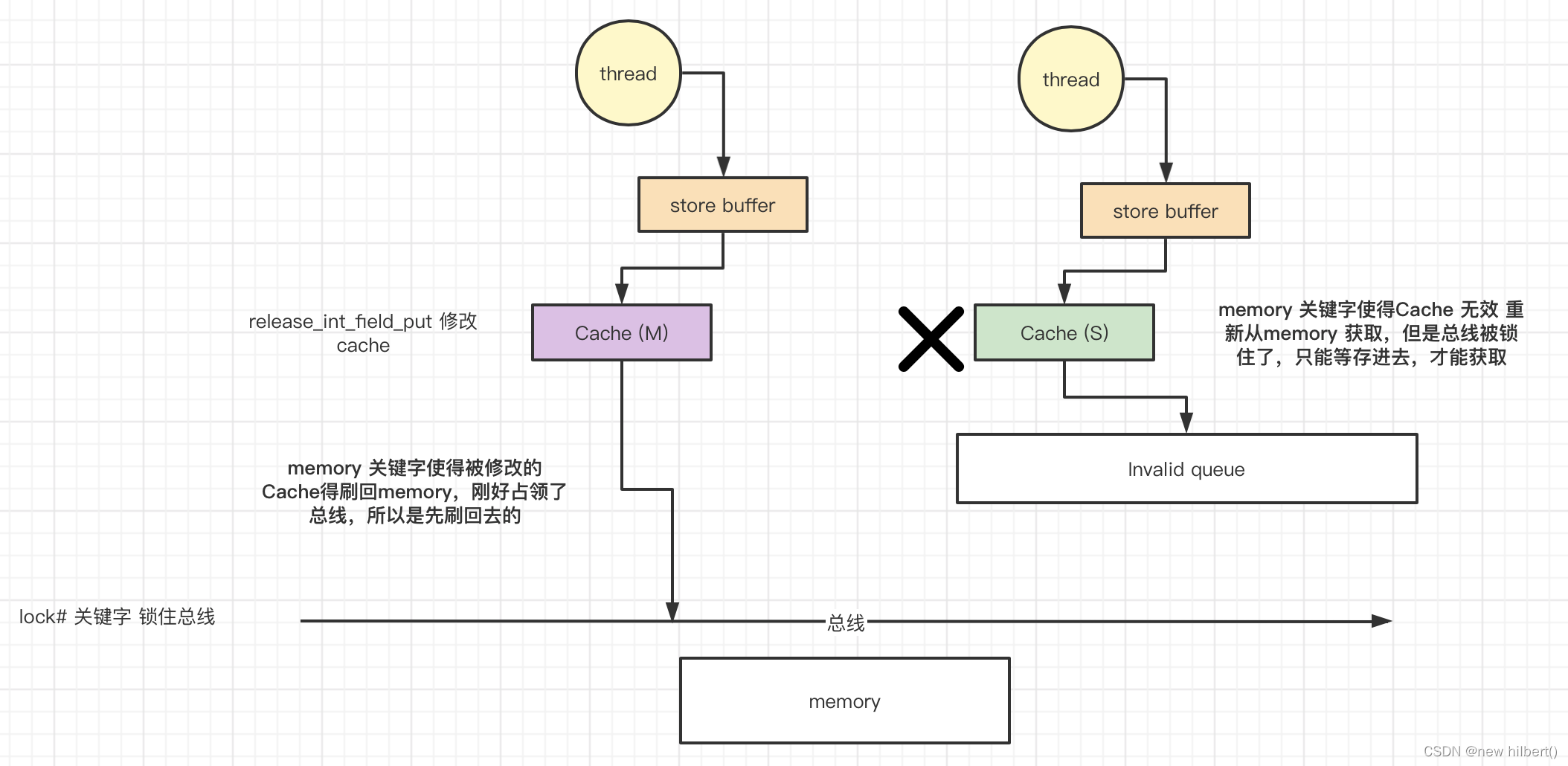

Does volatile rely on the MESI protocol to solve the visibility problem? (next)

Li Mu D2L (IV) -- softmax regression

187. Repeated DNA sequence

【线上问题】Timeout waiting for connection from pool 问题排查

Original root and NTT 5000 word explanation

随机推荐

Qt | 关于如何使用事件过滤器 eventFilter

Pat grade a A1034 head of a gang

"Could not build the server_names_hash, you should increase server_names_hash_bucket_size: 32"

力扣题DFS

Babbitt | metauniverse daily must read: does the future of metauniverse belong to large technology companies or to the decentralized Web3 world

(2006,Mysql Server has gone away)问题处理

The child and binary tree- open root inversion of polynomials

MySQL strengthen knowledge points

839. Simulation reactor

[MySQL] detailed explanation of MySQL lock (III)

李沐d2l(六)---模型选择

服务器内存故障预测居然可以这样做!

redis原理和使用-安装和分布式配置

十大蓝筹NFT近半年数据横向对比

760. 字符串长度

分布式跟踪系统选型与实践

unity TopDown角色移动控制

【Mysql】一条SQL语句是怎么执行的(二)

pycharm 打开多个项目的两种小技巧

2B和2C