PyTorch Series 4: Building a Neural Network Model

2022-06-11 10:22:00 【thump-thump-thump】

WeChat official account : Dong Dong Xue AI

A neural network is composed of layers (modules) that perform operations on data.

The torch.nn namespace provides all the building blocks needed to build your own neural network.

Every module in PyTorch subclasses nn.Module.

A neural network is itself a module made up of other modules (layers). This nested structure makes it easy to build and manage complex architectures.

Next, we will build a neural network to classify images in the FashionMNIST dataset.

import os

import torch

from torch import nn

from torch.utils.data import DataLoader

from torchvision import datasets, transforms

Selecting the training device

If a GPU is available, we train on it; otherwise we fall back to the CPU.

device = "cuda" if torch.cuda.is_available() else "cpu"

print(f"Using {device} device")

If a GPU is available, the output is:

Using cuda device

Defining the neural network class

We define the neural network by subclassing nn.Module.

The layers of the network are initialized in __init__.

Every nn.Module subclass implements the operations on the input data in its forward method.

class NeuralNetwork(nn.Module):
    def __init__(self):
        super(NeuralNetwork, self).__init__()
        # Flattens each 28x28 image into a vector of 784 values
        self.flatten = nn.Flatten()
        # Three linear layers with ReLU activations in between
        self.linear_relu_stack = nn.Sequential(
            nn.Linear(28*28, 512),
            nn.ReLU(),
            nn.Linear(512, 512),
            nn.ReLU(),
            nn.Linear(512, 10),
        )

    def forward(self, x):
        x = self.flatten(x)
        logits = self.linear_relu_stack(x)
        return logits

Next, we create an instance of NeuralNetwork, move it to the device, and print its structure.

model = NeuralNetwork().to(device)

print(model)

The output is:

NeuralNetwork(
  (flatten): Flatten(start_dim=1, end_dim=-1)
  (linear_relu_stack): Sequential(
    (0): Linear(in_features=784, out_features=512, bias=True)
    (1): ReLU()
    (2): Linear(in_features=512, out_features=512, bias=True)
    (3): ReLU()
    (4): Linear(in_features=512, out_features=10, bias=True)
  )
)

Next, we pass an image to the model to predict its class probabilities.

X = torch.rand(1, 28, 28, device=device)  # The input must be on the same device as the model

logits = model(X)  # Calling the model runs its forward method on X

pred_probab = nn.Softmax(dim=1)(logits)  # Ten probabilities that sum to 1

y_pred = pred_probab.argmax(1)  # Index of the largest of the ten values, i.e. the predicted class

print(f"Predicted class: {y_pred}")

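The output is shown below. The predicted class varies between runs, because both the model weights and the input are random: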

Predicted class: tensor([9], device='cuda:0')

Model layers

Next, we look at each layer in the model in detail. As input we use a batch of three images of size 28x28.

input_image = torch.rand(3,28,28)

print(input_image.size())

The output shape is

torch.Size([3, 28, 28])

The nn.Flatten module

We first create an instance of nn.Flatten, then use it to convert each 2D 28x28 image into a contiguous array of 784 pixel values.

Note: by default the forward operation of nn.Flatten starts flattening at the second dimension (start_dim=1), so the first (batch) dimension is kept.

flatten = nn.Flatten()

flat_image = flatten(input_image)

print(flat_image.size())

The shape of the output is

torch.Size([3, 784])
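As a cross-check of the note about dimensions, the sketch below compares nn.Flatten with two other ways of writing the same operation: torch.flatten with start_dim=1 and a plain reshape. The variable names are illustrative and not part of the original tutorial.

```python
import torch
from torch import nn

batch = torch.rand(3, 28, 28)  # three 28x28 images, as in the text

flat_module = nn.Flatten()(batch)                  # defaults: start_dim=1, end_dim=-1
flat_function = torch.flatten(batch, start_dim=1)  # functional form
flat_reshape = batch.reshape(batch.size(0), -1)    # keep dim 0, merge the rest

# All three should hold exactly the same values in the same order
same = torch.equal(flat_module, flat_function) and torch.equal(flat_module, flat_reshape)
print(tuple(flat_module.shape), same)
```

The module form is convenient mainly because it can be placed inside a container such as nn.Sequential.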

The nn.Linear module

The linear layer applies a linear transformation to the input using its stored weights and biases.

layer1 = nn.Linear(in_features=28*28, out_features=20)  # Maps 28*28 = 784 features to 20

hidden1 = layer1(flat_image)  # Only the last dimension is transformed

print(hidden1.size())

The output shape is

torch.Size([3, 20])
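To make "linear transformation" concrete, here is a minimal sketch that reproduces the output of nn.Linear by hand as x @ W.T + b. The seed and variable names are arbitrary choices for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)  # arbitrary seed, only for repeatability

layer = nn.Linear(in_features=28 * 28, out_features=20)
x = torch.rand(3, 28 * 28)  # a batch of three flattened images

# weight has shape (out_features, in_features), hence the transpose
manual = x @ layer.weight.T + layer.bias

# Compare with a small tolerance to allow for floating-point rounding
matches = torch.allclose(layer(x), manual, atol=1e-5)
print(tuple(layer.weight.shape), tuple(layer.bias.shape), matches)
```

Because the weight matrix acts only on the last dimension, any leading dimensions (here the batch of 3) pass through unchanged.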

The nn.ReLU module

Nonlinear activations are what create complex mappings between the model's inputs and outputs. They are applied after linear transformations to introduce nonlinearity, which helps the network learn a wider variety of functions.

print(f"Before ReLU: {hidden1}\n\n")

hidden1 = nn.ReLU()(hidden1)

print(f"After ReLU: {hidden1}")

The output is:

Before ReLU: tensor([[-0.2237, -0.2367, 0.2977, -0.3347, -0.4724, 0.3709, 0.0294, -0.0807,

-0.5721, -0.1723, -0.8035, 0.4663, -0.0803, -0.2520, 0.8864, 0.4762,

0.2638, -0.1566, 0.0790, -0.0876],

[-0.2885, -0.3101, 0.2298, -0.4918, -0.3310, 0.4374, 0.1665, 0.1405,

-0.5300, -0.3482, -0.4831, -0.0948, 0.1129, -0.3147, 0.8067, 0.3847,

0.2725, -0.0671, 0.4173, -0.3192],

[-0.2258, -0.1209, 0.6989, -0.4547, -0.3201, -0.1266, -0.1083, -0.0766,

-0.2590, -0.3851, -0.7130, 0.4853, 0.2001, -0.3398, 0.9755, 0.3800,

-0.0782, 0.2659, 0.2886, -0.5325]], grad_fn=<AddmmBackward0>)

After ReLU: tensor([[0.0000, 0.0000, 0.2977, 0.0000, 0.0000, 0.3709, 0.0294, 0.0000, 0.0000,

0.0000, 0.0000, 0.4663, 0.0000, 0.0000, 0.8864, 0.4762, 0.2638, 0.0000,

0.0790, 0.0000],

[0.0000, 0.0000, 0.2298, 0.0000, 0.0000, 0.4374, 0.1665, 0.1405, 0.0000,

0.0000, 0.0000, 0.0000, 0.1129, 0.0000, 0.8067, 0.3847, 0.2725, 0.0000,

0.4173, 0.0000],

[0.0000, 0.0000, 0.6989, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.4853, 0.2001, 0.0000, 0.9755, 0.3800, 0.0000, 0.2659,

0.2886, 0.0000]], grad_fn=<ReluBackward0>)

As you can see, nn.ReLU sets all negative values to 0 and leaves positive values unchanged.
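The same rule can be written in a few lines of plain Python. This sketch applies max(0, x) to the first four values of the "Before ReLU" tensor printed above; the relu helper is an illustration, not the PyTorch implementation.

```python
def relu(x: float) -> float:
    """Return x for positive inputs and 0.0 otherwise."""
    return max(0.0, x)

# First four values of the first row printed before ReLU
before = [-0.2237, -0.2367, 0.2977, -0.3347]
after = [relu(v) for v in before]
print(after)  # [0.0, 0.0, 0.2977, 0.0]
```

The result matches the first four entries of the "After ReLU" tensor.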

The nn.Sequential module

nn.Sequential is an ordered container of modules. Data is passed through all of the modules in the order in which they were added, which makes it convenient for assembling a network quickly.

seq_modules = nn.Sequential(
    flatten,
    layer1,
    nn.ReLU(),
    nn.Linear(20, 10)
)  # Contains different modules, applied in order

input_image = torch.rand(3,28,28)

logits = seq_modules(input_image)  # Runs each module in sequence
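A short sketch of what "in order" means in practice: looping over the container and applying each module by hand should give the same result as calling the container directly. The container here is rebuilt from scratch so the example runs on its own; its names are illustrative.

```python
import torch
from torch import nn

seq = nn.Sequential(
    nn.Flatten(),
    nn.Linear(28 * 28, 20),
    nn.ReLU(),
    nn.Linear(20, 10),
)
images = torch.rand(3, 28, 28)

# Apply the contained modules one by one, in the order they were added
out = images
for module in seq:
    out = module(out)

identical = torch.allclose(out, seq(images))
print(len(seq), type(seq[1]).__name__, tuple(out.shape), identical)
```

nn.Sequential also supports len() and integer indexing, which is handy for inspecting a single layer.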

The nn.Softmax module

The last linear layer of the neural network returns logits, raw values in [-∞, ∞].

The nn.Softmax module rescales the logits to values in the range [0, 1] that sum to 1, representing the model's predicted probability for each class.

softmax = nn.Softmax(dim=1)  # dim is the dimension along which the values must sum to 1

pred_probab = softmax(logits)
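For reference, softmax maps each logit z_i to exp(z_i) / sum_j exp(z_j). The plain-Python sketch below implements that formula for a single row of logits; the input values are made up for illustration, and subtracting the maximum is a common numerical-stability step, not something stated in the original text.

```python
import math

def softmax(logits):
    """Map a list of logits to probabilities that sum to 1."""
    shift = max(logits)  # subtracting the max avoids overflow in exp
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])  # made-up logits for three classes
print([round(p, 3) for p in probs])  # [0.659, 0.242, 0.099]
```

The largest logit always receives the largest probability, which is why taking argmax of the probabilities gives the same class as taking argmax of the logits.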

Model parameters

Many layers in a neural network are parameterized, that is, they have associated weights and biases that are optimized during training.

Subclassing nn.Module automatically tracks all parameters defined inside the model, and they can be accessed through the model's **parameters()** or **named_parameters()** methods.

print(f"Model structure: {model}\n\n")

for name, param in model.named_parameters():
    print(f"Layer: {name} | Size: {param.size()} | Values : {param[:2]} \n")

The output is:

Model structure: NeuralNetwork(
  (flatten): Flatten(start_dim=1, end_dim=-1)  # No parameters
  (linear_relu_stack): Sequential(
    (0): Linear(in_features=784, out_features=512, bias=True)  # Has weights and biases
    (1): ReLU()  # No parameters
    (2): Linear(in_features=512, out_features=512, bias=True)
    (3): ReLU()
    (4): Linear(in_features=512, out_features=10, bias=True)
  )
)

Layer: linear_relu_stack.0.weight | Size: torch.Size([512, 784]) | Values : tensor([[ 0.0033, -0.0081, -0.0354, ..., -0.0335, 0.0070, 0.0030],

[ 0.0106, -0.0064, 0.0300, ..., 0.0071, -0.0062, 0.0169]],

device='cuda:0', grad_fn=<SliceBackward0>)

Layer: linear_relu_stack.0.bias | Size: torch.Size([512]) | Values : tensor([-0.0193, -0.0153], device='cuda:0', grad_fn=<SliceBackward0>)

Layer: linear_relu_stack.2.weight | Size: torch.Size([512, 512]) | Values : tensor([[ 0.0408, 0.0078, 0.0300, ..., 0.0058, -0.0142, -0.0226],

[ 0.0319, -0.0063, -0.0093, ..., -0.0096, 0.0352, 0.0178]],

device='cuda:0', grad_fn=<SliceBackward0>)

Layer: linear_relu_stack.2.bias | Size: torch.Size([512]) | Values : tensor([0.0219, 0.0020], device='cuda:0', grad_fn=<SliceBackward0>)

Layer: linear_relu_stack.4.weight | Size: torch.Size([10, 512]) | Values : tensor([[ 0.0076, 0.0076, 0.0433, ..., 0.0178, 0.0230, 0.0227],

[-0.0396, -0.0042, 0.0342, ..., -0.0364, -0.0184, -0.0329]],

device='cuda:0', grad_fn=<SliceBackward0>)

Layer: linear_relu_stack.4.bias | Size: torch.Size([10]) | Values : tensor([-0.0380, -0.0044], device='cuda:0', grad_fn=<SliceBackward0>)
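The sizes printed above can be checked with simple arithmetic. This sketch counts the weights and biases of the three linear layers from their (in_features, out_features) pairs; it uses plain Python and does not touch the model itself.

```python
# (in_features, out_features) of the three nn.Linear layers in the model
layer_sizes = [(28 * 28, 512), (512, 512), (512, 10)]

total = 0
for n_in, n_out in layer_sizes:
    weights = n_in * n_out  # weight matrix of shape (n_out, n_in)
    biases = n_out          # one bias per output feature
    total += weights + biases
    print(f"Linear({n_in}, {n_out}): {weights} weights + {biases} biases")

print(total)  # 669706
```

Assuming the model defined earlier, sum(p.numel() for p in model.parameters()) should report the same total.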

边栏推荐

- 你对软件兼容性测试知道多少?如何选择软件兼容性测试机构?

- Explain the physical layer consistency test of 2.5g/5g/10g Base-T Ethernet interface in detail!

- Wechat applet ordering system with source code

- Record yesterday's embarrassment

- 微信小程序之点餐系统附源码

- Empire CMS imitates DIY handmade website source code of craft activity /92kaifa imitates self-adaptive mobile phone version template of craft activity

- Handwritten code call, apply, bind

- puppeteer入门之 Browser 类

- NGUI,飘血

- What hydraulic oil is used for Denison hydraulic pump? What are the requirements

猜你喜欢

图片规则翻页

What are the functions and features of EMG linear light emitter?

UGUI

Introduction to ZigBee module wireless transmission star topology networking structure

Explain the physical layer consistency test of 2.5g/5g/10g Base-T Ethernet interface in detail!

【机器学习理论】True Positive, True Negative, False Positive, False Negative概念

Q1's revenue exceeded Wall Street's expectations, and the value of Zhiwen group is waiting to return

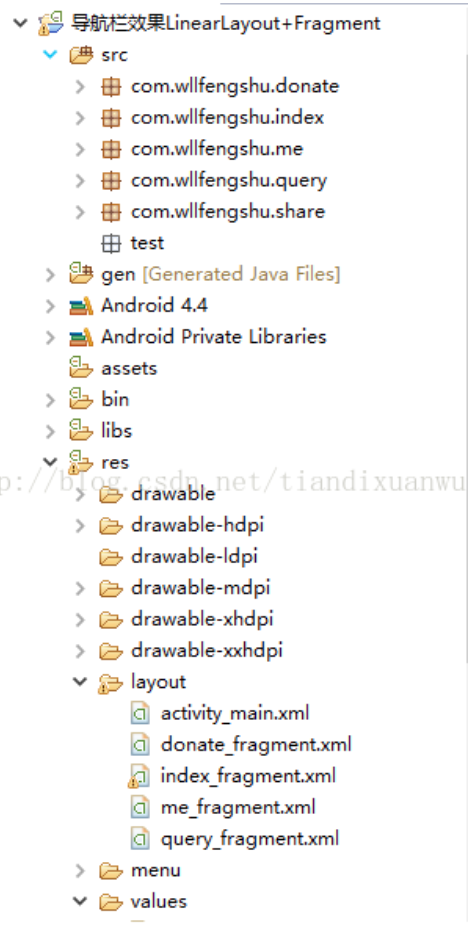

Reprint: linearlayout+fragment to achieve the lower navigation bar effect

Internet of things security in the era of remote work

为什么DDRx的电源设计时需要VTT电源

随机推荐

BCGControlBar库专业版,完整记录的MFC扩展类

Q1's revenue exceeded Wall Street's expectations, and the value of Zhiwen group is waiting to return

【高并发】关于线程池,蚂蚁金服面试官问了我这些内容!!

手写代码call,apply,bind

利用PHP开发的一款万能、表白墙系统部分代码片段

Introduction to ZigBee module wireless transmission star topology networking structure

Tree topology networking structure of ZigBee module communication protocol

ugui图片墙

Mysql--事务

TikTok在英国遭遇文化冲突,短期内众多员工离职

Circuit board made of real gold -- golden finger

UGUI

Dotween usage

【bert】:在训练bert 语义相似的任务时,last ave state 的计算

Q1营收超预期,满帮为何赢得逆风增长?

Browserfetcher class for getting started with puppeter

Explain the physical layer consistency test of 2.5g/5g/10g Base-T Ethernet interface in detail!

MySQL permission management and backup

为什么DDRx的电源设计时需要VTT电源

puppeteer入门之 BrowserFetcher 类