
Convolutional neural network CNN

2022-07-24 19:16:00 【Coding~Man】

https://cs231n.github.io/assets/conv-demo/index.html

The three musketeers of network model training: normalization, dropout, and activation functions.

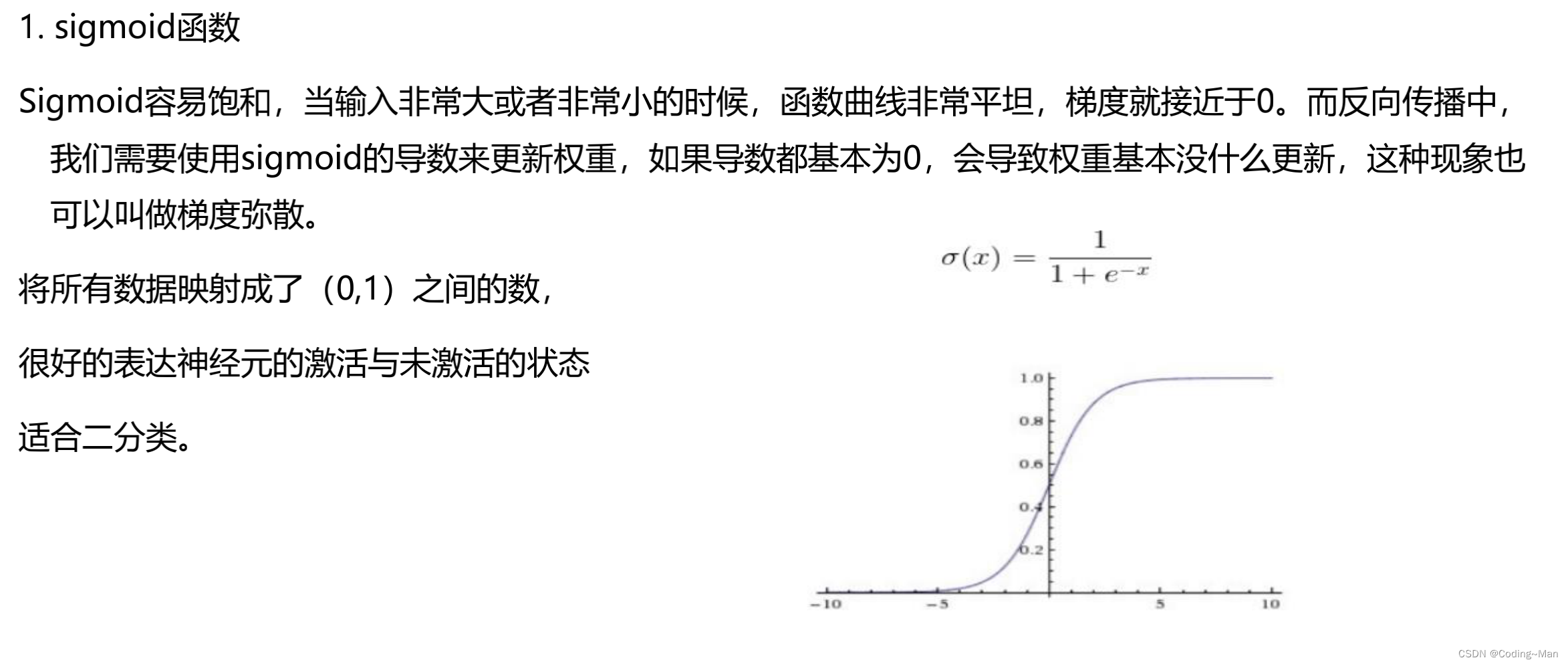

1: Common activation functions of neural networks

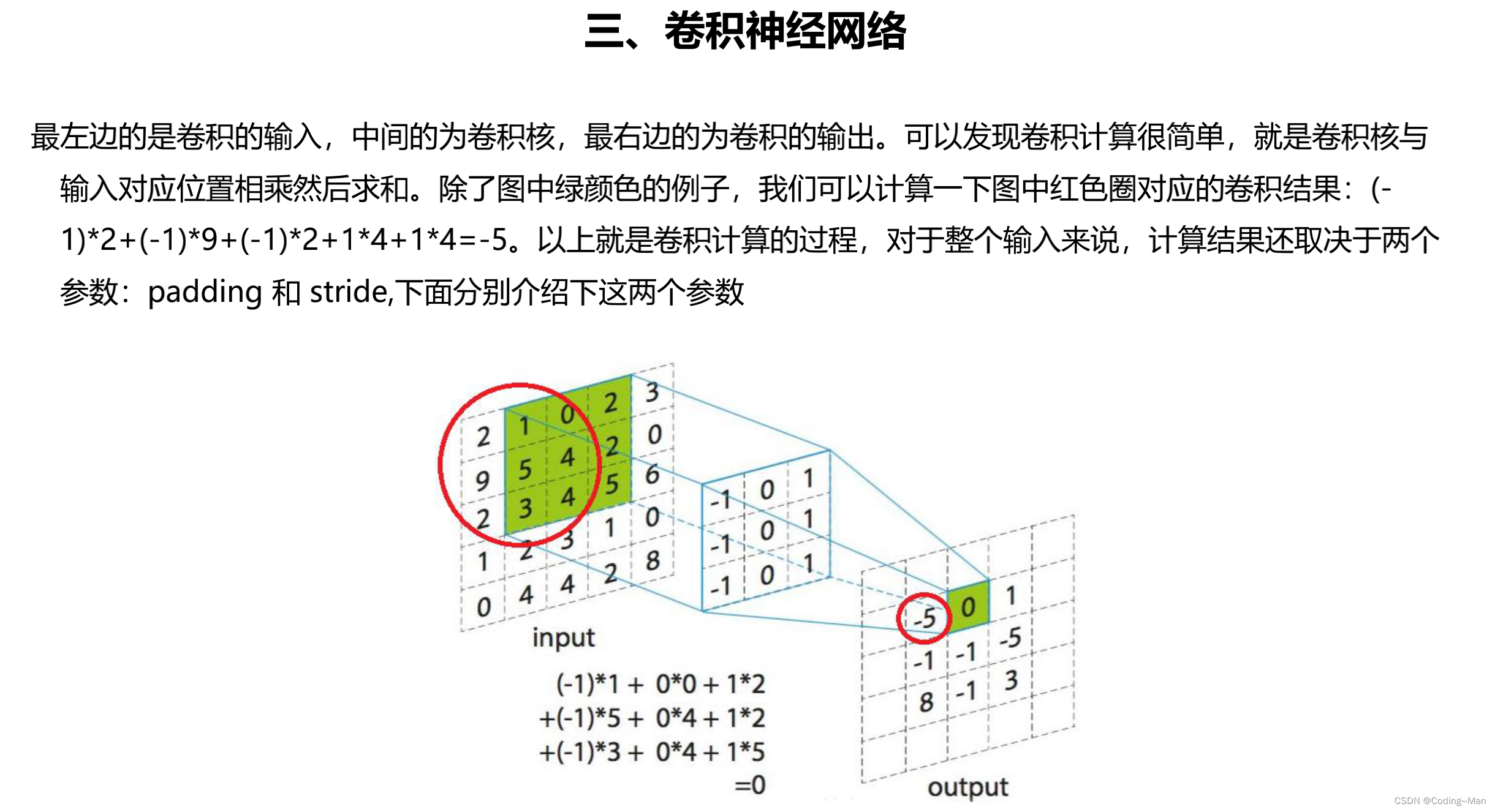

2: Convolutional neural networks

The key concepts are the convolution kernel, padding, and stride (step size).

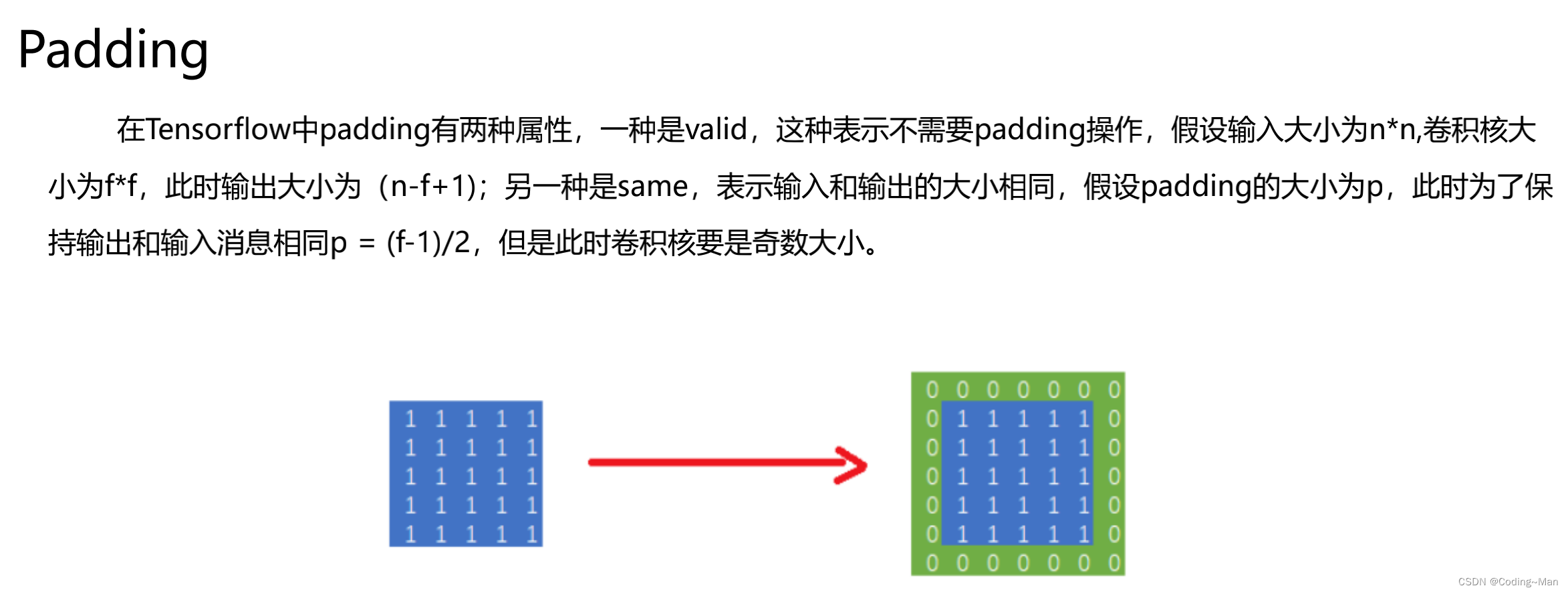

The role of padding is to keep the shape of the output matrix the same as that of the input matrix.

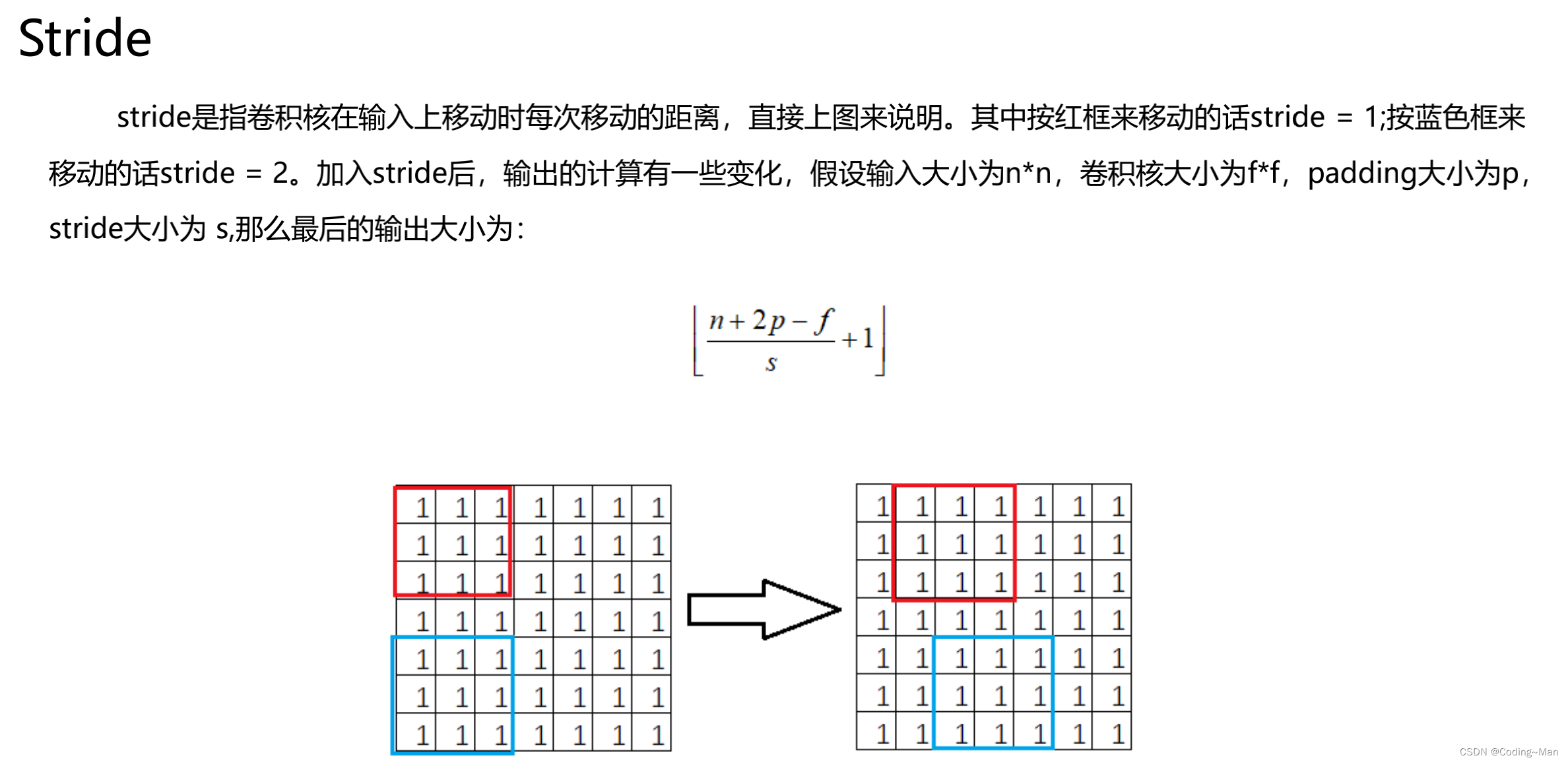

Stride is the step length: the number of positions the kernel moves each time it slides across the input.
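The interaction of kernel, padding, and stride can be sketched in plain Python. This is a minimal illustration, not a reference implementation: the function name `conv2d`, the square-kernel assumption, and the sample values are all made up for the example. The output size along each axis is floor((n + 2p - k) / s) + 1.

```python
def conv2d(image, kernel, padding=0, stride=1):
    """Slide `kernel` over `image` (both 2D lists), as a CNN layer does."""
    k = len(kernel)  # assumption: the kernel is square, k x k
    h, w = len(image), len(image[0])
    # Zero-pad the input on all four sides.
    padded = [[0] * (w + 2 * padding) for _ in range(h + 2 * padding)]
    for i in range(h):
        for j in range(w):
            padded[i + padding][j + padding] = image[i][j]
    # Output size along each axis: floor((n + 2p - k) / s) + 1.
    out_h = (h + 2 * padding - k) // stride + 1
    out_w = (w + 2 * padding - k) // stride + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for a in range(k):
                for b in range(k):
                    total += padded[i * stride + a][j * stride + b] * kernel[a][b]
            row.append(total)
        out.append(row)
    return out


image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]
kernel = [[1, 0, 0],
          [0, 1, 0],
          [0, 0, 1]]

same = conv2d(image, kernel, padding=1, stride=1)     # 4x4, same shape as the input
strided = conv2d(image, kernel, padding=1, stride=2)  # 2x2, stride halves each axis
```

With a 3x3 kernel, `padding=1` keeps the 4x4 shape, while `stride=2` shrinks the output to 2x2.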

Multichannel convolution:

3: Methods to prevent overfitting

1: Dropout randomly sets the output of a node to 0.

2: DropConnect randomly sets entries of W in WX to 0.

3: DisOut randomly assigns different weights (between 0 and 1) to nodes.
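The first two methods can be sketched as follows. The function names, the drop probability `p`, and the rescaling of surviving outputs by 1/(1 - p) (so-called inverted dropout) are assumptions added for illustration; the notes above only say that values are randomly set to 0.

```python
import random


def dropout(outputs, p, rng):
    """Zero each node output with probability p; rescale survivors by 1 / (1 - p)."""
    keep = 1.0 - p
    return [x / keep if rng.random() < keep else 0.0 for x in outputs]


def dropconnect(weights, p, rng):
    """Zero each individual entry of W with probability p."""
    return [[w if rng.random() >= p else 0.0 for w in row] for row in weights]


rng = random.Random(0)  # fixed seed so the run is reproducible
activations = [1.0] * 10
dropped = dropout(activations, p=0.5, rng=rng)

W = [[0.5, -0.2],
     [0.1, 0.3]]
W_masked = dropconnect(W, p=0.5, rng=rng)
```

Dropout masks whole node outputs, whereas DropConnect masks single weights, so a node can still fire through its remaining connections.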

4: Activation function showdown

The sigmoid function takes values between 0 and 1.

The tanh function takes values between -1 and 1.

The ReLU function takes values between 0 and x: it outputs 0 for negative inputs and x itself otherwise, so it has no upper bound.
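The three value ranges are easy to check numerically. The sketch below uses only the standard `math` module; the sample inputs are arbitrary, and this simple `sigmoid` would overflow for very large negative inputs.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    return math.tanh(x)


def relu(x):
    return max(0.0, x)


xs = [-5.0, -1.0, 0.0, 1.0, 5.0]
sigmoid_values = [sigmoid(x) for x in xs]  # all strictly between 0 and 1
tanh_values = [tanh(x) for x in xs]        # all strictly between -1 and 1
relu_values = [relu(x) for x in xs]        # 0 for negative inputs, x otherwise
```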

5: Pooling

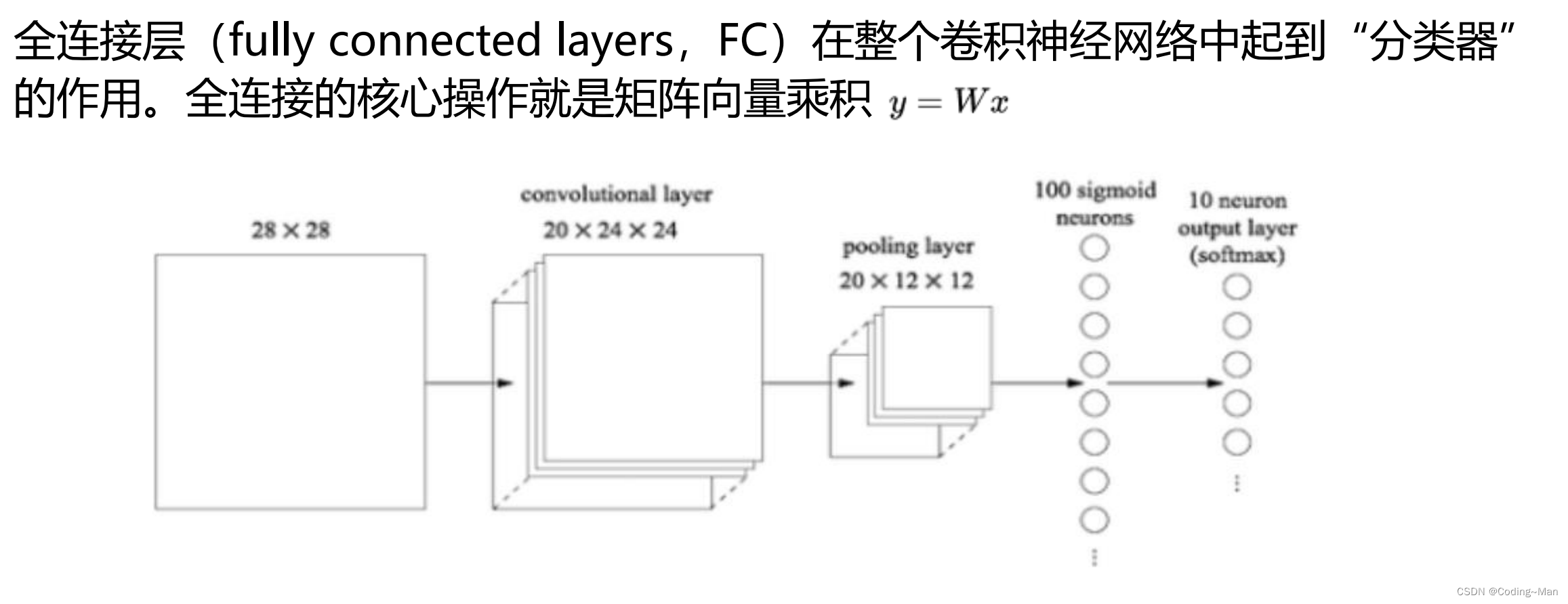
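As a rough illustration of pooling, the sketch below implements max pooling with a 2x2 window and stride 2. The choice of max pooling, the window size, and the sample feature map are assumptions; the figure above may show a different variant such as average pooling.

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Keep the largest value in each size x size window of a 2D list."""
    h, w = len(feature_map), len(feature_map[0])
    out = []
    for i in range(0, h - size + 1, stride):
        row = []
        for j in range(0, w - size + 1, stride):
            window = [feature_map[i + a][j + b]
                      for a in range(size) for b in range(size)]
            row.append(max(window))
        out.append(row)
    return out


feature_map = [[1, 3, 2, 4],
               [5, 6, 1, 2],
               [7, 2, 9, 0],
               [1, 8, 3, 4]]
pooled = max_pool2d(feature_map)  # → [[6, 4], [8, 9]]
```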

6: Fully connected layer

The difference between a fully connected layer and a neural network layer is that the fully connected layer has no activation function: it only computes WX plus a bias.

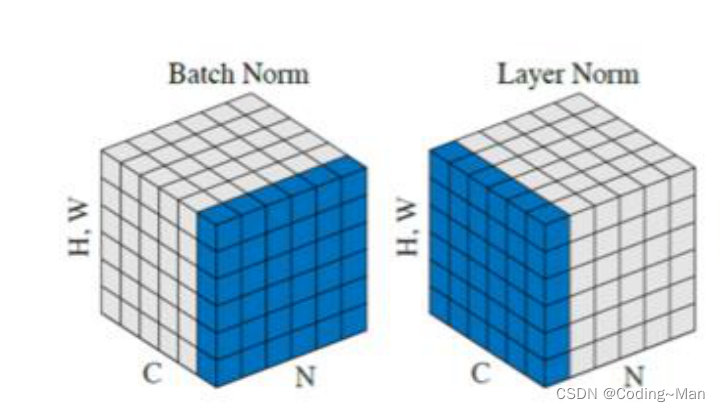
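A fully connected layer in the sense used above is just an affine map. The sketch below is an illustration with made-up weights; the name `fully_connected` and the bias vector `b` are assumptions.

```python
def fully_connected(x, W, b):
    """Affine map y = W x + b; no activation function is applied."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
            for row, b_i in zip(W, b)]


x = [1.0, 2.0, 3.0]
W = [[1.0, 0.0, -1.0],
     [0.5, 0.5, 0.5]]
b = [0.0, 1.0]
y = fully_connected(x, W, b)  # → [-2.0, 4.0]
```

Note that the first output is negative: nothing like ReLU has clipped it, which is the point of the distinction.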

7: Normalization

Benefits of normalization: faster training and better accuracy.

Batch Normalization: normalizes along the batch-size dimension. For example, with BatchSize = 258, the data is normalized across the dimension that counts the input samples.

It only works when the batch size is large enough; a batch that is too small does not represent the whole sample well.

C: the number of channels

N: the number of samples

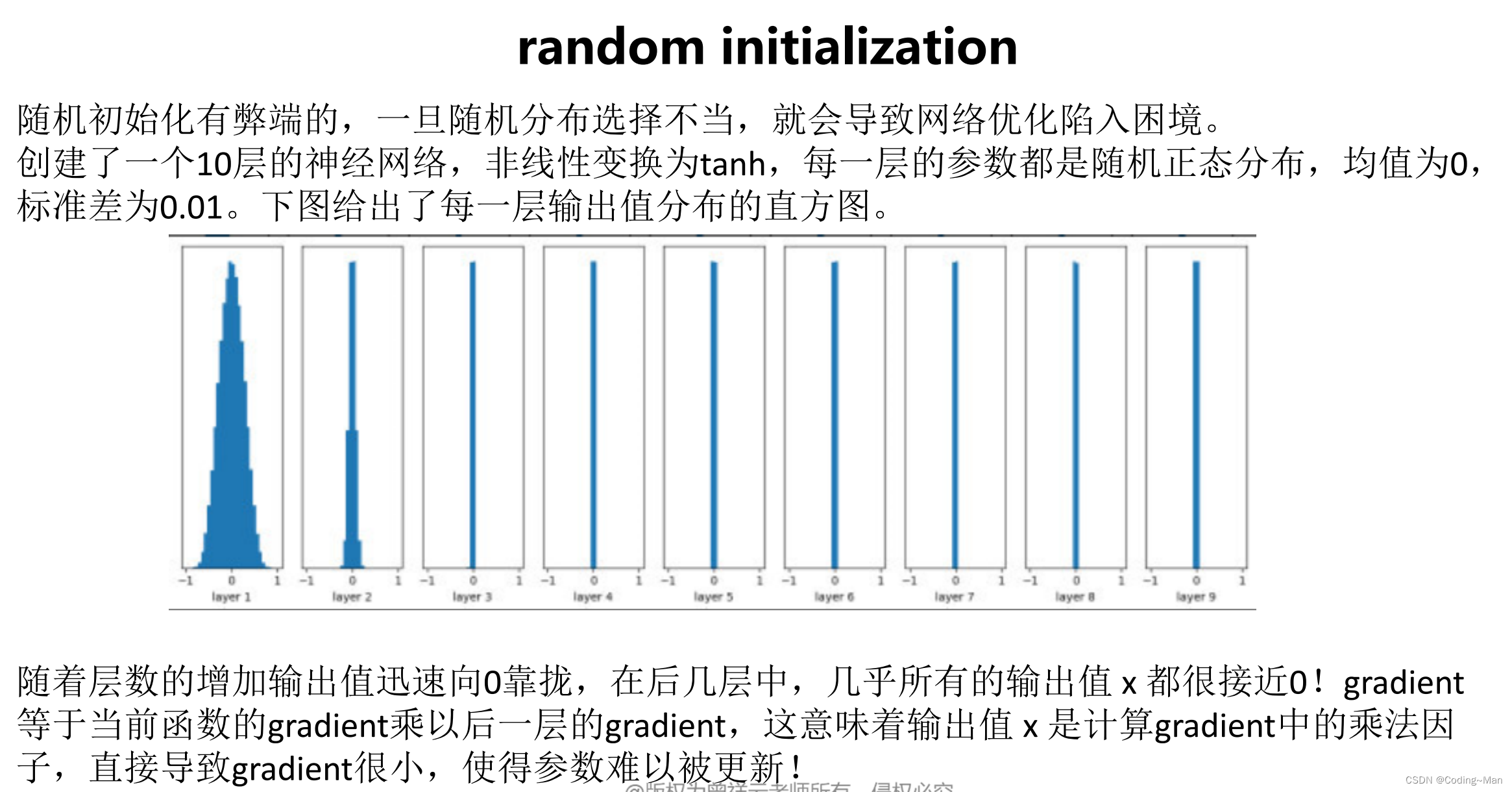

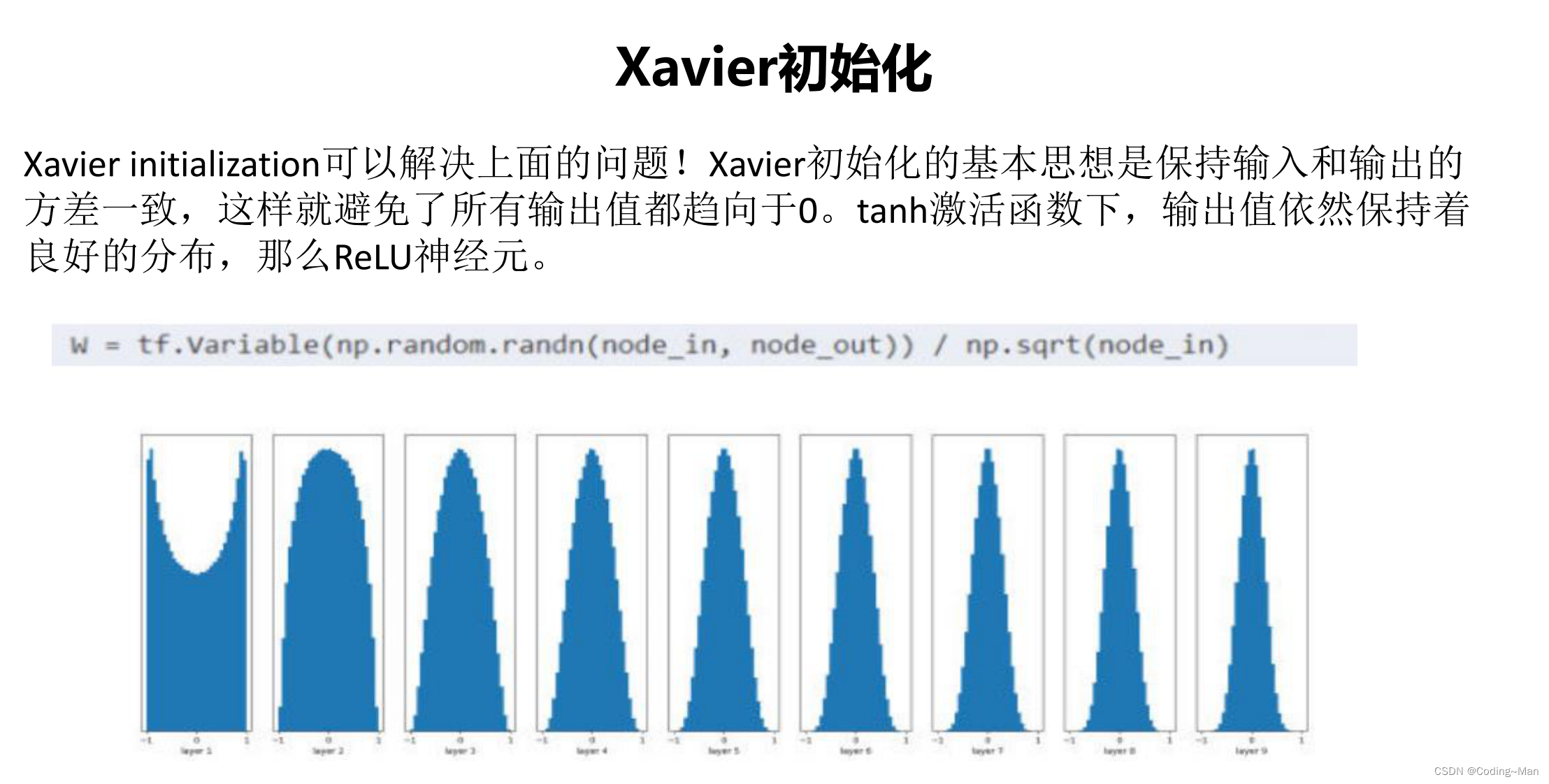
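Batch normalization over N for each of the C channels can be sketched as below. This is a simplified illustration on an N x C list: the learnable scale and shift parameters and the running statistics used at inference time are left out, and `eps` is an assumed small constant for numerical stability.

```python
import math


def batch_norm(batch, eps=1e-5):
    """Normalize each channel over the N samples; `batch` is an N x C list."""
    n = len(batch)
    c = len(batch[0])
    means = [sum(sample[ch] for sample in batch) / n for ch in range(c)]
    variances = [sum((sample[ch] - means[ch]) ** 2 for sample in batch) / n
                 for ch in range(c)]
    return [[(sample[ch] - means[ch]) / math.sqrt(variances[ch] + eps)
             for ch in range(c)]
            for sample in batch]


# N = 4 samples, C = 2 channels with very different scales.
batch = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0], [4.0, 40.0]]
normed = batch_norm(batch)  # each channel now has mean ~0 and variance ~1
```

With only a handful of samples the per-channel mean and variance are noisy estimates, which is why a very small batch works poorly.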

8: Parameter initialization

Xavier initialization: draw the weights from a standard normal distribution and scale them. For example, with node_in = 9 and node_out = 8, initialize a 9*8 matrix and divide it by the square root of 9. It works best with the tanh function.

He initialization works best with the ReLU function.

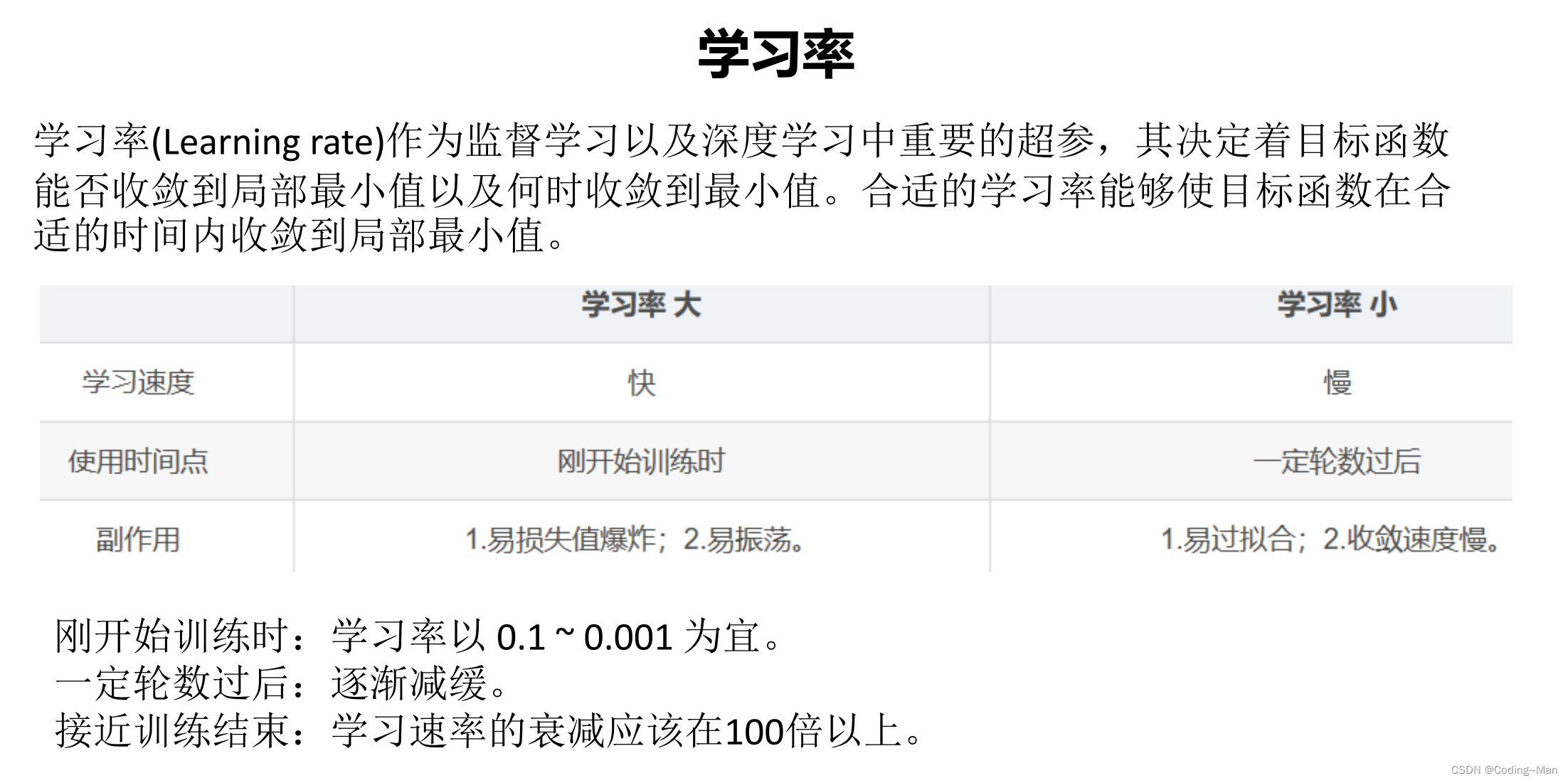

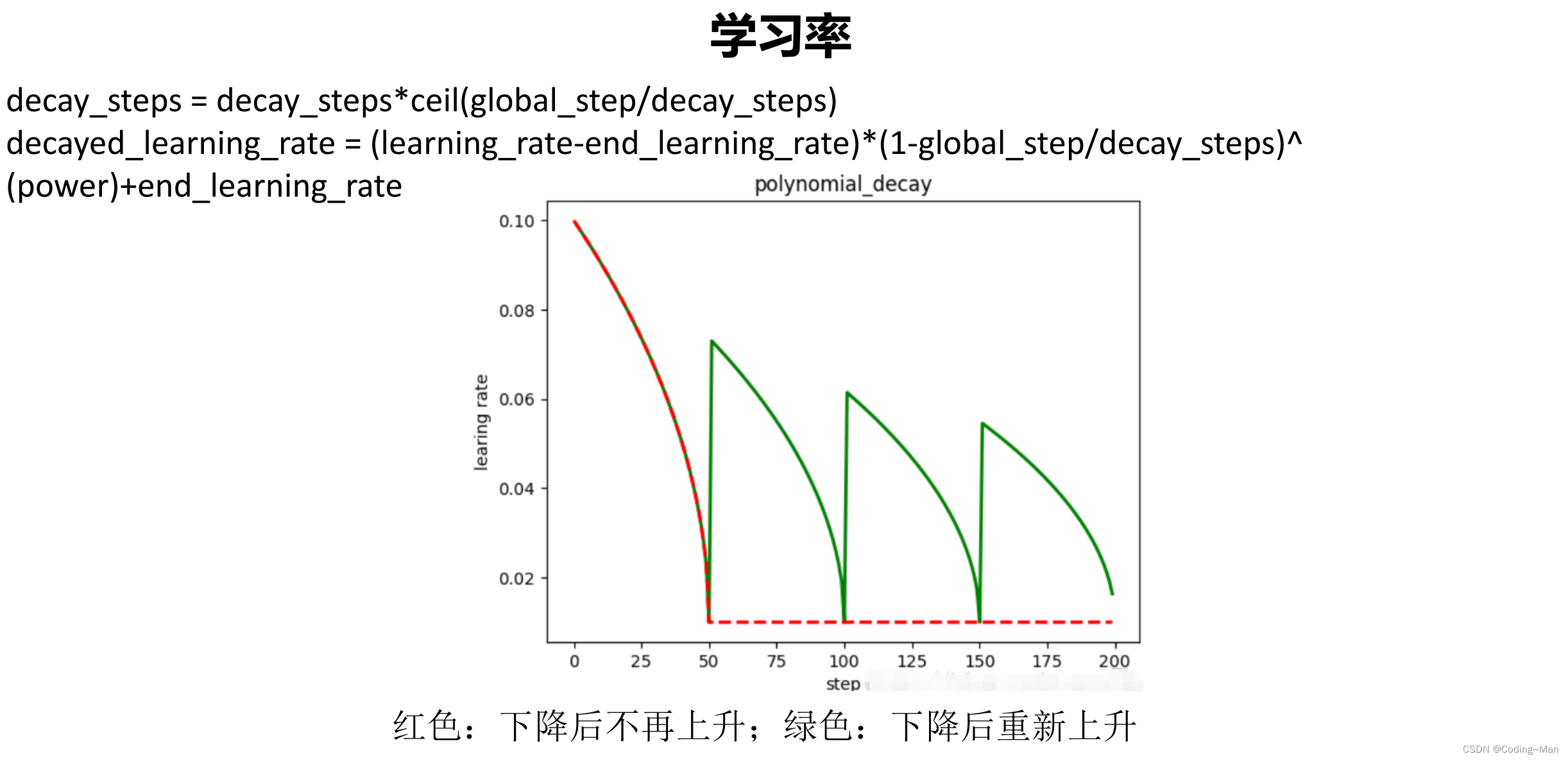
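The two schemes can be sketched with the standard `random` module. The Xavier scaling follows the description above (standard-normal draws divided by the square root of node_in). The He scaling by the square root of node_in / 2 is the commonly used form and is an assumption here, since the notes do not spell it out.

```python
import math
import random


def xavier_init(node_in, node_out, rng):
    """node_in x node_out standard-normal draws divided by sqrt(node_in)."""
    scale = math.sqrt(node_in)
    return [[rng.gauss(0.0, 1.0) / scale for _ in range(node_out)]
            for _ in range(node_in)]


def he_init(node_in, node_out, rng):
    """Same idea, divided by sqrt(node_in / 2) (assumed form, usual for ReLU)."""
    scale = math.sqrt(node_in / 2)
    return [[rng.gauss(0.0, 1.0) / scale for _ in range(node_out)]
            for _ in range(node_in)]


rng = random.Random(0)  # fixed seed so the run is reproducible
W_xavier = xavier_init(9, 8, rng)  # 9 x 8 matrix, variance about 1/9
W_he = he_init(9, 8, rng)          # 9 x 8 matrix, variance about 2/9
```

The larger He variance compensates for ReLU zeroing roughly half of its inputs.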

9: Learning rate

Exponential decay: the learning rate decreases as the number of steps grows.

This is the most common schedule. Periodically changing the learning rate helps avoid getting stuck in a local optimum, and it can be combined with a cosine learning-rate schedule.
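Both schedules can be written as small functions of the step count. The parameter names (`decay_rate`, `decay_steps`, `cycle_steps`, `min_lr`) and the restart-every-cycle behaviour of the cosine schedule are illustrative assumptions, not taken from the notes.

```python
import math


def exponential_decay(base_lr, decay_rate, step, decay_steps):
    """Multiply the learning rate by decay_rate once every decay_steps steps."""
    return base_lr * decay_rate ** (step / decay_steps)


def cosine_annealing(base_lr, min_lr, step, cycle_steps):
    """Cosine schedule that restarts at base_lr every cycle_steps steps."""
    t = step % cycle_steps
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * t / cycle_steps))


lrs_exp = [exponential_decay(0.1, 0.5, s, 10) for s in (0, 10, 20, 30)]
lrs_cos = [cosine_annealing(0.1, 0.0, s, 10) for s in (0, 5, 10)]
```

In this sketch the exponential schedule halves the rate every 10 steps, while the cosine schedule falls to half of its peak mid-cycle and jumps back to the peak at step 10.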

10: Optimizer

Role: once the model is built, the optimizer solves for its parameters.

Optimizer versus @: a deep model cannot take in all the data at once, so an optimizer is used, whereas @ feeds all the data in at once.

Benefits of the optimizer: the learning rate is variable, it can refer to historical gradients, and it can use the gradient of each batch. Simply put, the optimizer brings the update computed from each batch closer to the update that would be obtained by feeding in all the data at once.

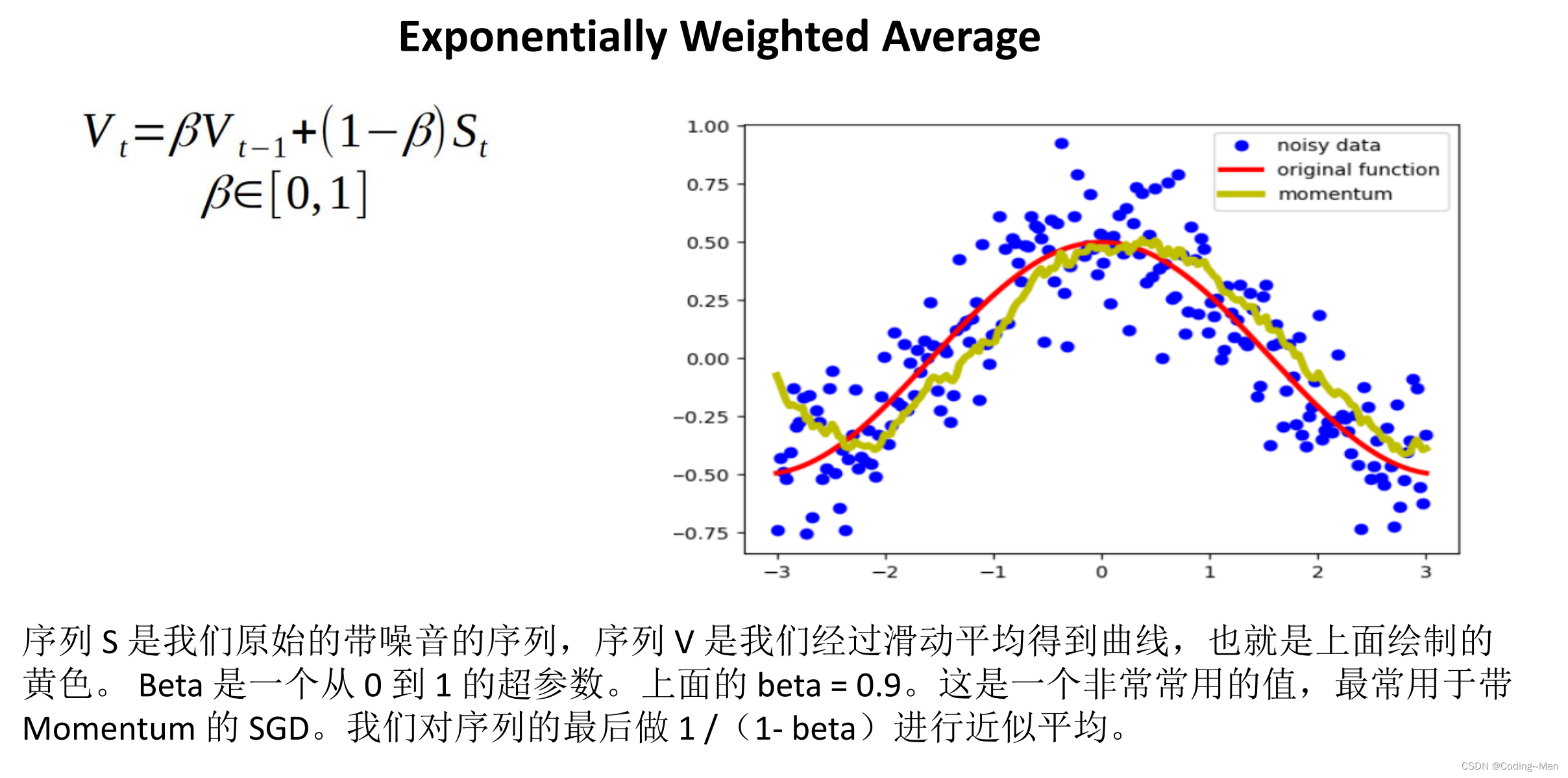

Optimizers follow two main schools of thought. One is the Momentum school, which carries momentum along as it descends; its representative is SGD with momentum. The other is the AdaGrad school: when the updates fluctuate too much, it applies resistance to damp the fluctuation.

Adam is the representative that combines the two ideas.

To avoid falling into a local optimum, we can add the gradients of past updates and weight them together with the current gradient.
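The weighting of historical and current gradients can be sketched as SGD with momentum on a one-dimensional problem. The objective f(x) = (x - 3)^2, the hyperparameters, and the exponential-moving-average form of the velocity are assumptions chosen for illustration.

```python
def sgd_momentum(grad_fn, x0, lr=0.1, beta=0.9, steps=300):
    """Minimize a 1-D function with SGD plus momentum."""
    x, velocity = x0, 0.0
    for _ in range(steps):
        g = grad_fn(x)
        # Weighted mix of the accumulated history and the current gradient.
        velocity = beta * velocity + (1 - beta) * g
        x -= lr * velocity
    return x


# f(x) = (x - 3)^2 has gradient 2 * (x - 3) and its minimum at x = 3.
x_min = sgd_momentum(lambda x: 2 * (x - 3), x0=0.0)
```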

Noise suppression:

AdaGrad

RMSprop
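The two methods listed above, together with Adam as the combination of both schools, can be sketched as scalar update rules. The update formulas are the commonly published ones; the test objective f(x) = (x - 3)^2 and all hyperparameter values are assumptions for illustration.

```python
import math


def adagrad(grad_fn, x0, lr=0.5, steps=500, eps=1e-8):
    x, g2_sum = x0, 0.0
    for _ in range(steps):
        g = grad_fn(x)
        g2_sum += g * g  # accumulate every squared gradient seen so far
        x -= lr * g / (math.sqrt(g2_sum) + eps)
    return x


def rmsprop(grad_fn, x0, lr=0.01, rho=0.9, steps=1000, eps=1e-8):
    x, g2_avg = x0, 0.0
    for _ in range(steps):
        g = grad_fn(x)
        g2_avg = rho * g2_avg + (1 - rho) * g * g  # decaying average, not a full sum
        x -= lr * g / (math.sqrt(g2_avg) + eps)
    return x


def adam(grad_fn, x0, lr=0.05, beta1=0.9, beta2=0.999, steps=500, eps=1e-8):
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad_fn(x)
        m = beta1 * m + (1 - beta1) * g      # momentum term
        v = beta2 * v + (1 - beta2) * g * g  # AdaGrad/RMSprop-style scaling term
        m_hat = m / (1 - beta1 ** t)         # bias correction for the zero start
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


def grad(x):
    return 2 * (x - 3)  # gradient of f(x) = (x - 3)^2


x_adagrad = adagrad(grad, 0.0)
x_rmsprop = rmsprop(grad, 0.0)
x_adam = adam(grad, 0.0)
```

Dividing by the root of the accumulated squared gradients is the "resistance": directions with large, fluctuating gradients get smaller steps.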
