当前位置:网站首页>JD Sanmian: I want to query a table with tens of millions of data. How can I operate it?

JD Sanmian: I want to query a table with tens of millions of data. How can I operate it?

2022-07-26 16:44:00 【chenzixia】

Preface

- interviewer : Say it , Ten million data , How did you inquire ?

- I : Direct paging query , Use limit Pagination .

- interviewer : Have you ever practiced ?

- I : There must be

Here's a song 《 be doomed 》

Maybe some people haven't met a table with tens of millions of data , It's not clear what happens when you query tens of millions of data .

Today, let's take you to practice , This time it's based on MySQL 5.7.26 Do a test

Prepare the data

What to do without 10 million data ?

Create it

Code to create 10 million ? That's impossible , Too slow , Maybe you really have to run all day . You can use database scripts to execute much faster .

Create table

CREATE TABLE `user_operation_log` (

`id` int(11) NOT NULL AUTO_INCREMENT,

`user_id` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`ip` varchar(20) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`op_data` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`attr1` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`attr2` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`attr3` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`attr4` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`attr5` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`attr6` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`attr7` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`attr8` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`attr9` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`attr10` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`attr11` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`attr12` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

PRIMARY KEY (`id`) USING BTREE

) ENGINE = InnoDB AUTO_INCREMENT = 1 CHARACTER SET = utf8mb4 COLLATE = utf8mb4_general_ci ROW_FORMAT = Dynamic;

Create data script

Using batch insert , It will be much faster , And every 1000 The number is commit, Too much data , It will also lead to slow batch insertion efficiency

DELIMITER ;

;

CREATE PROCEDURE batch_insert_log()BEGIN DECLARE i iNT DEFAULT 1;

DECLARE userId iNT DEFAULT 10000000;

set @execSql = 'INSERT INTO `test`.`user_operation_log`(`user_id`, `ip`, `op_data`, `attr1`, `attr2`, `attr3`, `attr4`, `attr5`, `attr6`, `attr7`, `attr8`, `attr9`, `attr10`, `attr11`, `attr12`) VALUES';

set @execData = '';

WHILE i<=10000000 DO set @attr = "' Test long, long, long, long, long, long, long, long, long, long, long, long, long, long, long, long, long properties '";

set @execData = concat(@execData, "(", userId + i, ", '10.0.69.175', ' User login operation '", ",", @attr, ",", @attr, ",", @attr, ",", @attr, ",", @attr, ",", @attr, ",", @attr, ",", @attr, ",", @attr, ",", @attr, ",", @attr, ",", @attr, ")");

if i % 1000 = 0 then set @stmtSql = concat(@execSql, @execData,";");

prepare stmt from @stmtSql;

execute stmt;

DEALLOCATE prepare stmt;

commit;

set @execData = ""; else set @execData = concat(@execData, ",");

end if;

SET i=i+1;

END WHILE;

END;

;

DELIMITER ;

Brother's computer configuration is relatively low :win10 Standard pressure slag i5 About reading and writing 500MB Of SSD

Due to low configuration , For this test, only 3148000 Data , Disk occupied 5G( Without indexing ), ran 38min, Students with computer configuration , You can insert multipoint data test

SELECT count(1) FROM `user_operation_log`Return results :3148000

The time of three queries is respectively :

- 14060 ms

- 13755 ms

- 13447 ms

General paging query

MySQL Support LIMIT Statement to select the specified number of data , Oracle have access to ROWNUM To select .

MySQL The syntax of paging query is as follows :

SELECT * FROM table LIMIT [offset,] rows | rows OFFSET offset- The first parameter specifies the offset of the first return record line

- The second parameter specifies the maximum number of rows to return records

Now let's start testing the query results :

SELECT * FROM `user_operation_log` LIMIT 10000, 10Inquire about 3 The times are :

- 59 ms

- 49 ms

- 50 ms

It seems that the speed is OK , It's just a local database , Speed naturally faster .

Test from another angle

Same offset , Different amount of data

SELECT * FROM `user_operation_log` LIMIT 10000, 10

SELECT * FROM `user_operation_log` LIMIT 10000, 100

SELECT * FROM `user_operation_log` LIMIT 10000, 1000

SELECT * FROM `user_operation_log` LIMIT 10000, 10000

SELECT * FROM `user_operation_log` LIMIT 10000, 100000

SELECT * FROM `user_operation_log` LIMIT 10000, 1000000

The query time is as follows :

Quantity first, second, third 10 strip 53ms52ms47ms100 strip 50ms60ms55ms1000 strip 61ms74ms60ms10000 strip 164ms180ms217ms100000 strip 1609ms1741ms1764ms1000000 strip 16219ms16889ms17081ms

From the above results, we can conclude that : More data , The longer it takes

The same amount of data , Different offset

SELECT * FROM `user_operation_log` LIMIT 100, 100

SELECT * FROM `user_operation_log` LIMIT 1000, 100

SELECT * FROM `user_operation_log` LIMIT 10000, 100

SELECT * FROM `user_operation_log` LIMIT 100000, 100

SELECT * FROM `user_operation_log` LIMIT 1000000, 100

Offset the first time, the second time, the third time 10036ms40ms36ms100031ms38ms32ms1000053ms48ms51ms100000622ms576ms627ms10000004891ms5076ms4856ms

From the above results, we can conclude that : The greater the offset , The longer it takes

SELECT * FROM `user_operation_log` LIMIT 100, 100

SELECT id, attr FROM `user_operation_log` LIMIT 100, 100

Now that we have gone through the above toss , The conclusion is also drawn , In view of the above two problems : Large offset 、 Large amount of data , Let's optimize separately

Optimization of large offset

Adopt sub query method

We can locate the offset position first id, Then query the data

SELECT * FROM `user_operation_log` LIMIT 1000000,

10SELECT id FROM `user_operation_log` LIMIT 1000000,

1SELECT * FROM `user_operation_log` WHERE id >= (

SELECT id FROM `user_operation_log` LIMIT 1000000,

1

) LIMIT 10

The query results are as follows :

sql Take time first 4818ms Second ( Without index )4329ms Second ( With index )199ms Article 3 the ( Without index )4319ms Article 3 the ( With index )201ms

Draw a conclusion from the above results :

- The first one takes the most time , The third is a little better than the first

- Subqueries use indexes faster

shortcoming : Only applicable to id Incremental situation

id In the case of non increment, the following expression can be used , But this disadvantage is that paging queries can only be placed in sub queries

Be careful : some mysql Version not supported in in Used in clauses limit, Therefore, multiple nested select

SELECT * FROM `user_operation_log` WHERE id IN (

SELECT t.id FROM (

SELECT id FROM `user_operation_log` LIMIT 1000000,

10

) AS t

)

This method is more demanding ,id Must be continuously increasing , And it has to be calculated id The scope of the , And then use between,sql as follows

SELECT * FROM `user_operation_log` WHERE id between 1000000 AND 1000100 LIMIT 100SELECT * FROM `user_operation_log` WHERE id >= 1000000 LIMIT 100The query results are as follows :

sql Take time first 22ms Second 21ms

From the results, we can see that this method is very fast

Be careful : there LIMIT Yes, the number is limited , No offset is used

Optimize the problem of large amount of data

The amount of data returned will also directly affect the speed

SELECT * FROM `user_operation_log` LIMIT 1, 1000000

SELECT id FROM `user_operation_log` LIMIT 1, 1000000

SELECT id, user_id, ip, op_data, attr1, attr2, attr3, attr4, attr5, attr6, attr7, attr8, attr9, attr10, attr11, attr12 FROM `user_operation_log` LIMIT 1, 1000000

The query results are as follows :

sql Take time first 15676ms Second 7298ms Article 3 the 15960ms

From the results, we can see that we can reduce the unnecessary Columns , Query efficiency can also be significantly improved

The first and third queries are about the same speed , You will definitely make complaints about it. , Then why do I write so many fields , direct * No, it's over

Pay attention to my MySQL The server and client are in The same machine On , So the query data is not much different , Conditional students can test the client and MySQL Separate

SELECT * Doesn't it smell good ?

By the way, I would like to add why SELECT *. Is it simple and brainless , Doesn't it smell good ?

Two main points :

- use "SELECT * " The database needs to parse more objects 、 Field 、 jurisdiction 、 Properties and other related content , stay SQL Complex sentences , In the case of more hard parsing , It's a huge burden on the database .

- Increase network overhead ,* Sometimes it will be mistakenly taken with log、IconMD5 Such useless and large text fields , The data transfer size Will grow geometrically . especially MySQL Not on the same machine as the application , This kind of expense is very obvious .

end

Finally, I hope you can do it yourself , There must be more to be gained , Welcome to leave a message !!

I'll give you just the right script , What are you waiting for !!!

边栏推荐

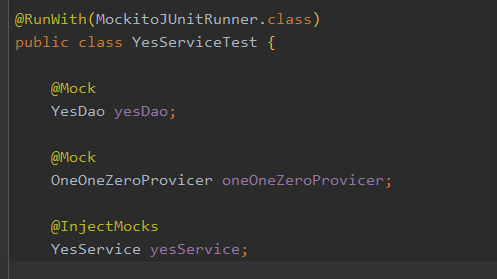

- How to write unit tests

- 研发效能的道与术 - 道篇

- “青出于蓝胜于蓝”,为何藏宝计划(TPC)是持币生息最后的一朵白莲花

- Guetzli simple to use

- 如何借助自动化工具落地DevOps|含低代码与DevOps应用实践

- My SQL is OK. Why is it still so slow? MySQL locking rules

- NUC 11 build esxi 7.0.3f install network card driver-v2 (upgraded version in July 2022)

- 别用Xshell了,试试这个更现代的终端连接工具

- 广东首例!广州一公司未履行数据安全保护义务被警方处罚

- DTS搭载全新自研内核,突破两地三中心架构的关键技术|腾讯云数据库

猜你喜欢

第一章概述-------第一节--1.3互联网的组成

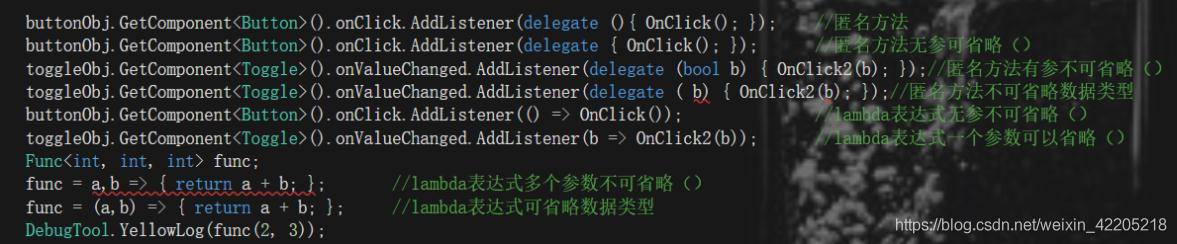

The difference between anonymous methods and lambda expressions

我的sql没问题为什么还是这么慢|MySQL加锁规则

该怎么写单元测试呢

最终一致性性分布式事务 TCC

Docker install redis? How to configure persistence policy?

数字化转型怎么就那么的难?!

营销指南 | 几种常见的微博营销打法

Comprehensively design an oppe homepage -- the design of the top and head

How to implement Devops with automation tools | including low code and Devops application practice

随机推荐

理财产品锁定期是什么意思?理财产品在锁定期能赎回吗?

“青出于蓝胜于蓝”,为何藏宝计划(TPC)是持币生息最后的一朵白莲花

搭建typora图床

Set up typera drawing bed

数字化转型怎么就那么的难?!

TCP 和 UDP 可以使用相同端口吗?

Matlab论文插图绘制模板第40期—带偏移扇区的饼图

ES:Compressor detection can only be called on some xcontent bytes or compressed xcontent bytes

Win11如何关闭共享文件夹

From SiCp to LISP video replay

抓包与发流软件与网络诊断

What is a distributed timed task framework?

Interface test for quick start of JMeter

40个高质量信息管理专业毕设项目分享【源码+论文】(六)

C#读取本地文件夹中所有文件文本内容的方法

Win11怎么重新安装系统?

Re7: reading papers fla/mlac learning to predict charges for critical cases with legal basis

Singleton mode

kubernetes之探针

Pyqt5 rapid development and practice 3.4 signal and slot correlation