当前位置:网站首页>Deep learning common optimizer summary

Deep learning common optimizer summary

2022-06-24 04:46:00 【Goose】

1. background

Choosing a good optimizer for a machine learning project is not an easy task . Popular deep learning library ( Such as PyTorch or TensorFLow) Provides a variety of optimizer options , They have their own advantages and disadvantages . also , Choosing an inappropriate optimizer may have a great negative impact on machine learning projects . This makes the selection optimizer a build 、 A key step in testing and deploying machine learning models .

2. Common optimizers

In this paper, we use w On behalf of the parameter ,g It's for gradients ,α For the global learning rate of each optimizer ,t Represents the time step (time step).

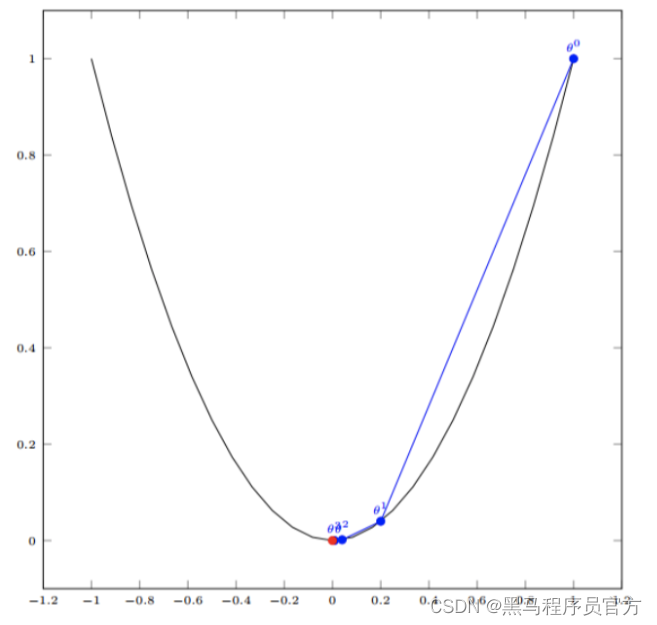

2.1 SGD Stochastic gradient descent

In the random gradient descent algorithm (SGD) in , The optimizer estimates the direction where the gradient falls the fastest based on a small batch , And take a step in that direction . Because the step size is fixed , therefore SGD It's likely to stop in the stable zone soon (plateaus) Or the local minimum .

2.2 With momentum SGD momentum

among β<1. When there is momentum ,SGD Will accelerate in the direction of continuous descent ( This is what this method is called 「 Heavy ball method 」 Why ). This acceleration helps the model escape from the stationary region , It is not easy to fall into local minimum .

2.3 AdaGrad

AdaGrad It's one of the first successful ways to use adaptive learning rate .AdaGrad be based on The square root of the reciprocal of the sum of square gradients To scale the learning rate of each parameter . This process amplifies the sparse gradient direction , To allow large adjustments in these directions . The result is that the Sparse features In the scene ,AdaGrad Can converge faster .

2.4 RMSprop

RMSprop The idea is similar to AdaGrad, But the re scaling of the gradient is not very positive : Replace the sum of the square gradients with the moving mean of the square gradients .RMSprop Usually used with momentum , It can be understood as Rprop Adaptation to small batch settings .

2.5 Adam

Adam take AdaGrad、RMSprop Combined with the momentum method . The direction of the next step is determined by the moving average of the gradient , The step size is set by the global step size . Besides , Be similar to RMSprop,Adam Rescale each dimension of the gradient .

Adam and RMSprop( or AdaGrad) One of the main differences between them is the instantaneous estimation m and v The zero deviation of is corrected .Adam It is well known that good performance can be achieved with a small amount of super parameter tuning .

2.6 AdamW

Loshchilov and Hutter In the adaptive gradient method L2 Inequalities for regularization and weight reduction , And suppose that this inequality limits Adam Performance of . then , They propose to decouple weight decay from learning rate . Experimental results show that AdamW Than Adam( Use momentum to reduce and SGD The gap between ) Better generalization performance , And for the AdamW for , The range of optimal super parameters is wider .

2.7 LARS

LARS yes SGD We have momentum expansion , It can adapt to the learning rate of each layer .LARS Recently, it has attracted much attention in the research field . This is due to the steady growth of available data , Distributed training of machine learning is becoming more and more popular . This makes the batch size start to grow , But it also makes training unstable . There are researchers (Yang et al) It is considered that these instabilities are due to the imbalance between the gradient criterion and the weight criterion of some layers . So they came up with an optimizer , The optimizer is based on 「 trust 」 Parameters η<1 And the inverse norm of the gradient of the layer to readjust the learning rate of each layer .

2.8 FTRL

It is mainly used for CTR Predictive online training , Thousands of dimensions result in a large number of sparse features . It is generally expected that the model parameters will be more sparse , But simple L1 Regular cannot really be sparse , Some gradient truncation methods (TG) The proposal of is to solve this problem , In the Middle East: FTRL It is an online learning method with both precision and sparsity .

FTRL The basic idea of Will be close to 0 The gradient of is set directly to zero , Skip the calculation directly to reduce the amount of calculation .

Here is the pseudocode of the project , The four parameters are adjustable .

Statement of rights : This paper is about CSDN Blogger 「 Entropy of banana fork 」 The original article of , follow CC 4.0 BY-

3. summary

If the data is sparse , Just use the self applicable method , namely Adagrad, Adadelta, RMSprop, Adam.

RMSprop, Adadelta, Adam In many cases the effect is similar .

Adam Is in the RMSprop On the basis of bias-correction and momentum,

As the gradient becomes sparse ,Adam Than RMSprop The effect will be good .

Overall, ,Adam Is the best choice .

Many papers will use SGD, No, momentum etc. .SGD Although it can reach a minimum , But it takes longer than other algorithms , And may be trapped at the saddle point .

If you need faster convergence , Or train deeper and more complex neural networks , An adaptive algorithm is needed .

Ref

https://www.cnblogs.com/guoyaohua/p/8542554.html

https://mp.weixin.qq.com/s/WjwkYzZpBGGKGfmGvwNi4Q

https://cloud.tencent.com/developer/article/1118673

https://zhuanlan.zhihu.com/p/58236906

边栏推荐

- ribbon

- Next. JS + cloud development webify creates an excellent website

- 一文简述:供应链攻击知多少

- ribbon

- Abnova荧光原位杂交(FISH)探针解决方案

- [2021 "shadow seeking" medical artificial intelligence algorithm competition] frequently asked questions related to Ti-One products

- 由浅入深的混合精度训练教程

- Introduction to C language custom types (structure, enumeration, union, bit segment)

- How to create an FTP server on the ECS? Is it safe to create an FTP server on the ECS?

- Facebook内部通告:将重新整合即时通讯功能

猜你喜欢

一款支持内网脱机分享文档的接口测试软件

Weibo International Edition changed its name to Weibo light sharing Edition

线性回归的损失和优化,机器学习预测房价

『渗透基础』Cobalt Strike基础使用入门_Cobalt Strike联动msfconsole

阿里云混合云首席架构师张晓丹:政企混合云技术架构的演进和发展

Abnova荧光原位杂交(FISH)探针解决方案

Doctor application | Hong Kong University of science and Technology (Guangzhou) Mr. Liu Hao recruits the full award doctor / Master in data mining

Training methods after the reform of children's programming course

解析后人类时代类人机器人的优越性

大一下学期期末总结(补充知识漏洞)

随机推荐

大一下学期期末总结(补充知识漏洞)

Web penetration test - 5. Brute force cracking vulnerability - (9) MS-SQL password cracking

由浅入深的混合精度训练教程

How to change the IP address of ECS? What are the precautions for changing the IP address

Worthington弹性蛋白酶的应用和相关研究

[2021 "shadow seeking" medical artificial intelligence algorithm competition] Ti-One product use tutorial

web渗透测试----5、暴力破解漏洞--(8)PostgreSQL密码破解

2022年二级造价工程师备考攻略,你准备好了吗?

How does ECS publish websites? What software tools are needed?

Weak current engineer, 25g Ethernet and 40g Ethernet: which do you choose?

Introduction to the "penetration foundation" cobalt strike Foundation_ Cobalt strike linkage msfconsole

Library management backstage

Real time monitoring: system and application level real-time monitoring based on flow computing Oceanus (Flink)

What is the data center

Mini web framework: adding routes in decorator mode | dark horse programmer

[2021 "shadow seeking" medical artificial intelligence algorithm competition] frequently asked questions related to Ti-One products

阿里云混合云首席架构师张晓丹:政企混合云技术架构的演进和发展

Introduction à la méthode de descente par Gradient - document d'apprentissage automatique pour les programmeurs de chevaux noirs

一文简述:供应链攻击知多少

Popularization of children's programming education in specific scenarios