
A Brief Summary of Recommender Systems Combined with Knowledge Graphs

2022-06-21 05:17:00 【Necther】

This note briefly introduces several recent recommender system (Recommendation System, RS) papers that incorporate Knowledge Graph Embedding (knowledge graph representation learning) or Network Embedding (network representation learning). It starts with a short introduction to recommender systems and then reviews several papers that combine them with knowledge representation.

Introduction to recommender systems

In one sentence: a recommender system analyzes historical data to recommend items that a user may like or purchase. Its core elements are the user (User) and the item (Item). More specifically, a recommender system has three key parts:

- User preference modeling: User Preference

- Item feature modeling: Item Feature

- Interaction: Interaction

RS covers many specific problems. This note focuses on Click-Through-Rate (CTR) prediction: given the list of items a user has clicked or purchased in the past, predict whether the user will click or purchase the current item. User attributes are therefore not considered; in essence, the task is to judge the similarity between the set of historical items and the current new item.

Item modeling is therefore the key. Much recent work in recommender systems integrates structural information to improve performance and interpretability. As for how the structure is modeled, in my understanding existing work falls roughly into two types:

- Combining a knowledge graph (Knowledge Graph)

- Combining a heterogeneous information network (Heterogeneous Information Network)

This note gives a very brief summary of several works that combine recommender systems with knowledge graphs. The series will be extended as time permits.

Papers

CKE

Zhang F, Yuan N J, Lian D, et al. Collaborative knowledge base embedding for recommender systems[C]// KDD, 2016: 353-362.

Problem: recommend a list of items to each user. The evaluation uses top-K ranking metrics.

Overview

Several kinds of external auxiliary information about items are integrated into CF (collaborative filtering), including:

- Structural information: heterogeneous structural information (the knowledge graph), e.g. the triple (actor, acted in, movie).

- Text information: the textual description of the item, such as the plot summary of a movie.

- Image information: pictures of the item, such as a movie poster.

The encoding methods are:

- Structural information: TransE/TransR

- Text information: SDAE (stacked denoising auto-encoder)

- Image information: a stacked convolutional auto-encoder (SCAE)

This yields three embeddings for each item, which are added to the item's CF latent factor to give the final item embedding. The user is represented only by the CF latent factor.

Once the item and user embeddings are obtained, the model is trained with a pair-wise ranking loss. Note also that user/item interaction in CF goes through the rating matrix, and only positive ratings (no less than 3) are used here.
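The fusion and loss described above can be sketched roughly as follows. This is a minimal illustration, not the CKE implementation: the function names, the embedding size and the random vectors are all assumptions, and the loss is a generic logistic pair-wise ranking loss.

```python
import numpy as np

def item_embedding(cf_latent, structural, textual, visual):
    # Final item vector: CF latent factor plus the three auxiliary embeddings.
    return cf_latent + structural + textual + visual

def pairwise_ranking_loss(user, pos_item, neg_item):
    # -log(sigmoid(u.pos - u.neg)): small when the positive item scores higher.
    diff = user @ pos_item - user @ neg_item
    return float(np.log1p(np.exp(-diff)))

rng = np.random.default_rng(0)
dim = 8  # hypothetical embedding size
user = rng.normal(size=dim)  # the user is only a CF latent factor
# Each item gets four random vectors standing in for the learned embeddings.
pos_item = item_embedding(*rng.normal(size=(4, dim)))
neg_item = item_embedding(*rng.normal(size=(4, dim)))
loss = pairwise_ranking_loss(user, pos_item, neg_item)
```

In training, `pos_item` would be an item the user rated positively and `neg_item` a sampled item without a positive rating.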

Comments

- The method is somewhat limited: it needs a lot of additional information alongside the knowledge graph, which is not easy to obtain.

- The fusion is rather crude: it is plain vector addition.

DKN

Wang H, Zhang F, Xie X, et al. Dkn: Deep knowledge-aware network for news recommendation[C]//WWW, 2018: 1835-1844.

This paper focuses on the news recommendation scenario and incorporates KG Embedding into CTR prediction (via pre-training). Given the news a user has clicked in the past, it predicts whether the user will click on a new piece of news. This is essentially equivalent to judging the similarity between the set of historical news and the new news.

The motivation is as follows: entities are extracted from news titles, and the knowledge graph is then used to reach other related news through those entities.

The main parts of the model are as follows.

Multi Channel CNN

Similar to the 3 channels of RGB, three channels serve as the input of the CNN (the text is the news title):

- word embedding: the word embeddings of the title.

- entity embedding: the entity embeddings obtained from KG Embedding. For non-entity words, simply pad with 0.

- entity context embedding: for entity words, the average embedding of the entity's first-order neighbors in the knowledge graph, used as supplementary information. Together these form the three channels of the CNN input.
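As a rough sketch of how the three channels line up, the snippet below stacks them into one tensor. It assumes all three embeddings share the same dimension and uses random vectors; the function name, the sizes and the entity mask are hypothetical.

```python
import numpy as np

def build_cnn_input(word_emb, entity_emb, context_emb):
    # Stack three (n_words, dim) matrices into one (n_words, dim, 3) tensor,
    # analogous to the RGB channels of an image.
    return np.stack([word_emb, entity_emb, context_emb], axis=-1)

rng = np.random.default_rng(0)
n_words, dim = 5, 4  # hypothetical title length and embedding size
word_emb = rng.normal(size=(n_words, dim))

# Hypothetical: only the word at position 1 is linked to a KG entity.
is_entity = np.array([False, True, False, False, False])
entity_emb = np.where(is_entity[:, None], rng.normal(size=(n_words, dim)), 0.0)

# Context channel: mean embedding of the entity's first-order neighbors,
# zeros for non-entity words.
neighbor_embs = rng.normal(size=(3, dim))
context_emb = np.zeros((n_words, dim))
context_emb[1] = neighbor_embs.mean(axis=0)

x = build_cnn_input(word_emb, entity_emb, context_emb)
```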

User-Candidate News Attention

- Attention calculation: concatenate the embedding of each news item clicked by user u with the candidate news embedding and feed the result into a DNN.

- Query vector: the title features of the candidate news.

- Key/Value: the title features of all historical news the user has clicked.

- The weighted sum gives the user's click preference.

- Click prediction (binary classification): the aggregated user embedding is compared with the candidate news embedding; the similarity is again computed by a DNN.
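The attention step above can be sketched as follows. This is a minimal illustration with a single hidden layer and random weights; the layer sizes and parameter names are assumptions, not the configuration used in the paper.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()

def attention_user_embedding(history, candidate, w1, b1, w2):
    # history: (n, d) title features of clicked news; candidate: (d,).
    n = len(history)
    # Concatenate every clicked news vector with the candidate vector.
    pairs = np.concatenate([history, np.tile(candidate, (n, 1))], axis=1)  # (n, 2d)
    hidden = np.maximum(pairs @ w1 + b1, 0.0)  # one ReLU hidden layer
    weights = softmax(hidden @ w2)             # normalized attention weights, (n,)
    return weights @ history, weights          # weighted sum = user embedding

rng = np.random.default_rng(0)
n, d, h = 3, 4, 6  # hypothetical sizes
history = rng.normal(size=(n, d))
candidate = rng.normal(size=d)
w1, b1, w2 = rng.normal(size=(2 * d, h)), np.zeros(h), rng.normal(size=h)
user_emb, weights = attention_user_embedding(history, candidate, w1, b1, w2)
```

The click probability would then come from another DNN applied to `user_emb` and `candidate`, which is omitted here.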

Summary

This paper focuses more on modeling short text. The three-channel CNN is a clever technique that other fields can borrow. In addition, its attention scheme (target item as query, history items as keys) is widely used.

RippleNet

Wang H, Zhang F, Wang J, et al. Ripplenet: Propagating user preferences on the knowledge graph for recommender systems[C]//CIKM, 2018: 417-426.

RippleNet treats the knowledge graph as additional information and integrates it into CTR / Top-K recommendation.

Motivation

Inspired by the propagation of ripples on water, the items a user is interested in serve as seeds, and the preference spreads outward on the item knowledge graph to other items, circle by circle. This process is called preference propagation (Preference Propagation).

The model assumes that items in the outer layers also reflect potential user preferences, so they should be taken into account when characterizing the user, instead of using only the observed items to express the user's preference.

Two approaches to combining KG with RS

- Embedding: build a knowledge graph from items and their attributes, then use KG Embedding to compute item embeddings.

- Path-based: build a heterogeneous information network (HIN) from users, items and attributes, then model it with HIN algorithms (meta-paths).

In general, KG considers only items, while HIN can consider users and items together. The model is briefly introduced below.

Model

- Input: a user u and a candidate item i.

- Output: the probability that the user will click the item.

- Construct the k-hop item sets related to u by expanding outward on the knowledge graph from the user's item set. These items can serve as the user's preference information.

- Using the inner product of embedding vectors, compute the normalized similarity between the candidate item i and the head items of each hop.

- Sum the tail items weighted by these similarities to obtain the output of this hop. (In essence this is attention, with Q = the candidate item i, K = the head items, V = the tail items.)

- Repeat the process k times.

- Add up the output vectors of all k hops as the user's embedding, and take its inner product with the item's embedding to compute the final similarity.
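The propagation steps above can be sketched as follows. This is a simplified illustration that follows the summary literally: similarity is a plain inner product between the candidate and the head entities, relation embeddings are left out, and all names and sizes are assumptions.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()

def ripple_user_embedding(candidate, ripple_sets):
    # ripple_sets: one (heads, tails) pair per hop, each of shape (n_k, d).
    user = np.zeros_like(candidate, dtype=float)
    for heads, tails in ripple_sets:
        weights = softmax(heads @ candidate)  # Q = candidate, K = head items
        user += weights @ tails               # V = tail items, one output per hop
    return user

def click_probability(candidate, ripple_sets):
    # Final score: sigmoid of the inner product of user and item embeddings.
    user = ripple_user_embedding(candidate, ripple_sets)
    return float(1.0 / (1.0 + np.exp(-(user @ candidate))))

rng = np.random.default_rng(0)
d = 4  # hypothetical embedding size
candidate = rng.normal(size=d)
ripple_sets = [(rng.normal(size=(5, d)), rng.normal(size=(5, d))) for _ in range(2)]
prob = click_probability(candidate, ripple_sets)
```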

Summary

The motivation of this paper is very intuitive, which makes it highly interpretable. The model construction is also full of techniques, such as sampling, and has many details worth studying.

The following is a brief introduction to two articles that combine network representation or heterogeneous information networks .

NERM

Zhao W X, Huang J, Wen J R. Learning distributed representations for recommender systems with a network embedding approach[C]//AIRS, 2016: 224-236.

Users, items and attributes are treated as nodes in a graph to build a network. Network embedding is then used to learn node feature vectors, and the similarity between them is used for Top-K recommendation.

Building the graph

Users, items and features are treated as different types of nodes to build the network:

- user-item bipartite network: an edge means the user purchased or otherwise interacted with the item.

- user-item-tag tripartite network: the tag attributes of items are added as nodes as well.

Learning the embeddings

This paper uses the first-order proximity of LINE: for a pair of nodes (u, v), if there is an edge between them, the inner product of their vectors should be large.
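A minimal sketch of this first-order objective is shown below, assuming a logistic form over observed edges. Negative sampling, which a practical implementation would need, is left out, and the function name and toy data are hypothetical.

```python
import numpy as np

def first_order_loss(emb, edges):
    # emb: (n_nodes, d) node vectors; edges: list of (u, v) index pairs.
    u, v = np.array(edges).T
    scores = np.sum(emb[u] * emb[v], axis=1)  # inner product per edge
    # -log(sigmoid(score)): small when connected nodes have a large inner product.
    return float(np.sum(np.log1p(np.exp(-scores))))

edges = [(0, 1)]
aligned = np.array([[1.0, 0.0], [1.0, 0.0]])   # connected nodes point the same way
opposed = np.array([[1.0, 0.0], [-1.0, 0.0]])  # connected nodes point opposite ways
loss_aligned = first_order_loss(aligned, edges)
loss_opposed = first_order_loss(opposed, edges)
```

Minimizing this loss pushes the vectors of connected nodes toward a larger inner product, which is why `loss_aligned` is lower than `loss_opposed`.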

Recommendation

The user, item and attribute embeddings learned above are used directly: similarity is computed by inner product, and the Top-K items are obtained by ranking on similarity.
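The ranking step can be sketched in a few lines. The function name and the toy vectors are assumptions for illustration.

```python
import numpy as np

def top_k_items(user_vec, item_matrix, k):
    # Score every item by inner product with the user vector,
    # then return the indices of the k highest-scoring items.
    scores = item_matrix @ user_vec
    return np.argsort(-scores)[:k]

user_vec = np.array([1.0, 0.0])
item_matrix = np.array([[0.1, 0.0], [0.9, 0.0], [0.5, 0.0]])
recommended = top_k_items(user_vec, item_matrix, k=2)
```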

Overview

This is an exploratory work that relies entirely on Network Embedding for Top-N recommendation. The comparison with other methods is also rather thin.

CDNE

Gao L, Yang H, Wu J, et al. Recommendation with multi-source heterogeneous information[J]. IJCAI 2018.

The problem is the same as in CKE (Zhang et al.): recommend to each user the Top-N items they may be interested in. Auxiliary information is modeled with a Network Embedding method and then combined with CF. (The Network Embedding model essentially follows Tri-Party Deep Network Representation.)

Fusing auxiliary information

To model items better, the following auxiliary information is incorporated:

- the text content of the item (a word sequence)

- the tags of the item (also words)

- the link structure between items

Modeling auxiliary information with DeepWalk / Skip-Gram

- Following skip-gram, which uses a word to predict its context words, the center item is used to predict the words in its text content, and the item's tags are also used to predict those words.

- DeepWalk is used to learn the structural representation (in essence also skip-gram).

This is exactly the same as Tri-Party Deep Network Representation.

In this way the node embeddings, word embeddings and tag embeddings are learned. The node embeddings are used in the CF step below.

Combining with CF

As in CKE, the embedding is added directly to the CF latent vector to form the item's representation vector, while the user's vector is still the CF latent vector. The model is optimized by minimizing the reconstruction error, Min(R-UV), which differs from the pair-wise ranking used in CKE.
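The objective above can be sketched as a masked squared reconstruction error. The squared form, the observation mask and all names here are assumptions for illustration; regularization terms are omitted.

```python
import numpy as np

def reconstruction_loss(R, U, V_latent, item_emb, mask):
    # Item vector = CF latent vector + network embedding of the item.
    V = V_latent + item_emb
    pred = U @ V.T  # predicted rating matrix, shape (n_users, n_items)
    # Squared error counted only on observed entries (mask == 1).
    return float(np.sum(mask * (R - pred) ** 2))

rng = np.random.default_rng(0)
n_users, n_items, d = 3, 4, 2  # hypothetical sizes
U = rng.normal(size=(n_users, d))
V_latent = rng.normal(size=(n_items, d))
item_emb = rng.normal(size=(n_items, d))
mask = np.ones((n_users, n_items))
R = U @ (V_latent + item_emb).T  # ratings that the model fits exactly
loss = reconstruction_loss(R, U, V_latent, item_emb, mask)
```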

Data: two CiteULike datasets. The links between item nodes are not based on paper references; instead, if two articles share more than 4 authors, an edge is added between them.
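A small sketch of this edge construction follows, assuming the rule is read as "two articles with more than 4 authors in common are linked". The function name, the threshold parameter and the toy data are hypothetical.

```python
from itertools import combinations

def coauthor_edges(paper_authors, more_than=4):
    # paper_authors: dict mapping a paper id to a collection of author names.
    edges = []
    for p, q in combinations(sorted(paper_authors), 2):
        shared = set(paper_authors[p]) & set(paper_authors[q])
        if len(shared) > more_than:
            edges.append((p, q))
    return edges

paper_authors = {
    "p1": ["a", "b", "c", "d", "e"],
    "p2": ["a", "b", "c", "d", "e", "f"],  # shares 5 authors with p1
    "p3": ["a", "b", "c", "d"],            # shares only 4 authors with p1 and p2
}
edges = coauthor_edges(paper_authors)
```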

In brief

- The method and motivation are basically the same as CKE, but there is no comparison against CKE.

- It amounts to a combination of CKE and Tri-DNR.

边栏推荐

- 常见的请求方式和请求头参数

- Abnova chicken anti cotton mouse IgG (H & L) secondary antibody (HRP) protocol

- 5.watch方法的实现

- YOLO系列------(二)Loss解析

- What is the event loop in JS?

- Abbexa 一抗、二抗、蛋白质等生物试剂方案

- Seata四大模式之AT模式详解及代码实现

- De duplication according to an attribute in the class

- Z 字变形[规律的形式之一 -> 周期考察]

- Deeply explore the technical characteristics of maker Education

猜你喜欢

Abnova 11 dehydrogenation TXB2 ELISA Kit instructions

Explain with pictures and deeply learn the advanced understanding of anchors in Yolo

Physical principle of space design in maker Education

The golang regular regexp package uses -03- to find matching strings, find matching string positions, and regular grouping (findsubmatch series methods, findsubmatchindex series methods)

基于SSM+MySQL+LayUI+JSP的公共交通运输信息管理系统

单例模式详解

jmeter里面的json提取器和调试器的用法

基于SSM+MySQL+Layui的博客论坛系统

Abnova鸡抗棉鼠 IgG (H&L) 二抗 (HRP) 方案

Shell script iterates through the values in the log file to sum and calculate the average, maximum and minimum values

随机推荐

Which is more valuable, PMP or MBA?

Dayjs get the last day of the first day of the current month the last day of the first day of the current year

Abnova 11-脱氢-TXB2 ELISA 试剂盒说明书

Super classic MySQL common functions, collect them quickly

Seven design principles

猴子都能上手的Unity插件Photon之重要部分(PUN)

Qualcomm LCD bring up process

Steam教育学科融合AR的智能场景

单例模式详解

Improve the comprehensive quality of teachers and students in the concept of maker Education

Glycosylated albumin research - abbexa ELISA kit to help!

[punch in] subarray sum is k

What is the event loop in JS?

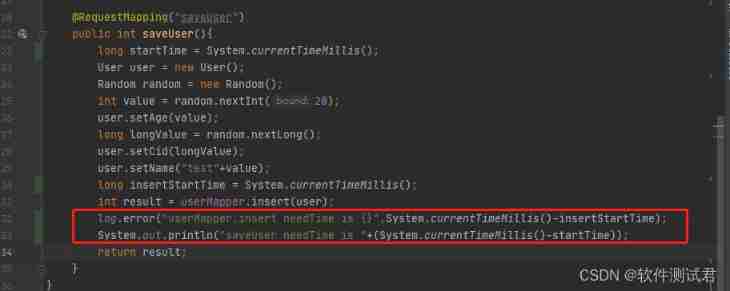

What's the difference between an ordinary tester and an awesome tester? After these two leaps, you can also

Packaging El Pagination

Mybatic framework (II)

How to take anti extortion virus measures for enterprise servers

Welcome the golden age of maker education + Internet

Abbexa 一抗、二抗、蛋白质等生物试剂方案

Physical principle of space design in maker Education