
ClickHouse series: ClickHouse optimization with Block + LSM

2022-06-09 22:25:00 [Import_bigdata]


Part 1:

Block + LSM

The title of this section could just as well be "batch processing + pre-sorting". ClickHouse implements batch processing through its block design and pre-sorting through the LSM algorithm. Let's look at each in turn, and at how the combination affects query speed.

First, let's analyze how ordered versus unordered storage affects query speed. Queries can be roughly divided into two kinds: lookups by value and lookups by range.

Disk accesses for the two kinds of queries

As the table shows, when an index is used, a lookup by value needs roughly one disk I/O whether the data is stored in order or not. Range lookups are different: with ordered storage, all the matching data can be read in a single pass over the disk, whereas with unordered storage the worst case needs n separate disk reads.

A small example illustrates this:

SELECT avg(price) FROM orders WHERE age BETWEEN 20 AND 30;

This query computes the average order amount for users aged 20 to 30. Assume there are 100 million records of about 1 KB each, and that orders from users aged 20 to 30 make up about 10% of them.

If the data is stored ordered by age, the amount of data read is 100 million × 10% × 1 KB ≈ 10 GB.

If the data is not stored ordered by age, the amount of data read is 100 million × 10% × 4 KB × (1 − 27.1%) ≈ 29.2 GB, nearly 3 times as much.
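The two estimates above can be reproduced with a few lines of arithmetic. This is a minimal sketch that only re-derives the article's numbers; the constants (row count, 1 KB rows, 4 KB pages, 10% selectivity, 27.1% hit rate) are the article's assumptions, and decimal units (1 GB = 1,000,000 KB) are assumed.

```python
# Back-of-envelope read volume for:
#   SELECT avg(price) FROM orders WHERE age BETWEEN 20 AND 30;
# All inputs are the article's assumptions, not measurements.
TOTAL_ROWS = 100_000_000   # 100 million orders
ROW_KB = 1                 # ~1 KB per row
PAGE_KB = 4                # OS page size used in the article
SELECTIVITY = 0.10         # ~10% of orders come from users aged 20-30
HIT_RATE = 0.271           # cache hit rate the article assumes at 10%


def ordered_read_gb() -> float:
    """Matching rows are contiguous, so only those rows are read."""
    return TOTAL_ROWS * SELECTIVITY * ROW_KB / 1_000_000


def unordered_read_gb() -> float:
    """Each matching row costs a whole page, unless that page is already cached."""
    return TOTAL_ROWS * SELECTIVITY * PAGE_KB * (1 - HIT_RATE) / 1_000_000


ordered = ordered_read_gb()
unordered = unordered_read_gb()
print(f"ordered:   {ordered:.1f} GB")
print(f"unordered: {unordered:.1f} GB")
print(f"ratio:     {unordered / ordered:.2f}x")
```

The ratio comes out at about 2.92, which is the "nearly 3 times" in the text.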

Overall, then, ordered data has the advantage for queries. That is why ClickHouse sorts data before writing it, to keep queries fast. The price is higher write latency, which is why ClickHouse is not suited to write-heavy, read-light workloads.

Having covered pre-sorting, let's look at how batch processing affects performance. The smallest unit ClickHouse processes is the block, a collection of rows; by default a block holds at most 8192 rows.

Batching after pre-sorting is easy to justify. The data stored in ClickHouse is already ordered, and ClickHouse is designed to process hundreds of millions of records, so a typical range query returns a large amount of data. Processing it one row at a time would multiply the number of disk I/Os. So far, though, batching only reduces the number of I/O operations, not the amount of data read, and the overhead of a single I/O is negligible compared with the time spent actually reading the data. At this point, reading by block looks like an unnecessary optimization. Bear with me: where blocks really save time is the subject of the next paragraph.

Where blocks really pay off is compression! Yes, that's right: plain, unglamorous compression. So how much data does compression actually save? Let's look at data as ClickHouse's storage engine actually stores it. Using the official hits_v1 table, I picked the UserID column and read its data file with the compressor tool that ships with ClickHouse, which shows the size of every block in the file before and after compression.

Skimming the output, the block with the highest compression ratio is 130272 bytes before compression and only 639 bytes after, a ratio of about 203! That is an extreme case, of course, so let's total the block sizes over the whole file. The UserID column is 70991184 bytes before compression and 11596909 bytes after, a compression ratio of about 6.1!
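The ratios follow directly from the byte counts reported by the compressor tool. This small sketch just divides the quoted numbers; nothing is re-measured, and the variable names are illustrative.

```python
# Compression ratios from the compressor output quoted above.
# The byte counts are copied from the article; nothing is re-measured here.
best_block = (130_272, 639)               # (uncompressed, compressed) bytes
userid_column = (70_991_184, 11_596_909)  # whole UserID column file


def ratio(sizes: tuple[int, int]) -> float:
    """Uncompressed size divided by compressed size."""
    uncompressed, compressed = sizes
    return uncompressed / compressed


print(f"best block:    {ratio(best_block):.1f}x")
print(f"UserID column: {ratio(userid_column):.2f}x")
```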

This high compression ratio is really the credit of columnar storage. In a columnar database each column is stored separately, so each data file is far more regular than in a row-oriented database, which makes very high compression ratios possible.
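To make "more regular data compresses better" concrete, the sketch below packs one hypothetical UInt64 column back to back (as a single-column file would) and compresses it twice: once in arrival order and once sorted, as pre-sorting would leave it. It uses Python's zlib purely as a stand-in; ClickHouse's default codec is LZ4, so the absolute sizes will differ, and the column contents are made up.

```python
import random
import struct
import zlib

# Hypothetical column: 100,000 UInt64 user IDs drawn from 1,000 distinct values.
rng = random.Random(42)
user_ids = [rng.randrange(1_000) for _ in range(100_000)]


def column_bytes(values: list[int]) -> bytes:
    """Pack values contiguously as little-endian UInt64, like one column file."""
    return struct.pack(f"<{len(values)}Q", *values)


def compressed_size(values: list[int]) -> int:
    """Size after zlib compression (a stand-in for ClickHouse's LZ4)."""
    return len(zlib.compress(column_bytes(values), 6))


raw = len(column_bytes(user_ids))
unsorted_size = compressed_size(user_ids)
sorted_size = compressed_size(sorted(user_ids))
print(f"raw: {raw} B, unsorted: {unsorted_size} B, sorted: {sorted_size} B")
```

Both layouts shrink because a single column is homogeneous, and the sorted one shrinks far more because identical values end up in long runs.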

This is where the power of batch processing shows: compression shrinks the files by another factor of about 6, which cuts disk I/O time by roughly another factor of 6.

This is the most important optimization in ClickHouse's storage engine. Compared with a columnar database that lacks batching and pre-sorting, it cuts disk read time by about 18 times for a range query covering about 10% of the data. In a database of 10 billion records, a query covering 1% of the data can save about 24 times the disk read time.
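The "about 18 times" figure for the 10% query is roughly the product of the two effects computed earlier. This sketch assumes, as the article implicitly does, that the UserID column's compression ratio is representative of the data being read; the 24x figure for the 1% case depends on a hit rate the article does not state, so it is not reproduced here.

```python
# Combine the two effects using the article's own numbers.
ordered_gb, unordered_gb = 10.0, 29.2           # read volume with / without pre-sorting
compression_ratio = 70_991_184 / 11_596_909     # UserID column, ~6.1x (assumed representative)

sort_gain = unordered_gb / ordered_gb           # ~2.9x from pre-sorting
total_gain = sort_gain * compression_ratio      # ~18x for a 10% range query
print(f"pre-sort: {sort_gain:.2f}x, compression: {compression_ratio:.2f}x, total: {total_gain:.1f}x")
```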

Of course, every architecture has two sides. Saving disk read time also brings the following drawbacks:

It is suited to writing data in large batches; frequent writes hurt write performance.

If a range query covers only a small amount of data, the performance gain is small; with too little data, the design cannot show its full advantage.

Because the block is the smallest processing unit, deleting a single row performs poorly.

Updates perform poorly, especially updates to the columns used for sorting, so ClickHouse is not suitable as a transactional database.

Appendix

Some readers may ask why the unordered case is multiplied by 4K. When the operating system reads from disk, it relies on the principle of data locality and reads whole pages, and the default page size is 4 KB. The getpagesize() function declared in the unistd.h header returns the page size of the current machine; the calculation here uses the default size.
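For readers who want to check their own machine without writing C, Python exposes the same value. A minimal sketch: mmap.PAGESIZE is available on both Unix and Windows; the value is often 4096, but some platforms (for example, Apple Silicon macOS) use larger pages.

```python
import mmap

# Python counterpart of getpagesize() from <unistd.h>.
page_size = mmap.PAGESIZE
print(f"page size on this machine: {page_size} bytes")
```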

The 27.1% in the formula is the cache hit rate, which is determined by r, the fraction of all data that the query needs. In this example, with 4 KB pages and 1 KB records, the relationship between hit rate and data fraction is shown in the figure below:

It is easy to see that the two are negatively correlated.

Part 2:

The LSM algorithm first appeared in an ACM journal in 1991, and the idea has since been widely adopted by data storage systems such as LevelDB, HBase, and Cassandra. LSM has many variants adapted to different scenarios, and ClickHouse also uses it to implement its pre-sorting. This section focuses on how ClickHouse uses LSM, and also touches on how some other systems use it, so readers can see how freely an architecture can adapt the same idea.

When a user calls INSERT to write data into ClickHouse, the data is not necessarily sorted by the sort key; most likely it is unordered. So how do these unordered writes end up stored on disk in order? That is the job of the LSM algorithm.

The core steps of the LSM algorithm:

Before data is written to the storage system, it is first recorded in a log, so that nothing is lost if the system crashes.

After logging, the data is held in memory. When memory reaches its limit, the data is written to disk and its merge level is recorded as 0 (L=0). Files already written to disk are immutable.

Periodically, the files at levels L and L+1 on disk are merged.
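The three steps can be sketched as a toy in-memory model. This is a minimal illustration under simplifying assumptions, not ClickHouse or LevelDB code: MiniLSM is a hypothetical class, "disk files" are plain Python lists, the log is cleared as soon as its rows are flushed, and compaction merges a whole level into the next one with heapq.merge.

```python
import heapq


class MiniLSM:
    """Hypothetical toy LSM store following the three steps above (not ClickHouse code)."""

    def __init__(self, memtable_limit: int = 4) -> None:
        self.wal: list[int] = []        # step 1: append-only log, replayed after a crash
        self.memtable: list[int] = []   # step 2: unsorted in-memory buffer
        self.memtable_limit = memtable_limit
        self.levels: dict[int, list[list[int]]] = {}  # level -> immutable sorted runs

    def insert(self, key: int) -> None:
        self.wal.append(key)            # log first ...
        self.memtable.append(key)       # ... then buffer in memory
        if len(self.memtable) >= self.memtable_limit:
            self.flush()

    def flush(self) -> None:
        """Sort the buffer and write it out as a new level-0 run."""
        if self.memtable:
            self.levels.setdefault(0, []).append(sorted(self.memtable))
            self.memtable = []
            self.wal = []               # flushed rows no longer need the log

    def compact(self, level: int) -> None:
        """Step 3: merge all runs at `level` and `level + 1` into one run at `level + 1`."""
        runs = self.levels.pop(level, []) + self.levels.pop(level + 1, [])
        if runs:
            self.levels[level + 1] = [list(heapq.merge(*runs))]  # streaming k-way merge


db = MiniLSM(memtable_limit=4)
for key in [9, 3, 7, 1, 8, 2, 6, 4]:
    db.insert(key)
print(db.levels)  # {0: [[1, 3, 7, 9], [2, 4, 6, 8]]}
db.compact(0)
print(db.levels)  # {1: [[1, 2, 3, 4, 6, 7, 8, 9]]}
```

Because each run is already sorted, the merge only has to compare the heads of the runs, which is why LSM compaction can stream through files sequentially.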

Let's walk through the whole process with an example.

At T=0, the database is empty.

At T=1, ClickHouse receives 500 INSERT requests, and the 500 rows are unordered. ClickHouse starts the insert: it first writes all 500 insert requests to the log in one go, then sorts them in memory and writes the sorted result to disk. This file has L=0.

At T=2, ClickHouse receives 800 INSERT requests, and the 800 rows are unordered. ClickHouse starts the insert: it first writes all 800 insert requests to the log in one go, then sorts them in memory and writes the sorted result to disk. This file has L=0.

At T=3, ClickHouse starts merging. There are now two L=0 files. Each is ordered internally, but their ranges may overlap (for example, the first batch of 500 rows covers 300-400 and the second batch of 800 rows covers 350-700), so they need to be merged. After ClickHouse merges them in the background, a new L=1 file is produced, and the two L=0 files are marked for deletion.

At T=4, ClickHouse starts cleanup and physically deletes the two files marked for deletion.

At T=5, ClickHouse receives 100 INSERT requests, and the 100 rows are unordered. ClickHouse starts the insert: it first writes all 100 insert requests to the log in one go, then sorts them in memory and writes the sorted result to disk. This file has L=0.

At T=6, ClickHouse starts merging. There is now one L=0 file and one L=1 file on disk. Each is ordered internally, and this time they do not overlap (for example, the L0 file covers 100-200 and the L1 file covers 300-700), so no merge is needed. ClickHouse simply upgrades the L=0 file to L=1 in the background, leaving two non-overlapping L=1 files.

……
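The T=1 to T=6 walkthrough can be replayed in a short simulation. This sketch follows the article's simplified description, not ClickHouse's actual merge-selection logic: the key ranges come from the example, the row contents are made up, logging is omitted, and the rule "merge overlapping parts, relabel a lone L0 part as L1" is an assumption modeled on the narrative. All function names are hypothetical.

```python
import heapq
import random

Part = tuple[int, list[int]]  # (level, sorted keys of one on-disk part)
rng = random.Random(0)


def unordered_batch(lo: int, hi: int, n: int) -> list[int]:
    """Hypothetical INSERT payload: n keys spread over lo..hi, in random order."""
    keys = [lo + i % (hi - lo + 1) for i in range(n)]
    rng.shuffle(keys)
    return keys


def write_insert(batch: list[int]) -> Part:
    """One INSERT (log omitted): sort in memory, write a new level-0 part."""
    return (0, sorted(batch))


def background_merge(parts: list[Part]) -> list[Part]:
    """Merge parts whose key ranges overlap; relabel a lone level-0 part as level 1."""
    clusters: list[list[Part]] = []
    for part in sorted(parts, key=lambda p: p[1][0]):      # sweep by smallest key
        if clusters and part[1][0] <= max(p[1][-1] for p in clusters[-1]):
            clusters[-1].append(part)                       # overlaps the current cluster
        else:
            clusters.append([part])
    merged: list[Part] = []
    for cluster in clusters:
        if len(cluster) == 1:
            level, keys = cluster[0]
            merged.append((max(level, 1), keys))            # no overlap: no rewrite needed
        else:
            level = max(lvl for lvl, _ in cluster) + 1
            merged.append((level, list(heapq.merge(*(keys for _, keys in cluster)))))
    return merged  # the old parts are simply dropped (the T=4 cleanup)


def show(parts: list[Part]) -> None:
    for level, keys in parts:
        print(f"L{level}: keys {keys[0]}..{keys[-1]} ({len(keys)} rows)")


parts = [write_insert(unordered_batch(300, 400, 500))]      # T=1
parts.append(write_insert(unordered_batch(350, 700, 800)))  # T=2
parts = background_merge(parts)                             # T=3 (+ T=4 cleanup)
show(parts)  # L1: keys 300..700 (1300 rows)
parts.append(write_insert(unordered_batch(100, 200, 100)))  # T=5
parts = background_merge(parts)                             # T=6
show(parts)
# L1: keys 100..199 (100 rows)
# L1: keys 300..700 (1300 rows)
```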

That is how ClickHouse applies the LSM algorithm. To summarize: ClickHouse uses LSM to sort unordered data into ordered data in memory, writes the result to disk, and periodically merges overlapping disk files.

Notice that every step above writes to disk sequentially. This is another characteristic of LSM: it converts large numbers of random writes into sequential writes, reducing disk I/O time. LevelDB takes advantage of this property; ClickHouse, of course, does not rely on it. How LevelDB uses LSM is briefly introduced below.

By using LSM for pre-sorting, ClickHouse improves disk utilization, but at some cost. Again, there is no perfect architecture: whenever an architecture solves one problem, it inevitably introduces new ones.

ClickHouse is no exception. As readers already know, ClickHouse creates a separate data file for each INSERT request. Although ClickHouse merges these files at an appropriate time, a query issued before the merge may find its data spread across two data files. In that case ClickHouse returns two lists by default: each list is ordered internally, but they overlap. This is inconvenient for users; the picture below shows the situation.

As you can see, before the merge the query results are split into 4 independent results, each ordered internally but overlapping with the others, so the user has to merge them. After waiting for the merge and querying again, the result is:

Once ClickHouse merges the files, the problem is solved.

How LevelDB uses LSM

LevelDB is a database that allows modifications, so its use of LSM is similar to ClickHouse's; the main difference lies in what happens after the log is written.

After logging, ClickHouse sorts the data directly in memory and writes it to disk. If another write arrives in the meantime, it is handled by a new, separate write process.

LevelDB, by contrast, caches the data in memory after logging, and subsequent operations keep working on that in-memory buffer; only when the memory is full is the data written to disk in one go.

The underlying reason is that the two databases target different scenarios. ClickHouse targets read-heavy, write-light analytics and emphasizes large one-off writes to maximize throughput. LevelDB targets write-heavy, read-light business workloads and emphasizes low latency.
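The difference in what happens after logging can be seen by counting the files each strategy produces for the same stream of small writes. This is a sketch of the two behaviors as the article describes them, with a hypothetical workload and a hypothetical buffer limit; real LevelDB keeps its memtable sorted as a skip list, so sorting at flush time here is a simplification.

```python
# Hypothetical workload: 10 small INSERT batches of 30 rows each, in descending order.
batches = [list(range(i * 30, (i + 1) * 30))[::-1] for i in range(10)]


def clickhouse_style(batches: list[list[int]]) -> list[list[int]]:
    """Per the article: every INSERT is sorted and written as its own file right away."""
    return [sorted(batch) for batch in batches]


def leveldb_style(batches: list[list[int]], memtable_limit: int = 100):
    """Per the article: buffer writes in memory and flush only when the buffer is full."""
    files: list[list[int]] = []
    memtable: list[int] = []
    for batch in batches:
        for row in batch:
            memtable.append(row)
            if len(memtable) >= memtable_limit:
                files.append(sorted(memtable))
                memtable = []
    return files, memtable  # leftover rows stay in memory until the next flush


ch_files = clickhouse_style(batches)
ldb_files, still_in_memory = leveldb_style(batches)
print(len(ch_files), len(ldb_files), len(still_in_memory))  # 10 3 0
```

The ClickHouse-style path makes each batch durable as a file immediately and leaves the consolidation to background merges, while the LevelDB-style path absorbs many small writes in memory and produces fewer, larger files.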

Throughput and latency always pull against each other, and every system makes its own trade-off between them. When I get the chance, I will write an article on the tangled relationship between these two metrics and on how well-known open-source projects balance them.

Other thoughts

Back to the main point. Because their scenarios differ, ClickHouse and LevelDB use LSM differently. This offers a lesson: as architects, we should apply what we learn thoughtfully, understand what the business we are designing for actually requires, and adapt the design to those requirements, rather than blindly assuming that LSM is only for write-heavy, read-light workloads.

That is admittedly hard to do, but fortunately we can stand on the shoulders of those who came before us and study the elegant architectures they designed. With that experience and reflection, we can think more deeply when we face similar problems.

That is why I am writing this series. ClickHouse is a model of engineering design: it invents no new scientific theory, yet it shows that existing theory can push performance to the extreme in a particular area. This engineering pursuit of perfection fascinates me, and I feel I should pass these elegant design ideas on to you. I hope that one day Chinese engineers, too, will bring world-class products to the world. Through the joint efforts of you, me, and many ordinary yet great engineers, that day will surely come. My salute to the ClickHouse R&D team.

If this article is helpful to you , Don't forget it 「 Looking at 」 「 give the thumbs-up 」 「 Collection 」 Third company, hello !

The worst age of the Internet may really come

I am here B Standing University , Big data

We are learning Flink When , What are you learning ?

193 This article beat up Flink, You need to pay attention to this collection

Flink Production environment TOP Problems and optimization , Alibaba Sutra Pavilion YYDS

Flink CDC I can't keep Jesus after I eat !| Flink CDC Online problem small disk point

We are learning Spark When , What are you learning ?

In all Spark Module , I would like to say SparkSQL For the strongest !

Rigid Hive | 4 A summary of the interview on the basis of ten thousand words

Encyclopedia of data governance methodology and practice

Label system under the user portrait construction guide

4 Ten thousand words long text | ClickHouse Basics & practice & Analysis from a full perspective

Another decade of big data starts |《 Hard and rigid series 》 The first edition is over

I wrote about growing up / interview / Articles on career advancement

When we are learning Hive What were you learning when you were ?「 Rigid Hive Sequel 」

边栏推荐

- TL, how do you manage project risks?

- 202206007 Simulation Competition summary

- Oracle paging

- Find My技术|物联网时代,苹果Find My实现真正的智能防丢

- Day6-t1345 & T39 -2022-01-21-not answer by yourself

- 华为设备配置Hub and Spoke

- Bonner visual lens lcf08lk1f

- IAR打开工程编译未响应、卡死 & STM32CubeMX生成工程时出错

- C语言试题163之计算某一天是对应年的第几天,这一年一共多少天;计算两个日期之间相隔的天数。两个日期由键盘输入。

- IAR open project compilation unresponsive, stuck & stm32cubemx error generating project

猜你喜欢

调查显示macOS应用开发者普遍表示产品如何被用户发现是他们最大的挑战

购买内存条前的行动

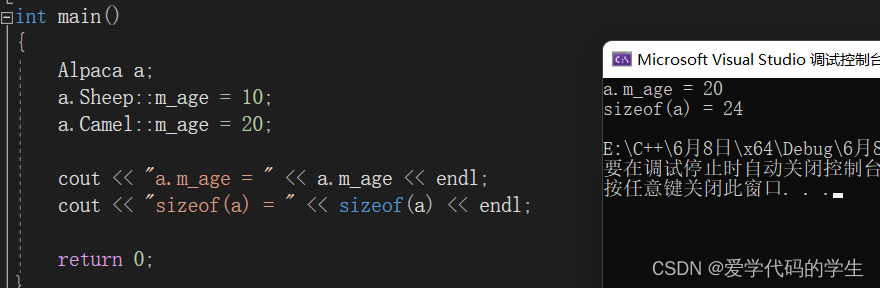

All inherited features

10个常见触发IO瓶颈的高频业务场景

Introduction to startup of spider PI intelligent vision hexapod robot 0602

This book has won the 2022 Beijing college entrance examination composition

Huawei cloud zero code development image compression tool

【报表工具的第二次革命】基于SPL语言优化报表结构、提升报表运算性能

AQUANEE将在近期登陆Gate以及BitMart,低位布局的良机

工业互联网+危化安全生产数字化综合管理云平台

随机推荐

Day5-t2029 & T39 -2022-01-20-not answer by yourself

chez scheme 环境搭建

Function object (functor)

这本书押中了2022北京高考作文题

Industrial Internet + Digital Integrated Management cloud platform for hazardous chemical safety production

C语言试题163之计算某一天是对应年的第几天,这一年一共多少天;计算两个日期之间相隔的天数。两个日期由键盘输入。

SQL advanced processing

St link V2 Download: internal command error & error: flash download failed - target DLL has been canceled

C语言试题170之矩阵转置

健身是什么?

Ble link layer air packet format

稍微复杂的查询

目前28岁,从20年开始北漂闯荡到入职软件测试,我成功捧起15K薪资

TL,你是如何管理项目风险的?

FPN-Feature Pyramid Network

The Little Schemer 中文版

BLE链路层空中包格式

蓝牙协议分析(7)_BLE连接有关的技术分析

The application of prototype object strengthens the function of array object 0526

C语言试题169之谁家孩子跑得最慢