Playwright in Practice: Crawling JS-Encrypted Data

2022-07-29 08:23:00 【Make Jun Huan】

Preface

Playwright is a powerful browser automation library with a Python API. Through a single API it can automate mainstream browsers such as Chromium, Firefox, and WebKit, and it supports both headless and headed modes. The automation Playwright provides is lightweight, capable, reliable, and fast, and it runs on Linux, macOS, and Windows.

One. Installing and using Playwright

1. Installation

- Playwright requires Python 3.7 or later, so make sure your Python version meets this requirement.

Install Playwright with the following commands:

    # Install the playwright library
    pip install playwright

    # Install the browser binaries (this step is a little slow)
    playwright install

2. Recording

- With Playwright you do not have to write a single line of code: just operate the browser by hand, and it records your actions and automatically generates a script from them.

Run the following commands:

    # Show help
    playwright codegen --help

    # Start a Firefox browser and write the recorded actions to script.py
    playwright codegen -o script.py -b firefox

Two. Case implementation

1. Approach

- Use Playwright to open a browser and read the data after JavaScript has rendered it, which sidesteps having to decrypt the JavaScript-encrypted data. As with Selenium, the idea is to simulate a person opening a browser to get the data.

2. Import libraries

The code is as follows (example):

    from playwright.sync_api import sync_playwright
    from lxml import etree
    import pymongo
    # pymongo has its own connection pool and automatic reconnection, but
    # AutoReconnect still has to be caught and the request reissued.
    from pymongo.errors import AutoReconnect
    from retry import retry
    # logging is used to output information
    import logging
    import time

    # Start time
    start = time.time()

    # Log output format
    logging.basicConfig(level=logging.INFO,
                        format='%(asctime)s - %(levelname)s: %(message)s')

    # ASSUMPTION: the original post never shows how `collection` is created.
    # The address, database name, and collection name below are placeholders.
    client = pymongo.MongoClient('mongodb://localhost:27017')
    collection = client['btc']['transactions']
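
The comment above notes that `AutoReconnect` still has to be caught and the request reissued, which is what the `retry` decorator is used for later on. As a rough idea of what such a decorator does, here is a minimal standard-library sketch. `retry_on`, `FlakyError`, and `flaky_save` are hypothetical names made up for illustration; this is not the `retry` package's implementation, and nothing here touches pymongo.

```python
import functools
import time


def retry_on(exc_type, tries=4, delay=1.0):
    """Hypothetical stand-in for a retry decorator: re-run the wrapped
    function when exc_type is raised, up to `tries` attempts in total."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            for attempt in range(1, tries + 1):
                try:
                    return func(*args, **kwargs)
                except exc_type:
                    if attempt == tries:
                        raise  # out of attempts: let the caller see the error
                    time.sleep(delay)
        return wrapper
    return decorator


class FlakyError(Exception):
    """Illustrative exception standing in for a transient connection error."""


attempts = []


@retry_on(FlakyError, tries=4, delay=0)
def flaky_save(doc):
    # Fail on the first two calls, succeed on the third
    attempts.append(doc)
    if len(attempts) < 3:
        raise FlakyError('simulated dropped connection')
    return 'saved'


result = flaky_save({'hash': 'abc'})
```

The design choice worth noting is that the last failure is re-raised instead of swallowed, so a permanently broken connection still surfaces as an error.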

3. Drive the browser to visit the page

- Open the browser and visit https://www.oklink.com/zh-cn/btc/tx-list?limit=100&pageNum=1 to get the page source, then yield that source from a generator for the next step to consume.

The code is as follows (example):

    BTC_URL = 'https://www.oklink.com/zh-cn/btc/tx-list?limit=100&pageNum={pageNum}'


    def run(playwright):
        # Launch the browser in headless mode
        browser = playwright.chromium.launch(headless=True)
        # Open a new page
        page = browser.new_page()
        # Register the response handler once; registering it inside the loop
        # would attach a duplicate handler on every iteration
        page.on('response', on_response)
        for Num in range(1, 3):
            # Visit the URL
            page.goto(BTC_URL.format(pageNum=Num))
            # wait_for_load_state waits until the page reaches a given state;
            # 'networkidle' means the network has gone idle
            page.wait_for_load_state('networkidle')
            # Yield the rendered page source to the caller
            yield page.content()
            # html = page.content()
            # Get_the_data(page.content())
            # print(html)
        browser.close()
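
One detail of the loop is easy to miss: `range(1, 3)` stops before 3, so only pages 1 and 2 are visited. The URL generation on its own can be sketched as below, with `page_urls` as a hypothetical helper name that takes an inclusive page range.

```python
BTC_URL = 'https://www.oklink.com/zh-cn/btc/tx-list?limit=100&pageNum={pageNum}'


def page_urls(first_page, last_page):
    """Yield the list-page URL for every page from first_page to last_page, inclusive."""
    for num in range(first_page, last_page + 1):
        yield BTC_URL.format(pageNum=num)


urls = list(page_urls(1, 2))  # the same two pages that range(1, 3) covers
```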

4. Event handler

- The on_response function checks whether the request to /api/explorer/v1/btc/transactionsNoRestrict returned status 200. If it did, the data returned by that request, that is, the encrypted data, can be read.

The code is as follows (example):

    def on_response(response):
        try:
            # Filter for the target request and check its status
            if '/api/explorer/v1/btc/transactionsNoRestrict' in response.url and response.status == 200:
                logging.info('get status code %s while scraping %s',
                             response.status, response.url)
                # data_set = response.json().get('data').get('hits')
                # for item in data_set:
                #     Transaction_hashing = item.get('hash')
                #     The_block = item.get('blockHeight')
                #     print(Transaction_hashing)
                #     print(The_block)
                # Return the data in JSON format
                return response.json()
            if '/api/explorer/v1/btc/transactionsNoRestrict' in response.url and response.status != 200:
                # Not 200: log the status code and the URL
                logging.error('get invalid status code %s while scraping %s',
                              response.status, response.url)
        except Exception:
            # exc_info=True appends the exception traceback to the log message;
            # when it is left unset, no exception information is added
            logging.error('error occurred while scraping %s',
                          response.url, exc_info=True)
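
A handler registered with `page.on('response', ...)` is called for its side effects, so a value returned from it is not handed back to your own code. One way to keep the JSON is to have the handler append it to a list. The sketch below assumes only that the response object exposes `url`, `status`, and `json()`, as used above. `make_collector` and `FakeResponse` are hypothetical names, the latter a stand-in so the example runs without a browser.

```python
API_PATH = '/api/explorer/v1/btc/transactionsNoRestrict'


def make_collector(bucket):
    """Return a response handler that stores matching JSON payloads in bucket."""
    def handler(response):
        if API_PATH in response.url and response.status == 200:
            bucket.append(response.json())
    return handler


class FakeResponse:
    """Illustrative stand-in exposing the attributes the handler reads."""
    def __init__(self, url, status, payload):
        self.url = url
        self.status = status
        self._payload = payload

    def json(self):
        return self._payload


captured = []
collect = make_collector(captured)
collect(FakeResponse('https://www.oklink.com' + API_PATH, 200, {'data': {'hits': []}}))
collect(FakeResponse('https://www.oklink.com' + API_PATH, 500, {}))   # wrong status
collect(FakeResponse('https://www.oklink.com/other', 200, {}))        # wrong URL
```

With a real page, the same handler would be registered with `page.on('response', make_collector(captured))` before calling `page.goto(...)`, and `captured` read afterwards.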

5. Get the data

- The run() function above already yields the page source, and the data we want is in that source, so XPath is enough to extract it.

The code is as follows (example):

    def Get_the_data(html):
        # Parse the page source
        selector = etree.HTML(html)
        data_set = selector.xpath(
            '//*[@id="root"]/main/div/div[3]/div/div[2]/section/div/div/div/div/table/tbody/tr')[1:]
        for data in data_set:
            Transaction_hashing = data.xpath('td[1]/div/a/text()')[0]
            The_block = data.xpath('td[2]/a/text()')[0]
            Trading_hours = data.xpath('td[3]/div/span/text()')[0]
            The_input = data.xpath('td[4]/span/text()')[0]
            The_output = data.xpath('td[5]/span/text()')[0]
            quantity = data.xpath('td[6]/span/span/text()')[0]
            premium = data.xpath('td[7]/span/span/text()')[0]
            BTC_data = {
                ' Transaction hash ': Transaction_hashing,
                ' Block ': The_block,
                ' Trading hours ': Trading_hours,
                ' Input ': The_input,
                ' Output ': The_output,
                ' Number (BTC)': quantity,
                ' Service Charge (BTC)': premium
            }
            yield BTC_data
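
`xpath()` returns a list, so indexing with `[0]` raises `IndexError` whenever a cell is missing or the page layout changes. A small guard can be sketched as follows. `first_text` is a hypothetical helper that works on any list of strings, so plain lists stand in for XPath results here and the sample values are made up.

```python
def first_text(values, default=None):
    """Return the first item of values with surrounding whitespace removed,
    or default when the list is empty."""
    return values[0].strip() if values else default


block = first_text([' 747000 '])          # a cell that matched
missing = first_text([], default='N/A')   # a cell that matched nothing
```

In `Get_the_data` this would read, for example, `first_text(data.xpath('td[2]/a/text()'))`.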

6. Save the data to MongoDB

The code is as follows (example):

    @retry(AutoReconnect, tries=4, delay=1)
    def save_data(data):
        """Save data to MongoDB.

        The first argument of update_one() is the query filter and the second
        is the update to apply. With upsert=True, a new document is created
        from the filter and the update when nothing matches; when a matching
        document is found, it is updated as usual. This is convenient because
        the collection does not need to be prepared in advance and the same
        code both creates and updates documents.
        """
        # Update the document if it exists, otherwise create it
        collection.update_one({
            # The transaction hash keeps each record unique
            ' Transaction hash ': data.get(' Transaction hash ')
        }, {
            '$set': data
        }, upsert=True)
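
To make the upsert behaviour described in the docstring concrete, here is an in-memory imitation using a plain dict. It only mimics the insert-or-update semantics of `update_one(..., upsert=True)` combined with `$set`. `upsert_by_key` and the sample documents are hypothetical, and nothing here talks to MongoDB.

```python
def upsert_by_key(store, key_field, doc):
    """Insert doc under its key_field value, or merge it into the existing entry."""
    key = doc[key_field]
    if key in store:
        store[key].update(doc)   # matching document found: update its fields
        return 'updated'
    store[key] = dict(doc)       # no match: create a new document
    return 'inserted'


store = {}
first = upsert_by_key(store, 'hash', {'hash': 'abc', 'block': 1})
second = upsert_by_key(store, 'hash', {'hash': 'abc', 'block': 2})
```

Saving the same transaction twice therefore leaves one record holding the latest values, which is why re-running the crawler does not create duplicates.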

7. Calling the functions

The code is as follows (example):

    with sync_playwright() as playwright:
        for html in run(playwright):
            for data in Get_the_data(html):
                logging.info('get detail data %s', data)
                logging.info('saving data to mongodb')
                save_data(data)
                logging.info('data saved successfully')

    # End time
    end = time.time()
    print('Cost time: ', end - start)

8. Run the code

Summary

Compared with existing automated testing tools, Playwright has several advantages, for example:

- Cross-browser: it supports Chromium, Firefox, and WebKit

- Cross-platform: it supports Linux, macOS, and Windows

- It can record your actions and generate code, which saves manual work

At present, its drawback, on mobile in particular, is that the ecosystem and documentation are not yet very complete.

边栏推荐

- Ga-rpn: recommended area network for guiding anchors

- Random lottery turntable wechat applet project source code

- New energy shared charging pile management and operation platform

- Reading papers on false news detection (4): a novel self-learning semi supervised deep learning network to detect fake news on

- 阿里巴巴政委体系-第三章、阿里政委与文化对接

- Hal learning notes - Advanced timer of 7 timer

- 【Transformer】ATS: Adaptive Token Sampling For Efficient Vision Transformers

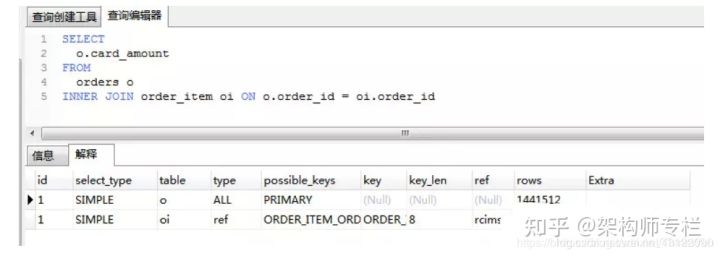

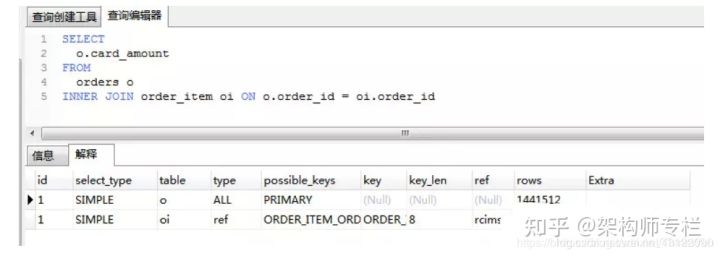

- A problem encountered in SQL interview

- Second week of postgraduate freshman training: convolutional neural network foundation

- Ws2812b color lamp driver based on f407zgt6

猜你喜欢

BiSeNet v2

110道 MySQL面试题及答案 (持续更新)

The computer video pauses and resumes, and the sound suddenly becomes louder

STM32 serial port garbled

Alibaba political commissar system - Chapter 4: political commissars are built on companies

数仓分层设计及数据同步问题,,220728,,,,

Day6: using PHP to write landing pages

STM32 MDK (keil5) contents mismatch error summary

A problem encountered in SQL interview

110 MySQL interview questions and answers (continuously updated)

随机推荐

Week 2: convolutional neural network basics

torch.nn.functional.one_hot()

【OpenCV】-算子(Sobel、Canny、Laplacian)学习

commonjs导入导出与ES6 Modules导入导出简单介绍及使用

User identity identification and account system practice

[beauty of software engineering - column notes] "one question and one answer" issue 3 | 18 common software development problem-solving strategies

Qpalette learning notes

Clion+opencv+aruco+cmake configuration

Inclination sensor accuracy calibration test

Hal learning notes - Advanced timer of 7 timer

Background management system platform of new energy charging pile

Cs4344 domestic substitute for dp4344 192K dual channel 24 bit DA converter

Preparation of SQL judgment statement

[beauty of software engineering - column notes] 29 | automated testing: how to kill bugs in the cradle?

Day6: using PHP to write landing pages

Simple calculator wechat applet project source code

Day5: PHP simple syntax and usage

简易计算器微信小程序项目源码

Charging pile charging technology new energy charging pile development

PostgreSQL手动创建HikariDataSource解决报错Cannot commit when autoCommit is enabled