
Upgrading a log4j project to log4j2

2022-06-13 07:26:00 【Small side good side】

0. Background

Although most Java projects use logback, many open-source projects still use log4j, for example Kafka and ZooKeeper. However, log4j has not been updated since 2012, and security scans of log4j report the following vulnerabilities:

CVE-2020-9488

CVE-2019-17571

For companies with strict security requirements this is not acceptable. The question is how to fix these CVEs without modifying the source code of these open-source middleware projects.

According to the log4j2 website, https://logging.apache.org/log4j/2.x/, log4j and log4j2 are compatible to a certain degree, and both can act as the logging backend behind the SLF4J API, so in theory the switch can be made simply by replacing jar files.

1. Replace the log4j jars with log4j2 jars

Taking ZooKeeper and Kafka as examples, delete these three jars from the lib directory: slf4j-log4j12, slf4j-api and log4j.

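Then add the log4j2 jars (these are the versions used here; note that log4j2 2.13.2 is itself affected by CVE-2021-44228, "Log4Shell", so 2.17.1 or later should be used instead):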

log4j-1.2-api-2.13.2.jar

log4j-api-2.13.2.jar

log4j-core-2.13.2.jar

log4j-slf4j-impl-2.13.2.jar

slf4j-api-1.7.30.jar

Pay special attention to log4j-1.2-api: it must be included, because it provides compatibility with log4j 1.x. A project that uses log4j2 natively would not need it, but in some places Kafka does not log through the SLF4J interface org.slf4j.Logger and instead uses classes from org.apache.log4j directly. Those calls have to be bridged through log4j-1.2-api.

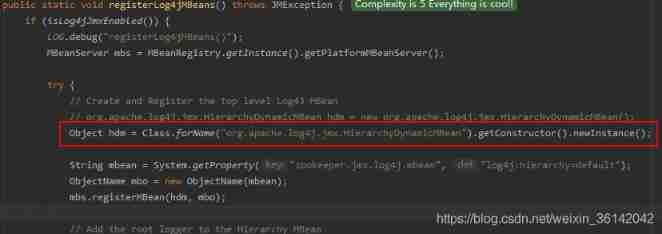
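As a quick way to check the jar swap described above, here is a minimal Java sketch. The class `LibAudit` and its methods are hypothetical (not part of Kafka or ZooKeeper); it takes the file names in a `lib/` directory and reports which log4j 1.x-era jars are still present and which of the jars listed above are missing. It matches on file names only and ignores versions. The pattern for the old log4j jar is written so that it does not accidentally match the `log4j-1.2-api` bridge jar, which also starts with `log4j-1.`.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

/** Hypothetical helper: audit a lib/ directory listing for the log4j -> log4j2 swap. */
public class LibAudit {

    // Old jars to delete: the log4j 1.x core jar (e.g. log4j-1.2.17.jar) and the
    // SLF4J -> log4j 1.x binding. The first pattern does NOT match log4j-1.2-api-*.jar.
    static final List<Pattern> OBSOLETE = List.of(
            Pattern.compile("log4j-1\\.2\\.\\d+\\.jar"),
            Pattern.compile("slf4j-log4j12-.+\\.jar"));

    // Artifact-name prefixes of the jars that must be present after the swap.
    static final List<String> REQUIRED = List.of(
            "log4j-1.2-api-", "log4j-api-", "log4j-core-", "log4j-slf4j-impl-", "slf4j-api-");

    /** Jars that should be removed from lib/. */
    static List<String> obsolete(List<String> jars) {
        List<String> out = new ArrayList<>();
        for (String jar : jars) {
            for (Pattern p : OBSOLETE) {
                if (p.matcher(jar).matches()) {
                    out.add(jar);
                    break;
                }
            }
        }
        return out;
    }

    /** Required artifact prefixes for which no jar was found. */
    static List<String> missing(List<String> jars) {
        List<String> out = new ArrayList<>();
        for (String prefix : REQUIRED) {
            boolean found = jars.stream().anyMatch(j -> j.startsWith(prefix) && j.endsWith(".jar"));
            if (!found) {
                out.add(prefix);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> lib;
        if (args.length > 0) {
            String[] names = new File(args[0]).list(); // null if not a directory
            lib = names == null ? List.of() : List.of(names);
        } else {
            // Illustrative listing halfway through the migration.
            lib = List.of("kafka-clients-2.5.0.jar", "log4j-1.2.17.jar", "slf4j-log4j12-1.7.30.jar",
                    "slf4j-api-1.7.30.jar", "log4j-1.2-api-2.13.2.jar", "log4j-api-2.13.2.jar");
        }
        // For the illustrative listing this prints:
        // remove:  [log4j-1.2.17.jar, slf4j-log4j12-1.7.30.jar]
        // missing: [log4j-core-, log4j-slf4j-impl-]
        System.out.println("remove:  " + obsolete(lib));
        System.out.println("missing: " + missing(lib));
    }
}
```

Pass a real directory as the first argument, e.g. `java LibAudit /path/to/kafka/libs`; without an argument it audits the built-in example listing.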

Kafka now runs without problems, but ZooKeeper reports that a class cannot be found: org.apache.log4j.jmx.HierarchyDynamicMBean. Does swapping log4j for log4j2 simply not work for ZK?

A closer look at the ZK source shows that this class is only needed when JMX is enabled, and that it is loaded by name through the class loader rather than imported directly.

ZK also has a switch that turns this JMX integration off: if we disable it in the ZK startup parameters, the class is never loaded. Setting zookeeper.jmx.log4j.disable to true is enough.
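The reasoning above (the class is looked up by name, and a switch skips the lookup) can be illustrated with a small Java sketch. This is a hypothetical simplification of the pattern, not ZooKeeper's actual code; only the property name `zookeeper.jmx.log4j.disable` and the class name `org.apache.log4j.jmx.HierarchyDynamicMBean` come from the text, and the class `Log4jJmxSketch` is illustrative.

```java
/**
 * Hypothetical sketch (not ZooKeeper's source) of reflective loading guarded by a
 * system property. Because the class is referenced only as a string, this compiles
 * without log4j 1.x on the classpath and can only fail at runtime.
 */
public class Log4jJmxSketch {

    static final String DISABLE_PROP = "zookeeper.jmx.log4j.disable";
    static final String MBEAN_CLASS = "org.apache.log4j.jmx.HierarchyDynamicMBean";

    /** Returns a short status string describing what happened. */
    static String tryRegisterLog4jMBean() {
        // Boolean.getBoolean is true only if the system property equals "true" (ignoring case).
        if (Boolean.getBoolean(DISABLE_PROP)) {
            return "skipped"; // the class is never looked up
        }
        try {
            Class<?> cls = Class.forName(MBEAN_CLASS);
            // A real implementation would instantiate it and register it with an MBeanServer.
            return "loaded " + cls.getName();
        } catch (ClassNotFoundException e) {
            // The situation after replacing log4j 1.x with log4j2 jars.
            return "missing " + MBEAN_CLASS;
        }
    }

    public static void main(String[] args) {
        // With no log4j 1.x JMX classes on the classpath, the first call reports "missing ...".
        System.out.println(tryRegisterLog4jMBean());
        System.setProperty(DISABLE_PROP, "true");
        System.out.println(tryRegisterLog4jMBean()); // skipped
    }
}
```

The design point is that nothing else in the process depends on the class at compile time, which is why ZooKeeper started fine otherwise and only this code path broke; turning the switch off removes the lookup entirely.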

2. Modify the log4j configuration

After the jars were replaced, the Kafka and ZooKeeper processes ran normally, but after running for a long time a very strange symptom appeared: the last line of the log file often contained only half of its content, that is, the log was truncated.

The log4j2 website has a section on compatibility with log4j 1.x:

Apart from API compatibility, the log4j 1.x configuration format is also supported. The catch is the adjective "experimental". Experimental support means a log4j 1.x configuration cannot necessarily be converted completely to log4j2, and it is hard to say how far that support goes.

So the simple fix is to rewrite the configuration file in log4j2's own format; after that, the truncated-log problem did not occur again. For example, Kafka's log4j.properties becomes:

```properties
status = error
dest = err
name = PropertiesConfig

property.filename = d:/kafka/log/server.log
property.stateChange.filename = d:/kafka/log/state-change.log
property.request.filename = d:/kafka/log/kafka-request.log
property.cleaner.filename = d:/kafka/log/log-cleaner.log
property.controller.filename = d:/kafka/log/controller.log
property.authorizer.filename = d:/kafka/log/kafka-authorizer.log
property.sasl.filename = d:/kafka/log/kafka-sasl.log

filter.threshold.type = ThresholdFilter
filter.threshold.level = debug

appender.console.type = Console
appender.console.name = STDOUT
appender.console.layout.type = PatternLayout
appender.console.layout.pattern = %m%n
appender.console.filter.threshold.type = ThresholdFilter
appender.console.filter.threshold.level = error

appender.rolling.type = RollingFile
appender.rolling.name = RollingFile
appender.rolling.fileName = ${filename}
appender.rolling.filePattern = d:/kafka/log/server-%d{yyyy-MM-dd}-%i.log.gz
appender.rolling.layout.type = PatternLayout
appender.rolling.layout.pattern = %d %p %C{1.} [%t] %m%n
appender.rolling.policies.type = Policies
appender.rolling.policies.time.type = TimeBasedTriggeringPolicy
appender.rolling.policies.time.interval = 30
appender.rolling.policies.time.modulate = true
appender.rolling.policies.size.type = SizeBasedTriggeringPolicy
appender.rolling.policies.size.size=10MB
appender.rolling.strategy.type = DefaultRolloverStrategy
appender.rolling.strategy.max = 30

appender.stateChange.type = RollingFile
appender.stateChange.name = stateChangeFile
appender.stateChange.fileName = ${stateChange.filename}
appender.stateChange.filePattern = d:/kafka/log/state-change-%d{yyyy-MM-dd}-%i.log.gz
appender.stateChange.layout.type = PatternLayout
appender.stateChange.layout.pattern = %d %p %C{1.} [%t] %m%n
appender.stateChange.policies.type = Policies
appender.stateChange.policies.time.type = TimeBasedTriggeringPolicy
appender.stateChange.policies.time.interval = 30
appender.stateChange.policies.time.modulate = true
appender.stateChange.policies.size.type = SizeBasedTriggeringPolicy
appender.stateChange.policies.size.size=10MB
appender.stateChange.strategy.type = DefaultRolloverStrategy
appender.stateChange.strategy.max = 10

appender.request.type = RollingFile
appender.request.name = requestRollingFile
appender.request.fileName = ${request.filename}
appender.request.filePattern = d:/kafka/log/kafka-request-%d{yyyy-MM-dd}-%i.log.gz
appender.request.layout.type = PatternLayout
appender.request.layout.pattern = %d %p %C{1.} [%t] %m%n
appender.request.policies.type = Policies
appender.request.policies.time.type = TimeBasedTriggeringPolicy
appender.request.policies.time.interval = 30
appender.request.policies.time.modulate = true
appender.request.policies.size.type = SizeBasedTriggeringPolicy
appender.request.policies.size.size=10MB
appender.request.strategy.type = DefaultRolloverStrategy
appender.request.strategy.max = 10

appender.cleaner.type = RollingFile
appender.cleaner.name = cleanerRollingFile
appender.cleaner.fileName = ${cleaner.filename}
appender.cleaner.filePattern = d:/kafka/log/log-cleaner-%d{yyyy-MM-dd}-%i.log.gz
appender.cleaner.layout.type = PatternLayout
appender.cleaner.layout.pattern = %d %p %C{1.} [%t] %m%n
appender.cleaner.policies.type = Policies
appender.cleaner.policies.time.type = TimeBasedTriggeringPolicy
appender.cleaner.policies.time.interval = 30
appender.cleaner.policies.time.modulate = true
appender.cleaner.policies.size.type = SizeBasedTriggeringPolicy
appender.cleaner.policies.size.size=10MB
appender.cleaner.strategy.type = DefaultRolloverStrategy
appender.cleaner.strategy.max = 10

appender.controller.type = RollingFile
appender.controller.name = controllerRollingFile
appender.controller.fileName = ${controller.filename}
appender.controller.filePattern = d:/kafka/log/controller-%d{yyyy-MM-dd}-%i.log.gz
appender.controller.layout.type = PatternLayout
appender.controller.layout.pattern = %d %p %C{1.} [%t] %m%n
appender.controller.policies.type = Policies
appender.controller.policies.time.type = TimeBasedTriggeringPolicy
appender.controller.policies.time.interval = 30
appender.controller.policies.time.modulate = true
appender.controller.policies.size.type = SizeBasedTriggeringPolicy
appender.controller.policies.size.size=10MB
appender.controller.strategy.type = DefaultRolloverStrategy
appender.controller.strategy.max = 10

appender.authorizer.type = RollingFile
appender.authorizer.name = authorizerRollingFile
appender.authorizer.fileName = ${authorizer.filename}
appender.authorizer.filePattern = d:/kafka/log/kafka-authorizer-%d{yyyy-MM-dd}-%i.log.gz
appender.authorizer.layout.type = PatternLayout
appender.authorizer.layout.pattern = %d %p %C{1.} [%t] %m%n
appender.authorizer.policies.type = Policies
appender.authorizer.policies.time.type = TimeBasedTriggeringPolicy
appender.authorizer.policies.time.interval = 30
appender.authorizer.policies.time.modulate = true
appender.authorizer.policies.size.type = SizeBasedTriggeringPolicy
appender.authorizer.policies.size.size=10MB
appender.authorizer.strategy.type = DefaultRolloverStrategy
appender.authorizer.strategy.max = 5

appender.sasl.type = RollingFile
appender.sasl.name = saslRollingFile
appender.sasl.fileName = ${sasl.filename}
appender.sasl.filePattern = d:/kafka/log/kafka-sasl-%d{yyyy-MM-dd}-%i.log.gz
appender.sasl.layout.type = PatternLayout
appender.sasl.layout.pattern = %d %p %C{1.} [%t] %m%n
appender.sasl.policies.type = Policies
appender.sasl.policies.time.type = TimeBasedTriggeringPolicy
appender.sasl.policies.time.interval = 30
appender.sasl.policies.time.modulate = true
appender.sasl.policies.size.type = SizeBasedTriggeringPolicy
appender.sasl.policies.size.size=10MB
appender.sasl.strategy.type = DefaultRolloverStrategy
appender.sasl.strategy.max = 5

logger.apachekafka.name=org.apache.kafka
logger.apachekafka.level=info
logger.apachekafka.additivity = true
logger.apachekafka.appenderRef.rolling.ref = RollingFile

logger.request.name=kafka.network
logger.request.level=info
logger.request.additivity = true
logger.request.appenderRef.rolling.ref=requestRollingFile

logger.controller.name=kafka.controller
logger.controller.level=TRACE
logger.controller.additivity = true
logger.controller.appenderRef.rolling.ref=controllerRollingFile

logger.cleaner.name=kafka.log.LogCleaner
logger.cleaner.level=info
logger.cleaner.additivity = true
logger.cleaner.appenderRef.rolling.ref=cleanerRollingFile

logger.state.name=state.change.logger
logger.state.level=info
logger.state.additivity = true
logger.state.appenderRef.rolling.ref=stateChangeFile

logger.authorizer.name=kafka.authorizer.logger
logger.authorizer.level=info
logger.authorizer.additivity = true
logger.authorizer.appenderRef.rolling.ref=authorizerRollingFile

logger.kafka.name=kafka
logger.kafka.level=info
logger.kafka.additivity = true
logger.kafka.appenderRef.rolling.ref=RollingFile

rootLogger.level = info
rootLogger.appenderRef.rolling.ref=STDOUT
```
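In the configuration above, each `property.<name>` line defines a variable that appender settings reference as `${<name>}`, for example `appender.rolling.fileName = ${filename}`. The Java sketch below is a deliberately simplified, hypothetical model of that substitution built only on `java.util.Properties`; the class `PlaceholderDemo` is illustrative. log4j2's real substitution also supports lookups such as `${sys:...}` and default values, which this sketch does not handle (it leaves any reference containing `:` or any unknown name untouched).

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Properties;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical, simplified model of property.<name> -> ${<name>} substitution. */
public class PlaceholderDemo {

    // Matches ${name}; names containing ':' (log4j2 lookups like ${sys:x}) are not matched.
    private static final Pattern REF = Pattern.compile("\\$\\{([^}:]+)\\}");

    /** Returns the value of key with ${name} replaced by property.name, or null if key is absent. */
    static String resolve(Properties config, String key) {
        String raw = config.getProperty(key);
        if (raw == null) {
            return null;
        }
        Matcher m = REF.matcher(raw);
        StringBuilder sb = new StringBuilder();
        while (m.find()) {
            String value = config.getProperty("property." + m.group(1));
            // Unknown references are kept verbatim.
            m.appendReplacement(sb, Matcher.quoteReplacement(value != null ? value : m.group()));
        }
        m.appendTail(sb);
        return sb.toString();
    }

    public static void main(String[] args) throws IOException {
        String excerpt = String.join("\n",
                "property.filename = d:/kafka/log/server.log",
                "property.stateChange.filename = d:/kafka/log/state-change.log",
                "appender.rolling.fileName = ${filename}",
                "appender.stateChange.fileName = ${stateChange.filename}");
        Properties config = new Properties();
        config.load(new StringReader(excerpt));
        System.out.println(resolve(config, "appender.rolling.fileName"));     // d:/kafka/log/server.log
        System.out.println(resolve(config, "appender.stateChange.fileName")); // d:/kafka/log/state-change.log
    }
}
```

Keeping every path in a `property.*` line, as the configuration above does, means moving the log directory only requires editing the first block rather than every appender.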

3. Modify the startup scripts

log4j and log4j2 locate their configuration files differently, so the Kafka and ZK startup scripts must be modified.

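For Kafka, modify the log configuration file setting in kafka-server-start.sh, switching the system property from log4j 1.x's log4j.configuration to log4j2's log4j.configurationFile.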

For ZK, modify zkServer.sh and replace $QuorumPeerMain with:

```sh
-Dlog4j.configurationFile=${zkpath}/zookeeper/conf/log4j.properties -Dzookeeper.jmx.log4j.disable=true $QuorumPeerMain
```
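log4j 1.x reads the location of its configuration file from the `log4j.configuration` system property, while log4j2's native property is `log4j.configurationFile`, which is why the startup scripts need to change. When checking that an edited script actually passes the new flag through to the JVM, a small Java diagnostic like the one below can help. It is a hypothetical sketch: only the two property names are real, and the class name `LogConfigProbe` is illustrative.

```java
/**
 * Hypothetical diagnostic: report which logging-config system property the JVM
 * was started with. Only the property names are real; the class is illustrative.
 */
public class LogConfigProbe {

    static final String LOG4J2_PROP = "log4j.configurationFile"; // log4j2 native property
    static final String LOG4J1_PROP = "log4j.configuration";     // log4j 1.x property

    static String describe() {
        String v2 = System.getProperty(LOG4J2_PROP);
        if (v2 != null) {
            return "log4j2 config file: " + v2;
        }
        String v1 = System.getProperty(LOG4J1_PROP);
        if (v1 != null) {
            return "only the log4j 1.x property is set: " + v1;
        }
        return "no config-file property set";
    }

    public static void main(String[] args) {
        System.out.println(describe());
    }
}
```

For example, `java -Dlog4j.configurationFile=/opt/zookeeper/conf/log4j.properties LogConfigProbe` (the path is a placeholder) prints `log4j2 config file: /opt/zookeeper/conf/log4j.properties`.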
