
Install Kubernetes 1.24

2022-06-13 10:17:00 【Etaon】

This article is based on Wangshusen's walkthrough "Read it all at once: (possibly) the newest Kubernetes 1.24.1 cluster deployment practice on the web". Special thanks to https://space.bilibili.com/479602299.

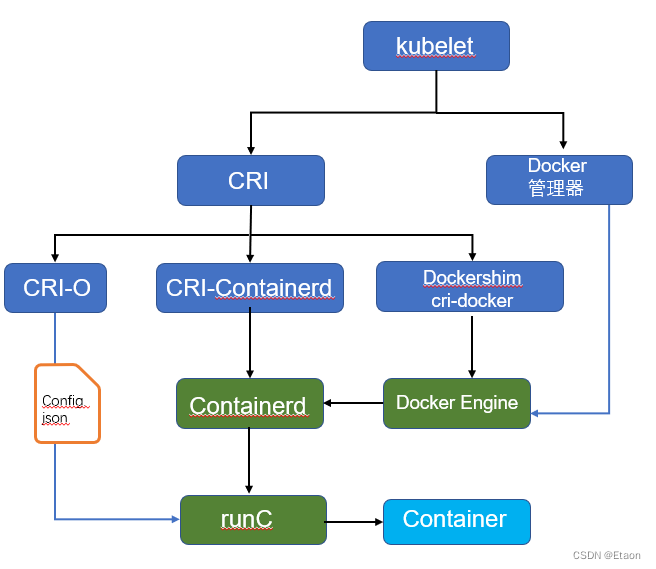

Kubernetes container runtime evolution

In the early Kubernetes runtime architecture things were simple: the kubelet created a container by calling the Docker daemon directly, and the Docker daemon ran it with its own libcontainer.

Major vendors felt that the runtime standard should not be controlled by a single company, Docker, so they jointly founded the Open Container Initiative (OCI). Docker then repackaged libcontainer as runC and donated it as the OCI reference implementation.

The OCI (Open Container Initiative) specifies two things:

- What a container image looks like, i.e. the ImageSpec. Roughly: a compressed bundle in which specific files are laid out in a specific structure;

- Which operations a container must accept and how they behave, i.e. the RuntimeSpec. Roughly: a "container" must support operations such as "state", "create", "start", "kill" and "delete", and their behavior is standardized.

runC, the reference implementation, can run a container image according to the standard. The benefit of a standard is that it makes innovation easy. As long as I comply with it, other tools in the ecosystem can work with me (admittedly OCI itself is not very mature, and real projects still need some adapters). My images can be built with any tool, and my "containers" do not have to be isolated with namespaces and cgroups. This lets various virtualization-based containers take part in implementing containers.

Next came rkt (launched by CoreOS, similar to Docker). It wanted a slice of Docker's market and asked Kubernetes to support rkt natively as a runtime, and the PR was actually merged. However, the integration left many pitfalls in Kubernetes' runtime code.

Then Kubernetes 1.5 introduced the CRI (Container Runtime Interface) mechanism. Kubernetes' message was: if you want to be a runtime, fine, just implement this interface. Kubernetes had turned the tables.

At that time, however, Kubernetes was not yet the undisputed leader, so container runtimes could not be expected to tie themselves to Kubernetes and offer only the CRI interface. Hence the idea of a shim: a shim acts as an adapter that maps a container runtime's own interface onto Kubernetes' CRI, as shown in the figure below for dockershim.

Meanwhile Docker wanted to push Swarm into the PaaS market, so it split up its architecture. All container operations moved into a separate daemon, containerd, while the Docker daemon handled higher-level packaging and orchestration. Unfortunately, Swarm was crushed by Kubernetes.

Afterwards, Docker donated the containerd project to the CNCF and went back to focusing on Docker Enterprise Edition.

The Docker + containerd runtime stack is somewhat complex, so Kubernetes adopted containerd directly as its runtime. Besides Kubernetes, containerd also serves other schedulers such as Swarm, so it would not implement CRI directly. That adaptation work was once again handed to a shim.

In containerd 1.0, CRI was handled by a separate process, CRI-containerd;

containerd 1.1 is cleaner: it drops the CRI-containerd process and moves the adaptation logic into the main containerd process as a plugin.

Before containerd did all this, however, the community already had a more focused CRI runtime: CRI-O. It is very lean, compatible with both CRI and OCI, and dedicated to Kubernetes.

Here conmon plays the role of containerd-shim; the idea is the same.

CRI-O and calling containerd directly are both much simpler than the default dockershim, but for a long time they lacked proven production deployments. Kubernetes 1.24 finally removed native Docker support (dockershim), so production environments will increasingly adopt containerd.

Kubernetes 1.24 installation preparation

Overview

As the history above shows, there are several ways to plug in a runtime. The approach where the kubelet calls Docker directly (through dockershim) is no longer supported as of 1.24.

- Cluster creation method 1: containerd

  By default, Kubernetes uses containerd when creating a cluster.

- Cluster creation method 2: Docker

  Docker is very widely used. Kubernetes 1.24 removed the kubelet's built-in Docker support, but a cluster can still be created using the cri-dockerd plugin maintained by Mirantis.

- Cluster creation method 3: CRI-O

  CRI-O is the most direct way for Kubernetes to create containers; creating a cluster this way requires the cri-o plugin.

Note: the latter two methods require changing the kubelet's startup parameters.

The implementation steps follow.

We use Ubuntu 20.04 as the host OS. First, set up the apt sources:

# Aliyun mirror

deb http://mirrors.aliyun.com/ubuntu/ focal main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ focal main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ focal-security main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ focal-security main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ focal-updates main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ focal-updates main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ focal-proposed main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ focal-proposed main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ focal-backports main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ focal-backports main restricted universe multiverse

# Tsinghua mirror

# Source-code (deb-src) entries are commented out by default to speed up apt update; uncomment them if needed

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-updates main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-updates main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-backports main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-backports main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-security main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-security main restricted universe multiverse

apt update
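The lists above only show the file contents. The sketch below generates the Aliyun entries into a file, so you can try it on a scratch file before overwriting /etc/apt/sources.list. It assumes Ubuntu 20.04 ("focal"), and the function name write_aliyun_sources and its parameters are hypothetical.

```shell
# Hypothetical helper: writes deb/deb-src lines for the Aliyun Ubuntu mirror.
# $1 = target file, $2 = release codename (defaults to focal, i.e. Ubuntu 20.04).
write_aliyun_sources() {
  local target="$1" codename="${2:-focal}"
  local mirror="http://mirrors.aliyun.com/ubuntu/"
  local components="main restricted universe multiverse"
  local suite
  : > "$target"   # create or truncate the target file
  for suite in "$codename" "$codename-security" "$codename-updates" \
               "$codename-proposed" "$codename-backports"; do
    echo "deb $mirror $suite $components" >> "$target"
    echo "deb-src $mirror $suite $components" >> "$target"
  done
}

# Example (as root, after backing up the original):
#   cp /etc/apt/sources.list /etc/apt/sources.list.bak
#   write_aliyun_sources /etc/apt/sources.list focal && apt update
```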

Prerequisites

The official Kubernetes documentation lists the environment requirements:

Before you begin

- A compatible Linux host. The Kubernetes project provides generic instructions for Linux distributions based on Debian and Red Hat, and for distributions without a package manager.

- 2 GB or more of RAM per machine (any less leaves little room for your applications).

- 2 CPUs or more.

- Full network connectivity between all machines in the cluster (a public or private network is fine).

- A unique hostname, MAC address, and product_uuid for every node. See here for more details.

- Certain ports open on your machines. See here for more details. The key one is port 6443; the following command checks it:

nc 127.0.0.1 6443
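The RAM and CPU minimums above can be checked before running kubeadm. The sketch assumes that MemTotal from /proc/meminfo is a fair stand-in for "RAM", although it is usually a little below the installed amount. check_node_resources is a hypothetical helper, and its arguments exist only so it can be tested with sample data.

```shell
# Hypothetical pre-flight helper: warns if the node has < 2 GB RAM or < 2 CPUs.
# $1 = meminfo file (default /proc/meminfo), $2 = CPU count (default: nproc).
check_node_resources() {
  local meminfo="${1:-/proc/meminfo}" cpus="${2:-$(nproc)}"
  local mem_kb ok=0
  mem_kb=$(awk '/^MemTotal:/ {print $2}' "$meminfo")   # value is in kB
  if [ "${mem_kb:-0}" -lt $((2 * 1024 * 1024)) ]; then
    echo "WARN: MemTotal ${mem_kb:-unknown} kB is below 2 GB"
    ok=1
  fi
  if [ "$cpus" -lt 2 ]; then
    echo "WARN: only $cpus CPU(s), 2 or more required"
    ok=1
  fi
  return "$ok"   # 0 = both minimums met
}
```

kubeadm init also runs its own pre-flight checks (the `[preflight]` lines in its output), so this is only an early warning. For the uniqueness requirement, the Kubernetes documentation suggests comparing `ip link` (MAC addresses) and `sudo cat /sys/class/dmi/id/product_uuid` across nodes.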

Swap disabled. You MUST disable swap for the kubelet to work properly.
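`swapoff -a` only lasts until the next reboot. The sketch below also comments out swap entries in fstab. It assumes the usual whitespace-separated fstab layout, with the filesystem type in the third field. The helper names are hypothetical, and the fstab path is a parameter so you can preview the edit on a copy (sed keeps a `.bak` backup).

```shell
# Hypothetical helper: prefix "#" to every uncommented fstab line whose type is swap.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  sed -E -i.bak \
    's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' \
    "$fstab"
}

# Hypothetical wrapper (needs root): disable swap now and after reboot.
disable_swap() {
  swapoff -a
  comment_out_swap /etc/fstab
}
```

After running `disable_swap` as root, `swapon --show` should print nothing.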

Letting iptables see bridged traffic

- Make sure the `br_netfilter` module is loaded. You can check with `lsmod | grep br_netfilter`. To load it explicitly, run `sudo modprobe br_netfilter`.

- For iptables on your Linux nodes to see bridged traffic correctly, make sure `net.bridge.bridge-nf-call-iptables` is set to 1 in your `sysctl` configuration, for example:

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF

sudo modprobe overlay
sudo modprobe br_netfilter

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

sudo sysctl --system

Check br_netfilter:
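Besides inspecting the module with modinfo, you can read back the values written by `sysctl --system` from /proc/sys. check_k8s_sysctls is a hypothetical helper, and its optional root-directory argument exists only so it can be exercised against a fake tree.

```shell
# Hypothetical helper: verify the three sysctls Kubernetes networking relies on are 1.
check_k8s_sysctls() {
  local root="${1:-/proc/sys}" key path val rc=0
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    path="$root/$(printf '%s' "$key" | tr . /)"   # net.ipv4.ip_forward -> net/ipv4/ip_forward
    if [ ! -r "$path" ]; then
      echo "MISSING $key (is br_netfilter loaded?)"
      rc=1
      continue
    fi
    val=$(cat "$path")
    if [ "$val" = "1" ]; then echo "OK $key = 1"; else echo "BAD $key = $val"; rc=1; fi
  done
  return "$rc"
}
```

The same information is available from `sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward`.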

root@host:~# modinfo br_netfilter
filename: /lib/modules/5.4.0-113-generic/kernel/net/bridge/br_netfilter.ko
description: Linux ethernet netfilter firewall bridge
author: Bart De Schuymer
author: Lennert Buytenhek
license: GPL
srcversion: C662270B33245DF63170D07
depends: bridge
retpoline: Y
intree: Y
name: br_netfilter
vermagic: 5.4.0-113-generic SMP mod_unload modversions
sig_id: PKCS#7
signer: Build time autogenerated kernel key
sig_key: 6E:D3:96:DC:0A:DB:28:8D:E2:D1:37:5C:EA:E7:55:AD:E5:7E:DD:AA
sig_hashalgo: sha512
signature: 72:D5:E8:E3:90:FC:1D:A6:EC:C9:21:0D:37:81:F9:20:7C:C6:29:85:
......

Software deployment

Add the Kubernetes package source

curl -s https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -
echo "deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list
apt update

Check the available kubeadm versions:

root@host:~# apt-cache madison kubeadm | head
   kubeadm | 1.24.1-00 | https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 Packages
   kubeadm | 1.24.0-00 | https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 Packages
   kubeadm | 1.23.7-00 | https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 Packages
   kubeadm | 1.23.6-00 | https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 Packages
   kubeadm | 1.23.5-00 | https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 Packages
   kubeadm | 1.23.4-00 | https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 Packages
   kubeadm | 1.23.3-00 | https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 Packages
   kubeadm | 1.23.2-00 | https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 Packages
   kubeadm | 1.23.1-00 | https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 Packages
   kubeadm | 1.23.0-00 | https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial/main amd64 Packages

Install the software:
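Instead of typing the version string by hand, you can take the newest patch release of a minor version from the madison output. The sketch assumes madison lists versions newest-first, as it does above, and latest_patch_version is a hypothetical helper that reads madison output on stdin.

```shell
# Hypothetical helper: print the first (newest) version in madison output that
# starts with "<minor>.", e.g. "1.24" -> "1.24.1-00".
latest_patch_version() {
  local minor="$1"
  awk -F'|' -v prefix="$minor." '
    { gsub(/[ \t]+/, "", $2) }                 # field 2 holds the version string
    index($2, prefix) == 1 { print $2; exit }  # first match is the newest
  '
}

# Usage:
#   v=$(apt-cache madison kubeadm | latest_patch_version 1.24)
#   apt install -y kubeadm="$v" kubelet="$v" kubectl="$v"
```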

apt install -y kubeadm=1.24.1-00 kubelet=1.24.1-00 kubectl=1.24.1-00   # omit the versions to install the latest

Note: this automatically installs conntrack, cri-tools, ebtables, ethtool, kubernetes-cni and socat as dependencies.

Prevent the packages from being upgraded:

apt-mark hold kubelet kubeadm kubectl

Note: apt-mark sets flags on packages. The hold flag marks a package as held back, which prevents it from being upgraded automatically.

After installation the kubelet service fails and keeps restarting. This is expected at this stage: it waits in a crash loop until kubeadm init or kubeadm join provides its configuration, and no container runtime is installed yet either.

root@host:~# systemctl status kubelet
● kubelet.service - kubelet: The Kubernetes Node Agent
     Loaded: loaded (/lib/systemd/system/kubelet.service; enabled; vendor preset: enabled)
    Drop-In: /etc/systemd/system/kubelet.service.d
             └─10-kubeadm.conf
     Active: activating (auto-restart) (Result: exit-code) since Wed 2022-06-01 03:37:39 UTC; 1s ago
       Docs: https://kubernetes.io/docs/home/
    Process: 8935 ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS (code=exited, status=1/FAILURE)
   Main PID: 8935 (code=exited, status=1/FAILURE)

The drop-in configuration file generated at installation (it can be modified):

root@host:~# cat /etc/systemd/system/kubelet.service.d/10-kubeadm.conf
# Note: This dropin only works with kubeadm and kubelet v1.11+
[Service]
Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"
Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"
# This is a file that "kubeadm init" and "kubeadm join" generates at runtime, populating the KUBELET_KUBEADM_ARGS variable dynamically
EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env
# This is a file that the user can use for overrides of the kubelet args as a last resort. Preferably, the user should use
# the .NodeRegistration.KubeletExtraArgs object in the configuration files instead. KUBELET_EXTRA_ARGS should be sourced from this file.
EnvironmentFile=-/etc/default/kubelet
ExecStart=
ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS

Check the underlying container runtime support:

root@host:~# crictl images
WARN[0000] image connect using default endpoints: [unix:///var/run/dockershim.sock unix:///run/containerd/containerd.sock unix:///run/crio/crio.sock unix:///var/run/cri-dockerd.sock]. As the default settings are now deprecated, you should set the endpoint instead.
ERRO[0000] unable to determine image API version: rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing dial unix /var/run/dockershim.sock: connect: no such file or directory"
ERRO[0000] unable to determine image API version: rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing dial unix /run/containerd/containerd.sock: connect: no such file or directory"
ERRO[0000] unable to determine image API version: rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing dial unix /run/crio/crio.sock: connect: no such file or directory"
ERRO[0000] unable to determine image API version: rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing dial unix /var/run/cri-dockerd.sock: connect: no such file or directory"
FATA[0000] unable to determine image API version: rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing dial unix /var/run/cri-dockerd.sock: connect: no such file or directory"

The default endpoints crictl tries ("image connect using default endpoints") are:

- unix:///var/run/dockershim.sock (no longer supported as of 1.24)

- unix:///run/containerd/containerd.sock (the official default)

- unix:///run/crio/crio.sock

- unix:///var/run/cri-dockerd.sock
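Because crictl probes these endpoints one after another, it helps to know which socket actually exists on a node. find_cri_sockets in the sketch below is hypothetical. Its prefix argument exists only for testing, and it only checks that the path exists, not that a runtime is listening on it.

```shell
# Hypothetical helper: print the crictl default endpoints whose socket path exists.
# Returns 0 if at least one was found.
find_cri_sockets() {
  local prefix="${1:-}" sock found=1
  for sock in /var/run/dockershim.sock \
              /run/containerd/containerd.sock \
              /run/crio/crio.sock \
              /var/run/cri-dockerd.sock; do
    if [ -e "$prefix$sock" ]; then
      echo "unix://$sock"
      found=0
    fi
  done
  return "$found"
}
```

The result can be passed to crictl explicitly, for example `crictl --runtime-endpoint "$(find_cri_sockets | head -n 1)" images`.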

Taking a VM snapshot at this point is recommended!

Creating the cluster with containerd

This method requires:

- Installing containerd

- Configuring containerd

- Initializing the cluster and installing a CNI plugin

Installing containerd

Online installation

Install the basic tools:

root@host:~# apt install -y curl gnupg2 software-properties-common apt-transport-https ca-certificates

Install containerd.io:

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -
add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"
apt update
apt install -y containerd.io

Check what the containerd.io package installed:

root@host:~# dpkg -L containerd.io
/.
/etc
/etc/containerd
/etc/containerd/config.toml
/lib
/lib/systemd
/lib/systemd/system
/lib/systemd/system/containerd.service
/usr
/usr/bin
/usr/bin/containerd
/usr/bin/containerd-shim
/usr/bin/containerd-shim-runc-v1
/usr/bin/containerd-shim-runc-v2
**/usr/bin/ctr**
**/usr/bin/runc**
/usr/share
/usr/share/doc
/usr/share/doc/containerd.io
/usr/share/doc/containerd.io/changelog.Debian.gz
/usr/share/doc/containerd.io/copyright
/usr/share/man
/usr/share/man/man5
/usr/share/man/man5/containerd-config.toml.5.gz
/usr/share/man/man8
/usr/share/man/man8/containerd-config.8.gz
/usr/share/man/man8/containerd.8.gz
/usr/share/man/man8/ctr.8.gz

containerd's default configuration file is /etc/containerd/config.toml:

root@host:~# cat /etc/containerd/config.toml
#   Copyright 2018-2022 Docker Inc.
#   Licensed under the Apache License, Version 2.0 (the "License");
#   you may not use this file except in compliance with the License.
#   You may obtain a copy of the License at
#       http://www.apache.org/licenses/LICENSE-2.0
#   Unless required by applicable law or agreed to in writing, software
#   distributed under the License is distributed on an "AS IS" BASIS,
#   WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
#   See the License for the specific language governing permissions and
#   limitations under the License.
disabled_plugins = ["cri"]
#root = "/var/lib/containerd"
#state = "/run/containerd"
#subreaper = true
#oom_score = 0
#[grpc]
#  address = "/run/containerd/containerd.sock"
#  uid = 0
#  gid = 0
#[debug]
#  address = "/run/containerd/debug.sock"
#  uid = 0
#  gid = 0
#  level = "info"

Almost everything in this file is commented out. It also sets disabled_plugins = ["cri"], which turns off the CRI plugin Kubernetes needs. The full default containerd configuration can be printed with containerd config default:

root@host:~# containerd config default
disabled_plugins = []
imports = []
oom_score = 0
plugin_dir = ""
required_plugins = []
root = "/var/lib/containerd"
state = "/run/containerd"
temp = ""
version = 2

[cgroup]
  path = ""

[debug]
  address = ""
  format = ""
  gid = 0
  level = ""
  uid = 0
......
[ttrpc]
  address = ""
  gid = 0
  uid = 0

To change the image source used for Kubernetes, generate a full containerd configuration and edit it (changing the kubelet's configuration instead is also possible):

# Custom configuration
mkdir -p /etc/containerd
containerd config default | tee /etc/containerd/config.toml

Then edit /etc/containerd/config.toml directly:
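You can script the sandbox_image and SystemdCgroup changes with sed instead of editing them by hand. The sketch assumes the file was produced by `containerd config default` (containerd 1.6), where both keys appear once on their own lines. set_containerd_cri_options is a hypothetical helper, and the pause image is a parameter: this article uses pause:3.6, while `kubeadm config images list` reports pause:3.7.

```shell
# Hypothetical helper: point sandbox_image at a mirror and switch runc to the
# systemd cgroup driver. Keeps a .bak copy of the original file.
set_containerd_cri_options() {
  local cfg="${1:-/etc/containerd/config.toml}"
  local pause="${2:-registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6}"
  sed -i.bak \
    -e "s#^\([[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*\).*#\1\"$pause\"#" \
    -e 's#^\([[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*\)false#\1true#' \
    "$cfg"
}

# Usage (as root):
#   set_containerd_cri_options /etc/containerd/config.toml && systemctl restart containerd
```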

sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6"
...
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
...
SystemdCgroup = true   # make sure to change SystemdCgroup

# restart containerd
systemctl restart containerd

containerd provides the ctr command for managing it, for example:

root@host:~# ctr image ls
REF TYPE DIGEST SIZE PLATFORMS LABELS

Kubernetes, for its part, provides crictl (installed with the cri-tools package), a command-line client for the CRI interface rather than a wrapper around ctr:

root@host:~# crictl images list
WARN[0000] image connect using default endpoints: [unix:///var/run/dockershim.sock unix:///run/containerd/containerd.sock unix:///run/crio/crio.sock unix:///var/run/cri-dockerd.sock]. As the default settings are now deprecated, you should set the endpoint instead.
ERRO[0000] unable to determine image API version: rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing dial unix /var/run/dockershim.sock: connect: no such file or directory"
E0601 05:48:16.809106 30961 remote_image.go:121] "ListImages with filter from image service failed" err="rpc error: code = Unimplemented desc = unknown service runtime.v1alpha2.ImageService" filter="&ImageFilter{Image:&ImageSpec{Image:list,Annotations:map[string]string{},},}"
FATA[0000] listing images: rpc error: code = Unimplemented desc = unknown service runtime.v1alpha2.ImageService

This happens because crictl has no runtime endpoint configured and falls back to probing its default list. We can edit the crictl configuration file to point it at containerd's socket:
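The sketch below writes such a crictl.yaml. write_crictl_config is a hypothetical helper. The target path and socket are parameters that default to the values used in this article, so you can also try it on a scratch file.

```shell
# Hypothetical helper: write a crictl config that targets one CRI socket for
# both the runtime and the image service.
write_crictl_config() {
  local target="${1:-/etc/crictl.yaml}"
  local sock="${2:-unix:///run/containerd/containerd.sock}"
  cat > "$target" <<EOF
runtime-endpoint: $sock
image-endpoint: $sock
timeout: 10
debug: false
EOF
}
```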

# cat /etc/crictl.yaml
runtime-endpoint: unix:///run/containerd/containerd.sock
image-endpoint: unix:///run/containerd/containerd.sock
timeout: 10
debug: false

Restart the service:

systemctl restart containerd
systemctl enable containerd
root@host:~# systemctl status containerd
● containerd.service - containerd container runtime
     Loaded: loaded (/lib/systemd/system/containerd.service; enabled; vendor preset: enabled)
     Active: active (running) since Wed 2022-06-01 05:52:04 UTC; 29s ago
       Docs: https://containerd.io
   Main PID: 31549 (containerd)
      Tasks: 10
     Memory: 19.4M
     CGroup: /system.slice/containerd.service
             └─31549 /usr/bin/containerd
Jun 01 05:52:04 cp containerd[31549]: time="2022-06-01T05:52:04.079492081Z" level=info msg="loading plugin \"io.containerd.tracing.processor.v1.otlp\"..." type=io.contai>
Jun 01 05:52:04 cp containerd[31549]: time="2022-06-01T05:52:04.079513258Z" level=info msg="skip loading plugin \"io.containerd.tracing.processor.v1.otlp\"..." error="no>
Jun 01 05:52:04 cp containerd[31549]: time="2022-06-01T05:52:04.079524291Z" level=info msg="loading plugin \"io.containerd.internal.v1.tracing\"..." type=io.containerd.i>
Jun 01 05:52:04 cp containerd[31549]: time="2022-06-01T05:52:04.079559919Z" level=error msg="failed to initialize a tracing processor \"otlp\"" error="no OpenTelemetry e>
Jun 01 05:52:04 cp containerd[31549]: time="2022-06-01T05:52:04.079597162Z" level=info msg="loading plugin \"io.containerd.grpc.v1.cri\"..." type=io.containerd.grpc.v1
Jun 01 05:52:04 cp containerd[31549]: time="2022-06-01T05:52:04.079791605Z" level=warning msg="failed to load plugin io.containerd.grpc.v1.cri" error="invalid plugin con>
Jun 01 05:52:04 cp containerd[31549]: time="2022-06-01T05:52:04.080004161Z" level=info msg=serving... address=/run/containerd/containerd.sock.ttrpc
Jun 01 05:52:04 cp containerd[31549]: time="2022-06-01T05:52:04.080057668Z" level=info msg=serving... address=/run/containerd/containerd.sock
Jun 01 05:52:04 cp systemd[1]: Started containerd container runtime.
Jun 01 05:52:04 cp containerd[31549]: time="2022-06-01T05:52:04.081450178Z" level=info msg="containerd successfully booted in 0.030415s"

Note the warning "failed to load plugin io.containerd.grpc.v1.cri" in this log. While the CRI plugin fails to load, crictl and the kubelet cannot use containerd, so check config.toml if you see it.

Offline installation

Download runc and containerd:

- Check the versions available on the Releases · containerd/containerd page

wget https://github.com/opencontainers/runc/releases/download/v1.1.2/runc.amd64

cp runc.amd64 /usr/local/bin/runc   # runc is a single binary, so just copy it

chmod +x /usr/local/bin/runc

cp /usr/local/bin/runc /usr/bin

cp /usr/local/bin/runc /usr/local/sbin/

wget https://github.com/containerd/containerd/releases/download/v1.6.4/cri-containerd-cni-1.6.4-linux-amd64.tar.gz
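It is worth verifying the archive before extracting it on an offline node. The sketch assumes you took the expected SHA-256 value from the containerd release page, which publishes a checksum alongside each archive, and copied it over with the tarball. verify_sha256 is a hypothetical helper built on coreutils sha256sum.

```shell
# Hypothetical helper: compare a file's SHA-256 digest with an expected hex value.
verify_sha256() {
  local file="$1" expected="$2" actual
  actual=$(sha256sum "$file" | awk '{print $1}')
  if [ "$actual" = "$expected" ]; then
    echo "OK $file"
  else
    echo "MISMATCH $file: expected $expected, got $actual" >&2
    return 1
  fi
}

# Usage:
#   verify_sha256 cri-containerd-cni-1.6.4-linux-amd64.tar.gz <expected-sha256> \
#     && tar xf cri-containerd-cni-1.6.4-linux-amd64.tar.gz -C /
```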

tar xf cri-containerd-cni-1.6.4-linux-amd64.tar.gz -C /

root@host:~# containerd --version

containerd github.com/containerd/containerd v1.6.4 212e8b6fa2f44b9c21b2798135fc6fb7c53efc16

On node cp (installed online above), check the containerd service:

root@host:~# systemctl status containerd

● containerd.service - containerd container runtime

Loaded: loaded **(/lib/systemd/system/containerd.service**; enabled; vendor preset: enabled)

Active: active (running) since Wed 2022-06-01 06:19:25 UTC; 3h 5min ago

Docs: https://containerd.io

Main PID: 36533 (containerd)

root@host:~# cat /lib/systemd/system/containerd.service

# Copyright The containerd Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

[Unit]

Description=containerd container runtime

Documentation=https://containerd.io

After=network.target local-fs.target

[Service]

ExecStartPre=-/sbin/modprobe overlay

ExecStart=/usr/bin/containerd

Type=notify

Delegate=yes

KillMode=process

Restart=always

RestartSec=5

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNPROC=infinity

LimitCORE=infinity

LimitNOFILE=infinity

# Comment TasksMax if your systemd version does not supports it.

# Only systemd 226 and above support this version.

TasksMax=infinity

OOMScoreAdjust=-999

[Install]

WantedBy=multi-user.target

With the offline installation, the unit file is /etc/systemd/system/containerd.service, as systemctl status containerd shows:

root@host:~# cat /etc/systemd/system/containerd.service

# Copyright The containerd Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

[Unit]

Description=containerd container runtime

Documentation=https://containerd.io

After=network.target local-fs.target

[Service]

ExecStartPre=-/sbin/modprobe overlay

ExecStart=/usr/local/bin/containerd

Type=notify

Delegate=yes

KillMode=process

Restart=always

RestartSec=5

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNPROC=infinity

LimitCORE=infinity

LimitNOFILE=infinity

# Comment TasksMax if your systemd version does not supports it.

# Only systemd 226 and above support this version.

TasksMax=infinity

OOMScoreAdjust=-999

[Install]

WantedBy=multi-user.target
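The two unit files above differ only in ExecStart: /usr/bin/containerd for the package and /usr/local/bin/containerd for the offline tarball. Units in /etc/systemd/system take precedence over those in /lib/systemd/system, and `systemctl cat containerd` shows the file systemd actually uses. The tiny sketch below prints the binary a unit file starts; unit_execstart is hypothetical.

```shell
# Hypothetical helper: print each non-empty ExecStart= value in a unit file
# (an empty "ExecStart=" line only resets the list, so it is skipped).
unit_execstart() {
  local unit_file="$1"
  sed -n 's/^ExecStart=\(..*\)$/\1/p' "$unit_file"
}

# Usage:
#   unit_execstart /lib/systemd/system/containerd.service
#   unit_execstart /etc/systemd/system/containerd.service
```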

Start the service

root@host:~# systemctl daemon-reload

root@host:~# systemctl restart containerd

Create configuration directory

mkdir -p /etc/containerd

Copy /etc/containerd/config.toml and /etc/crictl.yaml over from the already-installed node, or generate and edit them as described earlier:

root@host:~# scp /etc/containerd/config.toml root@<new-node>:/etc/containerd/config.toml

root@host:~# scp /etc/crictl.yaml root@<new-node>:/etc/crictl.yaml

systemctl restart containerd
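With more than one new node, you can loop the copy step. This is a sketch only. The node addresses in NODES are hypothetical placeholders, it assumes root SSH access, and push_containerd_config is a made-up helper. Setting DRY_RUN=1 prints the commands instead of running them.

```shell
# Hypothetical node list; replace with your own worker addresses.
NODES="${NODES:-192.168.81.22 192.168.81.23}"

# Hypothetical helper: push containerd/crictl config to each node and restart containerd.
push_containerd_config() {
  local node run=""
  if [ "${DRY_RUN:-0}" = 1 ]; then run="echo"; fi   # DRY_RUN=1: print only
  for node in $NODES; do
    $run ssh "root@$node" mkdir -p /etc/containerd
    $run scp /etc/containerd/config.toml "root@$node:/etc/containerd/config.toml"
    $run scp /etc/crictl.yaml "root@$node:/etc/crictl.yaml"
    $run ssh "root@$node" systemctl restart containerd
  done
}
```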

Initializing the cluster with kubeadm

List the images that are needed. The warnings mean that dl.k8s.io (and the default k8s.gcr.io registry) cannot be reached, so kubeadm falls back to its local version:

root@host:~# kubeadm config images list

W0601 06:40:29.809756 39745 version.go:103] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get "https://storage.googleapis.com/kubernetes-release/release/stable-1.txt": context deadline exceeded (Client.Timeout exceeded while awaiting headers)

W0601 06:40:29.809867 39745 version.go:104] falling back to the local client version: v1.24.1

k8s.gcr.io/kube-apiserver:v1.24.1

k8s.gcr.io/kube-controller-manager:v1.24.1

k8s.gcr.io/kube-scheduler:v1.24.1

k8s.gcr.io/kube-proxy:v1.24.1

k8s.gcr.io/pause:3.7

k8s.gcr.io/etcd:3.5.3-0

k8s.gcr.io/coredns/coredns:v1.8.6
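As the crictl listing after kubeadm init shows, with --image-repository kubeadm pulls every image from the mirror and flattens coredns/coredns to plain coredns. The small sketch below applies the same renaming to the list above, which is handy for checking in advance that the mirror has every image. to_mirror is hypothetical.

```shell
# Hypothetical helper: rewrite k8s.gcr.io image names (stdin) to a mirror
# repository, flattening coredns/coredns the way the crictl output shows.
to_mirror() {
  local mirror="${1:-registry.cn-hangzhou.aliyuncs.com/google_containers}"
  sed -e "s#^k8s.gcr.io/coredns/#$mirror/#" -e "s#^k8s.gcr.io/#$mirror/#"
}

# Usage:
#   kubeadm config images list --kubernetes-version v1.24.1 2>/dev/null | to_mirror
```

kubeadm can also pre-pull the images directly with `kubeadm config images pull --kubernetes-version v1.24.1 --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers --cri-socket unix:///run/containerd/containerd.sock`.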

Initialize the cluster with kubeadm:

kubeadm init --kubernetes-version=1.24.1 --apiserver-advertise-address=192.168.81.21 --apiserver-bind-port=6443 --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --pod-network-cidr=10.211.0.0/16 --service-cidr=10.96.0.0/12 --cri-socket=unix:///run/containerd/containerd.sock --ignore-preflight-errors=Swap

Run kubeadm init --help to see the full syntax.
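You can also keep the same settings in a kubeadm configuration file instead of a long command line. The sketch assumes the kubeadm.k8s.io/v1beta3 API used by kubeadm 1.24, and write_kubeadm_config is hypothetical. The values are copied from the command above. The KubeletConfiguration document pins the systemd cgroup driver to match SystemdCgroup = true in containerd; kubeadm already defaults to systemd since 1.22, so this only makes it explicit.

```shell
# Hypothetical helper: write a kubeadm config equivalent to the flags above.
write_kubeadm_config() {
  local target="${1:-kubeadm-config.yaml}"
  cat > "$target" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.81.21
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///run/containerd/containerd.sock
  ignorePreflightErrors:
    - Swap
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.24.1
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
networking:
  podSubnet: 10.211.0.0/16
  serviceSubnet: 10.96.0.0/12
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
EOF
}
```

Then run `kubeadm init --config kubeadm-config.yaml`. kubeadm refuses to mix --config with most of the individual flags, so use one style or the other.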

root@host:~# kubeadm init --kubernetes-version=1.24.1 --apiserver-advertise-address=192.168.81.21 --apiserver-bind-port=6443 --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --pod-network-cidr=10.211.0.0/16 --service-cidr=10.96.0.0/12 --cri-socket=unix:///run/containerd/containerd.sock --ignore-preflight-errors=Swap

[init] Using Kubernetes version: v1.24.1

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

......

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.81.21:6443 --token fybv6g.xlt3snl52qs5wyoo \

--discovery-token-ca-cert-hash sha256:8545518e775368c0982638b9661355e6682a1f3ba98386b4ca0453449edc97ca
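The token in this join command expires (by default after 24 hours). On the control-plane node, `kubeadm token create --print-join-command` prints a fresh, complete join command. You can also recompute the --discovery-token-ca-cert-hash value from the cluster CA with the openssl pipeline from the kubeadm documentation. The sketch below wraps that pipeline in a hypothetical helper and assumes the default RSA CA key.

```shell
# Hypothetical helper: SHA-256 of the CA's DER-encoded public key, the value
# kubeadm expects after "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Usage on the control-plane node:
#   echo "sha256:$(ca_cert_hash)"
```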

The images that have now been pulled:

root@host:~# crictl images ls

IMAGE TAG IMAGE ID SIZE

registry.cn-hangzhou.aliyuncs.com/google_containers/coredns v1.8.6 a4ca41631cc7a 13.6MB

registry.cn-hangzhou.aliyuncs.com/google_containers/etcd 3.5.3-0 aebe758cef4cd 102MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver v1.24.1 e9f4b425f9192 33.8MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager v1.24.1 b4ea7e648530d 31MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy v1.24.1 beb86f5d8e6cd 39.5MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler v1.24.1 18688a72645c5 15.5MB

registry.cn-hangzhou.aliyuncs.com/google_containers/pause 3.6 6270bb605e12e 302kB

registry.cn-hangzhou.aliyuncs.com/google_containers/pause 3.7 221177c6082a8 311kB

# With ctr you must specify the namespace (Kubernetes uses k8s.io)

root@host:~# ctr namespace ls

NAME LABELS

default

k8s.io

root@host:~# ctr -n k8s.io image ls

REF TYPE DIGEST SIZE PLATFORMS LABELS

registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.8.6 application/vnd.docker.distribution.manifest.list.v2+json sha256:5b6ec0d6de9baaf3e92d0f66cd96a25b9edbce8716f5f15dcd1a616b3abd590e 13.0 MiB linux/amd64,linux/arm,linux/arm64,linux/mips64le,linux/ppc64le,linux/s390x io.cri-containerd.image=managed

registry.cn-hangzhou.aliyuncs.com/google_containers/coredns@sha256:5b6ec0d6de9baaf3e92d0f66cd96a25b9edbce8716f5f15dcd1a616b3abd590e application/vnd.docker.distribution.manifest.list.v2+json sha256:5b6ec0d6de9baaf3e92d0f66cd96a25b9edbce8716f5f15dcd1a616b3abd590e 13.0 MiB linux/amd64,linux/arm,linux/arm64,linux/mips64le,linux/ppc64le,linux/s390x io.cri-containerd.image=managed

registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.3-0 application/vnd.docker.distribution.manifest.list.v2+json sha256:13f53ed1d91e2e11aac476ee9a0269fdda6cc4874eba903efd40daf50c55eee5 97.4 MiB linux/amd64,linux/arm/v7,linux/arm64,linux/ppc64le,linux/s390x,windows/amd64 io.cri-containerd.image=managed

registry.cn-hangzhou.aliyuncs.com/google_containers/etcd@sha256:13f53ed1d91e2e11aac476ee9a0269fdda6cc4874eba903efd40daf50c55eee5 application/vnd.docker.distribution.manifest.list.v2+json sha256:13f53ed1d91e2e11aac476ee9a0269fdda6cc4874eba903efd40daf50c55eee5 97.4 MiB linux/amd64,linux/arm/v7,linux/arm64,linux/ppc64le,linux/s390x,windows/amd64 io.cri-containerd.image=managed

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.24.1 application/vnd.docker.distribution.manifest.list.v2+json sha256:ad9608e8a9d758f966b6ca6795b50a4723982328194bde214804b21efd48da44 32.2 MiB linux/amd64,linux/arm/v7,linux/arm64,linux/ppc64le,linux/s390x io.cri-containerd.image=managed

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver@sha256:ad9608e8a9d758f966b6ca6795b50a4723982328194bde214804b21efd48da44 application/vnd.docker.distribution.manifest.list.v2+json sha256:ad9608e8a9d758f966b6ca6795b50a4723982328194bde214804b21efd48da44 32.2 MiB linux/amd64,linux/arm/v7,linux/arm64,linux/ppc64le,linux/s390x io.cri-containerd.image=managed

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.24.1 application/vnd.docker.distribution.manifest.list.v2+json sha256:594a3f5bbdd0419ac57d580da8dfb061237fa48d0c9909991a3af70630291f7a 29.6 MiB linux/amd64,linux/arm/v7,linux/arm64,linux/ppc64le,linux/s390x io.cri-containerd.image=managed

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager@sha256:594a3f5bbdd0419ac57d580da8dfb061237fa48d0c9909991a3af70630291f7a application/vnd.docker.distribution.manifest.list.v2+json sha256:594a3f5bbdd0419ac57d580da8dfb061237fa48d0c9909991a3af70630291f7a 29.6 MiB linux/amd64,linux/arm/v7,linux/arm64,linux/ppc64le,linux/s390x io.cri-containerd.image=managed

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.24.1 application/vnd.docker.distribution.manifest.list.v2+json sha256:1652df3138207570f52ae0be05cbf26c02648e6a4c30ced3f779fe3d6295ad6d 37.7 MiB linux/amd64,linux/arm/v7,linux/arm64,linux/ppc64le,linux/s390x io.cri-containerd.image=managed

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy@sha256:1652df3138207570f52ae0be05cbf26c02648e6a4c30ced3f779fe3d6295ad6d application/vnd.docker.distribution.manifest.list.v2+json sha256:1652df3138207570f52ae0be05cbf26c02648e6a4c30ced3f779fe3d6295ad6d 37.7 MiB linux/amd64,linux/arm/v7,linux/arm64,linux/ppc64le,linux/s390x io.cri-containerd.image=managed

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.24.1 application/vnd.docker.distribution.manifest.list.v2+json sha256:0d2de567157e3fb97dfa831620a3dc38d24b05bd3721763a99f3f73b8cbe99c9 14.8 MiB linux/amd64,linux/arm/v7,linux/arm64,linux/ppc64le,linux/s390x io.cri-containerd.image=managed

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler@sha256:0d2de567157e3fb97dfa831620a3dc38d24b05bd3721763a99f3f73b8cbe99c9 application/vnd.docker.distribution.manifest.list.v2+json sha256:0d2de567157e3fb97dfa831620a3dc38d24b05bd3721763a99f3f73b8cbe99c9 14.8 MiB linux/amd64,linux/arm/v7,linux/arm64,linux/ppc64le,linux/s390x io.cri-containerd.image=managed

registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6 application/vnd.docker.distribution.manifest.list.v2+json sha256:3d380ca8864549e74af4b29c10f9cb0956236dfb01c40ca076fb6c37253234db 294.7 KiB linux/amd64,linux/arm/v7,linux/arm64,linux/ppc64le,linux/s390x,windows/amd64 io.cri-containerd.image=managed

registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.7 application/vnd.docker.distribution.manifest.list.v2+json sha256:bb6ed397957e9ca7c65ada0db5c5d1c707c9c8afc80a94acbe69f3ae76988f0c 304.0 KiB linux/amd64,linux/arm/v7,linux/arm64,linux/ppc64le,linux/s390x,windows/amd64 io.cri-containerd.image=managed

registry.cn-hangzhou.aliyuncs.com/google_containers/pause@sha256:3d380ca8864549e74af4b29c10f9cb0956236dfb01c40ca076fb6c37253234db application/vnd.docker.distribution.manifest.list.v2+json sha256:3d380ca8864549e74af4b29c10f9cb0956236dfb01c40ca076fb6c37253234db 294.7 KiB linux/amd64,linux/arm/v7,linux/arm64,linux/ppc64le,linux/s390x,windows/amd64 io.cri-containerd.image=managed

registry.cn-hangzhou.aliyuncs.com/google_containers/pause@sha256:bb6ed397957e9ca7c65ada0db5c5d1c707c9c8afc80a94acbe69f3ae76988f0c application/vnd.docker.distribution.manifest.list.v2+json sha256:bb6ed397957e9ca7c65ada0db5c5d1c707c9c8afc80a94acbe69f3ae76988f0c 304.0 KiB linux/amd64,linux/arm/v7,linux/arm64,linux/ppc64le,linux/s390x,windows/amd64 io.cri-containerd.image=managed

sha256:18688a72645c5d34e1cc70d8deb5bef4fc6c9073bb1b53c7812856afc1de1237 application/vnd.docker.distribution.manifest.list.v2+json sha256:0d2de567157e3fb97dfa831620a3dc38d24b05bd3721763a99f3f73b8cbe99c9 14.8 MiB linux/amd64,linux/arm/v7,linux/arm64,linux/ppc64le,linux/s390x io.cri-containerd.image=managed

sha256:221177c6082a88ea4f6240ab2450d540955ac6f4d5454f0e15751b653ebda165 application/vnd.docker.distribution.manifest.list.v2+json sha256:bb6ed397957e9ca7c65ada0db5c5d1c707c9c8afc80a94acbe69f3ae76988f0c 304.0 KiB linux/amd64,linux/arm/v7,linux/arm64,linux/ppc64le,linux/s390x,windows/amd64 io.cri-containerd.image=managed

sha256:6270bb605e12e581514ada5fd5b3216f727db55dc87d5889c790e4c760683fee application/vnd.docker.distribution.manifest.list.v2+json sha256:3d380ca8864549e74af4b29c10f9cb0956236dfb01c40ca076fb6c37253234db 294.7 KiB linux/amd64,linux/arm/v7,linux/arm64,linux/ppc64le,linux/s390x,windows/amd64 io.cri-containerd.image=managed

sha256:a4ca41631cc7ac19ce1be3ebf0314ac5f47af7c711f17066006db82ee3b75b03 application/vnd.docker.distribution.manifest.list.v2+json sha256:5b6ec0d6de9baaf3e92d0f66cd96a25b9edbce8716f5f15dcd1a616b3abd590e 13.0 MiB linux/amd64,linux/arm,linux/arm64,linux/mips64le,linux/ppc64le,linux/s390x io.cri-containerd.image=managed

sha256:aebe758cef4cd05b9f8cee39758227714d02f42ef3088023c1e3cd454f927a2b application/vnd.docker.distribution.manifest.list.v2+json sha256:13f53ed1d91e2e11aac476ee9a0269fdda6cc4874eba903efd40daf50c55eee5 97.4 MiB linux/amd64,linux/arm/v7,linux/arm64,linux/ppc64le,linux/s390x,windows/amd64 io.cri-containerd.image=managed

sha256:b4ea7e648530d171b38f67305e22caf49f9d968d71c558e663709b805076538d application/vnd.docker.distribution.manifest.list.v2+json sha256:594a3f5bbdd0419ac57d580da8dfb061237fa48d0c9909991a3af70630291f7a 29.6 MiB linux/amd64,linux/arm/v7,linux/arm64,linux/ppc64le,linux/s390x io.cri-containerd.image=managed

sha256:beb86f5d8e6cd2234ca24649b74bd10e1e12446764560a3804d85dd6815d0a18 application/vnd.docker.distribution.manifest.list.v2+json sha256:1652df3138207570f52ae0be05cbf26c02648e6a4c30ced3f779fe3d6295ad6d 37.7 MiB linux/amd64,linux/arm/v7,linux/arm64,linux/ppc64le,linux/s390x io.cri-containerd.image=managed

sha256:e9f4b425f9192c11c0fa338cabe04f832aa5cea6dcbba2d1bd2a931224421693 application/vnd.docker.distribution.manifest.list.v2+json sha256:ad9608e8a9d758f966b6ca6795b50a4723982328194bde214804b21efd48da44 32.2 MiB linux/amd64,linux/arm/v7,linux/arm64,linux/ppc64le,linux/s390x io.cri-containerd.image=managed

Use Calico as the CNI

root@cp:~# kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

poddisruptionbudget.policy/calico-kube-controllers created

Join the worker nodes

~# kubeadm join 192.168.81.21:6443 --token fybv6g.xlt3snl52qs5wyoo \

> --discovery-token-ca-cert-hash sha256:8545518e775368c0982638b9661355e6682a1f3ba98386b4ca0453449edc97ca

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

# Check from the control plane:

root@cp:/home/zyi# kubectl get node -owide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

cp Ready control-plane 30h v1.24.1 192.168.81.21 <none> Ubuntu 20.04.4 LTS 5.4.0-113-generic containerd://1.6.4

worker01 Ready <none> 30h v1.24.1 192.168.81.22 <none> Ubuntu 20.04.4 LTS 5.4.0-113-generic containerd://1.6.4

worker02 Ready <none> 27h v1.24.1 192.168.81.23 <none> Ubuntu 20.04.4 LTS 5.4.0-113-generic containerd://1.6.4

root@cp:~# kubectl get po -A -owide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system calico-kube-controllers-56cdb7c587-v46wk 1/1 Running 0 118m 10.211.5.3 worker01 <none> <none>

kube-system calico-node-2qq4n 1/1 Running 0 118m 192.168.81.21 cp <none> <none>

kube-system calico-node-slnp9 1/1 Running 0 2m27s 192.168.81.23 worker02 <none> <none>

kube-system calico-node-v2xd8 1/1 Running 0 118m 192.168.81.22 worker01 <none> <none>

kube-system coredns-7f74c56694-4b4wp 1/1 Running 0 3h 10.211.5.1 worker01 <none> <none>

kube-system coredns-7f74c56694-mmvgb 1/1 Running 0 3h 10.211.5.2 worker01 <none> <none>

kube-system etcd-cp 1/1 Running 0 3h 192.168.81.21 cp <none> <none>

kube-system kube-apiserver-cp 1/1 Running 0 3h 192.168.81.21 cp <none> <none>

kube-system kube-controller-manager-cp 1/1 Running 0 3h 192.168.81.21 cp <none> <none>

kube-system kube-proxy-4n2jk 1/1 Running 0 2m27s 192.168.81.23 worker02 <none> <none>

kube-system kube-proxy-8zdvt 1/1 Running 0 169m 192.168.81.22 worker01 <none> <none>

kube-system kube-proxy-rpf78 1/1 Running 0 3h 192.168.81.21 cp <none> <none>

kube-system kube-scheduler-cp 1/1 Running 0 3h 192.168.81.21 cp <none> <none>

Create a cluster with Docker as the container runtime

The official installation documentation also describes creating a cluster with Docker Engine.

Note: the following steps assume that you use the [cri-dockerd](https://github.com/Mirantis/cri-dockerd) adapter to integrate Docker Engine with Kubernetes.

On each of your nodes, install Docker for your Linux distribution by following the Install Docker Engine guide.

Install [cri-dockerd](https://github.com/Mirantis/cri-dockerd) by following the instructions in its source repository.

For cri-dockerd, the CRI socket is /run/cri-dockerd.sock by default. After that, initialize the Kubernetes cluster.

Install docker-ce

Online installation: configure the package repository, then install the packages

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

apt update

apt install -y containerd.io docker-ce docker-ce-cli

Note: by default, the docker service runs on top of containerd. The output of journalctl -u docker.service shows it connecting to unix:///run/containerd/containerd.sock.

Check docker info

~# docker info

Client:

...

Server:

Containers: 0

...

Server Version: 20.10.16

Storage Driver: overlay2

Backing Filesystem: extfs

Supports d_type: true

Native Overlay Diff: true

userxattr: false

Logging Driver: json-file

**Cgroup Driver: cgroupfs**

Cgroup Version: 1

...

WARNING: No swap limit support

As shown above, Docker's default cgroup driver is cgroupfs, while the kubelet set up by kubeadm uses systemd. The kubelet log shows it failing and restarting:

root@worker01:~# journalctl -u kubelet | grep systemd | more

Jun 03 02:48:41 worker01 systemd[1]: kubelet.service: Main process exited, code=exited, status=1/FAILURE

Jun 03 02:48:41 worker01 systemd[1]: kubelet.service: Failed with result 'exit-code'.

Jun 03 02:48:52 worker01 systemd[1]: kubelet.service: Scheduled restart job, restart counter is at 4441.

Jun 03 02:48:52 worker01 systemd[1]: Stopped kubelet: The Kubernetes Node Agent.

Jun 03 02:48:52 worker01 systemd[1]: Started kubelet: The Kubernetes Node Agent.

Jun 03 02:48:52 worker01 kubelet[97187]: --cgroup-driver string Driver that the kubelet uses to manipulate cgroups on the host. Possible values: 'cgroupfs', 'systemd' (default "cgroupfs") (DEPRECATED: This parameter should be set via the config file specified by the Kubelet's --config flag. See https://kubernetes.io/docs/tasks/administer-cluster/kubelet-config-file/ for more information.)

Jun 03 02:48:52 worker01 systemd[1]: kubelet.service: Main process exited, code=exited, status=1/FAILURE
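The mismatch can be confirmed by comparing both drivers directly. Below is a minimal sketch, assuming the kubelet config that kubeadm writes to /var/lib/kubelet/config.yaml contains a top-level `cgroupDriver:` key; the function name `check_cgroup_drivers` is hypothetical, and it reads saved `docker info` output from a file so it can be tried without a running daemon.

```shell
# Hypothetical helper: compare Docker's cgroup driver with the kubelet's.
# $1: file holding `docker info` output; $2: kubelet config file (YAML).
check_cgroup_drivers() {
  # `docker info` prints a line such as " Cgroup Driver: cgroupfs"
  docker_drv=$(sed -n 's/^ *Cgroup Driver: *//p' "$1")
  # kubeadm writes a top-level "cgroupDriver: systemd" into the kubelet config
  kubelet_drv=$(sed -n 's/^cgroupDriver: *//p' "$2")
  if [ "$docker_drv" = "$kubelet_drv" ]; then
    echo "match: $docker_drv"
  else
    echo "mismatch: docker=$docker_drv kubelet=$kubelet_drv"
    return 1
  fi
}

# Usage on a real node (assumes docker is running and kubeadm wrote the config):
# docker info > /tmp/docker-info.txt 2>/dev/null
# check_cgroup_drivers /tmp/docker-info.txt /var/lib/kubelet/config.yaml
```

A non-zero return on mismatch makes the check usable in a provisioning script before the kubelet is restarted.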

Change Docker's cgroup driver to systemd (do this on every machine):

Create a systemd drop-in directory for the docker service

mkdir -p /etc/systemd/system/docker.service.d

Write a custom daemon configuration

tee /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF

# Restart the service

systemctl daemon-reload

systemctl restart docker

systemctl enable docker

~# docker info | grep Cgroup

Cgroup Driver: systemd

Cgroup Version: 1

WARNING: No swap limit support

Get the cri-dockerd plugin so that Kubernetes can use Docker

Install cri-dockerd

mkdir -p /data/softs && cd /data/softs

wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.2.1/cri-dockerd-0.2.1.amd64.tgz

tar xf cri-dockerd-0.2.1.amd64.tgz

mv cri-dockerd/cri-dockerd /usr/local/bin/

# Verify the installation

cri-dockerd --version

/data/softs# cri-dockerd --version

cri-dockerd 0.2.1 (HEAD)

Create a custom service file /etc/systemd/system/cri-docker.service, which systemd uses to start cri-dockerd:

[Unit]

Description=CRI Interface for Docker Application container Engine

Documentation=https://docs.mirantis.com

After=network-online.target firewalld.service docker.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/local/bin/cri-dockerd --network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin --container-runtime-endpoint=unix:///var/run/cri-dockerd.sock --image-pull-progress-deadline=30s --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6 --cri-dockerd-root-directory=/var/lib/dockershim --docker-endpoint=unix:///var/run/docker.sock

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

StartLimitBurst=3

StartLimitInterval=60s

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TasksMax=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

Optionally, create a socket unit file /usr/lib/systemd/system/cri-docker.socket:

[Unit]

Description=CRI Docker Socket for the API

PartOf=cri-docker.service

[Socket]

ListenStream=/var/run/cri-dockerd.sock

SocketMode=0660

SocketUser=root

SocketGroup=docker

[Install]

WantedBy=sockets.target

Start the service

systemctl daemon-reload

systemctl enable cri-docker.service

systemctl restart cri-docker.service

systemctl status --no-pager cri-docker.service

# Verify

crictl --runtime-endpoint /var/run/cri-dockerd.sock ps

/data/softs# crictl --runtime-endpoint /var/run/cri-dockerd.sock ps

I0604 10:50:12.902161 380647 util_unix.go:104] "Using this format as endpoint is deprecated, please consider using full url format." deprecatedFormat="/var/run/cri-dockerd.sock" fullURLFormat="unix:///var/run/cri-dockerd.sock"

I0604 10:50:12.911201 380647 util_unix.go:104] "Using this format as endpoint is deprecated, please consider using full url format." deprecatedFormat="/var/run/cri-dockerd.sock" fullURLFormat="unix:///var/run/cri-dockerd.sock"

CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID POD

The util_unix.go:104 messages appear because the endpoint was given without the unix:// scheme. Putting the full-URL endpoints into /etc/crictl.yaml, as follows, removes them:

# cat /etc/crictl.yaml

runtime-endpoint: "unix:///var/run/cri-dockerd.sock"

image-endpoint: "unix:///var/run/cri-dockerd.sock"

timeout: 10

debug: false

pull-image-on-create: true

disable-pull-on-run: false
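The same crictl.yaml is needed on every node, and with a different socket for CRI-O, so it can be generated rather than typed by hand. A minimal sketch; `write_crictl_config` is a hypothetical helper, and it writes exactly the keys shown in the file above.

```shell
# Hypothetical helper: write a crictl config that points at one CRI socket.
# $1: socket path (e.g. /var/run/cri-dockerd.sock); $2: output file.
write_crictl_config() {
  # The full unix:// URL form avoids the util_unix.go deprecation notice
  endpoint="unix://$1"
  cat > "$2" <<EOF
runtime-endpoint: "$endpoint"
image-endpoint: "$endpoint"
timeout: 10
debug: false
pull-image-on-create: true
disable-pull-on-run: false
EOF
}

# Usage:
# write_crictl_config /var/run/cri-dockerd.sock /etc/crictl.yaml   # cri-dockerd
# write_crictl_config /var/run/crio/crio.sock /etc/crictl.yaml     # CRI-O
```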

Test the result

crictl ps

/data/softs# crictl ps

CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID POD

Next, make sure every host gets the same configuration files (shown here for worker01):

/data/softs# scp /etc/systemd/system/cri-docker.service worker01:/etc/systemd/system/cri-docker.service

cri-docker.service 100% 934 1.9MB/s 00:00

/data/softs# scp /usr/lib/systemd/system/cri-docker.socket worker01:/usr/lib/systemd/system/cri-docker.socket

cri-docker.socket 100% 210 458.5KB/s 00:00

/data/softs# scp /etc/crictl.yaml worker01:/etc/crictl.yaml

crictl.yaml 100% 183 718.5KB/s 00:00
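With more than one worker, the copy step above can be looped. A sketch, assuming passwordless root SSH from this host to each worker; `sync_cri_files` and the `DRY_RUN` switch are hypothetical, and `DRY_RUN=1` only prints the scp commands. After copying, each worker still needs `systemctl daemon-reload` and the cri-docker service enabled, as on the first node.

```shell
# Hypothetical helper: push the cri-dockerd unit files and crictl config
# to every host given as an argument. Set DRY_RUN=1 to only print commands.
sync_cri_files() {
  for host in "$@"; do
    for f in /etc/systemd/system/cri-docker.service \
             /usr/lib/systemd/system/cri-docker.socket \
             /etc/crictl.yaml; do
      if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "scp $f $host:$f"
      else
        # Stop at the first failed copy so a broken host is noticed
        scp "$f" "$host:$f" || return 1
      fi
    done
  done
}

# Usage (host names are examples):
# DRY_RUN=1 sync_cri_files worker01 worker02
# sync_cri_files worker01 worker02
```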

Create the cluster

Modify the kubelet configuration on all nodes

# cat /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

ExecStart=...--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.7 --container-runtime-endpoint=unix:///var/run/cri-dockerd.sock --containerd=unix:///var/run/cri-dockerd.sock

systemctl daemon-reload

systemctl restart kubelet

Initialize the cluster with kubeadm

kubeadm init --kubernetes-version=1.24.1 \

--apiserver-advertise-address=192.168.81.20 \

--image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.211.0.0/16 \

--cri-socket unix:///var/run/cri-dockerd.sock \

--ignore-preflight-errors=Swap
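The same settings can also be written as a kubeadm configuration file, which is where the `criSocket` field mentioned in kubeadm's multiple-endpoint error lives. A sketch, assuming the `kubeadm.k8s.io/v1beta3` config API used by kubeadm 1.24; the helper name and output file name are hypothetical, and the values are copied from the flags above.

```shell
# Hypothetical helper: write a kubeadm config equivalent to the init flags.
# $1: output file; $2: CRI socket (default cri-dockerd); $3: advertise address.
write_kubeadm_config() {
  out=$1
  cri=${2:-unix:///var/run/cri-dockerd.sock}
  addr=${3:-192.168.81.20}
  cat > "$out" <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: $addr
nodeRegistration:
  criSocket: $cri
  ignorePreflightErrors:
    - Swap
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.24.1
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.211.0.0/16
EOF
}

# Usage on the control-plane node:
# write_kubeadm_config kubeadm-config.yaml
# kubeadm init --config kubeadm-config.yaml
```

Keeping the runtime socket in a file makes it harder to forget on one node than a long command line.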

root@master:/data/softs# kubeadm init --kubernetes-version=1.24.1 \

> --apiserver-advertise-address=192.168.81.20 \

> --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \

> --service-cidr=10.96.0.0/12 \

> --pod-network-cidr=10.211.0.0/16 \

> --cri-socket unix:///var/run/cri-dockerd.sock \

> --ignore-preflight-errors=Swap

[init] Using Kubernetes version: v1.24.1

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master] and IPs [10.96.0.1 192.168.81.20]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master] and IPs [192.168.81.20 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master] and IPs [192.168.81.20 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 9.002541 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: zpqirm.so0xmeo6b46gaj41

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.81.20:6443 --token zpqirm.so0xmeo6b46gaj41 \

--discovery-token-ca-cert-hash sha256:e8469d13b8ff07ce2803134048bb109a16e6b15b9e3279c4c556066549025c47

root@master:/data/softs# mkdir -p $HOME/.kube

root@master:/data/softs# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

root@master:/data/softs# sudo chown $(id -u):$(id -g) $HOME/.kube/config

On a worker node:

/data/softs# kubeadm join 192.168.81.20:6443 --token zpqirm.so0xmeo6b46gaj41 \

> --discovery-token-ca-cert-hash sha256:e8469d13b8ff07ce2803134048bb109a16e6b15b9e3279c4c556066549025c47

Found multiple CRI endpoints on the host. Please define which one do you wish to use by setting the 'criSocket' field in the kubeadm configuration file: unix:///var/run/containerd/containerd.sock, unix:///var/run/cri-dockerd.sock

To see the stack trace of this error execute with --v=5 or higher

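This happens because containerd, installed together with docker-ce, has its own socket at unix:///var/run/containerd/containerd.sock, so kubeadm finds two CRI endpoints and will not choose one for you.

Pass the CRI socket explicitly with --cri-socket when running kubeadm join:

/data/softs# kubeadm join 192.168.81.20:6443 --token zpqirm.so0xmeo6b46gaj41 --discovery-token-ca-cert-hash sha256:e8469d13b8ff07ce2803134048bb109a16e6b15b9e3279c4c556066549025c47 --cri-socket unix:///var/run/cri-dockerd.sock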

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
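Before running kubeadm on a node, it can help to check which runtime sockets are present. A minimal sketch that only tests the three socket paths used in this article (kubeadm's own list of known endpoints is internal and may differ between versions); `list_cri_sockets` is hypothetical, and its optional argument is a root prefix used only for testing.

```shell
# Hypothetical helper: print the CRI sockets (from this article) present here.
# $1: optional root prefix, e.g. a test directory; empty means the real "/".
list_cri_sockets() {
  root=${1:-}
  for s in /var/run/containerd/containerd.sock \
           /var/run/cri-dockerd.sock \
           /var/run/crio/crio.sock; do
    # -e (exists) rather than -S (is a socket) so plain test files also count
    if [ -e "$root$s" ]; then
      echo "unix://$s"
    fi
  done
}

# Usage: more than one line of output means --cri-socket must be passed.
# list_cri_sockets
```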

Create a cluster with CRI-O as the container runtime

Following the official documentation:

Install CRI-O

OS=xUbuntu_20.04

CRIO_VERSION=1.24

echo "deb http://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable/$OS/ /" | sudo tee /etc/apt/sources.list.d/devel:kubic:libcontainers:stable.list

echo "deb http://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable:/cri-o:/$CRIO_VERSION/$OS/ /" | sudo tee /etc/apt/sources.list.d/devel:kubic:libcontainers:stable:cri-o:$CRIO_VERSION.list

curl -L https://download.opensuse.org/repositories/devel:kubic:libcontainers:stable:cri-o:$CRIO_VERSION/$OS/Release.key | sudo apt-key add -

curl -L https://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable/$OS/Release.key | sudo apt-key add -

#echo "deb [signed-by=/usr/share/keyrings/libcontainers-archive-keyring.gpg] https://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable/$OS/ /" > /etc/apt/sources.list.d/devel:kubic:libcontainers:stable.list

#echo "deb [signed-by=/usr/share/keyrings/libcontainers-crio-archive-keyring.gpg] https://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable:/cri-o:/$CRIO_VERSION/$OS/ /" > /etc/apt/sources.list.d/devel:kubic:libcontainers:stable:cri-o:$CRIO_VERSION.list

#mkdir -p /usr/share/keyrings

#curl -L https://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable/$OS/Release.key | gpg --dearmor -o /usr/share/keyrings/libcontainers-archive-keyring.gpg

#curl -L https://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable:/cri-o:/$CRIO_VERSION/$OS/Release.key | gpg --dearmor -o /usr/share/keyrings/libcontainers-crio-archive-keyring.gpg

apt-get update

apt-get install cri-o cri-o-runc

systemctl start crio

systemctl enable crio

systemctl status crio

Modify the configuration

Change the default pod network segment

# /etc/cni/net.d/100-crio-bridge.conf

sed -i 's/10\.85\.0\.0/10.211.0.0/g' /etc/cni/net.d/100-crio-bridge.conf

Edit the main configuration; the relevant uncommented lines of /etc/crio/crio.conf are:

# grep -Env '#|^$|^\[' /etc/crio/crio.conf

169:cgroup_manager = "systemd"

451:pause_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6"
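The two crio.conf settings above can also be applied non-interactively. A minimal sketch, assuming each key sits at the start of its line (optionally commented out with `#`) as in the grep output; `set_toml_string` is hypothetical, does plain line rewriting rather than real TOML parsing, and writes through a temporary file so it works with both GNU and BSD sed.

```shell
# Hypothetical helper: set `key = "value"` in a TOML file such as crio.conf,
# uncommenting the line if needed. Only whole lines starting with the key change.
set_toml_string() {
  key=$1 value=$2 file=$3
  # Match "key = ..." or "# key = ..." and rewrite the line as key = "value"
  sed "s|^#\{0,1\} *$key *=.*|$key = \"$value\"|" "$file" > "$file.tmp" &&
    mv "$file.tmp" "$file"
}

# Usage:
# set_toml_string cgroup_manager systemd /etc/crio/crio.conf
# set_toml_string pause_image registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6 /etc/crio/crio.conf
```

The ` *=` after the key keeps similarly named options such as `pause_image_auth_file` untouched.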

Restart the service to apply the configuration

systemctl restart crio

Verify

curl -v --unix-socket /var/run/crio/crio.sock http://localhost/info

~# curl -v --unix-socket /var/run/crio/crio.sock http://localhost/info

* Trying /var/run/crio/crio.sock:0...

* Connected to localhost (/var/run/crio/crio.sock) port 80 (#0)

> GET /info HTTP/1.1

> Host: localhost

> User-Agent: curl/7.68.0

> Accept: */*

>

* Mark bundle as not supporting multiuse

< HTTP/1.1 200 OK

< Content-Type: application/json

< Date: Sat, 04 Jun 2022 15:10:27 GMT

< Content-Length: 239

<

* Connection #0 to host localhost left intact

{"storage_driver":"overlay","storage_root":"/var/lib/containers/storage","cgroup_driver":"systemd","default_id_mappings":{"uids":[{"container_id":0,"host_id":0,"size":4294967295}],"gids":[{"container_id":0,"host_id":0,"size":4294967295}]}}
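To check only the cgroup driver in that response, the `cgroup_driver` field can be extracted directly; it should agree with the `--cgroup-driver=systemd` that the kubelet is given later in this section. A sketch using sed, which is not a JSON parser (a tool such as jq would be more robust); the helper name `crio_cgroup_driver` is hypothetical.

```shell
# Hypothetical helper: print the cgroup_driver field of CRI-O's /info JSON.
# Reads JSON on stdin; a plain pattern match, not a full JSON parser.
crio_cgroup_driver() {
  sed -n 's/.*"cgroup_driver": *"\([^"]*\)".*/\1/p'
}

# Usage on a node running CRI-O:
# curl -s --unix-socket /var/run/crio/crio.sock http://localhost/info | crio_cgroup_driver
```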

Configure crictl.yaml

# cat /etc/crictl.yaml

runtime-endpoint: "unix:///var/run/crio/crio.sock"

image-endpoint: "unix:///var/run/crio/crio.sock"

timeout: 10

debug: false

pull-image-on-create: true

disable-pull-on-run: false

Initialize the cluster

Modify the kubelet parameters

# cat /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

ExecStart=...--container-runtime=remote --cgroup-driver=systemd --container-runtime-endpoint='unix:///var/run/crio/crio.sock' --runtime-request-timeout=5m

systemctl daemon-reload

systemctl restart kubelet

Cluster initialization

kubeadm init --kubernetes-version=1.24.1 \

--apiserver-advertise-address=192.168.81.1 \

--image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.211.0.0/16 \

--cri-socket unix:///var/run/crio/crio.sock \

--ignore-preflight-errors=Swap

root@main:~# kubeadm init --kubernetes-version=1.24.1 \

> --apiserver-advertise-address=192.168.81.1 \

> --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers \

> --service-cidr=10.96.0.0/12 \

> --pod-network-cidr=10.211.0.0/16 \

> --cri-socket unix:///var/run/crio/crio.sock \

> --ignore-preflight-errors=Swap

[init] Using Kubernetes version: v1.24.1

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local main] and IPs [10.96.0.1 192.168.81.1]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost main] and IPs [192.168.81.1 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost main] and IPs [192.168.81.1 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 7.003679 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node main as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node main as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: k9dmq6.cuhj0atd4jhz4y6o

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.81.1:6443 --token k9dmq6.cuhj0atd4jhz4y6o \

--discovery-token-ca-cert-hash sha256:99de4906a2f690147d59ee71c1e2e916e64b6a8f6efae5bd28bebcb711cd28ab
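The bootstrap token printed above is only valid for a limited time (24 hours by default), so a node added later needs a fresh one; running `kubeadm token create --print-join-command` on the control plane prints a complete new `kubeadm join` line. The `--discovery-token-ca-cert-hash` value stays the same as long as the cluster CA is not replaced: it is the SHA-256 of the CA's public key, and the kubeadm documentation derives it with an `openssl` pipeline. The sketch wraps that pipeline in a hypothetical function, `ca_cert_hash`, assuming an RSA CA key (kubeadm's default).

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the openssl pipeline from the kubeadm docs.
# Prints the hex SHA-256 of the CA certificate's public key (DER-encoded
# SubjectPublicKeyInfo); prefix it with "sha256:" for kubeadm join.
# $1 = CA certificate; defaults to the kubeadm location. Assumes an RSA key.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

On the control plane, `echo "sha256:$(ca_cert_hash)"` should reproduce the hash in the join command above.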

Join the worker nodes to the cluster

# kubeadm join 192.168.81.1:6443 --token k9dmq6.cuhj0atd4jhz4y6o \

> --discovery-token-ca-cert-hash sha256:99de4906a2f690147d59ee71c1e2e916e64b6a8f6efae5bd28bebcb711cd28ab

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
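A freshly joined node can show `NotReady` for a while, for example until the pod network add-on mentioned in the `kubeadm init` output is running, so `kubectl get nodes` is worth re-running after that step. In scripts, `kubectl wait --for=condition=Ready nodes --all --timeout=300s` blocks until every node is Ready. For a quick report instead, a hypothetical filter such as `not_ready_nodes` below lists the nodes that are not Ready, reading `kubectl get nodes --no-headers` output.

```shell
#!/usr/bin/env bash
# Hypothetical filter: read `kubectl get nodes --no-headers` on stdin and
# print the name of every node whose readiness is not Ready.
# STATUS may carry extra flags such as "Ready,SchedulingDisabled"; only the
# first comma-separated field is compared, so cordoned but healthy nodes
# are not reported.
not_ready_nodes() {
  awk '{ split($2, s, ","); if (s[1] != "Ready") print $1 }'
}
```

Usage: `kubectl get nodes --no-headers | not_ready_nodes` prints nothing once every node has become Ready.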
