Ceph Distributed Storage

2022-06-27 21:21:00 【Ink Sky Wheel】

I. Ceph overview:

Overview: Ceph is a new generation of free-software distributed file system, designed and developed by Dr. Sage Weil at the University of California, Santa Cruz (UCSC). Its design goals are good scalability (PB level and beyond), high performance, and high reliability. The name Ceph is short for cephalopod and is tied to the mascot of UCSC (Ceph's birthplace), "Sammy", a banana-colored slug, which is a shell-less mollusk and so a relative of the cephalopods. The many-tentacled cephalopod is a metaphor for a highly parallel distributed file system. The design follows three principles: separation of data and metadata, dynamic distributed metadata management, and a reliable, unified distributed object storage mechanism.

II. Basic architecture:

1. Ceph is a highly available, easy-to-manage, open-source distributed storage system that provides object storage, block storage, and file storage services from a single system. It consists mainly of RADOS, the core of the Ceph storage system, together with the block storage interface, the object storage interface, and the file system storage interface;

2. Storage types:

Ø Block storage:

Among common storage products, DAS and SAN provide block storage, as does OpenStack's Cinder; iSCSI storage is one example;

This kind of interface usually takes the form of a QEMU driver or a kernel module, and has to implement either the Linux block device interface or the block driver interface provided by QEMU. Examples are Gluster, Sheepdog, AWS EBS, QingCloud's cloud disks, Alibaba Cloud's Pangu system, and Ceph's RBD (RBD is Ceph's block-storage-oriented interface);

Ø Object storage:

The concept of object storage emerged relatively late. The storage standards organization SNIA defined it as early as 2004, but in the early days it appeared mostly in very large-scale systems, so it was not widely known and related products stayed lukewarm. Only when cloud computing and big data were being promoted everywhere did it slowly enter public view. Block storage and file storage are still used mostly inside private LANs, whereas object storage is strongest on the Internet or other public networks, where it handles massive data volumes and massive concurrent access. Internet-based applications are its main fit (the same holds for cloud computing: Internet-based applications are the easiest to migrate to the cloud, because they were already "up there" before the word "cloud" appeared). Almost every mature public cloud, at home or abroad, offers an object storage product; Swift and S3 are typical object storage interfaces;

Ø File system storage:

This is the same kind of thing as a traditional file system such as Ext4, the difference being that distributed storage adds parallelism. Ceph's CephFS (Ceph's file-storage-oriented interface) is one example, and file-like storage interfaces that are not POSIX, such as HDFS, are sometimes placed in this class as well, as is GlusterFS. NFS and NAS of course also count as file system storage;

Ø Summary: comparison;

3. Ceph basic architecture:

III. Architecture components in detail:

Ø RADOS: the foundation on which all other client interfaces are used and deployed. It consists of the following components:

OSD: Object Storage Device, provides the storage resources that hold the actual data;

Monitor: maintains the heartbeat information of every node in the Ceph cluster and the global state of the whole cluster;

MDS: Ceph Metadata Server, the file system metadata service node. MDS also supports distributed deployment across multiple machines to make the system highly available.

A typical RADOS deployment consists of a small number of Monitors and a large number of OSD storage devices. On top of a dynamic, heterogeneous cluster of storage devices it provides a stable, scalable, high-performance single logical object storage interface.

Ø Ceph client interfaces (Clients): everything above the underlying RADOS layer, that is LIBRADOS, RADOSGW, RBD, and Ceph FS, is collectively called the Ceph client interface. In short, RADOSGW, RBD, and Ceph FS are built on the multi-language programming interface provided by LIBRADOS, so the layers form a stepwise hierarchy.

1. RADOSGW: the Ceph object storage gateway, an object storage interface built on librados that offers clients a RESTful interface. Ceph currently supports two API flavors:

S3-compatible: provides an API compatible with most of the Amazon S3 RESTful API.

Swift-compatible: provides an API compatible with most of the OpenStack Swift API. Ceph object storage uses the gateway daemon (radosgw); the structure of radosgw is shown in the figure:

2. RBD: a block is a sequence of bytes (for example, a 512-byte block of data). Block-based storage interfaces are the most common way to store data on media such as hard disks, CDs, floppy disks, and even traditional 9-track tape. The ubiquity of block device interfaces makes a virtual block device an ideal candidate for interacting with a mass data storage system like Ceph. In a Ceph cluster, Ceph's block devices are thin-provisioned, resizable, and store their data striped over multiple OSDs. Ceph block devices can make full use of RADOS capabilities such as snapshots, replication, and data consistency. Ceph's RADOS Block Device (RBD) interacts with the cluster over the RADOS protocol through either a kernel module or the librbd library. The structure of RBD is shown in the figure:

3. Ceph FS: the Ceph file system (Ceph FS) is a POSIX-compatible file system that uses a Ceph storage cluster to store its data. The structure of Ceph FS is shown in the figure:

Further reading: https://www.sohu.com/a/144775333_151779

IV. Ceph data storage process:

A Ceph storage cluster receives files from clients. The client splits each file into one or more objects, groups those objects, and then stores them on the cluster's OSD nodes according to a placement policy. The process is shown in the figure:

In the figure, placing an object takes two stages of computation:

1. Object-to-PG mapping. A PG (Placement Group) is a logical collection of objects and the basic unit in which the system distributes data to OSD nodes: the objects in one PG are all placed on the same set of OSD nodes (one primary OSD node and one or more replica OSD nodes). An object's PG is obtained by hashing the object ID and combining the result with a few other parameters.

2. PG-to-OSD mapping. RADOS uses the corresponding placement algorithm (CRUSH in Ceph), based on the current state of the system and the PG ID, to distribute all PGs across the OSD cluster.
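The two stages can be sketched in a few lines of bash. This is only a toy model under stated assumptions: `pg_for_object` and `osds_for_pg` are hypothetical helper names, `cksum` stands in for Ceph's own hash function, and a simple round-robin rule stands in for CRUSH, which in a real cluster also takes the cluster map and failure domains into account.

```shell
#!/usr/bin/env bash
# Toy model of two-stage placement. NOT Ceph's real algorithm:
# cksum replaces Ceph's hash, round-robin replaces CRUSH.

# Stage 1: object name -> PG id (hash of the name modulo the PG count).
pg_for_object() {
    local name=$1 pg_num=$2 hash
    # cksum prints "<crc> <byte count>"; keep only the CRC.
    hash=$(printf '%s' "$name" | cksum | cut -d' ' -f1)
    echo $(( hash % pg_num ))
}

# Stage 2: PG id -> ordered OSD list (primary first, then replicas).
osds_for_pg() {
    local pg=$1 num_osds=$2 replicas=$3 i out=""
    for (( i = 0; i < replicas; i++ )); do
        out+="$(( (pg + i) % num_osds )) "
    done
    echo "${out% }"
}

pg=$(pg_for_object "1.file.0000" 64)
echo "object 1.file.0000 -> pg $pg -> osds $(osds_for_pg "$pg" 2 2)"
```

The point of the indirection is that objects never map to OSDs directly: when OSDs are added or removed, only the PG-to-OSD stage has to be recomputed, and whole PGs move rather than individual objects.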

V. Advantages of Ceph:

1. RADOS, the core of Ceph, usually consists of a small number of Monitor processes responsible for cluster management and a large number of OSD processes responsible for data storage. It uses a distributed architecture with no central node and stores data in chunks with multiple replicas, which gives it good scalability and high availability.

2. The Ceph distributed file system offers several kinds of clients, including an object storage interface, a block storage interface, and a file system interface, so it is widely applicable. Clients exchange data directly with the OSD devices, which greatly improves data access performance.

3. As a distributed file system, Ceph maintains POSIX compatibility while adding replication and fault tolerance. Since the end of March 2010 Ceph can be found in the Linux kernel (from version 2.6.34); as one of the file system options for Linux, ceph.ko has been integrated into the kernel. Ceph is more than a file system: it is an object storage ecosystem with enterprise-grade features.

VI. Case study: building Ceph distributed storage;

Case environment:

| System | IP address | Host name (login user) | Role |
| --- | --- | --- | --- |
| CentOS 7.4 (1708), 64-bit | 192.168.100.101 | dlp (dhhy) | admin-node |
| CentOS 7.4 (1708), 64-bit | 192.168.100.102 | node1 (dhhy), one extra hard disk | mon-node, osd0-node, mds-node |
| CentOS 7.4 (1708), 64-bit | 192.168.100.103 | node2 (dhhy), one extra hard disk | mon-node, osd1-node |
| CentOS 7.4 (1708), 64-bit | 192.168.100.104 | ceph-client (root) | ceph-client |

Case steps:

Ø Configure the base environment;

Ø Configure the NTP time service;

Ø Install Ceph on the dlp node, node1, node2, and the client node;

Ø On the dlp management node, manage the storage nodes and register node information;

Ø Configure the Ceph mon monitor process;

Ø Configure the Ceph osd storage process;

Ø Verify and view the Ceph cluster status;

Ø Configure the Ceph mds metadata process;

Ø Configure the Ceph client;

Ø Test storage from the Ceph client;

Ø Troubleshooting;

Ø Configure the base environment:

[root@dlp ~]# useradd dhhy

[root@dlp ~]# echo "dhhy" |passwd --stdin dhhy

[root@dlp ~]# cat <<END >>/etc/hosts

192.168.100.101 dlp

192.168.100.102 node1

192.168.100.103 node2

192.168.100.104 ceph-client

END

[root@dlp ~]# echo "dhhy ALL = (root) NOPASSWD:ALL" >> /etc/sudoers.d/dhhy

[root@dlp ~]# chmod 0440 /etc/sudoers.d/dhhy

[root@node1 ~]# useradd dhhy

[root@node1 ~]# echo "dhhy" |passwd --stdin dhhy

[root@node1 ~]# cat <<END >>/etc/hosts

192.168.100.101 dlp

192.168.100.102 node1

192.168.100.103 node2

192.168.100.104 ceph-client

END

[root@node1 ~]# echo "dhhy ALL = (root) NOPASSWD:ALL" >> /etc/sudoers.d/dhhy

[root@node1 ~]# chmod 0440 /etc/sudoers.d/dhhy

[root@node2 ~]# useradd dhhy

[root@node2 ~]# echo "dhhy" |passwd --stdin dhhy

[root@node2 ~]# cat <<END >>/etc/hosts

192.168.100.101 dlp

192.168.100.102 node1

192.168.100.103 node2

192.168.100.104 ceph-client

END

[root@node2 ~]# echo "dhhy ALL = (root) NOPASSWD:ALL" >> /etc/sudoers.d/dhhy

[root@node2 ~]# chmod 0440 /etc/sudoers.d/dhhy

[root@ceph-client ~]# useradd dhhy

[root@ceph-client ~]# echo "dhhy" |passwd --stdin dhhy

[root@ceph-client ~]# cat <<END >>/etc/hosts

192.168.100.101 dlp

192.168.100.102 node1

192.168.100.103 node2

192.168.100.104 ceph-client

END

[root@ceph-client ~]# echo "dhhy ALL = (root) NOPASSWD:ALL" >> /etc/sudoers.d/dhhy

[root@ceph-client ~]# chmod 0440 /etc/sudoers.d/dhhy
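Because the same host entries are appended on all four machines, rerunning the step duplicates them. The sketch below shows one way to make it repeatable; `add_hosts` is a hypothetical helper, not part of the original walkthrough, and the demo writes to a scratch file rather than to /etc/hosts.

```shell
#!/usr/bin/env bash
# Append "IP hostname" pairs to a hosts file, skipping names already present.
# add_hosts is a hypothetical helper introduced for illustration.
add_hosts() {
    local file=$1; shift
    local ip name
    while [ "$#" -ge 2 ]; do
        ip=$1; name=$2; shift 2
        # Look for the hostname as a whole whitespace-delimited field.
        if ! awk -v n="$name" \
            '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' \
            "$file" 2>/dev/null; then
            printf '%s %s\n' "$ip" "$name" >> "$file"
        fi
    done
}

# Demo against a scratch file; point it at /etc/hosts (as root) for real use.
hosts_file=$(mktemp)
add_hosts "$hosts_file" \
    192.168.100.101 dlp \
    192.168.100.102 node1 \
    192.168.100.103 node2 \
    192.168.100.104 ceph-client
add_hosts "$hosts_file" 192.168.100.101 dlp   # second run adds nothing
cat "$hosts_file"
```

Matching on the hostname field rather than on the full line means an entry is also skipped when the name already exists with a different address, which is usually the safer behaviour for a name-resolution file.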

Ø Configure the NTP time service;

[root@dlp ~]# yum -y install ntp ntpdate

[root@dlp ~]# sed -i '/^server/s/^/#/g' /etc/ntp.conf

[root@dlp ~]# sed -i '25aserver 127.127.1.0\nfudge 127.127.1.0 stratum 8' /etc/ntp.conf

[root@dlp ~]# systemctl start ntpd

[root@dlp ~]# systemctl enable ntpd

[root@dlp ~]# netstat -utpln

[root@node1 ~]# yum -y install ntpdate

[root@node1 ~]# /usr/sbin/ntpdate 192.168.100.101

[root@node1 ~]# echo "/usr/sbin/ntpdate 192.168.100.101" >>/etc/rc.local

[root@node1 ~]# chmod +x /etc/rc.local

[root@node2 ~]# yum -y install ntpdate

[root@node2 ~]# /usr/sbin/ntpdate 192.168.100.101

[root@node2 ~]# echo "/usr/sbin/ntpdate 192.168.100.101" >>/etc/rc.local

[root@node2 ~]# chmod +x /etc/rc.local

[root@ceph-client ~]# yum -y install ntpdate

[root@ceph-client ~]# /usr/sbin/ntpdate 192.168.100.101

[root@ceph-client ~]# echo "/usr/sbin/ntpdate 192.168.100.101" >>/etc/rc.local

[root@ceph-client ~]# chmod +x /etc/rc.local

Ø Install Ceph on the dlp node, node1, node2, and the client node;

[root@dlp ~]# yum -y install yum-utils

[root@dlp ~]# yum-config-manager --add-repo https://dl.fedoraproject.org/pub/epel/7/x86_64/

[root@dlp ~]# yum -y install epel-release --nogpgcheck

[root@dlp ~]# cat <<END >>/etc/yum.repos.d/ceph.repo

[Ceph]

name=Ceph packages for \$basearch

baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/\$basearch

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.163.com/ceph/keys/release.asc

priority=1

[Ceph-noarch]

name=Ceph noarch packages

baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/noarch

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.163.com/ceph/keys/release.asc

priority=1

[ceph-source]

name=Ceph source packages

baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/SRPMS

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.163.com/ceph/keys/release.asc

priority=1

END

[root@dlp ~]# ls /etc/yum.repos.d/ #### the default official repos must be present; they are combined with the EPEL repo and the NetEase (163) Ceph repo for the installation;

bak CentOS-fasttrack.repo ceph.repo

CentOS-Base.repo CentOS-Media.repo dl.fedoraproject.org_pub_epel_7_x86_64_.repo

CentOS-CR.repo CentOS-Sources.repo epel.repo

CentOS-Debuginfo.repo CentOS-Vault.repo epel-testing.repo

[root@dlp ~]# su - dhhy

[dhhy@dlp ~]$ mkdir ceph-cluster ## create the ceph working directory

[dhhy@dlp ~]$ cd ceph-cluster

[dhhy@dlp ceph-cluster]$ sudo yum -y install ceph-deploy ## install the ceph management tool

[dhhy@dlp ceph-cluster]$ sudo yum -y install ceph --nogpgcheck ## install the main ceph program

[root@node1 ~]# yum -y install yum-utils

[root@node1 ~]# yum-config-manager --add-repo https://dl.fedoraproject.org/pub/epel/7/x86_64/

[root@node1 ~]# yum -y install epel-release --nogpgcheck

[root@node1 ~]# cat <<END >>/etc/yum.repos.d/ceph.repo

[Ceph]

name=Ceph packages for \$basearch

baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/\$basearch

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.163.com/ceph/keys/release.asc

priority=1

[Ceph-noarch]

name=Ceph noarch packages

baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/noarch

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.163.com/ceph/keys/release.asc

priority=1

[ceph-source]

name=Ceph source packages

baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/SRPMS

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.163.com/ceph/keys/release.asc

priority=1

END

[root@node1 ~]# ls /etc/yum.repos.d/ #### the default official repos must be present; they are combined with the EPEL repo and the NetEase (163) Ceph repo for the installation;

bak CentOS-fasttrack.repo ceph.repo

CentOS-Base.repo CentOS-Media.repo dl.fedoraproject.org_pub_epel_7_x86_64_.repo

CentOS-CR.repo CentOS-Sources.repo epel.repo

CentOS-Debuginfo.repo CentOS-Vault.repo epel-testing.repo

[root@node1 ~]# su - dhhy

[dhhy@node1 ~]$ mkdir ceph-cluster

[dhhy@node1 ~]$ cd ceph-cluster

[dhhy@node1 ceph-cluster]$ sudo yum -y install ceph-deploy

[dhhy@node1 ceph-cluster]$ sudo yum -y install ceph --nogpgcheck

[dhhy@node1 ceph-cluster]$ sudo yum -y install deltarpm

[root@node2 ~]# yum -y install yum-utils

[root@node2 ~]# yum-config-manager --add-repo https://dl.fedoraproject.org/pub/epel/7/x86_64/

[root@node2 ~]# yum -y install epel-release --nogpgcheck

[root@node2 ~]# cat <<END >>/etc/yum.repos.d/ceph.repo

[Ceph]

name=Ceph packages for \$basearch

baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/\$basearch

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.163.com/ceph/keys/release.asc

priority=1

[Ceph-noarch]

name=Ceph noarch packages

baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/noarch

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.163.com/ceph/keys/release.asc

priority=1

[ceph-source]

name=Ceph source packages

baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/SRPMS

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.163.com/ceph/keys/release.asc

priority=1

END

[root@node2 ~]# ls /etc/yum.repos.d/ #### the default official repos must be present; they are combined with the EPEL repo and the NetEase (163) Ceph repo for the installation;

bak CentOS-fasttrack.repo ceph.repo

CentOS-Base.repo CentOS-Media.repo dl.fedoraproject.org_pub_epel_7_x86_64_.repo

CentOS-CR.repo CentOS-Sources.repo epel.repo

CentOS-Debuginfo.repo CentOS-Vault.repo epel-testing.repo

[root@node2 ~]# su - dhhy

[dhhy@node2 ~]$ mkdir ceph-cluster

[dhhy@node2 ~]$ cd ceph-cluster

[dhhy@node2 ceph-cluster]$ sudo yum -y install ceph-deploy

[dhhy@node2 ceph-cluster]$ sudo yum -y install ceph --nogpgcheck

[dhhy@node2 ceph-cluster]$ sudo yum -y install deltarpm

[root@ceph-client ~]# yum -y install yum-utils

[root@ceph-client ~]# yum-config-manager --add-repo https://dl.fedoraproject.org/pub/epel/7/x86_64/

[root@ceph-client ~]# yum -y install epel-release --nogpgcheck

[root@ceph-client ~]# cat <<END >>/etc/yum.repos.d/ceph.repo

[Ceph]

name=Ceph packages for \$basearch

baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/\$basearch

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.163.com/ceph/keys/release.asc

priority=1

[Ceph-noarch]

name=Ceph noarch packages

baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/noarch

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.163.com/ceph/keys/release.asc

priority=1

[ceph-source]

name=Ceph source packages

baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/SRPMS

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.163.com/ceph/keys/release.asc

priority=1

END

[root@ceph-client ~]# ls /etc/yum.repos.d/ #### the default official repos must be present; they are combined with the EPEL repo and the NetEase (163) Ceph repo for the installation;

bak CentOS-fasttrack.repo ceph.repo

CentOS-Base.repo CentOS-Media.repo dl.fedoraproject.org_pub_epel_7_x86_64_.repo

CentOS-CR.repo CentOS-Sources.repo epel.repo

CentOS-Debuginfo.repo CentOS-Vault.repo epel-testing.repo

[root@ceph-client ~]# yum -y install yum-plugin-priorities

[root@ceph-client ~]# yum -y install ceph ceph-radosgw --nogpgcheck

Ø On the dlp management node, manage the storage nodes and register node information;

[dhhy@dlp ceph-cluster]$ pwd ## the current directory must be the ceph working directory

/home/dhhy/ceph-cluster

[dhhy@dlp ceph-cluster]$ ssh-keygen -t rsa ## the management node administers the mon nodes remotely, so create a key pair and copy the public key to them

[dhhy@dlp ceph-cluster]$ ssh-copy-id dhhy@dlp

[dhhy@dlp ceph-cluster]$ ssh-copy-id dhhy@node1

[dhhy@dlp ceph-cluster]$ ssh-copy-id dhhy@node2

[dhhy@dlp ceph-cluster]$ ssh-copy-id root@ceph-client

[dhhy@dlp ceph-cluster]$ cat <<END >>/home/dhhy/.ssh/config

Host dlp

Hostname dlp

User dhhy

Host node1

Hostname node1

User dhhy

Host node2

Hostname node2

User dhhy

END

[dhhy@dlp ceph-cluster]$ chmod 644 /home/dhhy/.ssh/config

[dhhy@dlp ceph-cluster]$ ceph-deploy new node1 node2 ## initialize the nodes

[dhhy@dlp ceph-cluster]$ cat <<END >>/home/dhhy/ceph-cluster/ceph.conf

osd pool default size = 2

END

[dhhy@dlp ceph-cluster]$ ceph-deploy install node1 node2 ## install ceph

Ø Configure the Ceph mon monitor process;

[dhhy@dlp ceph-cluster]$ ceph-deploy mon create-initial ## initialize the mon nodes

Note: the configuration files of the node hosts live under /etc/ceph/ and are synchronized automatically from the configuration file on the dlp management node;

Ø Configure the Ceph osd storage;

Configure the osd0 storage device on node1:

[dhhy@dlp ceph-cluster]$ ssh dhhy@node1 ## create the directory where the osd node stores its data

[dhhy@node1 ~]$ sudo fdisk /dev/sdb

n p enter enter enter p w

[dhhy@node1 ~]$ sudo partx -a /dev/sdb

[dhhy@node1 ~]$ sudo mkfs -t xfs /dev/sdb1

[dhhy@node1 ~]$ sudo mkdir /var/local/osd0

[dhhy@node1 ~]$ sudo vi /etc/fstab

/dev/sdb1 /var/local/osd0 xfs defaults 0 0

:wq

[dhhy@node1 ~]$ sudo mount -a

[dhhy@node1 ~]$ sudo chmod 777 /var/local/osd0

[dhhy@node1 ~]$ sudo chown ceph:ceph /var/local/osd0/

[dhhy@node1 ~]$ ls -ld /var/local/osd0/

[dhhy@node1 ~]$ df -hT

[dhhy@node1 ~]$ exit

Configure the osd1 storage device on node2:

[dhhy@dlp ceph-cluster]$ ssh dhhy@node2

[dhhy@node2 ~]$ sudo fdisk /dev/sdb

n p enter enter enter p w

[dhhy@node2 ~]$ sudo partx -a /dev/sdb

[dhhy@node2 ~]$ sudo mkfs -t xfs /dev/sdb1

[dhhy@node2 ~]$ sudo mkdir /var/local/osd1

[dhhy@node2 ~]$ sudo vi /etc/fstab

/dev/sdb1 /var/local/osd1 xfs defaults 0 0

:wq

[dhhy@node2 ~]$ sudo mount -a

[dhhy@node2 ~]$ sudo chmod 777 /var/local/osd1

[dhhy@node2 ~]$ sudo chown ceph:ceph /var/local/osd1/

[dhhy@node2 ~]$ ls -ld /var/local/osd1/

[dhhy@node2 ~]$ df -hT

[dhhy@node2 ~]$ exit

Register the storage nodes from the dlp management node:

[dhhy@dlp ceph-cluster]$ ceph-deploy osd prepare node1:/var/local/osd0 node2:/var/local/osd1 ## create the osd nodes and specify where each node stores its data

[dhhy@dlp ceph-cluster]$ chmod +r /home/dhhy/ceph-cluster/ceph.client.admin.keyring

[dhhy@dlp ceph-cluster]$ ceph-deploy osd activate node1:/var/local/osd0 node2:/var/local/osd1

## activate the osd nodes

[dhhy@dlp ceph-cluster]$ ceph-deploy admin node1 node2 ## copy the admin key file to the node hosts

[dhhy@dlp ceph-cluster]$ sudo cp /home/dhhy/ceph-cluster/ceph.client.admin.keyring /etc/ceph/

[dhhy@dlp ceph-cluster]$ sudo cp /home/dhhy/ceph-cluster/ceph.conf /etc/ceph/

[dhhy@dlp ceph-cluster]$ ls /etc/ceph/

ceph.client.admin.keyring ceph.conf rbdmap

[dhhy@dlp ceph-cluster]$ ceph quorum_status --format json-pretty ## view Ceph cluster details

{

"election_epoch": 4,

"quorum": [

0,

1

],

"quorum_names": [

"node1",

"node2"

],

"quorum_leader_name": "node1",

"monmap": {

"epoch": 1,

"fsid": "dc679c6e-29f5-4188-8b60-e9eada80d677",

"modified": "2018-06-02 23:54:34.033254",

"created": "2018-06-02 23:54:34.033254",

"mons": [

{

"rank": 0,

"name": "node1",

"addr": "192.168.100.102:6789\/0"

},

{

"rank": 1,

"name": "node2",

"addr": "192.168.100.103:6789\/0"

}

]

}

}

Ø Verify and view the Ceph cluster status:

[dhhy@dlp ceph-cluster]$ ceph health

HEALTH_OK

[dhhy@dlp ceph-cluster]$ ceph -s ## view the Ceph cluster status

cluster 24fb6518-8539-4058-9c8e-d64e43b8f2e2

health HEALTH_OK

monmap e1: 2 mons at {node1=192.168.100.102:6789/0,node2=192.168.100.103:6789/0}

election epoch 6, quorum 0,1 node1,node2

osdmap e10: 2 osds: 2 up, 2 in

flags sortbitwise,require_jewel_osds

pgmap v20: 64 pgs, 1 pools, 0 bytes data, 0 objects

10305 MB used, 30632 MB / 40938 MB avail ## used, remaining, and total capacity

64 active+clean

[dhhy@dlp ceph-cluster]$ ceph osd tree

ID WEIGHT TYPE NAME UP/DOWN REWEIGHT PRIMARY-AFFINITY

-1 0.03897 root default

-2 0.01949 host node1

0 0.01949 osd.0 up 1.00000 1.00000

-3 0.01949 host node2

1 0.01949 osd.1 up 1.00000 1.00000

[dhhy@dlp ceph-cluster]$ ssh dhhy@node1 ## verify node1's listening ports, configuration file, and disk usage

[dhhy@node1 ~]$ df -hT |grep sdb1

/dev/sdb1 xfs 20G 5.1G 15G 26% /var/local/osd0

[dhhy@node1 ~]$ du -sh /var/local/osd0/

5.1G /var/local/osd0/

[dhhy@node1 ~]$ ls /var/local/osd0/

activate.monmap active ceph_fsid current fsid journal keyring magic ready store_version superblock systemd type whoami

[dhhy@node1 ~]$ ls /etc/ceph/

ceph.client.admin.keyring ceph.conf rbdmap tmppVBe_2

[dhhy@node1 ~]$ cat /etc/ceph/ceph.conf

[global]

fsid = 0fcdfa46-c8b7-43fc-8105-1733bce3bfeb

mon_initial_members = node1, node2

mon_host = 192.168.100.102,192.168.100.103

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

osd pool default size = 2

[dhhy@node1 ~]$ exit

[dhhy@dlp ceph-cluster]$ ssh dhhy@node2 ## verify node2's listening ports, configuration file, and disk usage

[dhhy@node2 ~]$ df -hT |grep sdb1

/dev/sdb1 xfs 20G 5.1G 15G 26% /var/local/osd1

[dhhy@node2 ~]$ du -sh /var/local/osd1/

5.1G /var/local/osd1/

[dhhy@node2 ~]$ ls /var/local/osd1/

activate.monmap active ceph_fsid current fsid journal keyring magic ready store_version superblock systemd type whoami

[dhhy@node2 ~]$ ls /etc/ceph/

ceph.client.admin.keyring ceph.conf rbdmap tmpmB_BTa

[dhhy@node2 ~]$ cat /etc/ceph/ceph.conf

[global]

fsid = 0fcdfa46-c8b7-43fc-8105-1733bce3bfeb

mon_initial_members = node1, node2

mon_host = 192.168.100.102,192.168.100.103

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

osd pool default size = 2

[dhhy@node2 ~]$ exit
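The capacity line in the `ceph -s` output above reports raw space across both OSDs. As a rough sketch, the figures can be pulled out and turned into a usage percentage as follows; `parse_capacity` and `used_percent` are hypothetical helper names, and the field positions assume exactly the Jewel-style line shown above.

```shell
#!/usr/bin/env bash
# Pull "used" and "total" (in MB) out of a Jewel-style capacity line.
# Input:  "10305 MB used, 30632 MB / 40938 MB avail"
# Output: "10305 40938"
parse_capacity() {
    awk '{ print $1, $7 }' <<< "$1"
}

# Percentage of raw capacity in use, as an integer (rounded down).
used_percent() {
    local used_mb=$1 total_mb=$2
    echo $(( used_mb * 100 / total_mb ))
}

line="10305 MB used, 30632 MB / 40938 MB avail"
read -r used total <<< "$(parse_capacity "$line")"
echo "raw usage: $(used_percent "$used" "$total")%"   # prints "raw usage: 25%"
```

Since this cluster sets `osd pool default size = 2`, every object is stored twice, so the space available for user data is roughly half of the raw total.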

Ø Configure the Ceph mds metadata process;

[dhhy@dlp ceph-cluster]$ ceph-deploy mds create node1

[dhhy@dlp ceph-cluster]$ ssh dhhy@node1

[dhhy@node1 ~]$ netstat -utpln |grep 68

(No info could be read for "-p": geteuid()=1000 but you should be root.)

tcp 0 0 0.0.0.0:6800 0.0.0.0:* LISTEN -

tcp 0 0 0.0.0.0:6801 0.0.0.0:* LISTEN -

tcp 0 0 0.0.0.0:6802 0.0.0.0:* LISTEN -

tcp 0 0 0.0.0.0:6803 0.0.0.0:* LISTEN -

tcp 0 0 0.0.0.0:6804 0.0.0.0:* LISTEN -

tcp 0 0 192.168.100.102:6789 0.0.0.0:* LISTEN -

[dhhy@node1 ~]$ exit

Ø Configure the Ceph client;

[dhhy@dlp ceph-cluster]$ ceph-deploy install ceph-client ## when prompted for a password, enter dhhy, and type it carefully;

[dhhy@dlp ceph-cluster]$ ceph-deploy admin ceph-client

[dhhy@dlp ceph-cluster]$ ssh root@ceph-client

[root@ceph-client ~]# chmod +r /etc/ceph/ceph.client.admin.keyring

[root@ceph-client ~]# exit

[dhhy@dlp ceph-cluster]$ ceph osd pool create cephfs_data 128 ## data storage pool

pool 'cephfs_data' created

[dhhy@dlp ceph-cluster]$ ceph osd pool create cephfs_metadata 128 ## metadata storage pool

pool 'cephfs_metadata' created

[dhhy@dlp ceph-cluster]$ ceph fs new cephfs cephfs_data cephfs_metadata ## create the file system; note that the syntax is ceph fs new <fs_name> <metadata_pool> <data_pool>, so as written cephfs_data becomes the metadata pool, which the output below confirms

new fs with metadata pool 1 and data pool 2

[dhhy@dlp ceph-cluster]$ ceph fs ls ## list the file systems

name: cephfs, metadata pool: cephfs_data, data pools: [cephfs_metadata ]

[dhhy@dlp ceph-cluster]$ ceph -s

cluster 24fb6518-8539-4058-9c8e-d64e43b8f2e2

health HEALTH_WARN

clock skew detected on mon.node2

too many PGs per OSD (320 > max 300)

Monitor clock skew detected

monmap e1: 2 mons at {node1=192.168.100.102:6789/0,node2=192.168.100.103:6789/0}

election epoch 6, quorum 0,1 node1,node2

fsmap e5: 1/1/1 up {0=node1=up:active}

osdmap e17: 2 osds: 2 up, 2 in

flags sortbitwise,require_jewel_osds

pgmap v54: 320 pgs, 3 pools, 4678 bytes data, 24 objects

10309 MB used, 30628 MB / 40938 MB avail

320 active+clean
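The "too many PGs per OSD (320 > max 300)" warning above follows from simple arithmetic: each PG is stored once per replica, and those copies are spread over the OSDs. A minimal sketch, assuming every pool uses the replica size of 2 configured earlier (`pgs_per_osd` is a hypothetical helper name):

```shell
#!/usr/bin/env bash
# PG copies hosted per OSD = sum(pg_num * replica size) / number of OSDs.
# Arguments: <num_osds> <replica_size> <pg_num>...
pgs_per_osd() {
    local osds=$1 size=$2 total=0 pg
    shift 2
    for pg in "$@"; do
        total=$(( total + pg * size ))
    done
    echo $(( total / osds ))
}

pgs_per_osd 2 2 64 128 128   # the pools in this walkthrough: prints 320
pgs_per_osd 2 2 64 32 32     # hypothetical smaller pg_num for the two CephFS pools: prints 128
```

With only two OSDs, the 64 PGs of the initial pool plus two pools of 128 PGs each already exceed the limit of 300 quoted in the warning; choosing a smaller `pg_num` when creating the CephFS pools would keep a cluster of this size under it.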

Ø Test storage from the Ceph client;

[dhhy@dlp ceph-cluster]$ ssh root@ceph-client

[root@ceph-client ~]# mkdir /mnt/ceph

[root@ceph-client ~]# grep key /etc/ceph/ceph.client.admin.keyring |awk '{print $3}' >>/etc/ceph/admin.secret

[root@ceph-client ~]# cat /etc/ceph/admin.secret

AQCd/x9bsMqKFBAAZRNXpU5QstsPlfe1/FvPtQ==

[root@ceph-client ~]# mount -t ceph 192.168.100.102:6789:/ /mnt/ceph/ -o name=admin,secretfile=/etc/ceph/admin.secret

[root@ceph-client ~]# df -hT |grep ceph

192.168.100.102:6789:/ ceph 40G 11G 30G 26% /mnt/ceph

[root@ceph-client ~]# dd if=/dev/zero of=/mnt/ceph/1.file bs=1G count=1

1+0 records in

1+0 records out

1073741824 bytes (1.1 GB) copied, 14.2938 s, 75.1 MB/s

[root@ceph-client ~]# ls /mnt/ceph/

1.file

[root@ceph-client ~]# df -hT |grep ceph

192.168.100.102:6789:/ ceph 40G 13G 28G 33% /mnt/ceph

[root@ceph-client ~]# mkdir /mnt/ceph1

[root@ceph-client ~]# mount -t ceph 192.168.100.103:6789:/ /mnt/ceph1/ -o name=admin,secretfile=/etc/ceph/admin.secret

[root@ceph-client ~]# df -hT |grep ceph

192.168.100.102:6789:/ ceph 40G 15G 26G 36% /mnt/ceph

192.168.100.103:6789:/ ceph 40G 15G 26G 36% /mnt/ceph1

[root@ceph-client ~]# ls /mnt/ceph1/

1.file 2.file
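The secret-file step above extracts the key with grep and awk and appends it, so running it twice leaves two copies of the key in the file. The sketch below shows a slightly stricter variant; `extract_key` is a hypothetical helper, and the keyring used in the demo is made up rather than taken from a real cluster.

```shell
#!/usr/bin/env bash
# Print the secret from a keyring file of the form
#   [client.admin]
#       key = <secret>
# Matches only a line whose first field is exactly "key".
extract_key() {
    awk '$1 == "key" && $2 == "=" { print $3; exit }' "$1"
}

# Demo with a made-up keyring; the value below is a placeholder, not a real key.
keyring=$(mktemp)
printf '[client.admin]\n\tkey = PLACEHOLDERKEY==\n' > "$keyring"

secret_file=$(mktemp)                      # mktemp creates the file with mode 0600
extract_key "$keyring" > "$secret_file"    # ">" overwrites instead of appending
cat "$secret_file"
```

Writing with `>` keeps the secret file at exactly one line however often the step is repeated, and keeping the file readable only by its owner is prudent because it holds the cluster's admin key.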

Ø Troubleshooting:

1. If something goes wrong during configuration and you need to recreate the cluster or reinstall Ceph, first clear the data in the Ceph cluster with the following commands;

[dhhy@dlp ceph-cluster]$ ceph-deploy purge node1 node2

[dhhy@dlp ceph-cluster]$ ceph-deploy purgedata node1 node2

[dhhy@dlp ceph-cluster]$ ceph-deploy forgetkeys && rm ceph.*

2. When the dlp node installs Ceph on the node hosts and the client, the yum installation may time out. This is mostly caused by network problems; running the installation command a few more times usually gets through;

3. When running ceph-deploy on the dlp node to manage node configuration, the current directory must be /home/dhhy/ceph-cluster/; otherwise it reports that the ceph.conf configuration file cannot be found;

4. On the osd nodes, the /var/local/osd*/ directories that hold the data must have permission 777, and their owner and group must be ceph;

5. The following problem can occur while installing Ceph from the dlp management node

Solution:

1. Reinstall the epel-release package with yum on node1 or node2;

2. If that does not solve it, download the package and install it locally with the following command;

6. If the main configuration file /home/dhhy/ceph-cluster/ceph.conf on the dlp management node has changed, it must be synchronized to the node hosts with the following command:

After a node receives the configuration file, its processes need to be restarted:

7. When viewing the Ceph cluster status on the dlp management node, the following appears; the cause is inconsistent time;

Solution: restart the ntpd time service on the dlp node and have the node hosts synchronize their time again, as shown below:

8. When managing the node hosts from the dlp management node, the working directory must be /home/dhhy/ceph-cluster/; otherwise it reports that the ceph.conf main configuration file cannot be found;

边栏推荐

- Mongodb introduction and typical application scenarios

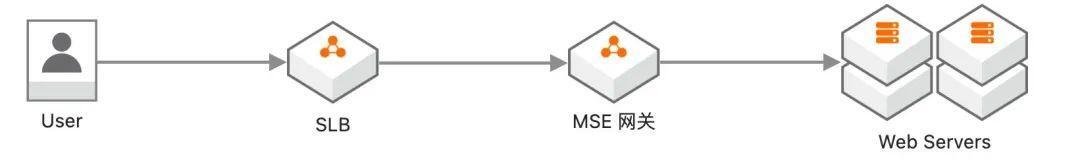

- 大促场景下,如何做好网关高可用防护

- Can Oracle's CTAs bring constraints and other attributes to the new table?

- 爱数课实验 | 第七期-基于随机森林的金融危机分析

- Leetcode 1381. Design a stack that supports incremental operations

- 灵活的IP网络测试工具——— X-Launch

- 实现字符串MyString

- mime. Type file content

- Is it safe to open an account and buy stocks on the Internet? New to stocks, no guidance

- It took me 6 months to complete the excellent graduation project of undergraduate course. What have I done?

猜你喜欢

SQL audit platform permission module introduction and account creation tutorial

A set of system to reduce 10 times the traffic pressure in crowded areas

Oracle architecture summary

通过CE修改器修改大型网络游戏

大促场景下,如何做好网关高可用防护

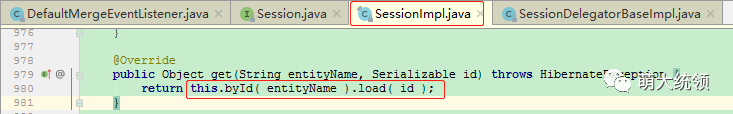

JPA踩坑系列之save方法

Graduation design of police report convenience service platform based on wechat applet

行业案例|从零售之王看银行数字化转型的运营之道

体验Navicat Premium 16,无限重置试用14天方法(附源码)

![[STL programming] [common competition] [Part 1]](/img/ce/4d489e62d6c8d16134262b65d4b0d9.png)

[STL programming] [common competition] [Part 1]

随机推荐

Cerebral cortex: predicting children's mathematical skills from task state and resting state brain function connections

SQL audit platform permission module introduction and account creation tutorial

分享下我是如何做笔记的

众昂矿业:新能源或成萤石最大应用领域

VMware vSphere esxi 7.0 installation tutorial

Installing services for NFS

通过CE修改器修改大型网络游戏

Is it safe to open an account and buy stocks on the Internet? New to stocks, no guidance

请教CMS小程序首页的幻灯片在哪里设置?

Experiment of love number lesson | phase V - emotion judgment of commodity review based on machine learning method

Explore gaussdb and listen to what customers and partners say

What is a low code development platform? Why is it so hot now?

实际工作中用到的shell命令 - sed

实现字符串MyString

Character interception triplets of data warehouse: substrb, substr, substring

OpenSSL 编程 二:搭建 CA

MySQL client tools are recommended. I can't imagine that it is best to use Juran

送你12个常用函数公式,用过的都说好

A set of system to reduce 10 times the traffic pressure in crowded areas

Galaxy Kirin system LAN file sharing tutorial