Pytorch (network model)

2022-06-26 05:40:00 【Yuetun】

The neural network skeleton: nn.Module

Example from the official documentation:

import torch.nn as nn
import torch.nn.functional as F

class Model(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 20, 5)
        self.conv2 = nn.Conv2d(20, 20, 5)

    def forward(self, x):
        x = F.relu(self.conv1(x))  # convolution followed by a nonlinearity
        return F.relu(self.conv2(x))

Practice

import torch
import torch.nn as nn
import torch.nn.functional as F

class Dun(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 20, 5)
        self.conv2 = nn.Conv2d(20, 20, 5)

    def forward(self, x):
        return x + 1

dun = Dun()
x = torch.tensor(1.0)  # create a scalar tensor
output = dun(x)  # calling the module invokes forward()
print(output)  # tensor(2.)

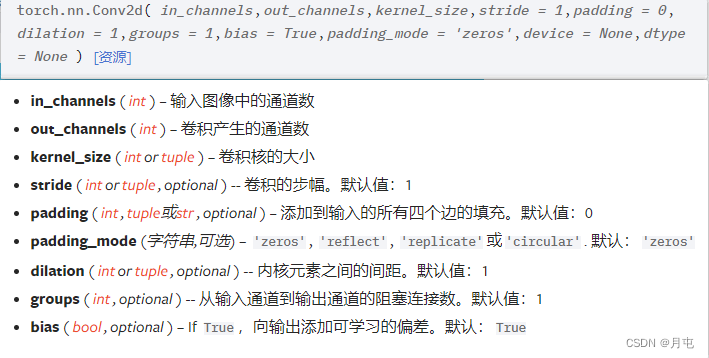
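To make the role of `forward` concrete, here is a minimal sketch that runs the same two convolution layers as the official `Model` example on a random input. The class name `TwoConv` and the 28x28 input size are assumptions chosen only for illustration.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class TwoConv(nn.Module):  # hypothetical name; same layers as Model above
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 20, 5)
        self.conv2 = nn.Conv2d(20, 20, 5)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        return F.relu(self.conv2(x))

net = TwoConv()
x = torch.randn(1, 1, 28, 28)  # assumed input: one 1-channel 28x28 image
y = net(x)                     # net(x) dispatches to net.forward(x)
print(y.shape)                 # each unpadded 5x5 convolution trims 4 pixels: 28 -> 24 -> 20
```

Calling `net(x)` rather than `net.forward(x)` is the usual convention, since the call also runs any hooks registered on the module.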

Convolution layer

import torch
import torch.nn.functional as F  # needed for F.conv2d below

input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]])
kernel = torch.tensor([[1, 2, 1],
                       [0, 1, 0],
                       [2, 1, 0]])
print(input.shape)  # torch.Size([5, 5])
print(kernel.shape)  # torch.Size([3, 3])

# F.conv2d expects 4-D tensors: (batch, channels, height, width)
input = torch.reshape(input, (1, 1, 5, 5))
kernel = torch.reshape(kernel, (1, 1, 3, 3))
print(input)
print(kernel)
print(input.shape)
print(kernel.shape)

# Convolution with stride 1, then stride 2
out = F.conv2d(input, kernel, stride=1)
print(out)
out = F.conv2d(input, kernel, stride=2)
print(out)

# Zero padding of one pixel on every side
out = F.conv2d(input, kernel, stride=1, padding=1)
print(out)

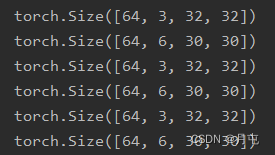
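The effect of `stride` and `padding` on the output size can be checked with the standard formula `floor((n + 2*padding - k) / stride) + 1`. The sketch below is an illustration rather than part of the original notes: the helper `conv_out_size` is a hypothetical name, and it assumes a dilation of 1.

```python
import torch
import torch.nn.functional as F

def conv_out_size(n, k, stride=1, padding=0):
    """Output length along one spatial dimension (dilation 1 assumed)."""
    return (n + 2 * padding - k) // stride + 1

x = torch.arange(25, dtype=torch.float32).reshape(1, 1, 5, 5)
w = torch.ones(1, 1, 3, 3)

sizes = []
# The same three settings as in the example above
for stride, padding in [(1, 0), (2, 0), (1, 1)]:
    out = F.conv2d(x, w, stride=stride, padding=padding)
    expected = conv_out_size(5, 3, stride, padding)
    sizes.append((out.shape[-1], expected))
    print(stride, padding, tuple(out.shape), expected)
```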

When the output has more than one channel

import torch
import torchvision
from torch import nn
from torch.nn import Conv2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("./data_set_test", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=64)

# Module wrapping a single convolution layer
class Dun(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

    def forward(self, x):
        return self.conv1(x)

dun = Dun()
print(dun)

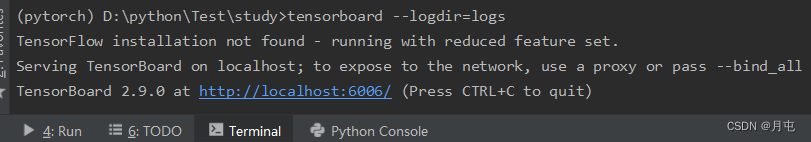

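writer = SummaryWriter("./logs")
step = 0
# Apply the convolution to every batch and log the images
for data in dataloader:
    img, target = data
    output = dun(img)
    print(img.shape)     # torch.Size([64, 3, 32, 32]) for a full batch
    print(output.shape)  # torch.Size([64, 6, 30, 30]) for a full batch
    writer.add_images("input", img, step)
    # add_images expects 3-channel images, so fold the 6 channels into the
    # batch dimension; -1 lets PyTorch infer that dimension from the others
    output = torch.reshape(output, (-1, 3, 30, 30))
    writer.add_images("output", output, step)
    step += 1
writer.close()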
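The `-1` in the reshape is easy to misread, so here is a small sketch of what it does to the shapes. It uses a random tensor as a stand-in for one CIFAR10 batch (an assumption, to avoid downloading the dataset).

```python
import torch
from torch import nn

conv = nn.Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)
batch = torch.rand(64, 3, 32, 32)  # stand-in for one batch of 32x32 RGB images
out = conv(batch)
folded = torch.reshape(out, (-1, 3, 30, 30))

print(out.shape)     # torch.Size([64, 6, 30, 30])
print(folded.shape)  # torch.Size([128, 3, 30, 30])
```

Folding 6 channels into groups of 3 doubles the batch dimension, so each input image shows up as two pseudo-RGB images in TensorBoard. This is a display trick only; it does not correspond to a meaningful colour image.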

Pooling layer

Effect: pooling downsamples its input, much like converting a high-definition video to a lower resolution.

import torch
from torch import nn
from torch.nn import MaxPool2d

input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32)
input = torch.reshape(input, (-1, 1, 5, 5))

class Dun(nn.Module):
    def __init__(self):
        super().__init__()
        # ceil_mode=True keeps partial windows at the border; False drops them
        self.maxpool = MaxPool2d(kernel_size=3, ceil_mode=True)

    def forward(self, input):  # parameter was misspelled "inut", so the global was used
        return self.maxpool(input)

dun = Dun()
out = dun(input)
print(out)
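The difference between the two `ceil_mode` settings is easiest to see side by side. The following sketch reuses the same 5x5 input; the variable names `pool_ceil` and `pool_floor` are introduced here for illustration.

```python
import torch
from torch.nn import MaxPool2d

x = torch.tensor([[1, 2, 0, 3, 1],
                  [0, 1, 2, 3, 1],
                  [1, 2, 1, 0, 0],
                  [5, 2, 3, 1, 1],
                  [2, 1, 0, 1, 1]], dtype=torch.float32).reshape(1, 1, 5, 5)

# stride defaults to kernel_size, so the windows do not overlap
pool_ceil = MaxPool2d(kernel_size=3, ceil_mode=True)
pool_floor = MaxPool2d(kernel_size=3, ceil_mode=False)

out_ceil = pool_ceil(x)    # partial border windows are kept
out_floor = pool_floor(x)  # only the single complete 3x3 window is used
print(out_ceil)   # 2x2 output: [[2., 3.], [5., 1.]]
print(out_floor)  # 1x1 output: [[2.]]
```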

Pooling images

import torch
import torchvision
from torch import nn
from torch.nn import MaxPool2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("./data_set_test", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=64)

class Dun(nn.Module):
    def __init__(self):
        super().__init__()
        # ceil_mode=True keeps partial windows at the border; False drops them
        self.maxpool = MaxPool2d(kernel_size=3, ceil_mode=True)

    def forward(self, input):
        return self.maxpool(input)

dun = Dun()
step = 0
writer = SummaryWriter("logs")
for data in dataloader:
    img, target = data
    writer.add_images("input", img, step)
    output = dun(img)  # pooling keeps the 3 channels, so no reshape is needed
    writer.add_images("output", output, step)
    step += 1
writer.close()

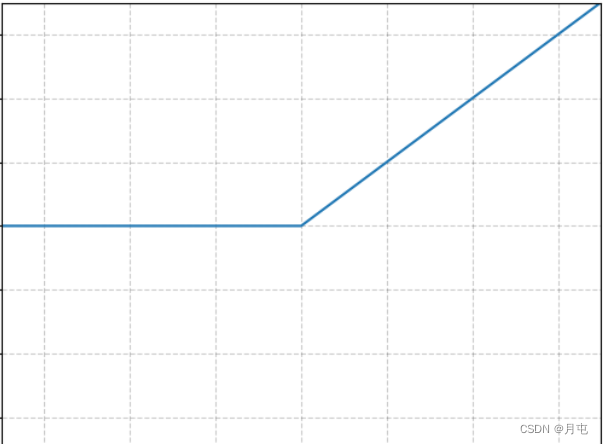

Nonlinear activation

Nonlinear activations introduce nonlinearity into the network, which lets it model relationships that a stack of purely linear layers cannot.

ReLU

import torch
from torch import nn
from torch.nn import ReLU

input = torch.tensor([[1, -0.5], [-1, 3]])
input = torch.reshape(input, (-1, 1, 2, 2))
print(input.shape)

class Dun(nn.Module):
    def __init__(self):
        super().__init__()
        self.relu1 = ReLU()

    def forward(self, input):
        return self.relu1(input)

dun = Dun()
output = dun(input)
print(output)

Sigmoid

import torch
import torchvision
from torch import nn
from torch.nn import ReLU, Sigmoid
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("./data_set_test", train=False, download=True,
                                       transform=torchvision.transforms.ToTensor())
dataloader = DataLoader(dataset, batch_size=64)

class Dun(nn.Module):
    def __init__(self):
        super().__init__()
        self.relu1 = ReLU()
        self.sigmoid = Sigmoid()

    def forward(self, input):
        return self.sigmoid(input)

dun = Dun()
writer = SummaryWriter("./logs")
step = 0
for data in dataloader:
    img, target = data
    writer.add_images("input", img, global_step=step)
    output = dun(img)
    writer.add_images("output", output, global_step=step)
    step += 1
writer.close()
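For reference, both activations are simple elementwise functions. The sketch below compares the library modules against hand-written formulas on the small tensor from the ReLU example; the `*_manual` names are introduced here for illustration.

```python
import torch
from torch import nn

x = torch.tensor([[1.0, -0.5], [-1.0, 3.0]])

relu_out = nn.ReLU()(x)
sigmoid_out = nn.Sigmoid()(x)

# Hand-written equivalents
relu_manual = torch.clamp(x, min=0)       # max(x, 0)
sigmoid_manual = 1 / (1 + torch.exp(-x))  # 1 / (1 + e^(-x))

print(relu_out)     # negative entries become 0
print(sigmoid_out)  # every entry is squashed into (0, 1)
```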

Linear layer

import torch
import torchvision
from torch import nn
from torch.nn import Linear
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("./data_set_test", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
# drop_last=True discards the final partial batch (16 images), which would
# otherwise flatten to fewer than 196608 values and break the Linear layer
dataloader = DataLoader(dataset, batch_size=64, drop_last=True)

class Dun(nn.Module):
    def __init__(self):
        super().__init__()
        self.linear = Linear(196608, 10)  # 196608 = 64 * 3 * 32 * 32

    def forward(self, input):
        return self.linear(input)

dun = Dun()
for data in dataloader:
    img, target = data
    print(img.shape)  # torch.Size([64, 3, 32, 32])
    # input=torch.reshape(img,(1,1,1,-1))
    input = torch.flatten(img)  # flatten everything into one row, replacing the line above
    print(input.shape)  # torch.Size([196608])
    output = dun(input)
    print(output.shape)  # torch.Size([10])
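Flattening the whole batch into a single row ties the layer to one batch size. A common alternative, sketched here as an option rather than as the original author's method, is to flatten only the per-image dimensions with `start_dim=1`, so the linear layer sees 3 * 32 * 32 = 3072 features per image and works for any batch size. The random tensor stands in for a batch of CIFAR10 images.

```python
import torch
from torch import nn

batch = torch.rand(16, 3, 32, 32)         # e.g. a short final batch of 16 images
flat = torch.flatten(batch, start_dim=1)  # keep the batch dimension
linear = nn.Linear(3 * 32 * 32, 10)
out = linear(flat)

print(flat.shape)  # torch.Size([16, 3072])
print(out.shape)   # torch.Size([16, 10])
```

With this layout each image gets its own row of 10 scores, which is the shape that classification losses such as `nn.CrossEntropyLoss` expect.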

Normalization layer

Batch normalization can speed up neural network training.

import torch
from torch import nn

# With learnable parameters
m = nn.BatchNorm2d(100)
# Without learnable parameters
m = nn.BatchNorm2d(100, affine=False)
input = torch.randn(20, 100, 35, 45)
output = m(input)
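What `BatchNorm2d` does in training mode can be checked numerically: each channel of the output should have roughly zero mean and unit variance across the batch and spatial dimensions. The sketch below is an illustration; the scale and shift applied to the random input (3 and 5) are arbitrary assumptions.

```python
import torch
from torch import nn

torch.manual_seed(0)
bn = nn.BatchNorm2d(100, affine=False)
x = torch.randn(20, 100, 35, 45) * 3 + 5  # channels start with mean ~5, std ~3

y = bn(x)  # a freshly built module is in training mode, so batch statistics are used

# Per-channel statistics over batch, height and width
mean = y.mean(dim=(0, 2, 3))
var = y.var(dim=(0, 2, 3), unbiased=False)
print(mean.abs().max())      # close to 0
print(var.min(), var.max())  # both close to 1
```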

Other layer types include recurrent layers, Transformer layers, and linear layers.

A simple network model

import torch
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.tensorboard import SummaryWriter

class Dun(nn.Module):
    def __init__(self):
        super().__init__()
        # Option 2: wrap all layers in a Sequential container
        self.model1 = Sequential(Conv2d(3, 32, 5, padding=2),
                                 MaxPool2d(2),
                                 Conv2d(32, 32, 5, padding=2),
                                 MaxPool2d(2),
                                 Conv2d(32, 64, 5, padding=2),
                                 MaxPool2d(2),
                                 Flatten(),
                                 Linear(1024, 64),
                                 Linear(64, 10))
        # Option 1: declare each layer separately
        # self.conv1=Conv2d(3,32,5,padding=2)
        # self.maxpool1=MaxPool2d(2)
        # self.conv2=Conv2d(32,32,5,padding=2)
        # self.maxpool2=MaxPool2d(2)
        # self.conv3=Conv2d(32,64,5,padding=2)
        # self.maxpool3=MaxPool2d(2)
        # self.flatten=Flatten()
        # self.linear1=Linear(1024,64)
        # self.linear2=Linear(64,10)

    def forward(self, x):
        x = self.model1(x)
        return x

dun = Dun()
# Test the network with a dummy batch
input = torch.ones((64, 3, 32, 32))
print(dun(input).shape)  # torch.Size([64, 10])
writer = SummaryWriter("./logs")
writer.add_graph(dun, input)
writer.close()
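The `1024` passed to the first `Linear` layer follows from the shapes produced by the preceding layers: 64 channels of 4x4 feature maps. A quick way to confirm this, sketched below, is to push a dummy batch through the `Sequential` one layer at a time and record each output shape.

```python
import torch
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential

model = Sequential(
    Conv2d(3, 32, 5, padding=2), MaxPool2d(2),
    Conv2d(32, 32, 5, padding=2), MaxPool2d(2),
    Conv2d(32, 64, 5, padding=2), MaxPool2d(2),
    Flatten(), Linear(1024, 64), Linear(64, 10),
)

x = torch.ones((64, 3, 32, 32))
shapes = []
for layer in model:  # Sequential is iterable over its sub-modules
    x = layer(x)
    shapes.append(tuple(x.shape))
    print(type(layer).__name__, tuple(x.shape))
```

Each padded 5x5 convolution preserves the spatial size and each `MaxPool2d(2)` halves it (32, 16, 8, 4), so `Flatten` yields 64 * 4 * 4 = 1024 features per image.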

Loss function

L1Loss, MSELoss

import torch
from torch.nn import L1Loss
from torch import nn

input = torch.tensor([1, 2, 3], dtype=torch.float32)
target = torch.tensor([1, 2, 5], dtype=torch.float32)

# reduction can be "none", "mean" or "sum"; the default is "mean"
loss = L1Loss(reduction="sum")
print(loss(input, target))  # tensor(2.)

loss_mse = nn.MSELoss()
print(loss_mse(input, target))  # tensor(1.3333)
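Both losses reduce to simple arithmetic on the elementwise difference, which the following sketch verifies by hand for the same two tensors. The names `pred` and `diff` are introduced here for illustration.

```python
import torch
from torch import nn

pred = torch.tensor([1, 2, 3], dtype=torch.float32)
target = torch.tensor([1, 2, 5], dtype=torch.float32)
diff = pred - target  # [0, 0, -2]

l1_sum = nn.L1Loss(reduction="sum")(pred, target)
l1_mean = nn.L1Loss()(pred, target)
mse = nn.MSELoss()(pred, target)

print(l1_sum, diff.abs().sum())    # (0 + 0 + 2) = 2
print(l1_mean, diff.abs().mean())  # 2 / 3
print(mse, (diff ** 2).mean())     # (0 + 0 + 4) / 3
```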

CrossEntropyLoss

import torch
from torch import nn

x = torch.tensor([0.1, 0.2, 0.3])  # raw scores (logits) for 3 classes
y = torch.tensor([1])  # index of the correct class
x = torch.reshape(x, (1, 3))  # (batch_size, num_classes)

loss_cross = nn.CrossEntropyLoss()
print(loss_cross(x, y))  # tensor(1.1019)
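For a single sample with logits `x` and target class `c`, cross-entropy equals `-x[c] + log(sum_j exp(x[j]))`. The sketch below checks this formula against `nn.CrossEntropyLoss` on the same numbers; the names `library` and `manual` are introduced here for illustration.

```python
import torch
from torch import nn

x = torch.tensor([[0.1, 0.2, 0.3]])  # shape (batch_size=1, num_classes=3)
y = torch.tensor([1])                # target class index

library = nn.CrossEntropyLoss()(x, y)

# -x[class] + log(sum(exp(x))), computed by hand for the single sample
manual = -x[0, y[0]] + torch.logsumexp(x[0], dim=0)

print(library, manual)
```

Note that the loss takes raw scores; applying softmax to `x` beforehand would give a different (and incorrect) value, because the log-softmax is already built into the loss.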

Using a loss function with a network

import torchvision
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("./data_set_test", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset=dataset, batch_size=1)

# Classification network
class Dun(nn.Module):
    def __init__(self):
        super().__init__()
        self.model1 = Sequential(Conv2d(3, 32, 5, padding=2),
                                 MaxPool2d(2),
                                 Conv2d(32, 32, 5, padding=2),
                                 MaxPool2d(2),
                                 Conv2d(32, 64, 5, padding=2),
                                 MaxPool2d(2),
                                 Flatten(),
                                 Linear(1024, 64),
                                 Linear(64, 10))

    def forward(self, x):
        x = self.model1(x)
        return x

dun = Dun()
loss = nn.CrossEntropyLoss()
for data in dataloader:
    img, target = data
    output = dun(img)
    print(output)
    print(target)
    result_loss = loss(output, target)  # compute the loss for this sample
    # print(result_loss)
    result_loss.backward()  # backpropagation: compute the gradients
    print("ok")

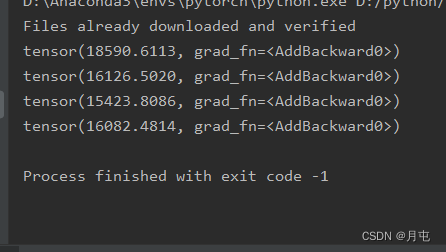
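What `backward()` actually changes is the `.grad` attribute of each parameter. The sketch below shows this on a small stand-in model (a single `nn.Linear` layer with random data, both assumptions made to keep the example short).

```python
import torch
from torch import nn

layer = nn.Linear(4, 3)         # stand-in for the classification network
x = torch.randn(2, 4)           # two samples with 4 features each
target = torch.tensor([0, 2])   # one class index per sample

grad_before = layer.weight.grad  # None: nothing has been backpropagated yet

loss = nn.CrossEntropyLoss()(layer(x), target)
loss.backward()                  # fills .grad for every parameter

grad_after = layer.weight.grad
print(grad_before)
print(grad_after.shape)          # same shape as layer.weight
```

The gradients are only computed here; nothing updates the weights until an optimizer (or manual code) uses them.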

Optimizer

import torch
import torchvision
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("./data_set_test", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset=dataset, batch_size=1)

# Classification network
class Dun(nn.Module):
    def __init__(self):
        super().__init__()
        self.model1 = Sequential(Conv2d(3, 32, 5, padding=2),
                                 MaxPool2d(2),
                                 Conv2d(32, 32, 5, padding=2),
                                 MaxPool2d(2),
                                 Conv2d(32, 64, 5, padding=2),
                                 MaxPool2d(2),
                                 Flatten(),
                                 Linear(1024, 64),
                                 Linear(64, 10))

    def forward(self, x):
        x = self.model1(x)
        return x

dun = Dun()  # instance of the classification network
loss = nn.CrossEntropyLoss()  # loss function
optim = torch.optim.SGD(dun.parameters(), lr=0.01)  # optimizer

# Train for 20 epochs
for epoch in range(20):
    running_loss = 0.0
    for data in dataloader:
        img, target = data
        output = dun(img)
        result_loss = loss(output, target)  # compute the loss
        optim.zero_grad()  # clear the gradients from the previous step
        # print(result_loss)
        result_loss.backward()  # backpropagation: compute the gradient of every parameter
        optim.step()  # update the parameters
        # .item() accumulates a plain float instead of a tensor tied to the graph
        running_loss += result_loss.item()
    print(running_loss)
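The order of calls in the inner loop (`zero_grad`, `backward`, `step`) can be tried out without downloading CIFAR10. The following is a minimal sketch on synthetic data: the tiny `nn.Linear` model, the random inputs and the batch size of 8 are all assumptions made for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)
model = nn.Linear(8, 3)                  # stand-in for the classification network
loss_fn = nn.CrossEntropyLoss()
optim = torch.optim.SGD(model.parameters(), lr=0.01)

inputs = torch.randn(32, 8)              # 32 synthetic samples
targets = torch.randint(0, 3, (32,))     # random class labels in {0, 1, 2}

before = model.weight.detach().clone()   # snapshot to confirm the weights change
running_loss = 0.0
for i in range(0, 32, 8):                # four mini-batches of 8 samples
    batch_x, batch_y = inputs[i:i + 8], targets[i:i + 8]
    loss = loss_fn(model(batch_x), batch_y)
    optim.zero_grad()                    # clear gradients from the previous step
    loss.backward()                      # compute fresh gradients
    optim.step()                         # apply the SGD update
    running_loss += loss.item()          # accumulate as a Python float
print(running_loss)
```

Skipping `zero_grad()` would make each `backward()` add to the gradients left over from earlier batches, which is rarely what is wanted.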

Network model saving and reading

Saving a model

import torch
import torchvision
from torch import nn

vgg16 = torchvision.models.vgg16(pretrained=False)

# Method 1: save the whole model (structure and parameters)
torch.save(vgg16, "vgg16_method1.pth")

# Method 2: save only the parameters (as a dictionary, the state dict)
torch.save(vgg16.state_dict(), "vgg16_method2.pth")

# Pitfall: a custom model saved with method 1
class Dun(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 64, kernel_size=3)

    def forward(self, x):
        return self.conv1(x)

dun = Dun()
torch.save(dun, "dun_method1.pth")

Model loading

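import torch

# Load a model saved with method 1
# model=torch.load("vgg16_method1.pth")
# print(model)

# Loading a method-2 file returns only the dictionary of parameters
# model=torch.load("vgg16_method2.pth")
# print(model)

# To restore the model, build the network first and load the parameters into it
import torchvision
from torch import nn

vgg16 = torchvision.models.vgg16(pretrained=False)
vgg16.load_state_dict(torch.load("vgg16_method2.pth"))
print(vgg16)

# Pitfall: to load a custom model saved with method 1,
# the model class must be defined (or imported) in the loading script
class Dun(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 64, kernel_size=3)

    def forward(self, x):
        return self.conv1(x)

# Note: on PyTorch releases where torch.load defaults to weights_only=True,
# loading a whole pickled model may additionally require weights_only=False
model = torch.load("dun_method1.pth")
print(model)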
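The parameter-only approach (method 2) can be checked end to end with a small round trip: save a model's `state_dict`, load it into a fresh instance, and confirm that both give the same output. This is a sketch under assumptions: `TinyNet` is a hypothetical stand-in for `Dun`, and the file is written to a temporary directory instead of the working directory.

```python
import os
import tempfile

import torch
from torch import nn

class TinyNet(nn.Module):  # hypothetical stand-in with the same layer as Dun
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 64, kernel_size=3)

    def forward(self, x):
        return self.conv1(x)

source = TinyNet()
path = os.path.join(tempfile.mkdtemp(), "tiny_state.pth")
torch.save(source.state_dict(), path)       # save only the parameters

restored = TinyNet()                        # fresh instance with different random weights
restored.load_state_dict(torch.load(path))  # overwrite them with the saved ones

x = torch.randn(1, 3, 8, 8)
same = torch.equal(source(x), restored(x))
print(same)
```

Because only tensors are stored, this route does not depend on pickling the class itself, which is why it sidesteps the pitfall shown above.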
