
[Point Cloud Processing Paper Reading, Frontier Edition 11] Unsupervised Point Cloud Pre-training via Occlusion Completion

2022-07-03 09:14:00 【LingbinBu】

OcCo: Unsupervised Point Cloud Pre-training via Occlusion Completion

Abstract

- Method: proposes Occlusion Completion (OcCo), a pre-training method for point clouds

- Technical details:

  - Mask the points that are occluded from the camera's viewpoint

  - Learn an encoder-decoder model to reconstruct the occluded points

  - Use the encoder weights as the initialization for downstream point cloud tasks

- Applications: object classification, part-based segmentation, and semantic segmentation

- Code: https://github.com/hansen7/OcCo (supports PyTorch and TensorFlow)

Introduction

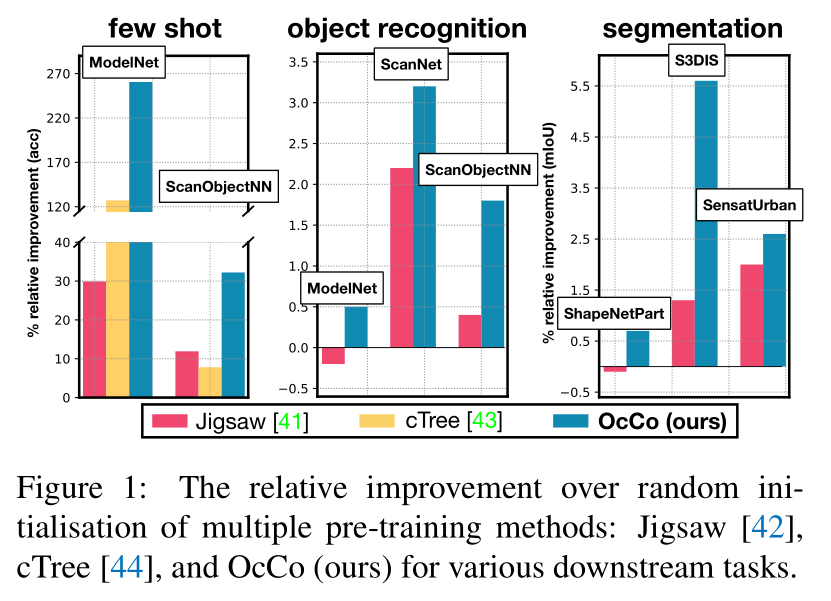

OcCo has the following properties:

- Improves sample efficiency in few-shot learning experiments

- Improves generalization in classification and segmentation tasks

- Makes it easier to find a local minimum during fine-tuning

- Learns more semantically meaningful representations, as shown by network dissection

- Maintains better classification quality under jittering, translation, and rotation transformations

Method

Let $\mathcal{P}$ be a point cloud in 3D Euclidean space, $\mathcal{P}=\left\{p_{1}, p_{2}, \ldots, p_{n}\right\}$, where each point $p_{i}$ is a vector containing its coordinates $\left(x_{i}, y_{i}, z_{i}\right)$ and other features (such as color and normal). We first describe the occlusion mapping $o(\cdot)$ and then introduce the completion model $c(\cdot)$. Pseudocode and architectural details are given in the appendix of the paper.

Generating Occlusions

Define a randomised occlusion mapping $o: \mathbb{P} \rightarrow \mathbb{P}$, where $\mathbb{P}$ is the space of point clouds. It maps a complete point cloud $\mathcal{P}$ to an occluded point cloud $\tilde{\mathcal{P}}$ by removing the points of $\mathcal{P}$ that cannot be seen from a particular viewpoint. The steps are as follows:

- Project the complete point cloud from the world coordinate system into the camera coordinate system defined by the camera viewpoint

- Determine which points are occluded from this viewpoint

- Back-project the remaining points from the camera coordinate system to the world coordinate system

Viewing the point cloud from a camera

The mapping from the 3D world coordinate system to a given camera coordinate system is defined by a pinhole camera model (Equation 1).
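Under the standard pinhole convention, Equation 1 can be written roughly as follows. Placing the principal point at $(w/2, h/2)$ inside $\mathbf{K}$ is an assumption inferred from the parameters named in this note, so treat the exact layout as a sketch and check it against the paper.

$$
\begin{bmatrix} x_{\mathrm{cam}} \\ y_{\mathrm{cam}} \\ z_{\mathrm{cam}} \end{bmatrix}
=
\underbrace{\begin{bmatrix} f & \gamma & w/2 \\ 0 & f & h/2 \\ 0 & 0 & 1 \end{bmatrix}}_{\mathbf{K}}
\;
\underbrace{\begin{bmatrix} \mathbf{R} & \mathbf{t} \end{bmatrix}}_{3 \times 4}
\begin{bmatrix} x \\ y \\ z \\ 1 \end{bmatrix}
$$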

Here $(x, y, z)$ are the coordinates of a point of the original point cloud in the world coordinate system. The camera viewpoint is determined by the rotation matrix $\mathbf{R}$ and the translation vector $\mathbf{t}$. The camera intrinsic matrix $\mathbf{K}$ is determined by the focal length $f$, the skewness $\gamma$, and the image width $w$ and height $h$. Given these parameters, the coordinates $\left(x_{\mathrm{cam}}, y_{\mathrm{cam}}, z_{\mathrm{cam}}\right)$ of the point in the camera coordinate system can be computed.
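As a rough illustration of this mapping and its inverse, the sketch below applies $\mathbf{K}[\mathbf{R} \mid \mathbf{t}]$ to a single point and then undoes it. It is not taken from the OcCo repository: the helper names (`intrinsics`, `world_to_camera`, `camera_to_world`, `rotation_about_z`) are hypothetical, and the principal point at $(w/2, h/2)$ is an assumed layout for $\mathbf{K}$. The intrinsic values are the ones listed in the pre-training setup of this note.

```python
import math

def intrinsics(f, gamma, w, h):
    """Build K. Placing the principal point at (w/2, h/2) is an assumption."""
    return [[f, gamma, w / 2.0],
            [0.0, f, h / 2.0],
            [0.0, 0.0, 1.0]]

def mat_vec(m, v):
    """Multiply a 3x3 matrix (list of rows) by a length-3 vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]

def transpose(m):
    """Transpose a 3x3 matrix; for a rotation matrix this is its inverse."""
    return [[m[j][i] for j in range(3)] for i in range(3)]

def rotation_about_z(theta):
    """Rotation by theta radians about the z-axis (one simple viewpoint choice)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def world_to_camera(p, K, R, t):
    """Apply K [R | t] to a world-frame point p = (x, y, z)."""
    rotated = mat_vec(R, p)
    shifted = [rotated[i] + t[i] for i in range(3)]
    return mat_vec(K, shifted)

def camera_to_world(q, K, R, t):
    """Invert world_to_camera: p = R^T (K^{-1} q - t)."""
    f, gamma, cx = K[0]
    cy = K[1][2]
    # K is upper triangular, so K^{-1} q follows by back-substitution.
    z = q[2]
    y = (q[1] - cy * z) / f
    x = (q[0] - gamma * y - cx * z) / f
    unshifted = [x - t[0], y - t[1], z - t[2]]
    return mat_vec(transpose(R), unshifted)

K = intrinsics(f=1000, gamma=0, w=1600, h=1200)
R = rotation_about_z(math.pi / 6)
t = [0.0, 0.0, 0.0]           # translation set to zero, as in the setup
p = [0.1, -0.2, 0.5]
q = world_to_camera(p, K, R, t)
p_back = camera_to_world(q, K, R, t)
```

Plain Python lists keep the sketch dependency-free; for a full point cloud an array library would be the natural choice.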

Determining occluded points

Each point $\left(x_{\mathrm{cam}}, y_{\mathrm{cam}}, z_{\mathrm{cam}}\right)$ is treated in two ways:

- As a 3D point $\left(x_{\mathrm{cam}}, y_{\mathrm{cam}}, z_{\mathrm{cam}}\right)$ in the camera coordinate system

- As a 2D pixel with coordinates $\left(f x_{\mathrm{cam}} / z_{\mathrm{cam}}, f y_{\mathrm{cam}} / z_{\mathrm{cam}}\right)$ and depth $z_{\mathrm{cam}}$

Consequently, if several projected points share the same pixel coordinates but have different depths, there may be an occlusion relationship among them. To determine which points are occluded, a polygon mesh is first reconstructed with Delaunay triangulation, and then the points belonging to hidden surfaces, as determined by z-buffering, are removed.
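The z-buffering idea can be illustrated with a much simpler stand-in. The sketch below skips the Delaunay mesh entirely and runs a z-buffer directly on the points: points are binned into pixels, and within each pixel only the nearest point is kept. This is a simplification, not the mesh-based procedure described above. The function name `visible_mask` and the parameters `pixel_size` and `depth_tol` are hypothetical, and all depths are assumed to be positive.

```python
import math

def visible_mask(points_cam, f, pixel_size=1.0, depth_tol=0.0):
    """Return one boolean per point: True if it survives a point-level z-buffer.

    points_cam: iterable of (x_cam, y_cam, z_cam) with z_cam > 0.
    """
    nearest = {}   # pixel (u, v) -> smallest depth seen so far
    pixels = []    # pixel assigned to each point, in input order
    for x, y, z in points_cam:
        # Pixel coordinates as in the text, (f*x/z, f*y/z), binned to a grid.
        u = math.floor(f * x / z / pixel_size)
        v = math.floor(f * y / z / pixel_size)
        pixels.append((u, v))
        if (u, v) not in nearest or z < nearest[(u, v)]:
            nearest[(u, v)] = z
    # A point is visible if nothing in its pixel is closer (up to depth_tol).
    return [z <= nearest[pix] + depth_tol
            for (_, _, z), pix in zip(points_cam, pixels)]

points = [(0.0, 0.0, 1.0),   # nearest point in pixel (0, 0)
          (0.0, 0.0, 2.0),   # same pixel, farther away: occluded
          (1.0, 0.0, 1.0)]   # different pixel: visible
mask = visible_mask(points, f=1.0)
```

A point-level z-buffer depends heavily on `pixel_size`: too fine and nothing is occluded, too coarse and visible points are dropped. That sensitivity is one reason to rebuild a surface first.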

Mapping back from camera frame to world frame

Once the occluded points are removed, the remaining points are projected back into the original world coordinate system using the inverse of Equation 1. The randomised occlusion mapping $o(\cdot)$ is therefore constructed as follows:

- Fix an initial point cloud $\mathcal{P}$

- Take the camera intrinsic matrix $\mathbf{K}$ and the extrinsic parameters for multiple viewpoints $\left[\left[\mathbf{R}_{1} \mid \mathbf{t}_{1}\right], \ldots,\left[\mathbf{R}_{V} \mid \mathbf{t}_{V}\right]\right]$, where $V$ is the number of viewpoints

- For each viewpoint $v \in[V]$, project $\mathcal{P}$ into the corresponding camera coordinate system using Equation 1

- Find the occluded points and remove them

- Back-project the remaining points into the world coordinate system, obtaining the final occluded point cloud $\tilde{\mathcal{P}}_{v}$ for each viewpoint $v \in[V]$

The Completion Task

Given an occluded point cloud $\tilde{\mathcal{P}}$ produced by the occlusion mapping $o(\cdot)$, the goal of the completion task is to learn a completion mapping $c: \mathbb{P} \rightarrow \mathbb{P}$ that produces a completed point cloud $\hat{\mathcal{P}}$ from $\tilde{\mathcal{P}}$. The completion mapping is accurate if $\mathbb{E}_{\tilde{\mathcal{P}} \sim o(\mathcal{P})} \ell(c(\tilde{\mathcal{P}}), \mathcal{P}) \rightarrow 0$, where $\ell(\cdot, \cdot)$ is the loss function. The completion model is an encoder-decoder network: the encoder maps the occluded point cloud to a vector, and the decoder completes the point cloud. After pre-training, the encoder weights can be used as the initialization for downstream tasks.

Experiments

OcCo Pre-Training Setup

ModelNet40 is used as the pre-training dataset in all experiments. The camera intrinsics are set to $\{f=1000, \gamma=0, w=1600, h=1200\}$. For each point cloud, 10 viewpoints are selected at random; the viewpoints differ in rotation, and the translation is set to zero.

In the completion model, the encoder can be PointNet, PCN, or DGCNN. The decoder uses a folding operation and reconstructs the shape in two steps. First, the 1024-dimensional vector produced by the encoder is converted into a coarse point cloud $\hat{\mathcal{P}}_{\text{coarse}}$ of 1024 points. Then a $4 \times 4$ 2D grid is applied at every point of $\hat{\mathcal{P}}_{\text{coarse}}$ to reconstruct a fine shape $\hat{\mathcal{P}}_{\text{fine}}$ with 16384 points ($1024 \times 16$). The Chamfer Distance (CD) between the prediction $\hat{\mathcal{P}}$ and the ground truth $\mathcal{P}$ serves as the loss function:

$$
\mathrm{CD}(\hat{\mathcal{P}}, \mathcal{P}) = \frac{1}{|\hat{\mathcal{P}}|} \sum_{\hat{x} \in \hat{\mathcal{P}}} \min_{x \in \mathcal{P}} \|\hat{x}-x\|_{2} + \frac{1}{|\mathcal{P}|} \sum_{x \in \mathcal{P}} \min_{\hat{x} \in \hat{\mathcal{P}}} \|x-\hat{x}\|_{2}
$$
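The Chamfer Distance formula translates almost term by term into code. The following is a minimal brute-force sketch in plain Python, meant only to make the two averaged nearest-neighbour terms concrete; the function names are hypothetical, and a training pipeline would use a batched GPU implementation instead.

```python
import math

def chamfer_distance(pred, target):
    """Symmetric Chamfer Distance between two non-empty point sets.

    pred, target: sequences of equal-dimension coordinate tuples.
    """
    def mean_nearest(src, dst):
        # Average, over src, of the Euclidean distance to the closest dst point.
        return sum(min(math.dist(a, b) for b in dst) for a in src) / len(src)

    # First term: prediction -> ground truth; second term: ground truth -> prediction.
    return mean_nearest(pred, target) + mean_nearest(target, pred)

pred = [(0.0, 0.0, 0.0)]
target = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0)]
cd = chamfer_distance(pred, target)
```

In this toy case the first term is 0, because the single predicted point coincides with a target point. The second term is (0 + 5) / 2 = 2.5, because the target point at (3, 4, 0) is 5 away from its nearest prediction. The cost is quadratic in the number of points, which is fine for illustration but not for 16384-point outputs.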

The final model loss is a weighted sum of the Chamfer distances of the coarse and fine shapes:

$$
\ell := \mathrm{CD}\left(\hat{\mathcal{P}}_{\text{coarse}}, \mathcal{P}_{\text{coarse}}\right) + \alpha \, \mathrm{CD}\left(\hat{\mathcal{P}}_{\text{fine}}, \mathcal{P}_{\text{fine}}\right)
$$

Fine-Tuning Setup

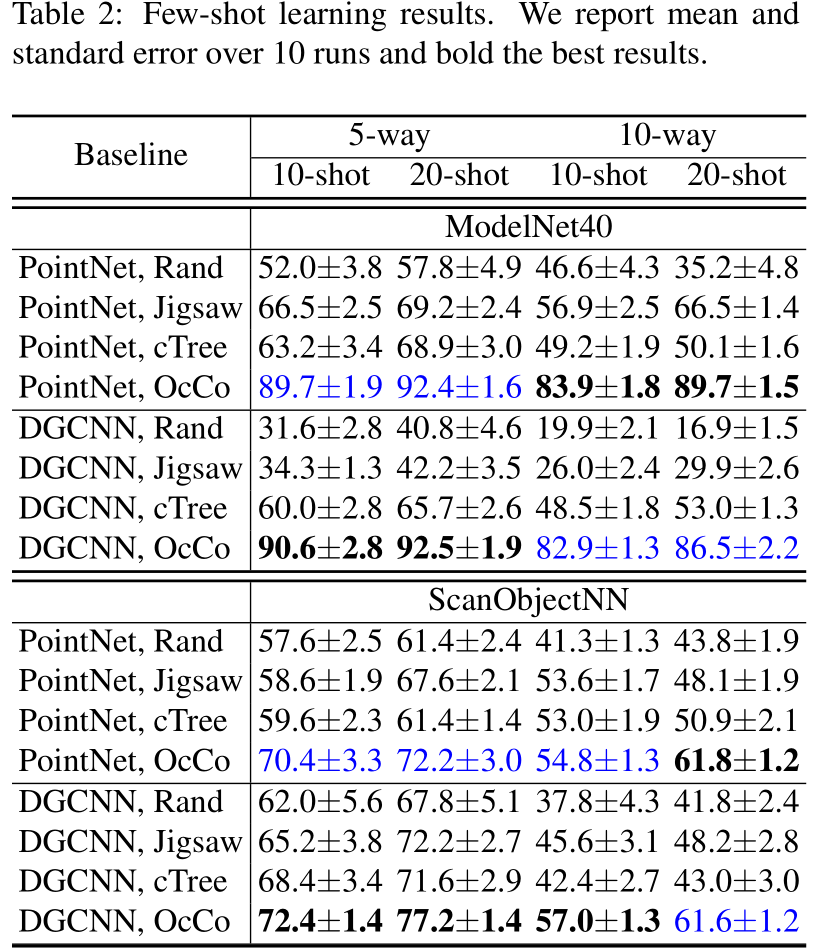

Few-shot learning

The goal of few-shot learning is to train accurate models from very limited data. In the $K$-way $N$-shot setting, $K$ classes are selected at random during training, and each class contains $N$ samples.
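One way to picture the $K$-way $N$-shot setting is as an episode sampler over a labelled dataset. The sketch below is illustrative only: `sample_episode` and its arguments are hypothetical names, and the class labels are made up for the example rather than taken from the experiments.

```python
import random

def sample_episode(labels, k_way, n_shot, seed=None):
    """Pick k_way classes at random and n_shot sample indices per chosen class.

    labels: list where labels[i] is the class of sample i.
    Returns a dict mapping each chosen class to a list of sample indices.
    """
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    # Only classes with at least n_shot samples can take part in an episode.
    eligible = [c for c, idxs in by_class.items() if len(idxs) >= n_shot]
    chosen = rng.sample(eligible, k_way)
    return {c: rng.sample(by_class[c], n_shot) for c in chosen}

labels = ["chair"] * 5 + ["table"] * 5 + ["lamp"] * 5 + ["sofa"] * 2
episode = sample_episode(labels, k_way=2, n_shot=3, seed=0)
```

Each call returns indices for one small training set; repeating it with different seeds gives independent few-shot trials.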

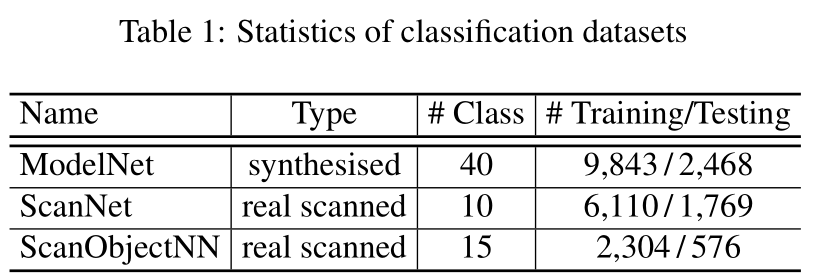

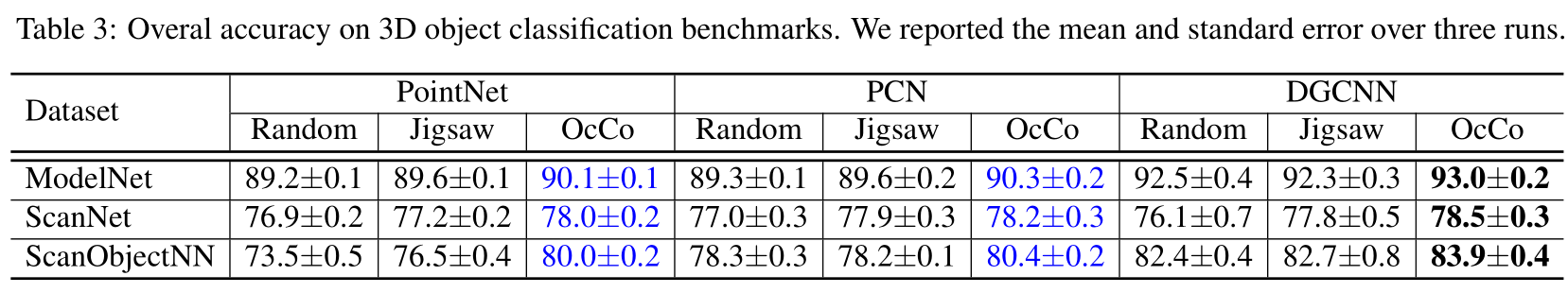

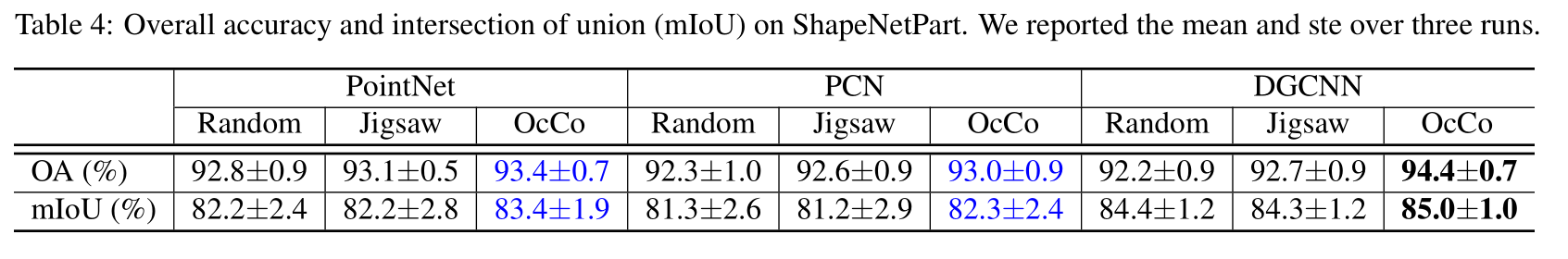

Object classification

Part segmentation

Semantic segmentation

Analysis

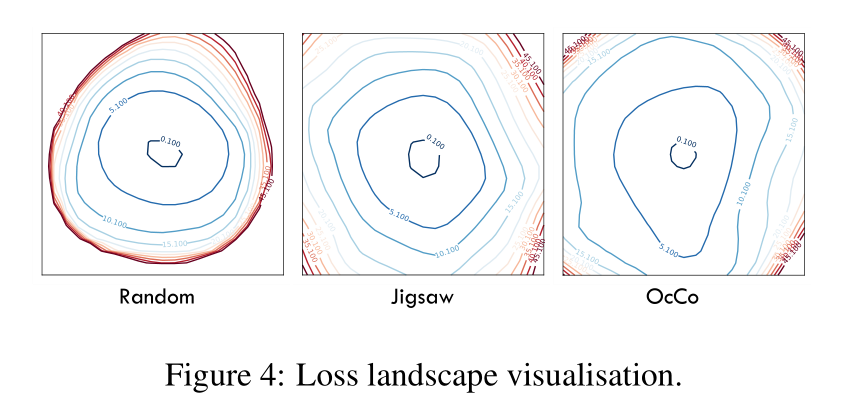

Visualisation of optimisation landscape

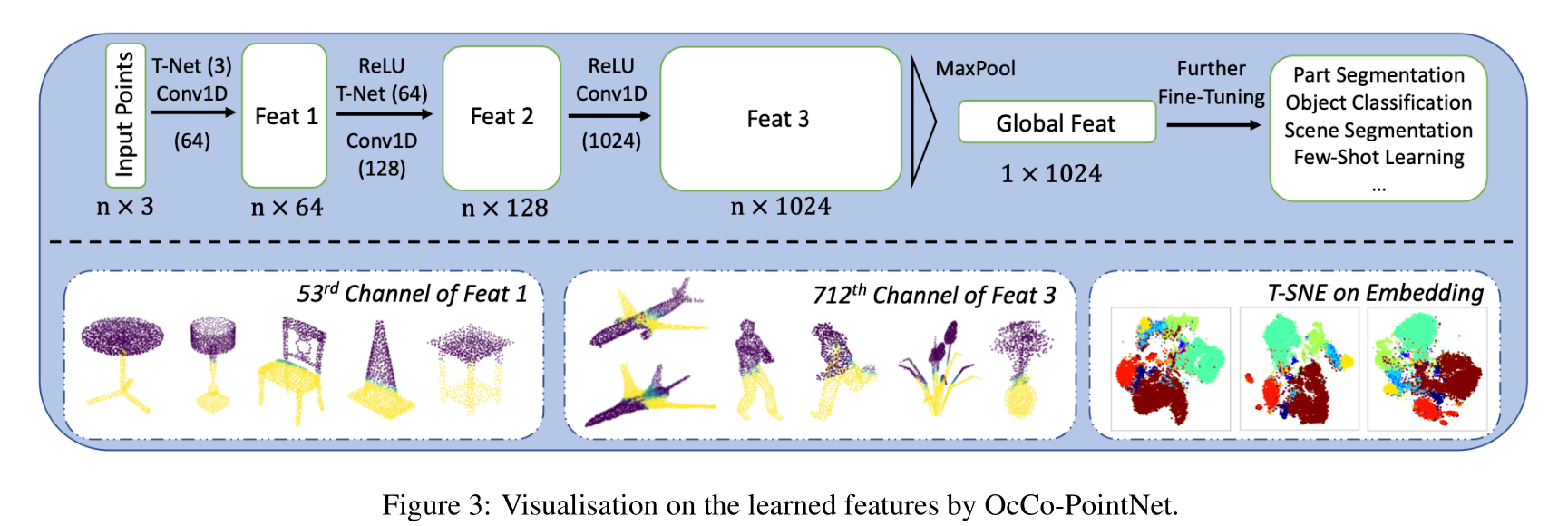

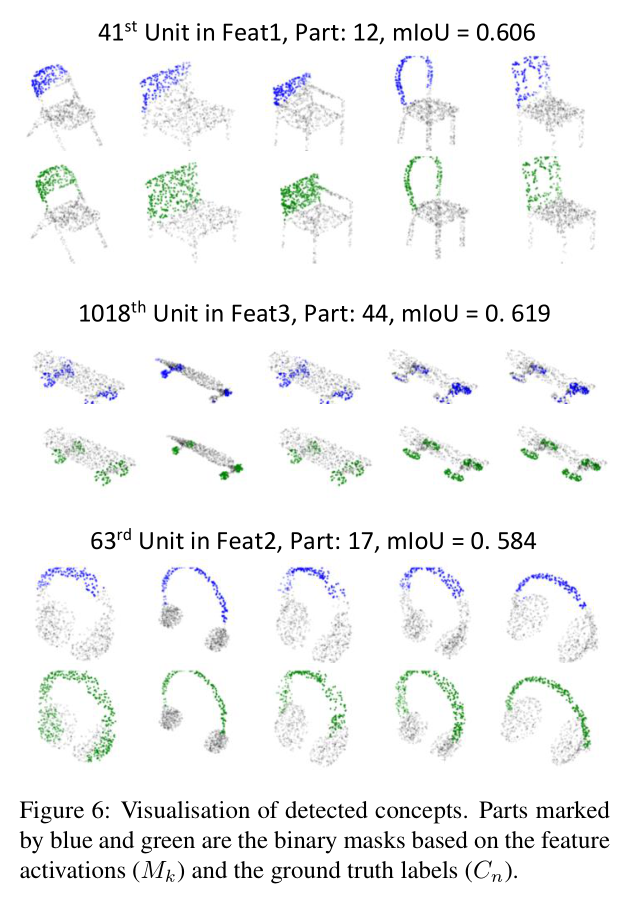

Visualisation of learned features

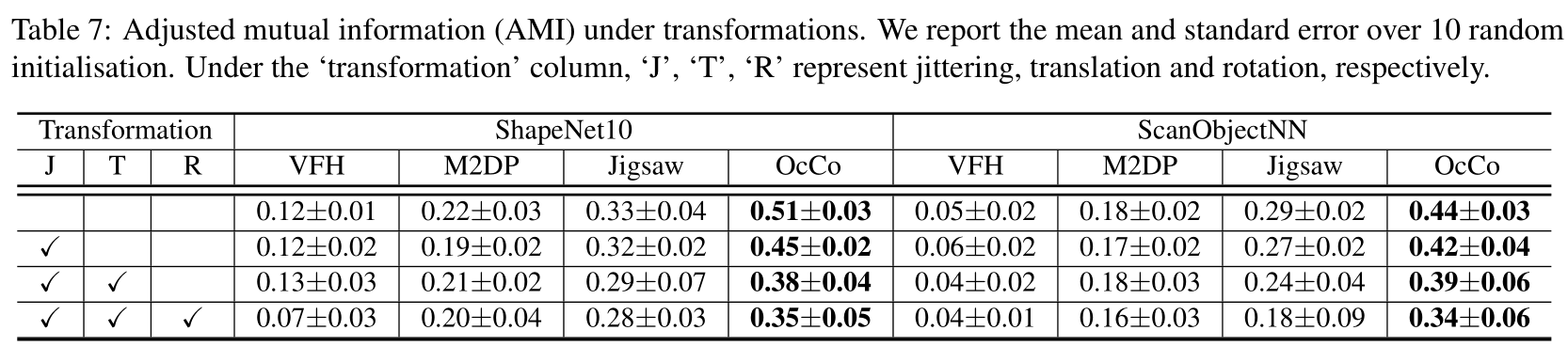

Unsupervised mutual information probe

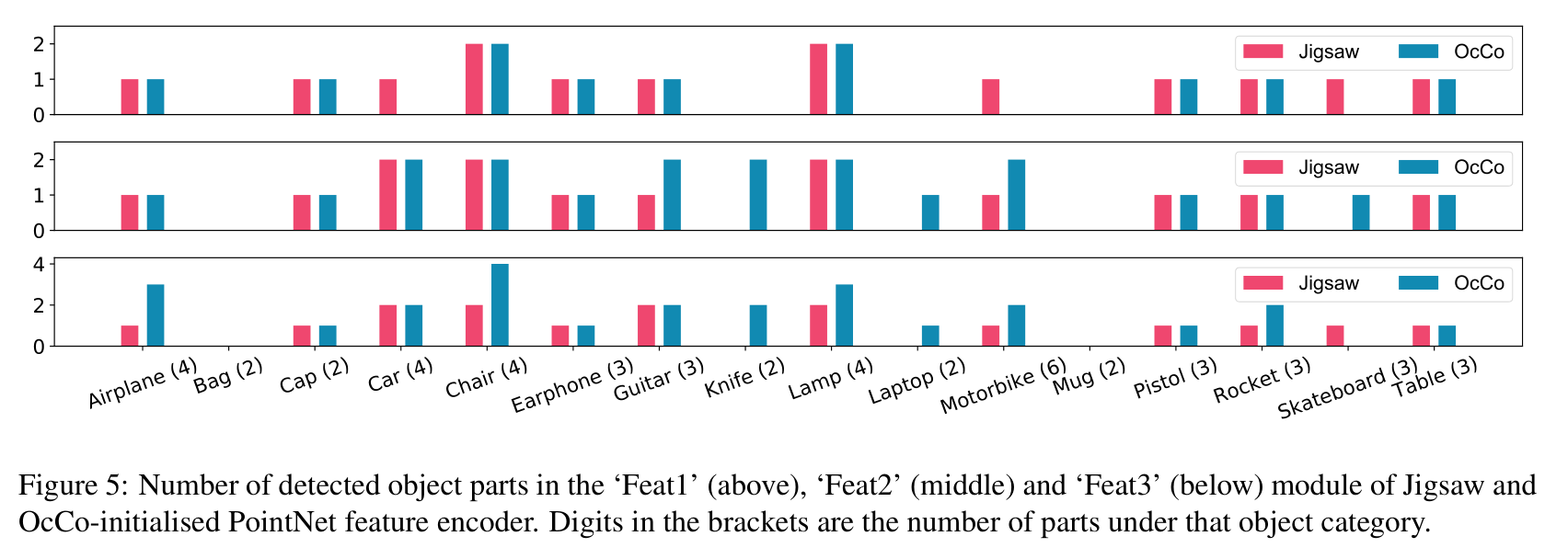

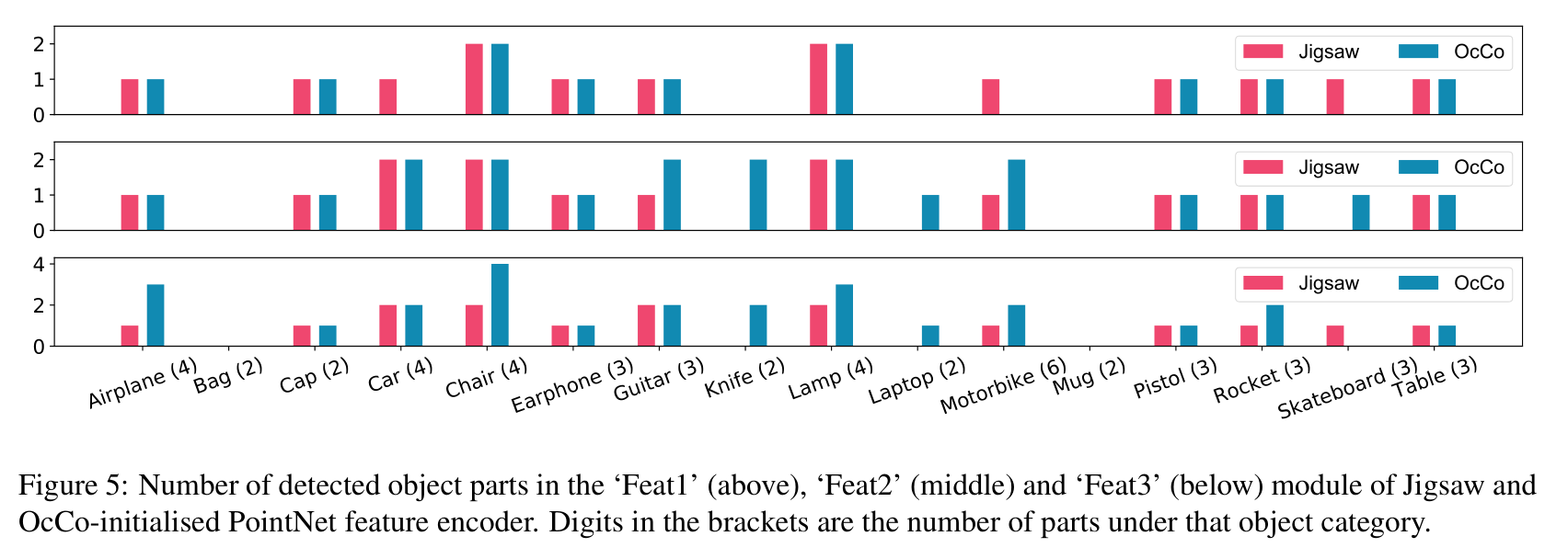

Detection of semantic concepts

Discussion

In the future, it would be interesting to design a completion model that explicitly accounts for the occlusion process. Such a model might converge faster and need fewer parameters, and this knowledge could also act as a strong inductive bias during training.

New words

- a flurry of: a lot of

边栏推荐

- 【点云处理之论文狂读经典版9】—— Pointwise Convolutional Neural Networks

- Digital management medium + low code, jnpf opens a new engine for enterprise digital transformation

- 我们有个共同的名字,XX工

- LeetCode 715. Range module

- 数字化管理中台+低代码,JNPF开启企业数字化转型的新引擎

- On a un nom en commun, maître XX.

- Character pyramid

- Discussion on enterprise informatization construction

- State compression DP acwing 91 Shortest Hamilton path

- Find the combination number acwing 886 Find the combination number II

猜你喜欢

即时通讯IM,是时代进步的逆流?看看JNPF怎么说

【点云处理之论文狂读前沿版11】—— Unsupervised Point Cloud Pre-training via Occlusion Completion

Instant messaging IM is the countercurrent of the progress of the times? See what jnpf says

Vs2019 configuration opencv3 detailed graphic tutorial and implementation of test code

高斯消元 AcWing 883. 高斯消元解线性方程组

【点云处理之论文狂读经典版13】—— Adaptive Graph Convolutional Neural Networks

Save the drama shortage, programmers' favorite high-score American drama TOP10

教育信息化步入2.0,看看JNPF如何帮助教师减负,提高效率?

【点云处理之论文狂读前沿版8】—— Pointview-GCN: 3D Shape Classification With Multi-View Point Clouds

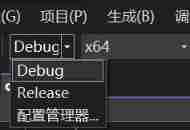

Debug debugging - Visual Studio 2022

随机推荐

[point cloud processing paper crazy reading classic version 12] - foldingnet: point cloud auto encoder via deep grid deformation

【点云处理之论文狂读经典版14】—— Dynamic Graph CNN for Learning on Point Clouds

Use of sort command in shell

Methods of using arrays as function parameters in shell

DOM render mount patch responsive system

2022-1-6 Niuke net brush sword finger offer

LeetCode 241. 为运算表达式设计优先级

常见渗透测试靶场

【点云处理之论文狂读经典版7】—— Dynamic Edge-Conditioned Filters in Convolutional Neural Networks on Graphs

一个优秀速开发框架是什么样的?

【点云处理之论文狂读前沿版13】—— GAPNet: Graph Attention based Point Neural Network for Exploiting Local Feature

Severity code description the project file line prohibits the display of status error c2440 "initialization": unable to convert from "const char [31]" to "char *"

Basic knowledge of network security

AcWing 786. 第k个数

The difference between if -n and -z in shell

AcWing 785. Quick sort (template)

干货!零售业智能化管理会遇到哪些问题?看懂这篇文章就够了

How to use Jupiter notebook

状态压缩DP AcWing 91. 最短Hamilton路径

LeetCode 532. 数组中的 k-diff 数对