Redis learning journey - cache exceptions (CACHE penetration, cache avalanche, cache breakdown)

2022-06-13 07:28:00 【Zhao JC】

Cache exceptions

Using Redis as a cache greatly improves the performance and efficiency of an application, especially for data queries. It also brings some problems. The most important one is data consistency, which is fairly complicated. If your requirements for data consistency are very strict, you cannot use a cache at all. Other typical problems are cache penetration, cache avalanche, and cache breakdown. The industry already has popular solutions for each of them.

Data consistency

Background

Whenever you use a cache, whether in local memory or in Redis, the cached data has to be kept in sync with the database. Otherwise the database and the cache become inconsistent.

Solutions

- Update the database first, then update the cache

- Update the cache first, then update the database

- Delete the cache first, then update the database

- Update the database first, then delete the cache

The problem with the first scheme is that concurrent database updates can write dirty data into the cache. For example, requests A and B may both update the same row. A updates the database first but writes the cache last, so the cache keeps A's older value.

The problem with the second scheme is that the cache can be updated while the database update then fails. This also leaves the cache and the database inconsistent.

For these reasons the first and second schemes are generally not used. The third or fourth scheme is used instead.

Delete the cache first, then update the database

The third scheme also has a problem. Suppose two requests arrive at the same time: request A (an update) and request B (a query).

- Request A performs the write and deletes the cache, but has not yet updated the database

- Request B queries and finds that the cache entry does not exist

- Request B queries the database and gets the old value

- Request B writes the old value into the cache

- Request A writes the new value into the database

This sequence leaves the data inconsistent. If the cache has no expiration policy, the entry stays dirty indefinitely. How can this be solved?

Answer 1: Delayed double delete

Pseudo code :

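    // `redis` and `db` are assumed client objects
    public void write(String key, Object data) throws InterruptedException {
        redis.del(key);        // 1. evict the cached entry
        db.updateData(data);   // 2. write the new value to the database
        Thread.sleep(1000);    // 3. wait for in-flight reads that may re-cache the old value
        redis.del(key);        // 4. evict again to remove any dirty entry
    }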

- Evict the cache first

- Then write to the database

- Sleep for one second, then evict the cache again. This deletes any dirty data that a read request cached during that second. Once the write request finishes, the stale entry produced by the concurrent read is gone. The sleep should be longer than the time a read request takes to load from the database and write the cache.
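You can try the delayed double delete without a Redis server. The following is a minimal Java sketch that uses in-memory maps as stand-ins for Redis and the database. The class `DelayedDoubleDelete` and its methods are hypothetical. As a variant on the pseudocode, it schedules the second delete on a background executor instead of blocking the writer with `Thread.sleep`.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

// Delayed double delete, simulated with in-memory maps.
// `cache` stands in for Redis and `db` for the database (both hypothetical).
class DelayedDoubleDelete {
    final Map<String, String> cache = new ConcurrentHashMap<>();
    final Map<String, String> db = new ConcurrentHashMap<>();
    private final ScheduledExecutorService scheduler =
            Executors.newSingleThreadScheduledExecutor();

    // Read path (cache-aside): on a miss, load from the DB and populate the cache.
    String read(String key) {
        String v = cache.get(key);
        if (v == null) {
            v = db.get(key);
            if (v != null) cache.put(key, v);
        }
        return v;
    }

    // Write path: delete, update the DB, then delete again after a delay.
    // The delay should exceed the time a concurrent read needs to load and re-cache.
    void write(String key, String value, long delayMillis) {
        cache.remove(key);
        db.put(key, value);
        // Variant: schedule the second delete instead of blocking with Thread.sleep.
        scheduler.schedule(() -> { cache.remove(key); }, delayMillis, TimeUnit.MILLISECONDS);
    }

    // Lets pending delayed deletes run, then stops the scheduler.
    void shutdown() {
        scheduler.shutdown();
        try {
            scheduler.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}
```

The fixed delay is only an estimate of read latency. This narrows the window of inconsistency but does not strictly close it.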

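Answer 2: Serialize update and read operations asynchronously

- Asynchronous serialization: route the update and read operations for the same key through the same queue, so they execute one after another instead of interleaving

- Read deduplication: if a cache-refresh read for a key is already waiting in the queue, do not enqueue another one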
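Here is one way to sketch both ideas in a single JVM. Every operation for a key goes to the same single-threaded executor (a "lane"), chosen by hashing the key, and a set of pending keys drops duplicate refreshes. The class `KeySerializer` and the lane scheme are assumptions for illustration. A multi-instance deployment would also need requests for the same key routed to the same instance.

```java
import java.util.Set;
import java.util.concurrent.Callable;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;

// Hypothetical per-key serializer: all operations on one key run on one thread, in order.
class KeySerializer {
    private final ExecutorService[] lanes;
    private final Set<String> pendingRefresh = ConcurrentHashMap.newKeySet();

    KeySerializer(int laneCount) {
        lanes = new ExecutorService[laneCount];
        for (int i = 0; i < laneCount; i++) {
            lanes[i] = Executors.newSingleThreadExecutor();
        }
    }

    // Same key -> same lane, so writes and reads for that key never interleave.
    <T> Future<T> submit(String key, Callable<T> op) {
        int lane = Math.floorMod(key.hashCode(), lanes.length);
        return lanes[lane].submit(op);
    }

    // Read deduplication: keep at most one queued cache refresh per key.
    boolean submitRefreshOnce(String key, Runnable refresh) {
        if (!pendingRefresh.add(key)) {
            return false; // a refresh for this key is already queued
        }
        submit(key, () -> {
            try {
                refresh.run();
            } finally {
                pendingRefresh.remove(key);
            }
            return null;
        });
        return true;
    }

    // Stops accepting work and waits for queued operations to finish.
    void shutdown() {
        for (ExecutorService lane : lanes) lane.shutdown();
        try {
            for (ExecutorService lane : lanes) lane.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}
```

The trade-off is throughput. A slow operation on one key delays every other key that hashes to the same lane.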

Update the database first, then delete the cache

This scheme also has a problem. The database update may succeed while the cache deletion fails. The cache then keeps serving the old value, so reads stay wrong.

Solution: use a message queue to compensate for failed deletions

- Request A updates the database first

- Request A then fails to delete the key from Redis and reports an error

- The Redis key is sent to the message queue as the message body

- When the system receives the message from the queue, it deletes the key from Redis again
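The compensation flow above can be sketched with a `LinkedBlockingQueue` standing in for a real message queue topic. The class `DeleteCompensation` and the `deleteFromCache` callback (returning `false` on failure) are hypothetical. In production the consumer would be a separate process reading from the broker.

```java
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Predicate;

// Delete compensation: the queue stands in for a real MQ topic (hypothetical).
class DeleteCompensation {
    final Map<String, String> db = new ConcurrentHashMap<>();
    final BlockingQueue<String> retryQueue = new LinkedBlockingQueue<>();
    // Deletes a key from the cache; returns false if the delete failed.
    private final Predicate<String> deleteFromCache;

    DeleteCompensation(Predicate<String> deleteFromCache) {
        this.deleteFromCache = deleteFromCache;
    }

    void write(String key, String value) {
        db.put(key, value);                  // 1. update the database first
        if (!deleteFromCache.test(key)) {    // 2. try to delete the cache entry
            retryQueue.offer(key);           // 3. on failure, publish the key as the message body
        }
    }

    // 4. Consumer: retry each queued delete once; re-queue keys that fail again.
    int drainOnce() {
        int deleted = 0;
        for (int pending = retryQueue.size(); pending > 0; pending--) {
            String key = retryQueue.poll();
            if (key == null) break;
            if (deleteFromCache.test(key)) deleted++;
            else retryQueue.offer(key);
        }
        return deleted;
    }
}
```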

Cache penetration (large numbers of queries for data that does not exist)

Concept

The concept of cache penetration is simple. A user queries some data and the Redis in-memory database does not have it (a cache miss), so the query goes to the persistence-layer database. The database does not have it either, so the query fails. When many users do this, for example during a flash sale, none of their requests hit the cache and all of them go to the persistence-layer database. This puts heavy pressure on the persistence layer, and that is cache penetration.

Solution

- Bloom filter

A Bloom filter is a data structure that stores hashes of all possible query parameters. Requests are checked against it at the control layer first and discarded if they are not present. This spares the underlying storage system from the query load.
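To show how the check works, here is a minimal Bloom filter in Java. It uses a `BitSet` and double hashing (position i = h1 + i·h2), which is an assumed scheme. Production code would more likely use an existing implementation such as Guava's `BloomFilter` or the RedisBloom module's `BF.ADD` / `BF.EXISTS` commands. The class name `SimpleBloomFilter` is hypothetical.

```java
import java.util.BitSet;

// Minimal Bloom filter: k bit positions per element via double hashing (assumed scheme).
class SimpleBloomFilter {
    private final BitSet bits;
    private final int size;
    private final int hashCount;

    SimpleBloomFilter(int size, int hashCount) {
        this.bits = new BitSet(size);
        this.size = size;
        this.hashCount = hashCount;
    }

    // i-th position = h1 + i * h2 (mod size); h2 is forced odd so it is never zero.
    private int position(String value, int i) {
        int h1 = value.hashCode();
        int h2 = Integer.rotateLeft(h1 * 0x9E3779B9, 15) | 1;
        return Math.floorMod(h1 + i * h2, size);
    }

    void add(String value) {
        for (int i = 0; i < hashCount; i++) bits.set(position(value, i));
    }

    // false: definitely never added. true: possibly added (false positives are possible).
    boolean mightContain(String value) {
        for (int i = 0; i < hashCount; i++) {
            if (!bits.get(position(value, i))) return false;
        }
        return true;
    }
}
```

At the entry point, reject any request whose ID fails `mightContain` before it reaches Redis or the database. The filter has to be loaded with all valid IDs in advance, and a standard Bloom filter cannot delete entries.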

- Caching empty objects

When the storage layer misses, the empty result is cached anyway, with an expiration time. Later requests for the same data are then answered from the cache, which protects the back-end data source.

However, this approach has two problems:

- Caching null values means the cache needs space for more keys, because there may be many keys whose value is null.

- Even with an expiration time on null values, the cache layer and the storage layer are inconsistent for a while (for example, if the data is created in the meantime). This affects businesses that require consistency.
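Here is a minimal Java sketch of caching empty objects. A "not found" result is stored as an entry with a `null` value and a shorter TTL than real values, which limits both problems listed above. The class `NullCachingLoader`, the injectable clock, and the two TTL parameters are assumptions for illustration.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;
import java.util.function.LongSupplier;

// Caches "not found" results with a shorter TTL than real values.
class NullCachingLoader {
    private static final class Entry {
        final String value;    // null means "known to be absent in the DB"
        final long expiresAt;  // in clock milliseconds
        Entry(String value, long expiresAt) {
            this.value = value;
            this.expiresAt = expiresAt;
        }
    }

    private final Map<String, Entry> cache = new ConcurrentHashMap<>();
    private final Function<String, String> db;  // returns null when the row does not exist
    private final LongSupplier clock;           // injectable so tests can move time forward
    private final long valueTtlMillis;
    private final long nullTtlMillis;
    final AtomicInteger dbHits = new AtomicInteger();

    NullCachingLoader(Function<String, String> db, LongSupplier clock,
                      long valueTtlMillis, long nullTtlMillis) {
        this.db = db;
        this.clock = clock;
        this.valueTtlMillis = valueTtlMillis;
        this.nullTtlMillis = nullTtlMillis;
    }

    String get(String key) {
        long now = clock.getAsLong();
        Entry e = cache.get(key);
        if (e != null && e.expiresAt > now) {
            return e.value;                     // hit, possibly a cached empty object
        }
        dbHits.incrementAndGet();
        String v = db.apply(key);
        long ttl = (v == null) ? nullTtlMillis : valueTtlMillis;
        cache.put(key, new Entry(v, now + ttl));
        return v;
    }
}
```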

Cache breakdown (heavy traffic on a key at the moment it expires)

Concept

Note the difference between this and cache penetration. Cache breakdown means that a single key is very hot and constantly carries heavy concurrent traffic concentrated on that one point. At the moment this key expires, the sustained concurrency breaks through the cache and hits the database directly, like punching a hole in a barrier.

When a hot key expires while many requests are accessing it concurrently, all of them miss the cache at once. They query the database for the latest data at the same time and write it back to the cache, which briefly puts excessive pressure on the database.

Solution

- Never expire hot data

At the cache level, no expiration time is set, so the problems that follow a hot key's expiry never occur.

- Add a mutex lock

Distributed lock: use a distributed lock to guarantee that only one thread at a time queries the back-end service for each key. Threads that fail to get the lock simply wait. This shifts the high-concurrency pressure onto the distributed lock, so the lock itself must be able to handle that load.
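The mutex idea can be sketched with double-checked locking. The sketch uses an in-process `ReentrantLock` per key, which only coordinates threads inside one JVM. Across several application instances the same pattern needs a distributed lock, for example one acquired with Redis `SET lockKey token NX PX 10000`. The class `MutexCacheLoader` is hypothetical.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.ReentrantLock;
import java.util.function.Function;

// One rebuild per key: the first thread loads from the DB, the others wait and reuse it.
class MutexCacheLoader {
    private final Map<String, String> cache = new ConcurrentHashMap<>();
    private final Map<String, ReentrantLock> locks = new ConcurrentHashMap<>();
    private final Function<String, String> db;
    final AtomicInteger dbHits = new AtomicInteger();

    MutexCacheLoader(Function<String, String> db) {
        this.db = db;
    }

    String get(String key) {
        String v = cache.get(key);
        if (v != null) return v;                        // fast path: cache hit
        ReentrantLock lock = locks.computeIfAbsent(key, k -> new ReentrantLock());
        lock.lock();                                    // other threads wait here
        try {
            v = cache.get(key);                         // double check: another thread may have rebuilt it
            if (v == null) {
                dbHits.incrementAndGet();
                v = db.apply(key);
                if (v != null) cache.put(key, v);
            }
            return v;
        } finally {
            lock.unlock();
        }
    }

    // Simulates the hot key expiring.
    void expire(String key) {
        cache.remove(key);
    }
}
```

The second `cache.get` inside the lock is what keeps the waiting threads from reloading the same key one after another. For brevity the sketch never removes entries from the `locks` map.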

Cache avalanche (mass expiry / server downtime)

Concept

A cache avalanche happens when a large set of cache entries expires at the same moment, or when Redis goes down.

One cause of an avalanche: as this article was being written, it was almost midnight on Double Twelve (the December 12 shopping festival), and a wave of panic buying was about to start. Suppose the products for this wave are all put into the cache within a short window, each cached for one hour. At one o'clock in the morning the cache for the whole batch expires, and all access to these products goes to the database, which sees a periodic pressure peak. Every request reaches the storage layer, the number of calls to it skyrockets, and the storage layer can go down.

Concentrated expiry is actually not the most serious case. A more serious cache avalanche happens when a cache server node goes down or loses its network connection. A naturally formed avalanche occurs because the cache entries were created within a certain time window. The database can still withstand that, since it is only periodic pressure. When a cache service node goes down, however, the pressure on the database server is unpredictable and can crush the database in an instant.

Solution

- Redis high availability

Since Redis can go down, add more Redis instances so that the others keep working when one fails. In effect, this means building a cluster, or going further with a multi-site active-active deployment.

- Rate limiting and degradation

After the cache becomes invalid, locking or queuing controls how many threads read the database and write the cache. For example, only one thread is allowed to query the data for a given key and write the cache, while other threads wait.
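Here is a minimal Java sketch of rate limiting with degradation. A `Semaphore` caps how many threads may load from the database at once, and callers over the limit get a degraded fallback value instead of piling onto the database. This differs slightly from the text: it rejects instead of waiting. Replacing `tryAcquire()` with a blocking `acquire()` would make excess threads wait. The class `ThrottledLoader` and the fallback supplier are hypothetical.

```java
import java.util.concurrent.Semaphore;
import java.util.function.Function;
import java.util.function.Supplier;

// Rate limiting with degradation: at most `maxConcurrentLoads` threads hit the DB.
class ThrottledLoader {
    private final Semaphore permits;
    private final Function<String, String> db;
    private final Supplier<String> fallback;   // degraded response, e.g. a default value

    ThrottledLoader(int maxConcurrentLoads, Function<String, String> db, Supplier<String> fallback) {
        this.permits = new Semaphore(maxConcurrentLoads);
        this.db = db;
        this.fallback = fallback;
    }

    String load(String key) {
        if (!permits.tryAcquire()) {
            return fallback.get();             // over the limit: degrade instead of queuing on the DB
        }
        try {
            return db.apply(key);              // a real loader would also write the result to the cache
        } finally {
            permits.release();
        }
    }
}
```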

- Data warm-up

Data warm-up means accessing the likely data before deployment, so that data expected to receive heavy traffic is already loaded into the cache. Before heavy concurrent access arrives, trigger the loading of the cache keys manually and give them different expiration times, so that cache expiries are spread out as evenly as possible.
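Here is a minimal Java sketch of warm-up with staggered expiry. It loads a list of hot keys ahead of time and gives each a base TTL plus a random offset, so keys loaded together do not expire together. The class `CachePreheater`, its parameters, and the in-memory `cache` map are assumptions. With Redis, each value and its TTL would be written together, e.g. `SET key value EX ttl`.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ThreadLocalRandom;
import java.util.function.Function;

class CachePreheater {
    // Base TTL plus a random offset in [0, maxJitterSeconds].
    static long jitteredTtlSeconds(long baseSeconds, long maxJitterSeconds) {
        return baseSeconds + ThreadLocalRandom.current().nextLong(maxJitterSeconds + 1);
    }

    // Loads hot keys before traffic arrives; returns the TTL chosen for each key.
    static Map<String, Long> preheat(List<String> hotKeys, Function<String, String> db,
                                     Map<String, String> cache,
                                     long baseSeconds, long maxJitterSeconds) {
        Map<String, Long> ttls = new HashMap<>();
        for (String key : hotKeys) {
            String value = db.apply(key);
            if (value == null) continue;       // nothing to warm for absent rows
            cache.put(key, value);             // with Redis this would carry the TTL (SET ... EX)
            ttls.put(key, jitteredTtlSeconds(baseSeconds, maxJitterSeconds));
        }
        return ttls;
    }
}
```

For example, a one-hour base with up to ten minutes of jitter spreads the expiry of a batch loaded at midnight across 1:00 to 1:10 instead of a single instant.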

边栏推荐

- C#合并多个richtextbox内容时始终存在换行符的解决方法

- P1434 [SHOI2002] 滑雪 (记忆化搜索

- 简单了解C语言基本语

- 比较DFS和BFS的优点和缺点及名称词汇

- 微隔离(MSG)

- What languages can be decompiled

- A solution to the problem that there is always a newline character when C merges multiple RichTextBox contents

- 平衡二叉树学习笔记------一二熊猫

- How is it that the income of financial products is zero for several consecutive days?

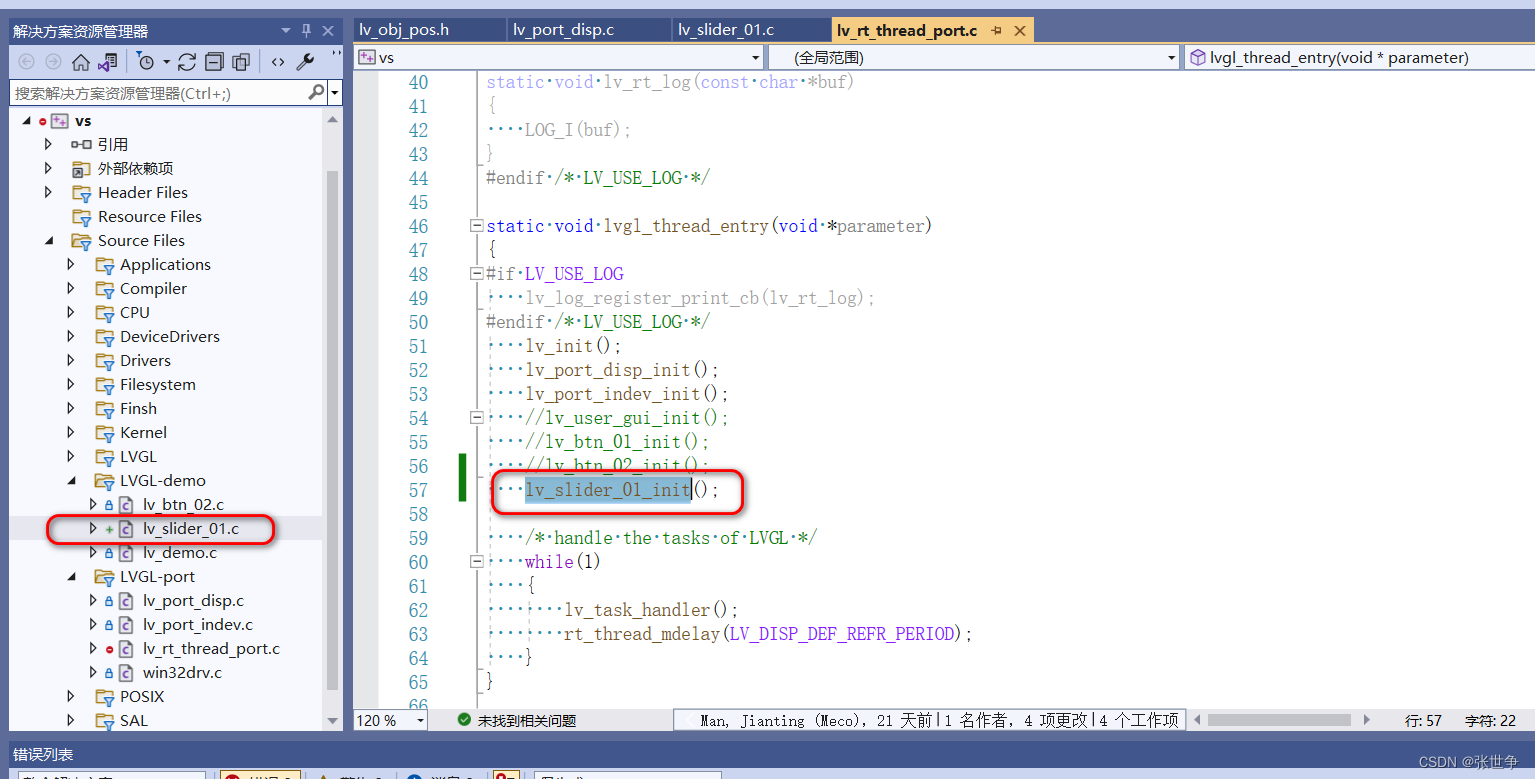

- RT-Thread 模拟器 simulator LVGL控件:slider 控件

猜你喜欢

Reflection of C # Foundation

C language: how to give an alias to a global variable?

Make cer/pfx public and private key certificates and export CFCA application certificates

SDN基本概述

Simple understanding of basic language of C language

RT thread simulator lvgl control: button button style

The biggest highlight of wwdc2022: metalfx

Powerdispatcher reverse generation of Oracle data model

全志V3S环境编译开发流程

RT-Thread 模拟器 simulator LVGL控件:slider 控件

随机推荐

A. Vacations (DP greed

QT读取SQLserver数据库

Ticdc introduction

个人js学习笔记

Priority analysis of list variables in ansible playbook and how to separate and summarize list variables

[log4j2 log framework] sensitive character filtering

RT-Thread 模拟器 simulator LVGL控件:slider 控件

Table access among Oracle database users

部署RDS服务

Word document export

Database connection under WinForm

考研英语

Socket programming 2:io reuse (select & poll & epoll)

9. process control

Number of detection cycles "142857“

FTP_ Manipulate remote files

Department store center supply chain management system

Relevant knowledge under WinForm

redis-6. Redis master-slave replication, cap, Paxos, cluster sharding cluster 01

Learning notes of balanced binary tree -- one two pandas