
WebRTC AEC Process Analysis

2022-06-12 05:24:00 【非典型废言】

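Today we cover the hardest of the 3A algorithms (echo cancellation, noise suppression, and gain control), which is also the last algorithm in this WebRTC walkthrough series: Acoustic Echo Cancellation (AEC). Readers with some knowledge of WebRTC will know that its AEC can be roughly divided into three parts: delay estimation, linear echo cancellation, and nonlinear processing.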

I. Introduction

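The basic principle of echo cancellation was covered earlier; see the articles "Analysis of adaptive-filter echo cancellation" and "A Kalman-filter-based echo cancellation algorithm". WebRTC AEC estimates the delay with a frequency-domain correlation method. The linear part uses a Partitioned Block Frequency Domain Adaptive Filter (PBFDAF). Speex calls this filter the Multidelay Block Frequency-domain filter (MDF), and the two work on the same principle. The difference is that Speex's AEC uses two filters, a foreground filter and a background filter, so its linear echo cancellation performs somewhat better. AEC3 also introduced two filters, but that is a topic for another article. Finally, nonlinear processing (NLP) is driven by the frequency-domain coherence among the near-end, error, and far-end signals.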

The WebRTC AEC flow is similar to that of the other algorithms. The first step is to create an instance.

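```c
int32_t WebRtcAec_Create(void** aecInst)
```

This function creates both the AEC instance and the resampler instance:

```c
int WebRtcAec_CreateAec(AecCore** aecInst)

int WebRtcAec_CreateResampler(void** resampInst)
```

WebRtcAec_CreateAec allocates several buffers, including the near-end, far-end, output, and delay-estimation buffers. WebRTC AEC's buffer struct is defined as follows. Besides the data itself, it holds variables that track positions in the buffer.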

```c
struct RingBuffer {
  size_t read_pos;
  size_t write_pos;
  size_t element_count;
  size_t element_size;
  enum Wrap rw_wrap;
  char* data;
};
```

The near-end and output buffers have the same size (FRAME_LEN: 80, PART_LEN: 64):

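```c
aec->nearFrBuf = WebRtc_CreateBuffer(FRAME_LEN + PART_LEN, sizeof(int16_t));
aec->outFrBuf = WebRtc_CreateBuffer(FRAME_LEN + PART_LEN, sizeof(int16_t));
```

The far-end buffers are larger (kBufSizePartitions: 250, PART_LEN1: 64 + 1). Each element holds the complex spectrum of one partition (real and imaginary parts for PART_LEN1 bins):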

```c
aec->far_buf = WebRtc_CreateBuffer(kBufSizePartitions, sizeof(float) * 2 * PART_LEN1);
aec->far_buf_windowed = WebRtc_CreateBuffer(kBufSizePartitions, sizeof(float) * 2 * PART_LEN1);
```

The delay-estimation state is also created here:

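```c
void* WebRtc_CreateDelayEstimatorFarend(int spectrum_size, int history_size)

void* WebRtc_CreateDelayEstimator(void* farend_handle, int lookahead)
```

Next comes initialization. Two sampling rates are involved. The first is the original sampling rate (sampFreq), which must be 8, 16, or 32 kHz. The second is the sound-card rate used for resampling (scSampFreq), which may range from 1 Hz to 96 kHz.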
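The check below encodes these two constraints. It is a minimal sketch: `aec_rates_valid` is a hypothetical helper, not part of the WebRTC API. It only mirrors the ranges stated above, with 8/16/32 kHz for `sampFreq` and 1 Hz to 96 kHz for `scSampFreq`.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical helper (not a WebRTC function) mirroring the two rate
 * constraints described above:
 *   sampFreq   - processing rate, only 8, 16 or 32 kHz
 *   scSampFreq - sound-card rate used for resampling, 1 Hz .. 96 kHz */
static bool aec_rates_valid(int32_t sampFreq, int32_t scSampFreq) {
    bool proc_ok = (sampFreq == 8000 || sampFreq == 16000 || sampFreq == 32000);
    bool card_ok = (scSampFreq >= 1 && scSampFreq <= 96000);
    return proc_ok && card_ok;
}
```

For example, a 16 kHz stream captured by a 48 kHz sound card passes the check. A 44.1 kHz processing rate does not.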

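```c
int32_t WebRtcAec_Init(void* aecInst, int32_t sampFreq, int32_t scSampFreq)
```

The function below sets the parameters that depend on the original sampling rate. It also initializes the buffers allocated in WebRtcAec_Create, the various parameters and state variables, and the FFT.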

```c
WebRtcAec_InitAec(AecCore* aec, int sampFreq)
```

Because resampling is involved, the resampler must also be initialized. Resampling shows up in several WebRTC algorithms.

```c
int WebRtcAec_InitResampler(void* resampInst, int deviceSampleRateHz)
```

Finally, the parameters are configured. The WebRTC AEC configuration struct is:

```c
typedef struct {
  int16_t nlpMode;      // default kAecNlpModerate
  int16_t skewMode;     // default kAecFalse
  int16_t metricsMode;  // default kAecFalse
  int delay_logging;    // default kAecFalse
} AecConfig;
```

During initialization, the fields are set to the following defaults:

```c
aecConfig.nlpMode = kAecNlpModerate;
aecConfig.skewMode = kAecFalse;
aecConfig.metricsMode = kAecFalse;
aecConfig.delay_logging = kAecFalse;
```

The parameters can be changed with WebRtcAec_set_config:

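```c
int WebRtcAec_set_config(void* handle, AecConfig config)
```

For each frame, WebRTC AEC first places the far-end signal into its buffer:

```c
int32_t WebRtcAec_BufferFarend(void* aecInst,
                               const int16_t* farend,
                               int16_t nrOfSamples)
```

If resampling is needed, the resampling function is called inside this function. The AEC's resampling is very simple: it uses plain linear interpolation and applies no anti-imaging filter. The skew parameter appears to compensate for clock drift between devices whose sampling rates are slightly mismatched, such as 44.1 kHz and 44 kHz (see [4] for details).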
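Linear-interpolation resampling fits in a few lines. The function below illustrates the idea and is not WebRtcAec_ResampleLinear itself. `resample_linear` is a hypothetical name, and the skew semantics are assumed: each output sample advances `1 + skew` input samples. Like the WebRTC version described above, it applies no anti-imaging filter.

```c
#include <stddef.h>

/* Hypothetical sketch: resample `in` (n_in samples) by linear interpolation.
 * Each output sample advances the read position by step = 1.0 + skew input
 * samples (assumed semantics). No anti-imaging/anti-aliasing filter is
 * applied. Returns the number of samples written to `out`. */
static size_t resample_linear(const short* in, size_t n_in, double skew,
                              short* out, size_t max_out) {
    double step = 1.0 + skew;
    double pos = 0.0;               /* fractional read position in `in` */
    size_t n_out = 0;
    if (n_in < 2 || step <= 0.0) return 0;
    while (n_out < max_out) {
        size_t i = (size_t)pos;
        if (i + 1 >= n_in) break;   /* need two neighbours to interpolate */
        double frac = pos - (double)i;
        double v = (1.0 - frac) * in[i] + frac * in[i + 1];
        out[n_out++] = (short)(v >= 0.0 ? v + 0.5 : v - 0.5);  /* round */
        pos += step;
    }
    return n_out;
}
```

With `skew = 0` the input is copied, except that the last sample is dropped because it has no right-hand neighbour. A negative skew of -0.5 halves the step and roughly doubles the number of output samples. Real clock-drift skews are far smaller than that.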

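```c
void WebRtcAec_ResampleLinear(void* resampInst,
                              const short* inspeech,
                              int size,
                              float skew,
                              short* outspeech,
                              int* size_out)
```

Once the far-end buffer holds enough data, the FFT is computed twice: once with a window and once without. For the effect of the window function, see the earlier article "Framing, windowing and DFT".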

```c
void WebRtcAec_BufferFarendPartition(AecCore* aec, const float* farend)
```

II. Delay Estimation

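For various software-level reasons, the near-end signal captured by the microphone is not aligned with the far-end signal received over the network. When the delay between them is large, a long linear filter is needed to cover it, which increases the computational cost. If the near-end and far-end signals can be aligned, the filter needs fewer coefficients and the algorithm becomes cheaper.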

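Next, the processing function is called. Here msInSndCardBuf is the delay between the sound card's actual input and output, that is, the misalignment between the locally captured audio and the reference audio to be cancelled. For 8 kHz and 16 kHz audio, the high band can be ignored and only the low-band data needs to be passed in. For 32 kHz audio, the band-splitting filter must first divide the data into a low band and a high band, which are passed in separately. This is the role of nearend and nearendH.

```c
int32_t WebRtcAec_Process(void* aecInst,
                          const int16_t* nearend,
                          const int16_t* nearendH,
                          int16_t* out,
                          int16_t* outH,
                          int16_t nrOfSamples,
                          int16_t msInSndCardBuf,
                          int32_t skew)
```

The function first checks that its input arguments are valid. It then reads extended_filter_enabled to decide whether to run in extended mode. The two modes differ in the number of partitions and in how the data is processed: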

```c
enum {
  kExtendedNumPartitions = 32
};

static const int kNormalNumPartitions = 12;
```

In extended mode, the delay must be supplied explicitly (reported_delay_ms).
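Each partition holds PART_LEN = 64 samples, so the partition count sets the echo-tail length the linear filter can model. Here is a quick worked check, assuming the filter runs at a 16 kHz processing rate. `filter_span_ms` is a hypothetical helper.

```c
#define PART_LEN 64

/* Echo-tail length in milliseconds covered by `num_partitions`
 * partitions of PART_LEN samples at sample rate `fs_hz`. */
static double filter_span_ms(int num_partitions, int fs_hz) {
    return 1000.0 * num_partitions * PART_LEN / fs_hz;
}
```

At 16 kHz this gives 12 × 64 / 16000 s = 48 ms in normal mode and 32 × 64 / 16000 s = 128 ms in extended mode. At 8 kHz the normal-mode span doubles to 96 ms.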

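```c
static void ProcessExtended(aecpc_t* self,
                            const int16_t* near,
                            const int16_t* near_high,
                            int16_t* out,
                            int16_t* out_high,
                            int16_t num_samples,
                            int16_t reported_delay_ms,
                            int32_t skew)
```

The reported delay is converted to a number of samples, and the far-end buffer's read pointer is moved accordingly. The delay is then screened and filtered.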
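A minimal ring buffer that tracks a read position shows how the pointer adjustment works, in the spirit of the RingBuffer struct shown earlier. It is a sketch under assumptions: `RingSketch`, `delay_ms_to_partitions`, and `ring_move_read_ptr` are hypothetical names. The clamping rule, which never reads past unread data and never steps back into space that could be overwritten, is a simplification and not necessarily WebRTC's exact behaviour.

```c
#include <stddef.h>

#define PART_LEN 64

/* Hypothetical ring buffer bookkeeping, counted in elements (partitions). */
typedef struct {
    size_t read_pos;
    size_t write_pos;   /* write side not exercised in this sketch */
    size_t capacity;
    size_t available;   /* elements written but not yet read */
} RingSketch;

/* ms -> samples -> whole partitions of PART_LEN samples. */
static int delay_ms_to_partitions(int delay_ms, int fs_hz) {
    return (delay_ms * (fs_hz / 1000)) / PART_LEN;
}

/* Move the read pointer by `elements`: positive skips forward (discards
 * unread data), negative steps back (re-reads old data). The move is
 * clamped to what the buffer can provide; returns the amount moved. */
static int ring_move_read_ptr(RingSketch* rb, int elements) {
    int max_fwd = (int)rb->available;
    int max_back = (int)(rb->capacity - rb->available);
    if (elements > max_fwd) elements = max_fwd;
    if (elements < -max_back) elements = -max_back;
    long cap = (long)rb->capacity;
    long pos = (long)rb->read_pos + elements;
    rb->read_pos = (size_t)(((pos % cap) + cap) % cap);  /* wrap both ways */
    rb->available = (size_t)((long)rb->available - elements);
    return elements;
}
```

The double modulo handles negative moves, because in C `-3 % 16` evaluates to `-3`.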

```c
int WebRtcAec_MoveFarReadPtr(AecCore* aec, int elements)

static void EstBufDelayExtended(aecpc_t* self)
```

In normal mode, the processing function is:

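```c
static int ProcessNormal(aecpc_t* aecpc,
                         const int16_t* nearend,
                         const int16_t* nearendH,
                         int16_t* out,
                         int16_t* outH,
                         int16_t nrOfSamples,
                         int16_t msInSndCardBuf,
                         int32_t skew)
```

Normal mode begins with a startup phase (startup_phase), which ends once the system delay has stabilized. Only then does the AEC take effect. Once it is active, the buffer delay is first estimated, then screened and filtered.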

```c
static void EstBufDelayNormal(aecpc_t* aecpc)
```

Then the AEC processing proper begins:

```c
void WebRtcAec_ProcessFrame(AecCore* aec,
                            const short* nearend,
                            const short* nearendH,
                            int knownDelay,
                            int16_t* out,
                            int16_t* outH)
```

The code contains clear comments explaining the core AEC steps:

```
For each frame the process is as follows:
1) If the system_delay indicates on being too small for processing a
   frame we stuff the buffer with enough data for 10 ms.
2) Adjust the buffer to the system delay, by moving the read pointer.
3) TODO(bjornv): Investigate if we need to add this:
   If we can't move read pointer due to buffer size limitations we
   flush/stuff the buffer.
4) Process as many partitions as possible.
5) Update the |system_delay| with respect to a full frame of FRAME_LEN
   samples. Even though we will have data left to process (we work with
   partitions) we consider updating a whole frame, since that's the
   amount of data we input and output in audio_processing.
6) Update the outputs.
```

We go straight to the processing block, i.e., step 4:

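```c
static void ProcessBlock(AecCore* aec)
```

First, note these three arrays. d is the near-end signal. y is the echo estimate, the output of the linear filter applied to the far-end signal. e is the error signal, e = d - y.

```c
float d[PART_LEN], y[PART_LEN], e[PART_LEN];
```

The first step estimates and smooths the noise power spectrum used for comfort noise. Delay estimation follows.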

```c
int WebRtc_DelayEstimatorProcessFloat(void* handle,
                                      float* near_spectrum,
                                      int spectrum_size)
```

The principle of the algorithm is summarized in the table below.

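First, the sub-band amplitudes of the far-end and near-end power spectra are each compared against a threshold. This produces a binary spectrum for each signal, i.e., a binarized version of the far-end and near-end spectra.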
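Here is a minimal sketch of the binarization step. A sub-band's bit is set when the current spectrum exceeds an adaptive per-band threshold. The threshold update (a running mean with a caller-chosen smoothing factor) and the choice of 32 bands starting at `first_band` are assumptions for illustration. `binary_spectrum` is a hypothetical name, and WebRTC's BinarySpectrumFloat uses its own band selection and constants.

```c
#include <stdint.h>

#define NUM_BANDS 32   /* one bit per band, packed into a uint32_t */

/* Hypothetical sketch: bit i is set when band (first_band + i) of the
 * power spectrum exceeds its running-mean threshold. Thresholds are
 * updated in place with smoothing factor `alpha` (0 < alpha <= 1). */
static uint32_t binary_spectrum(const float* spectrum, int first_band,
                                float threshold[NUM_BANDS], float alpha) {
    uint32_t out = 0;
    for (int i = 0; i < NUM_BANDS; ++i) {
        float v = spectrum[first_band + i];
        threshold[i] += alpha * (v - threshold[i]);   /* running mean */
        if (v > threshold[i]) out |= (uint32_t)1 << i;
    }
    return out;
}
```

Reducing each spectrum to 32 bits makes the later comparison against hundreds of stored far-end spectra very cheap.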

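```c
static uint32_t BinarySpectrumFloat(float* spectrum,
                                    SpectrumType* threshold_spectrum,
                                    int* threshold_initialized)
```

The two binary spectra are then compared with a bitwise XOR. Counting the differing bits gives a similarity measure, and the far-end candidate with the highest similarity (fewest differing bits) determines the delay.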
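The matching step works as follows. XOR the near-end binary spectrum with each stored far-end binary spectrum, count the differing bits (the Hamming distance), and take the history index with the fewest differences as the delay in blocks. `estimate_delay` and `bit_count` are hypothetical names. Picking the plain minimum is a simplification: WebRTC also smooths the bit counts over time and validates the candidate before reporting a delay.

```c
#include <stdint.h>

/* Count set bits (portable; compilers may offer a builtin). */
static int bit_count(uint32_t v) {
    int n = 0;
    while (v) {
        v &= v - 1;   /* clear the lowest set bit */
        ++n;
    }
    return n;
}

/* Hypothetical sketch: far_history[d] is the far-end binary spectrum from
 * d blocks ago. Returns the d with the smallest Hamming distance to the
 * near-end binary spectrum, or -1 if the history is empty. */
static int estimate_delay(uint32_t near_binary, const uint32_t* far_history,
                          int history_size) {
    int best_delay = -1;
    int best_count = 33;   /* larger than any 32-bit distance */
    for (int d = 0; d < history_size; ++d) {
        int c = bit_count(near_binary ^ far_history[d]);
        if (c < best_count) {
            best_count = c;
            best_delay = d;
        }
    }
    return best_delay;
}
```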

```c
int WebRtc_ProcessBinarySpectrum(BinaryDelayEstimator* self,
                                 uint32_t binary_near_spectrum)
```

III. PBFDAF

Next comes the NLMS part. Its overall flow is shown in the figure below:

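Each step of the PBFDAF can be found in the figure above. First, the far-end signal is filtered in the frequency domain. The result is transformed back to the time domain with an IFFT and scaled, and subtracting it from the near-end signal gives the time-domain error.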
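In formula form, let X_p(k) be the far-end spectrum from p partitions ago and W_p(k) the weights of partition p. The filtered far-end spectrum is then Y(k) = Σ_p X_p(k)·W_p(k). The sketch below is that complex multiply-accumulate. `filter_far` and the plain-array layout are hypothetical; WebRTC keeps the far-end spectra in its own circular buffer.

```c
#define PART_LEN 64
#define PART_LEN1 (PART_LEN + 1)

/* Hypothetical sketch of partitioned frequency-domain filtering.
 * x_re/x_im[p][k]: far-end spectrum from p partitions ago
 * w_re/w_im[p][k]: filter weights of partition p
 * y = sum over p of X_p * W_p, computed bin by bin. */
static void filter_far(int num_partitions,
                       float x_re[][PART_LEN1], float x_im[][PART_LEN1],
                       float w_re[][PART_LEN1], float w_im[][PART_LEN1],
                       float y_re[PART_LEN1], float y_im[PART_LEN1]) {
    for (int k = 0; k < PART_LEN1; ++k) {
        y_re[k] = 0.0f;
        y_im[k] = 0.0f;
    }
    for (int p = 0; p < num_partitions; ++p) {
        for (int k = 0; k < PART_LEN1; ++k) {
            /* (a + jb)(c + jd) = (ac - bd) + j(ad + bc) */
            y_re[k] += x_re[p][k] * w_re[p][k] - x_im[p][k] * w_im[p][k];
            y_im[k] += x_re[p][k] * w_im[p][k] + x_im[p][k] * w_re[p][k];
        }
    }
}
```

Splitting the filter into partitions means each FFT covers only PART_LEN new samples, so latency stays at one partition while the total filter length remains num_partitions × PART_LEN taps.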

```c
static void FilterFar(AecCore* aec, float yf[2][PART_LEN1])
```

Next, the error signal is transformed with an FFT and normalized.

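```c
static void ScaleErrorSignal(AecCore* aec, float ef[2][PART_LEN1])
```

Finally, the filter weights are updated in the frequency domain. This involves an FFT/IFFT round trip, setting half of the values to zero, and a few other operations.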

```c
static void FilterAdaptation(AecCore* aec, float* fft, float ef[2][PART_LEN1])
```

IV. NLP

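NLMS is a linear filter and cannot remove all of the echo, because the echo path is not necessarily linear. Nonlinear processing is therefore needed to remove the residual echo. Its basic principle is the frequency-domain coherence between signals. When the near-end and error signals are highly similar, the linear filter removed little, so that content is treated as near-end speech and needs no processing. When the far-end and near-end signals are highly similar, the content is dominated by echo and must be suppressed. The nonlinearity lies in how the suppression is applied: the gain is raised to an exponent. WebRTC AEC's NLP is implemented in this function: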

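```c
static void NonLinearProcessing(AecCore* aec, short* output, short* outputH)
```

The function first computes the power spectra of the near-end, far-end, and error signals, then their cross-power spectra. From these it derives the near-end/error sub-band coherence and the far-end/near-end sub-band coherence. It then computes the average coherence, estimates the echo state, calculates the suppression factor, and applies the nonlinear suppression.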

```c
static void OverdriveAndSuppress(AecCore* aec,
                                 float hNl[PART_LEN1],
                                 const float hNlFb,
                                 float efw[2][PART_LEN1])
```

Finally, comfort noise is added, an IFFT is applied, and overlap-add produces the final output.

```c
static void ComfortNoise(AecCore* aec,
                         float efw[2][PART_LEN1],
                         complex_t* comfortNoiseHband,
                         const float* noisePow,
                         const float* lambda)
```

Let's look at the result. The first channel is the far-end data and the second channel is the near-end data.

Result after WebRTC AEC processing

V. Conclusion

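WebRTC AEC consists of a linear part and a nonlinear part. In my view, it shows how theory and engineering both contribute to bringing an algorithm into real use. Overall, WebRTC AEC performs reasonably well. However, AEC performance depends heavily on the hardware, so considerable time and effort go into tuning its parameters.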

The code for this article is available from the Code menu of the WeChat official account 语音算法组 (Speech Algorithm Group).

References:

[1] 实时语音处理实践指南 (A Practical Guide to Real-Time Speech Processing)

[2] https://blog.csdn.net/VideoCloudTech/article/details/110956140

[3] https://bobondemon.github.io/2019/06/08/Adaptive-Filters-Notes-2/

[4] https://groups.google.com/g/discuss-webrtc/c/T8j0CT_NBvs/m/aLmJ3YwEiYAJ

[5] https://www.bbcyw.com/p-25726216.html

边栏推荐

- Servlet core

- 17. print from 1 to the maximum n digits

- @Configurationproperties value cannot be injected

- [GIS tutorial] ArcGIS for sunshine analysis (with exercise data download)

- Performance test - GTI application service performance monitoring platform

- Calculation method notes for personal use

- Detailed explanation of data envelopment analysis (DEA) (taking the 8th Ningxia provincial competition as an example)

- The server time zone value ‘Ö Ð¹ ú±ê ×¼ ʱ ¼ ä‘ is unrecognized or represents more than one time zone. You

- Chrome is amazingly fast, fixing 40 vulnerabilities in less than 30 days

- One dragon and one knight accompanying programmers are 36 years old

猜你喜欢

When the build When gradle does not load the dependencies, and you need to add a download path in libraries, the path in gradle is not a direct downloadable path

Save the object in redis, save the bean in redis hash, and attach the bean map interoperation tool class

Introduction to audio alsa architecture

Detailed analysis of the 2021 central China Cup Title A (color selection of mosaic tiles)

4.3 simulate browser operation and page waiting (display waiting and implicit waiting, handle)

Performance test - GTI application service performance monitoring platform

4.3 模拟浏览器操作和页面等待(显示等待和隐式等待、句柄)

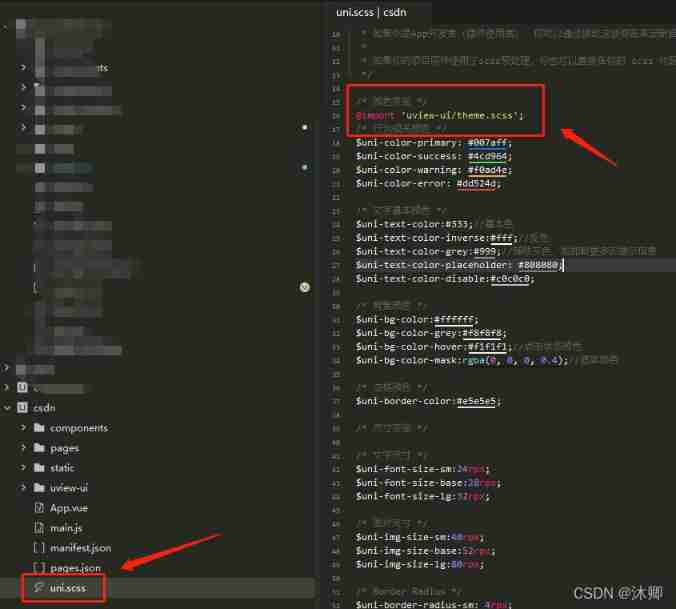

How to quickly reference uview UL in uniapp, and introduce and use uviewui in uni app

![[GIS tutorial] ArcGIS for sunshine analysis (with exercise data download)](/img/60/baebffb2024ddf5f2cb070f222b257.jpg)

[GIS tutorial] ArcGIS for sunshine analysis (with exercise data download)

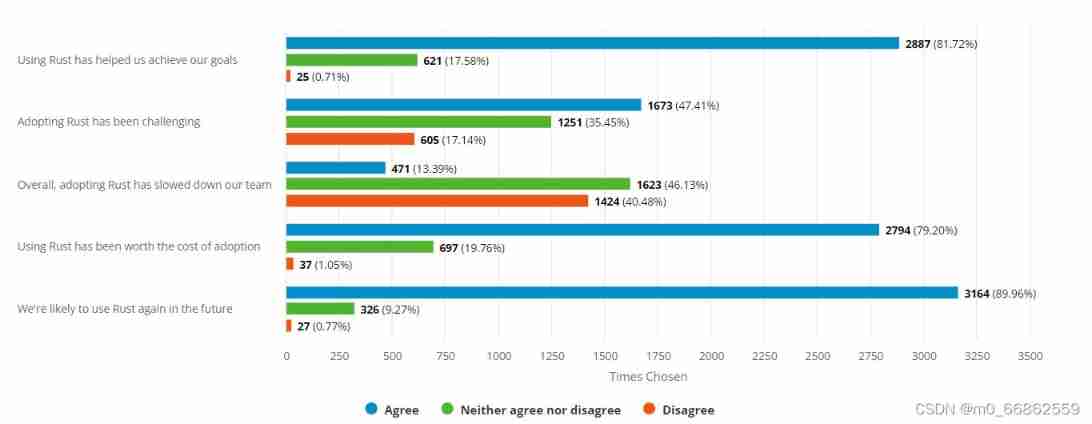

Big manufacturers compete to join rust, performance and safety are the key, and the 2021 rust developer survey report is announced

随机推荐

Sv806 QT UI development

[backtracking method] queen n problem

Save the object in redis, save the bean in redis hash, and attach the bean map interoperation tool class

62. the last number left in the circle

Surface net radiation flux data, solar radiation data, rainfall data, air temperature data, sunshine duration, water vapor pressure distribution, wind speed and direction data, surface temperature

How to count the total length of roads in the region and draw data histogram

SQL transaction

Self implementation of a UI Library - UI core drawing layer management

[backtracking method] backtracking method to solve the problem of Full Permutation

What is thinking

Transpiration and evapotranspiration (ET) data, potential evapotranspiration, actual evapotranspiration data, temperature data, rainfall data

Qinglong wool - Kaka

Automated testing - Po mode / log /allure/ continuous integration

[cjson] precautions for root node

Rv1109 lvgl UI development

MySQL Linux Installation mysql-5.7.24

4.3 simulate browser operation and page waiting (display waiting and implicit waiting, handle)

Applet pull-down load refresh onreachbottom

Detailed analysis of mathematical modeling problem a (vaccine production scheduling problem) of May Day cup in 2021

Detailed explanation of data envelopment analysis (DEA) (taking the 8th Ningxia provincial competition as an example)