
Optimization methods for live streaming over weak networks

2022-06-23 02:35:00 【Video cloud live broadcast helper】

Background

There are many live streaming platforms, and the traffic entry point has gradually shifted from the traditional PC to mobile devices. The scale of live streaming grew explosively, and 2016 became known as the "first year of live streaming". Pan-entertainment live streaming, represented by games, was an important part of the ecosystem in this period. From 2015 to 2017, as 4G became widespread, mobile live streaming was no longer constrained by devices or locations and spread rapidly, giving rise to live streaming by the general public. Meanwhile, driven by new live streaming features, the entry of platforms and capital, and policy support, the industry went through the so-called "battle of a thousand streaming platforms". During this period the government issued the "Provisional Regulations on the Management of E-sports" and other game industry policies, which further promoted game live streaming.

The monetization period: the industry grew steadily, the traffic dividend receded, and regulation became more standardized. E-commerce live streaming emerged on e-commerce and short video platforms and gradually became an important way for the industry to monetize. Huya and other game streaming platforms also began to turn a profit. In 2018, 81 Taobao Live streamers each had turnover above 100 million yuan, and more than 400 live rooms each sold over 1 million yuan of goods per month. In the same year Huya made its first profit, with annual revenue of 4.66 billion yuan and net profit of 460 million yuan. In 2019, Taobao Live needed only 8 hours 55 minutes during Double 11 to drive more than 10 billion yuan in transactions. Huya was profitable again, with net profit rising to 750 million yuan, and Douyu, the other leading game streaming platform, also turned a loss into a profit, with annual revenue of 7.28 billion yuan and net profit of 350 million yuan. The commercial value of live streaming became clear.

Under the impact of the pandemic, a black swan event, online demand was strong and many industries turned to live streaming, accelerating the development and penetration of the industry. In 2020 the outbreak further widened the reach of live streaming. On the platform side, many platforms built live streaming features, opened live traffic entry points, and introduced support policies. In terms of categories, industries that mainly operate offline, such as education, automobiles and real estate, began to test the waters with online live streaming. In terms of streamers, the group became more diverse: besides professional streamers, more and more celebrities, KOLs, merchants and government officials entered the field. According to the China Internet Network Information Center, by March 2020 there were 560 million live streaming users, that is, 40% of the Chinese population and 62% of Internet users. Of these, 265 million were live e-commerce users. Because of the pandemic, live streaming attracted more attention in many industries, and the 2020 figures were expected to reach a new high.

Terminology

QoE (Quality of Experience) is the user's subjective experience at the receiving end.

QoS (Quality of Service) measures the transmission quality of the network through quantitative indicators, with the aim of providing high-quality network service.

HDS: HTTP Dynamic Streaming (HDS) is an HTTP-based streaming media transport protocol proposed by Adobe and modeled on HLS. It works much like HLS, but the protocol is more complex. It also works by downloading an index file and media segment files.

Problem

On February 3, 2021, the China Internet Network Information Center (CNNIC) released the 47th "Statistical Report on Internet Development in China" (hereinafter "the Report"). The Report shows that by December 2020 the number of Internet users in China had reached 989 million, an increase of 85.4 million over March 2020, and Internet penetration had reached 70.4%.

The Report notes that the network poverty alleviation campaign accelerated the conversion of non-users in remote and poor areas. In terms of coverage, the "last mile" of communication in poor areas has been connected, so rural users contributed the main share of new Internet users. The number of rural Internet users reached 309 million, or 31.3% of all Internet users, an increase of 54.71 million over March 2020. The number of urban Internet users reached 680 million, or 68.7% of the total, an increase of 30.69 million over March 2020.

China's urbanization rate is about 60%, so about 40% of the population lives in rural areas. In cities, the network environments that can support live video are home Wi-Fi, office networks, and the mobile networks in hotels, shopping malls and large supermarkets. Outdoors, for example on the subway or bus, or in parks and stadiums, the signal is poor, data is expensive, and bandwidth is contended, so live video is hard to sustain.

Mobile users are not always on a smooth Wi-Fi connection. Quite a few still mainly use 4G, 3G or 2G. In addition, mobile products are used in changing scenarios, such as entering the subway, boarding a bus or stepping into an elevator, where the network environment is complex and signal stability is very poor.

At present, the relatively stable network environments in China are home Wi-Fi, office networks, shopping malls and supermarkets. Compared with the countryside, cities have much better network infrastructure and mobile base station coverage. Even in cities, however, the infrastructure does not cover every place, few environments can support live video, and watching a live stream outdoors is generally impractical. Measured against what live streaming needs, most network environments in China today are weak networks, so the weak network is a problem live streaming has to face.

Live audio and video transmission moves a large amount of data and has strict latency requirements. The data is generated in real time and cannot be prefetched, so the demands on the network are very high. When network quality is poor or bandwidth is insufficient, viewers cannot receive video data in time and the viewing experience is very poor.

Definition of a weak network

Metrics for measuring the network

The main indicators of network quality are as follows:

1、 RTT: the smaller and the more stable, the better. The smaller the RTT, the faster data is delivered.

2、 Bandwidth: the more bandwidth, the more data can be transmitted per unit time.

3、 Packet loss rate: the lower the packet loss rate, the lower the retransmission probability and the higher the success rate of data transmission.

4、 Throughput: the more data delivered per unit time, the better the network quality.

A weak network is a special scenario with a high packet loss rate, and TCP performs poorly in such scenarios. With an RTT of 30 ms, once the packet loss rate reaches 2%, TCP throughput drops by 89.9%. The table above shows the impact of packet loss on TCP throughput.
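To make the sensitivity to packet loss more concrete, here is a minimal sketch based on the well-known Mathis et al. approximation, throughput ≈ (MSS / RTT) · C / √p. This model is not taken from the article and does not reproduce the 89.9% figure above; it only illustrates how quickly the achievable ceiling falls as loss rises. The MSS of 1460 bytes and the constant C = √(3/2) are assumptions.

```python
import math


def mathis_throughput_bps(mss_bytes: int, rtt_s: float, loss_rate: float,
                          c: float = math.sqrt(3 / 2)) -> float:
    """Approximate ceiling on steady-state TCP throughput, in bits per second.

    Uses the Mathis approximation: (MSS / RTT) * C / sqrt(p).
    The default C and any MSS passed in are assumptions of this sketch.
    """
    if not 0 < loss_rate <= 1:
        raise ValueError("loss_rate must be in (0, 1]")
    if rtt_s <= 0:
        raise ValueError("rtt_s must be positive")
    return (mss_bytes * 8 / rtt_s) * (c / math.sqrt(loss_rate))


if __name__ == "__main__":
    # RTT of 30 ms as in the text; the loss rates are illustrative.
    for p in (0.001, 0.01, 0.02, 0.05):
        mbps = mathis_throughput_bps(1460, 0.030, p) / 1e6
        print(f"loss={p:.1%}  approx. ceiling={mbps:.2f} Mbit/s")
```

Under these assumptions, the ceiling at 2% loss and 30 ms RTT is only a few Mbit/s, which is already close to the bit rate of a typical HD live stream.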

Some scenarios used to simulate a weak network:

Common definition of a weak network

In the mobile Internet era, the network is no longer only a wired connection. There are also 2G/3G/Edge/4G/Wi-Fi and other mobile connections. Different protocols, standards and speeds make the scenarios in which a mobile application runs much more varied.

From a testing point of view, the scenarios that need extra attention go well beyond disconnection and network failure. The numeric definition of a weak network differs between applications and is not clear-cut. It should consider not only the lowest rate of each network type, but also the business scenario and application type. For mobile use, anything below 2G speed is generally treated as a weak network, and 3G can also be classed as weak. In addition, Wi-Fi with a weak signal is usually included in weak network test scenarios.

Definition of a weak network for live audio and video

For a live stream to play normally, enough audio and video data must be delivered quickly. As long as the player receives enough data, the video plays normally. Otherwise buffering or playback errors occur. More than 90% of these are caused by the network, and we call that condition a weak network.

The network causes of abnormal live video playback are as follows:

1、 The network bandwidth is lower than the bit rate of the live video.

2、 Packet loss is high, causing frequent retransmissions that use up already limited bandwidth.

3、 The network jitters: bandwidth fluctuates widely and the network is congested.

4、 The signal is weak, with frequent disconnections and reconnections.

If any one of these 4 conditions holds, the network can be called a weak network for audio and video, meaning that audio and video data cannot be transmitted normally because of the network.
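The four conditions above can be sketched as a simple check. This is only an illustration: the `NetworkSample` fields and every threshold (`max_loss`, `max_jitter_ratio`, `max_reconnects`) are hypothetical values chosen for the example, not figures from the article.

```python
from dataclasses import dataclass


@dataclass
class NetworkSample:
    bandwidth_kbps: float          # estimated available bandwidth
    loss_rate: float               # packet loss ratio, 0.0 to 1.0
    bandwidth_stddev_kbps: float   # spread of recent bandwidth estimates
    reconnects_per_min: float      # how often the connection is re-established


def weak_network_reasons(sample: NetworkSample, stream_kbps: float, *,
                         max_loss: float = 0.02,
                         max_jitter_ratio: float = 0.5,
                         max_reconnects: float = 1.0) -> list:
    """Return the conditions (1 to 4 in the text) that the sample meets."""
    reasons = []
    if sample.bandwidth_kbps < stream_kbps:
        reasons.append("bandwidth below stream bit rate")
    if sample.loss_rate > max_loss:
        reasons.append("high packet loss")
    if (sample.bandwidth_kbps > 0 and
            sample.bandwidth_stddev_kbps / sample.bandwidth_kbps > max_jitter_ratio):
        reasons.append("bandwidth jitter")
    if sample.reconnects_per_min > max_reconnects:
        reasons.append("frequent reconnects")
    return reasons


def is_weak_network(sample: NetworkSample, stream_kbps: float) -> bool:
    # Meeting any single condition is enough, as stated in the text.
    return bool(weak_network_reasons(sample, stream_kbps))
```

Returning the list of reasons rather than a bare boolean makes it easier to pick a matching mitigation later, for example lowering the bit rate for the first condition versus reconnecting elsewhere for the fourth.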

By this definition, in China everything other than wired networks and home or office Wi-Fi is a weak network. On 4G, video is barely playable when few people share the cell, even in a city center. Where many people gather, network quality cannot be guaranteed, and if they all play video over 4G at once they compete for bandwidth and viewing becomes practically impossible. For audio and video, 4G cannot guarantee network quality, so it also counts as a weak network. Stall duration on 4G is 2 to 3 times that on Wi-Fi.

Solution

The fundamental solution to the weak network problem is to improve network quality by building more network infrastructure. That requires heavy government investment and a long construction period, so it is hard to achieve a significant improvement in a short time, and it is outside the control of individuals or companies.

The root cause of the poor viewing experience on weak networks is insufficient bandwidth: not enough video data can be downloaded in the time available. The way to solve this is to reduce the amount of data needed for viewing, and thus the network load, by lowering image quality, dropping some video data, or raising the video compression ratio.

1. User-initiated bit rate switching

The player detects the network quality and notifies the user, who can then choose to play at a lower definition. The specific operation is as follows:

With this approach the user is aware of the change, and the decision is left to the user. When the network goes from good to bad, the user gets a prompt and the experience is acceptable. When the network goes from bad to good, however, there is no prompt and quality is not restored, which is a poor experience. If network quality changes frequently, the user has to switch frequently, the experience degrades quickly, and the user gives up watching.

Leaving the decision to the user also gives the impression that the product is not intelligent, and it does not make good use of the available bandwidth. To improve bandwidth utilization and the viewing experience, the switching logic needs to be controlled automatically by the player and the backend.

2. Client-side adaptive bit rate

The player estimates the network bandwidth using a network model and downloads a stream of the matching bit rate from the server. On a weak network it downloads a low bit rate stream, which improves the viewing experience by reducing the amount of data sent over the network.

If network quality keeps changing, the bit rate of the stream is adjusted dynamically to follow the actual conditions. There are two main ways to distribute live streams. One is segment-based: the stream is downloaded in segments over short-lived connections, as in DASH and HLS. The other uses a long-lived connection that carries all the audio and video data over a single connection, as in FLV and RTMP.

DASH/HLS is easy to implement. The index file carries the segment indexes for all bit rates, and the client picks the appropriate segment to download based on the actual network conditions. Switching between segments is simple: only the segment address changes and nothing else needs to be modified. Note that the timestamps of segments at different bit rates must be aligned, so that switching bit rates does not cause the picture to jump or rendering to fail.

In segment mode, the key to adaptive bit rate logic is the index file (the MPD). The index file and the segments can be downloaded over the same connection to reduce handshake cost. The structure of the MPD is as follows:

Because HLS/DASH segments do not depend on each other and their boundaries can be aligned on key frames, different bit rates can be switched seamlessly.

HLS/DASH latency is usually high. Shorter segments reduce some of the delay, but it is still affected by the index file, GOP size and other factors.

FLV/RTMP use a long-lived connection. Addresses for the different bit rates are generated by fixed rules, the streams are aligned by PTS, and each is sent starting from a key frame. P frames and B frames depend on other frames for decoding and cannot be rendered on their own, so switching must happen at a key frame. Aligning on P or B frames is more complex to implement, because streams at different bit rates may have different frame rates and their timestamps may not align exactly. In long-connection mode, streams at different bit rates use different connections. With HTTP/2, connections can be reused.
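The requirement to switch at a PTS-aligned key frame can be sketched as follows. This is a minimal illustration, assuming the player already knows the sorted key frame timestamps of the target stream. The function name and the way that list is obtained are hypothetical.

```python
from bisect import bisect_left
from typing import Optional, Sequence


def find_switch_pts(target_keyframe_pts: Sequence[int],
                    next_needed_pts: int) -> Optional[int]:
    """Return the PTS of the first key frame in the target stream that is
    at or after the next frame the player needs, or None if no such key
    frame has been produced yet.

    target_keyframe_pts must be sorted in ascending order.
    """
    i = bisect_left(target_keyframe_pts, next_needed_pts)
    if i < len(target_keyframe_pts):
        return target_keyframe_pts[i]
    return None


if __name__ == "__main__":
    # Key frames every 2000 ms in the target rendition (illustrative values).
    keyframes = [0, 2000, 4000, 6000]
    print(find_switch_pts(keyframes, 2500))
```

Until the returned PTS is reached, the player keeps rendering the current stream. From that key frame onward it can render the target stream without depending on frames it never received.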

Adaptive bit rate switching has to solve two problems. The first is boundary alignment: when switching between bit rates, the picture must stay smooth, without jumps that disturb the viewer. This can be solved by adding extra identifying information when the stream is segmented.

The second problem is the bit rate switching strategy, which is handled mainly on the player side. Switching strategies fall into the following categories:

1、 Buffer-based: the bit rate level of the next segment is decided from the state of the client's playback buffer.

2、 Rate-based: the bit rate of the next segment is decided from the predicted bandwidth.

3、 Hybrid: both the predicted bandwidth and the buffer state are used to decide the bit rate level of the next segment.

A reasonable switching algorithm has to consider bandwidth and buffer length, as well as switching frequency and switching amplitude, and possibly the live content itself.
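A hybrid strategy of the third kind might look like the sketch below. It is not the algorithm of any particular player. The safety factor, the buffer thresholds and the one-level limit on upward switches are assumptions made for illustration.

```python
from typing import Sequence


def choose_bitrate(ladder_kbps: Sequence[int], predicted_kbps: float,
                   buffer_s: float, current_kbps: int, *,
                   safety: float = 0.8, low_buffer_s: float = 2.0,
                   high_buffer_s: float = 6.0, max_step_up: int = 1) -> int:
    """Pick the bit rate for the next segment from bandwidth and buffer state."""
    ladder = sorted(ladder_kbps)
    cur = ladder.index(current_kbps)  # raises ValueError if not in the ladder

    # Rate-based part: highest level that fits within a safety margin
    # of the predicted bandwidth (lowest level if nothing fits).
    affordable = [i for i, rate in enumerate(ladder)
                  if rate <= safety * predicted_kbps]
    target = affordable[-1] if affordable else 0

    # Buffer-based part.
    if buffer_s < low_buffer_s:
        # Buffer nearly empty: step down at least one level.
        target = min(target, max(cur - 1, 0))
    elif buffer_s < high_buffer_s:
        # Buffer not yet comfortable: do not switch up.
        target = min(target, cur)

    # Limit the switching amplitude upward; downward switches are not
    # limited so the player can react quickly to a collapse in bandwidth.
    if target > cur:
        target = min(target, cur + max_step_up)
    return ladder[target]
```

Requiring a full buffer before switching up, and moving up only one level at a time, is one simple way to reduce both switching frequency and switching amplitude when bandwidth oscillates.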

At present this approach is used mainly by Kuaishou (Kwai). Its implementation is described at the following link:

https://cloud.tencent.com/developer/article/1652531

3. Layered coding / frame dropping (SVC)

H.264 SVC (Scalable Video Coding) is based on H.264 and extends its syntax and tool set to support bitstreams with a layered structure. H.264 SVC is Annex G of the H.264 standard and is also a new H.264 profile. It became an official standard in October 2007.

The bitstream produced by the encoder contains one or more sub-bitstreams that can be decoded separately. The sub-bitstreams may differ in bit rate, frame rate and spatial resolution.

Types of scalability:

Temporal scalability: bitstreams with different frame rates can be extracted from the bitstream.

Spatial scalability: bitstreams with different picture sizes can be extracted from the bitstream.

Quality scalability: bitstreams with different picture quality can be extracted from the bitstream.

Applications of SVC layered coding:

1. Video surveillance: surveillance video usually produces 2 streams, one of high quality for storage and one for preview. An SVC encoder can produce a 2-layer bitstream, with a base layer for preview and an enhancement layer that ensures high quality for the stored video. For remote preview on a mobile phone, the low bit rate base layer can be used.

2. Video conferencing: conference terminals use SVC to encode multiple resolutions and quality layers, and the conference hub replaces the traditional MCU approach of decoding and re-encoding with splitting and forwarding of video layers. Temporal scalability can also be used under packet loss, dropping some temporal layers to adapt to the network. SVC also has potential in cloud video.

3. Streaming media and IPTV: the server can discard quality layers according to network conditions to keep the video smooth.

4. Compatibility with different network environments and terminals.

Advantages: a layered bitstream is very flexible, since different bitstreams can be generated or extracted as needed. Producing layers with SVC is more efficient than encoding several times with AVC. Layered coding has technical advantages, and the newer H.265 codec also uses the idea of layering, which allows flexible use and better network adaptability.

An SFU copies the audio and video streams from the publishing client and distributes them to multiple subscribing clients. The typical application is one-to-many live streaming.

An SFU is a good way to solve server performance problems. Because it does not pay the computational cost of decoding and re-encoding video, it forwards all media streams with minimal overhead and can serve a very large number of clients. The design is "heavy terminal, light platform". Similar results to layered coding can, however, also be achieved with AVC simulcast.

SVC coding acts as FEC at the coding layer. This is forward error correction at the picture coding level: if some encoded data is lost, data from another layer can make up for it. A similar effect can be achieved with FEC at the network layer.

Disadvantages: decoding a layered bitstream is more complex. The base layer is an AVC-compatible bitstream, so its coding efficiency is unaffected. Under the same conditions, the compression efficiency of a layered bitstream is about 10% lower than that of a single-layer bitstream, and the more layers there are, the larger the loss. The current JSVM encoder supports at most 3 spatial layers. Under the same conditions, the decoding complexity of a layered bitstream is higher than that of a single-layer bitstream. SVC only became an official standard in October 2007, and its compatibility and interoperability are far behind AVC, so SVC is not widely used in practice.

Because SVC's complex decoding control does not suit pipelined processing, most hardware codecs do not support it. The few that do support only temporal layering (different frame rates), with just two layers in a multiple relationship, such as 30 fps and 15 fps. This kind of layering can be achieved with small changes on top of AVC. In the industry, SVC is basically implemented in software or on DSPs.

In theory SVC can be layered in the temporal, spatial and quality dimensions, with multiple layers in each. This creates many details and much complexity in protocol negotiation. Standards are not unified, and each vendor's SVC implementation is different. At present no products in the industry can interoperate at the SVC bitstream level. Products that claim SVC interoperability all go through a media gateway, which requires a second transcode. Interoperability with AVC also goes through a media gateway.

That is a brief introduction to SVC. For live streaming, temporal layered coding can be used: depending on network conditions, streams with different frame rates are obtained by discarding the video frames of some layers, which improves the live experience on weak networks.

Note: discarding the brown, green and blue frames in turn yields bitstreams with different frame rates.

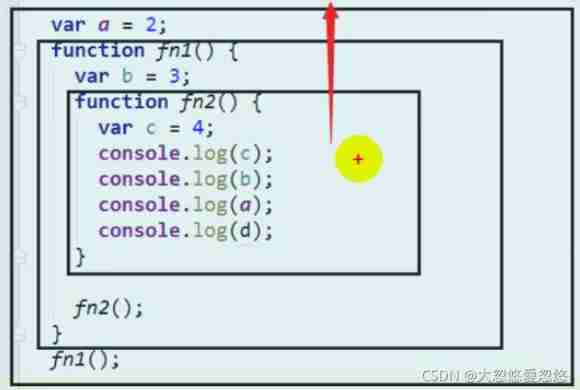
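Temporal layer dropping can be sketched as below. The example assumes a dyadic hierarchy with three temporal layers, which is a common arrangement but is not stated in the article. How the layers map to the colours in the figure is not known, so the sketch uses numeric layer IDs only.

```python
from typing import Iterable, List


def temporal_id(frame_index: int, num_layers: int = 3) -> int:
    """Temporal layer of a frame in a dyadic hierarchy (assumed structure).

    With 3 layers: indexes 0, 4, 8, ... are layer 0 (base),
    indexes 2, 6, 10, ... are layer 1, and odd indexes are layer 2.
    """
    period = 1 << (num_layers - 1)
    pos = frame_index % period
    if pos == 0:
        return 0
    trailing_zeros = (pos & -pos).bit_length() - 1
    return num_layers - 1 - trailing_zeros


def extract_substream(frame_indexes: Iterable[int], max_tid: int,
                      num_layers: int = 3) -> List[int]:
    """Keep only frames whose temporal layer is at most max_tid."""
    return [i for i in frame_indexes
            if temporal_id(i, num_layers) <= max_tid]


if __name__ == "__main__":
    one_second = range(30)  # one second of a 30 fps stream
    for tid in (2, 1, 0):
        kept = extract_substream(one_second, tid)
        print(f"max layer {tid}: {len(kept)} frames kept")
```

Because each layer references only frames in the same or a lower layer, dropping the highest layers leaves a stream that still decodes, at half or a quarter of the original frame rate.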

4. Cooperative frame dropping by client and server

AVC-encoded video frames depend on each other and cannot be dropped arbitrarily. If a dropped frame is referenced by other frames, those frames fail to decode and the picture becomes corrupted. In a live stream, the I frame is self-contained and can be rendered without other frames. It takes the most bytes and is the most important video frame. A P frame depends on a preceding I frame or P frame, so its dependencies are all forward. B frames are the smallest and depend on both the preceding and the following frames. They have the most dependencies but are not referenced by other frames, so they are the least important and can be dropped freely.

A group of pictures (GOP) contains I frames, P frames and B frames. Frames are dropped selectively according to importance and dependency. The strategies are as follows:

1、 Drop B frames.

2、 Starting from one of the P frames in the GOP, drop the rest of the GOP.

3、 Drop all B frames and P frames in the GOP, keeping only the I frame.

4、 Drop the whole GOP.

Note: audio data is very small and has little effect on the total data volume, so audio frames are generally not dropped, which also keeps the player decoding normally. From 1 to 4 the proportion of dropped frames rises and the strategy becomes more aggressive.
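The four strategies above can be sketched as a filter over one GOP. The sketch assumes the frames are given in decode order as the strings "I", "P", "B", and "A" for audio. The `keep_p` parameter, which decides from which P frame strategy 2 starts cutting, is a hypothetical knob, since the text does not say which P frame is chosen.

```python
from typing import List, Sequence


def drop_frames(gop: Sequence[str], level: int, keep_p: int = 1) -> List[str]:
    """Apply drop strategy 1 to 4 (0 means keep everything) to one GOP.

    gop is in decode order. Audio frames ("A") are never dropped.
    """
    if level not in range(5):
        raise ValueError("level must be between 0 and 4")
    kept = []
    p_seen = 0
    cut = False  # strategy 2: set once the chosen P frame is reached
    for frame in gop:
        if frame == "A":
            kept.append(frame)
            continue
        if level == 0:
            keep = True
        elif level == 1:
            keep = frame != "B"
        elif level == 2:
            if frame == "P":
                p_seen += 1
                cut = cut or p_seen > keep_p
            keep = not cut
        elif level == 3:
            keep = frame == "I"
        else:
            keep = False
        if keep:
            kept.append(frame)
    return kept


if __name__ == "__main__":
    gop = ["I", "P", "B", "B", "P", "B", "B", "P", "B", "B"]
    for level in range(5):
        print(level, drop_frames(gop, level))
```

In every case the frames that remain never reference a dropped frame, which is what keeps the decoder from producing a corrupted picture.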

Besides how to drop frames, it is also necessary to know when to drop them, which requires an accurate judgment of network state. At present most implementations decide by the backlog in the application-layer send queue. Once more than 5 s of data has accumulated, frame dropping starts and escalates step by step from 1 to 4. If the queue keeps growing, the stream moves to a state with a higher drop ratio.

After entering the frame dropping state, the server must periodically check whether the network has recovered. The recovery condition is that the queue stays empty for longer than a threshold, for example 5 s. The measure is then rolled back one step at a time, for example from 4 back to 3.
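The escalation and recovery rules can be sketched as a small controller. The 5 s thresholds come from the text. The class name, the periodic `update` call, and the rule of escalating only while the backlog is still growing are assumptions of this sketch.

```python
from typing import Optional


class FrameDropController:
    """Tracks the current drop level (0 = no dropping, 4 = drop whole GOP)."""

    MAX_LEVEL = 4

    def __init__(self, backlog_threshold_s: float = 5.0,
                 recover_after_s: float = 5.0) -> None:
        self.backlog_threshold_s = backlog_threshold_s
        self.recover_after_s = recover_after_s
        self.level = 0
        self._empty_since: Optional[float] = None
        self._last_backlog = 0.0

    def update(self, now_s: float, backlog_s: float) -> int:
        """Call periodically with the send-queue backlog in seconds of media."""
        if backlog_s > self.backlog_threshold_s:
            self._empty_since = None
            # Start dropping, or escalate if the backlog is still growing.
            if self.level == 0 or backlog_s > self._last_backlog:
                self.level = min(self.level + 1, self.MAX_LEVEL)
        elif backlog_s <= 0.0 and self.level > 0:
            if self._empty_since is None:
                self._empty_since = now_s
            elif now_s - self._empty_since > self.recover_after_s:
                # Queue has stayed empty long enough: roll back one step
                # and restart the timer for the next step.
                self.level -= 1
                self._empty_since = now_s
        else:
            self._empty_since = None
        self._last_backlog = backlog_s
        return self.level
```

Recovering one level at a time, with the timer restarted after each step, gives the hysteresis that keeps the level from oscillating when the network is only briefly idle.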

The frame dropping strategy above relies only on detection at the server. Server-side detection takes time, and when the network jitters frequently the policy is adjusted frequently too, which degrades the viewing experience.

If the client actively reports the user's network conditions to the server, detection time is saved and the server can make a combined decision from both client and server information. This lowers detection cost, reduces switching frequency and improves the viewing experience.

5. RTMP 302: disconnect and re-push

We use a real production case to introduce the problem. On March 9, 2021, a streamer was broadcasting from Brazil. The following figure shows the push frame rate curve of this stream:

Between 20:50 and 21:50 the overall push frame rate was very low and frame loss was severe. The audience, however, was very large, with more than 50,000 viewers online and close to 100G of downstream playback bandwidth.

Seen from CDN playback quality, the stall rate in the same period was very high and the viewing quality very poor.

As an emergency measure, the stream was cut to force the pushing end to push again, and push stability recovered quickly.

As shown in the figure above, after the re-push at about 21:47 the push frame rate stabilized at 30 fps and the stall rate returned to normal.

Analysis showed that the streamer was in Brazil and the upstream access node was also in Brazil. The server had a large number of slow-connection logs:

In fact, problems caused by a single slow TCP connection are common in complex overseas push environments. Overseas links are complicated and very long. Sometimes push quality is stable at first, but after a long time, once the network jitters, it deteriorates as described above and data transmission becomes unstable.

Developed regions such as Europe and the United States have good network quality and enough bandwidth for normal live pushing. In some developing regions, such as the Middle East and Southeast Asia, telecom infrastructure is poor, bandwidth is insufficient, and local operators impose various bandwidth limits. There the network tends to become unstable after pushing for a while, and disconnecting and pushing again restores it. To avoid being scheduled to the same node again, the access node is usually specified by configuring the host, so that normal pushing can resume.

Such anomalies can usually be solved by cutting the stream and pushing again, or by switching the push node. However, it is risky for the server to disconnect the streamer on its own initiative. If the pushing end does not handle it well, the streamer's push fails, which easily leads to complaints, so this is usually handled manually. The drawbacks of manual handling are obvious: the cost is high, problems are not dealt with in time, and handling takes a long time.

To solve these problems, an improved scheme was built on the RTMP 302 feature. When the server detects that a push is slow, it sends a new push address to the pushing end in an AMF control message. The pushing end combines this with local network conditions to decide whether to disconnect and push again.

The highlight of this scheme is that the server only makes a suggestion and does not make the decision. The client evaluates the information from both the terminal and the backend together, which is much more accurate than a decision made by one side alone.

To handle network anomalies, the RTMP 302 redirection scheme is adopted. The implementation logic is shown in the figure below:

Step one: during the push, the RTMP server continuously runs weak network detection on the client connection. Detection can be configured per domain name plus publishing point.

Step two: when a weak network is detected, the server reports to the scheduling system, requests a valid, high-quality push access node IP based on the client IP, assembles the complete redirect push address, and sends it to the pushing client in AMF data.

Step three: the pushing client recognizes the corresponding AMF data. After obtaining the redirect address, it combines it with local information to decide whether to disconnect and push again. If so, it pushes again using the address provided by the server, which resolves the slow connection.

In summary, during the push the client obtains the server's slow-connection information through RTMP 302, decides whether to disconnect and push again based on both client-side and server-side information, and so restores the live stream quickly and improves the push success rate.
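The client-side decision in step three might be sketched as follows. The article does not give the AMF message layout or the local signals used, so the `RedirectSuggestion` and `LocalStats` fields, the thresholds, and the minimum interval between re-pushes are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RedirectSuggestion:
    """Server suggestion parsed from the AMF data (hypothetical fields)."""
    new_url: str          # complete redirect push address from the server
    server_slow: bool     # server observed a slow ingest connection


@dataclass
class LocalStats:
    """Signals measured by the pushing client (hypothetical fields)."""
    send_queue_s: float               # seconds of media waiting to be sent
    dropped_frame_ratio: float        # share of frames dropped recently
    seconds_since_last_repush: float  # time since the last reconnect


def should_repush(suggestion: RedirectSuggestion, local: LocalStats, *,
                  queue_limit_s: float = 3.0, drop_limit: float = 0.1,
                  min_interval_s: float = 60.0) -> bool:
    """The server only suggests; the client decides using both views."""
    if not suggestion.server_slow or not suggestion.new_url:
        return False
    # Avoid reconnect storms when the network is jittering.
    if local.seconds_since_last_repush < min_interval_s:
        return False
    # Re-push only if the client also sees evidence of a slow uplink.
    return (local.send_queue_s > queue_limit_s
            or local.dropped_frame_ratio > drop_limit)
```

Requiring agreement between the server's observation and the client's own measurements is what separates this from a forced server-side disconnect: a push that looks slow to the server but healthy to the client is left alone.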

6. CDN dynamic scheduling

Users expect more and more from the live experience: the stream should open faster while remaining stable. Traditional scheduling has a low hit rate, takes time, is slow to remove faulty nodes, and uses resources poorly. It helps neither instant start (opening within a second) nor stability, so a scheduling scheme better suited to live streaming is needed.

Instant start is mainly affected by several delays:

1. Scheduling delay, including HTTPDNS, DNS, 302 redirects and so on.

2. Access delay, including connection setup delay, data download delay and rendering delay.

3. Back-to-source delay, including the total delay of scheduling, connection setup and data download when a cache miss goes back to the source.

Point 1 can be brought to zero delay with methods such as background pre-scheduling on the client. Point 2 can be greatly reduced through client logic optimization and nearby CDN access.

Point 3 is different. Because live streaming needs real-time data, a miss can only be served by going back to the source. A complete solution requires the client and the server to cooperate: without adding to the scheduling delay of point 1, quickly obtain nodes with a higher hit rate, so that back-to-source delay is largely avoided.

At present, traditional scheduling resolves the address of the CDN access point through DNS or HTTPDNS, which has several problems:

• Limited by the Local DNS TTL, users are slow to see the removal of abnormal CDN machines and changes to load balancing policy (this can take 5 minutes or more), which affects live stability.

• Limited by the number of IPs a DNS response can carry, the resources usable in a single region are limited and local overload occurs easily, which affects live stability. For example, one DNS resolution returned only 14 IPs.

• Scheduling cannot converge by stream. Streams with few requests have a low hit rate at the CDN edge, a long first-frame time, and a poor instant start experience. For example, when the new scheduling service turned off stream convergence scheduling, the hit rate dropped and the average first-frame time rose.

HTTPDNS/DNS address prefetching solves the scheduling delay but not the hit rate problem, so the instant start experience is not optimal. To get the best instant start experience, a multi-stream pre-scheduling scheme is proposed.

1. When the client starts 、 Prefetch the stream information that the customer will access next before sliding {M,X,Y,Z}.

2. Stream information {M,X,Y,Z} Dispatch the service interface through the manufacturer , Get the exact address of the stream {A,B,C,D}.

3. When the user finally clicks or accesses , You can directly access the corresponding address , Such as access flow M, Can hit directly A The cache of

This scheme uses a dedicated scheduling service for user access scheduling. The client first asks the scheduling service for the address of a CDN access point in advance, then uses that address to access the CDN. The scheme has the following characteristics:

• Batch prefetching. Scheduling results are fetched before the user taps into a live stream, and the results for several streams can be requested in one call. Batch prefetching avoids extra scheduling delay and helps instant start.

• Stream convergence scheduling. The scheduling service holds the list of all CDN machines, so it can converge requests globally by stream, which raises the hit rate and helps instant start.

• Fast fault detection and second-level disaster recovery. The scheduling service holds the status of all CDN machines, so it can balance load globally and improve resource utilization. It can also detect and remove abnormal nodes quickly. Both help live streaming stability.

The biggest feature of the new scheme is that it schedules by stream ID, which raises the CDN edge hit rate, improves instant start, and meets the particular needs of live streaming.
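To make the idea of scheduling by stream ID concrete, here is a minimal Python sketch. The article does not say how the scheduling service maps streams to nodes, so rendezvous hashing is used here purely as an assumed stand-in; the node names and the function names `pick_node` and `batch_schedule` are hypothetical.

```python
import hashlib

def pick_node(stream_id, healthy_nodes):
    """Rendezvous hashing: every request for the same stream lands on the
    same healthy node, so that node's cache for the stream stays warm."""
    def weight(node):
        digest = hashlib.sha256(f"{stream_id}|{node}".encode()).hexdigest()
        return int(digest, 16)
    return max(healthy_nodes, key=weight)

def batch_schedule(stream_ids, healthy_nodes):
    """Resolve several streams in one call, as a client would before the user taps."""
    return {sid: pick_node(sid, healthy_nodes) for sid in stream_ids}

nodes = ["edge-a", "edge-b", "edge-c", "edge-d"]   # hypothetical node names
plan = batch_schedule(["M", "X", "Y", "Z"], nodes)

# Simulate the node serving stream M failing: only streams on that node move.
survivors = [n for n in nodes if n != plan["M"]]
plan_after_failure = batch_schedule(["M", "X", "Y", "Z"], survivors)
```

With this kind of mapping, removing an abnormal node only remaps the streams that were on it, which fits the fast fault handling described above.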

As the following figure shows, the multi-stream scheduling interface brings a significant improvement in hit rate and first-frame time. The figure below shows that after the convergence function is turned off, the hit rate drops.

The figure below shows that with convergence turned off, the average first-frame time rises.

7. Video coding and compression

7.1 Fast HD technology

Once a bitrate increase reaches a certain size, the gain in perceived quality shrinks as the starting bitrate gets higher. So when the client is already playing at a sufficiently high bitrate, there is no need to step up the bitrate level even if the network improves, and the server does not need to encode very high bitrate renditions, which saves server resources.

Likewise, for a given bitrate decrease, the loss in perceived quality shrinks as the starting bitrate gets higher. On the one hand, the bitrate of the high-bitrate renditions stored on the server can therefore be lowered and that of the low-bitrate renditions raised. On the other hand, when the client is already playing at a very low bitrate, further reductions should be avoided as far as possible.

The human eye is most sensitive to images with low temporal complexity and high spatial complexity, and least sensitive to images with low temporal complexity and low spatial complexity. For insensitive video, neither the starting bitrate nor the size of a bitrate change has much effect on perceived quality. So when the client is playing insensitive content, it can step the bitrate up less often to save bandwidth, and the server does not need to encode many bitrate levels for such content, which saves server resources. At low resolution, the eye's perception of detailed, fast-moving images (high spatial and high temporal complexity) is limited, and a sharp bitrate increase does not help. So for this kind of video the client can also step up less often, and the server does not need to store many levels in the high-bitrate range.

The main idea of Tencent Cloud's Fast HD technology is to spend the encoded bits where they matter most to the eye. AI is used to recognize the video scene in real time, dividing video into major categories such as games, shows, sports, outdoors, animation, food, and film and TV drama, plus dozens of sub-categories. Scenes with distinctive picture features, such as games, football, basketball, and animation, use a CNN model with recognition accuracy above 98%. Scenes whose features are more scattered and whose inter-frame motion varies more, such as TV drama, outdoor sports, food, and travel, use a CNN combined with RNN+LSTM for temporal and spatial analysis, with accuracy around 85%.

Different live encoding parameters are configured for different scenes. The optimal encoding template is selected based on the source bitrate, frame rate, resolution, texture, and amount of motion, together with machine load and picture quality. Video categories are classified by scene, and different segments of the same video can use completely different encoding parameters. "Different parameters" includes but is not limited to I/B/P frame type, quantization parameter (QP), and resolution, and encoding parameters can be updated per frame in real time.

Whether with the standard H.264 JVT-G012 rate control algorithm or the x264 rate control algorithm, in scenes with obvious motion changes the goal is to bring the theoretical convex rate-distortion curve as close as possible to the optimal distortion curve. Domestic CDN bandwidth is mostly billed at the 95th percentile, with each bandwidth sample being a 5-minute average. For moving scenes, frames with an obvious scene switch are detected in real time, and on top of x264 rate control the pre-encoding QP value and bitrate are adjusted upward dynamically to protect picture quality. Because these scene switches are brief, the 5-minute average bandwidth is essentially unaffected, while the VMAF quality score improves by 2-3 points or more.
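The billing argument can be checked with a little arithmetic. The Python sketch below assumes a steady 2.0 Mbps stream with a single 2-second burst to 4.0 Mbps at a scene switch; these numbers and the function names are illustrative assumptions, not measurements from the article.

```python
import math

def five_minute_averages(per_second_mbps, window=300):
    """Collapse per-second bandwidth into 5-minute averages (the billing samples)."""
    return [sum(per_second_mbps[i:i + window]) / window
            for i in range(0, len(per_second_mbps), window)]

def percentile_95(samples):
    """Nearest-rank 95th percentile: the top 5% of samples are ignored."""
    ordered = sorted(samples)
    return ordered[math.ceil(0.95 * len(ordered)) - 1]

# One day at a steady 2.0 Mbps, with a 2-second burst to 4.0 Mbps at a scene switch.
day = [2.0] * 86400
day[1000:1002] = [4.0, 4.0]

samples = five_minute_averages(day)
burst_window = max(samples)      # the 5-minute sample that contains the burst
billed = percentile_95(samples)  # what 95th-percentile billing would charge on
```

In this example the burst lifts its own 5-minute sample by less than 1%, and the billed value does not move at all, which is why short bitrate boosts at scene switches are cheap under this billing model.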

For the H.264 format, an algorithm was designed to remove noise in the frequency domain of the video residual. It combines the residual size of the macroblock being encoded, the macroblock's QP value, historical frequency-domain values, and so on, selects a matching video denoise template for the scene, and adaptively applies macroblock-level processing, spending CPU on noisy macroblocks while leaving clean macroblocks intact.

In summary, through the measures above, Tencent Cloud's Fast HD can compress the video bitrate by about 30% without reducing video clarity, saving about 30% of CDN playback bandwidth, lowering the bandwidth viewers need, and improving their experience.

7.2 H.265 instead of H.264

H.265 is the video coding standard that ITU-T VCEG established as the successor to H.264. It is built around the existing H.264 standard, retaining some of the original techniques and improving others. It uses newer techniques to improve the trade-off among bitstream size, coding quality, delay, and algorithm complexity. Specific goals include higher compression efficiency, better robustness and error resilience, lower real-time delay, shorter channel acquisition time and random access delay, and lower complexity. Thanks to algorithm optimizations, H.264 can transmit standard-definition digital video (resolution below 1280×720) at under 1 Mbps; H.265 can transmit ordinary 720p (1280×720) HD audio and video at 1~2 Mbps.

H.263 can deliver broadcast-grade standard-definition digital TV (720×576, meeting the CCIR601 and CCIR656 standards) at 2~4 Mbps; H.264, thanks to algorithm optimizations, can transmit standard-definition digital video at under 2 Mbps; H.265 High Profile can deliver 1080p full HD video within a transmission bandwidth of under 1.5 Mbps.

Besides the gains in coding efficiency, H.265 is also significantly better at adapting to the network and runs well over the Internet and other complex network conditions.

In motion prediction, next-generation algorithms may no longer partition the picture into macroblocks and may instead take an object-oriented approach that directly identifies the moving subject in the picture. In transforms, next-generation algorithms may move away from the family of Fourier-based transforms. Many papers discuss this, and one notable direction is the so-called overcomplete transform, whose main feature is an M×N transform matrix in which M is greater than N, even much greater. The transformed vector is larger but contains many zero elements, so after entropy coding it yields a highly compressed stream.

As for computation, H.264 more than doubled the compression efficiency of MPEG-2 at the cost of at least 4 times the computation, so HD encoding needs a peak computing power of about 100 GOPS. Even so, it was still possible to build dedicated hardware with the mainstream IC process and common design techniques of 2013 while keeping mass-production cost at the previous level. A new technology accepted as a standard 5 years (or more) later should at least double the compression efficiency of H.264, and its computation is expected to grow by more than 4 times again. With the rapid progress of semiconductor technology, the mass-production cost of dedicated chips for the new technology should not rise significantly. So 500 GOPS is perhaps the upper limit of the computing power the new generation needs.

In real applications, H.265 saves about 30% of the bitrate at the same picture quality, and the major live streaming vendors now use H.265 to optimize quality and cost.

7.3 AV1

AV1 is a new open-source, royalty-free video compression format, jointly developed by the Alliance for Open Media (AOMedia) industry consortium and finalized in early 2018. Its main goal is a substantial compression gain over current codecs while keeping decoding complexity and hardware implementation practical.

AV1's development goals include, but are not limited to: consistent, high-quality real-time video delivery; compatibility with smart devices across a range of bandwidths; a manageable computational footprint; hardware optimization; and flexibility for commercial and non-commercial content. The codec started from VP9 tools and enhancements, after which AOMedia's codec, hardware, and testing working groups proposed, tested, discussed, and iterated on new coding tools. At the time of writing, the AV1 code base was in its final bug-fix phase and had merged a variety of new compression tools, along with high-level syntax and parallelization features designed for specific use cases. Compared with a high-performance libvpx VP9 encoder at the same quality, AV1 reduces the average bitrate by nearly 30%.

AV1 has two advantages over H.265. First, AV1 is open source and royalty-free, whereas H.265 is subject to licensing restrictions that can easily lead to disputes. Second, AV1's compression efficiency keeps improving and has already surpassed H.265 by some margin.

8. Protocol optimization

For audio and video scenarios, the congestion control and loss-retransmission algorithms of the underlying network protocol can be optimized.

8.1 TCP optimization

We also add algorithms to make the best use of the available bandwidth. For example, if at 600 kb the packet loss rate is low and the delay is under control, some higher-quality algorithms can raise the 600 kb ceiling to around 800~900 kb, with an obvious effect. Typical methods are FEC and ARQ.

FEC (forward error correction) originally came from optimizations at the optical-fiber level. Suppose 10 packets need to be transmitted. Without FEC, whenever one is lost the receiver has to ask for it again. FEC compensates in advance instead: when 10 packets are to be sent, the sender sends one extra packet computed from the first 10 by the FEC algorithm, and the receiver only needs any 10 of the 11 packets to reassemble the original data. As long as the packet loss rate stays below roughly 10%, no retransmission request is needed at all, so loss below that level has little effect on delay. The price is about 10% extra bandwidth. On networks where packet loss is high but bandwidth is not a problem, FEC reduces the cost of loss-triggered retransmission and keeps the added delay to a minimum.
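The "any 10 of 11" property can be shown with the simplest possible code: a single XOR parity packet. The article does not say which FEC code is used, and production systems typically use stronger codes such as Reed-Solomon, so treat this Python sketch as an illustration only. It assumes all packets have equal length, and the function names are made up for the example.

```python
from functools import reduce

def xor_bytes(a, b):
    """Byte-wise XOR of two equal-length packets."""
    return bytes(x ^ y for x, y in zip(a, b))

def make_parity(packets):
    """Parity packet: the XOR of all data packets."""
    return reduce(xor_bytes, packets)

def recover(received, parity):
    """Rebuild the single missing packet (marked None) from the others plus parity."""
    missing = received.index(None)
    present = [p for p in received if p is not None]
    rebuilt = reduce(xor_bytes, present, parity)
    return received[:missing] + [rebuilt] + received[missing + 1:]

data = [bytes([i]) * 8 for i in range(10)]   # 10 data packets of 8 bytes each
parity = make_parity(data)                   # the 11th packet

damaged = data[:6] + [None] + data[7:]       # the 7th packet is lost in transit
restored = recover(damaged, parity)
```

A single parity packet can repair only one loss per group of 11; tolerating more losses requires more redundancy, which is the bandwidth trade-off described above.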

With ARQ (automatic repeat request), the receiver automatically sends a retransmission request when it detects a lost packet. Suppose the sender sends ten packets, 1 through 10, and the receiver gets 1, 2, 3, 4, 5, 6, 8, 10 but not 7 and 9. The receiver then asks the sender to resend 7 and 9, and the sender repackages them and sends them again. ARQ is a very common retransmission algorithm and comes in continuous and non-continuous variants. ARQ and FEC can work together. If the bandwidth is good but the delay is noticeable, FEC is preferred as long as the packet loss rate stays within 20%; beyond 20%, FEC consumes more and more bandwidth and the whole link keeps deteriorating. In general, when the loss rate exceeds 20%, or in some cases even 10%, lowering the weight of FEC and relying more on ARQ gives a better result.
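The ten-packet example can be written down directly. This Python sketch only models the bookkeeping (gap detection on the receiver, lookup on the sender); timers, acknowledgements, and the network itself are left out, and the function names are hypothetical.

```python
def missing_sequences(received, highest_expected):
    """Receiver side: sequence numbers in 1..highest_expected that never arrived."""
    seen = set(received)
    return [seq for seq in range(1, highest_expected + 1) if seq not in seen]

def retransmit(send_buffer, requested):
    """Sender side: look up the requested packets in the send buffer."""
    return {seq: send_buffer[seq] for seq in requested}

send_buffer = {seq: f"payload-{seq}" for seq in range(1, 11)}
received = [1, 2, 3, 4, 5, 6, 8, 10]

nack = missing_sequences(received, highest_expected=10)
resent = retransmit(send_buffer, nack)
```

Each recovery costs at least one extra round trip, which is why ARQ alone is a poor fit for links with a large RTT.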

Besides FEC and ARQ, there is another algorithm called PacedSender. Video traffic has peaks and troughs: a single frame can be hundreds of KB, and there are I-frames and B-frames. An I-frame usually means a traffic peak, while a B-frame is very small, so the transmission is very bursty. If each frame is packetized and sent the moment the encoder produces it, a large amount of data hits the network at once, which can degrade the network and the call quality. WebRTC introduces a pacer for this. Based on the bitrate evaluated by the estimator, the pacer slices time into minimum units (5 ms) and sends the data out gradually, avoiding an instantaneous shock to the network. Its purpose is to spread video data evenly across the time slices at the estimated bitrate. In a weak-network WiFi environment the pacer is therefore a very important step: by stretching the send out over time, it smooths the jitter of the whole transmission.
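As a rough illustration of pacing, the Python sketch below spreads one large frame over 5 ms slices at a target bitrate. It is not WebRTC's implementation; the `pace` function, the 100,000-byte frame, and the 4 Mbps estimate are assumptions chosen for the example.

```python
def pace(frame_bytes, target_bps, interval_ms=5):
    """Return a send schedule [(time_ms, bytes), ...] for one encoded frame,
    sending at most the per-slice byte budget implied by target_bps."""
    budget = int(target_bps / 8 * interval_ms / 1000)   # bytes allowed per slice
    if budget <= 0:
        raise ValueError("target_bps is too low for the chosen interval")
    schedule, t, remaining = [], 0, frame_bytes
    while remaining > 0:
        chunk = min(budget, remaining)
        schedule.append((t, chunk))
        remaining -= chunk
        t += interval_ms
    return schedule

# A 100,000-byte I-frame paced at an estimated 4 Mbps.
slots = pace(frame_bytes=100_000, target_bps=4_000_000)
```

Instead of one 100,000-byte burst, the frame leaves in 2,500-byte slices over 200 ms, at the cost of that extra sending delay.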

These three algorithms effectively improve transmission efficiency and reduce the impact of packet loss in weak-network environments. Some people will bring up SRT. SRT is not an algorithm but an open-source package with FEC, ARQ, and related mechanisms built in; it uses UDP+FEC+ARQ to provide TCP-like reliability. TCP strictly puts quality first: no data may be lost, and once packet loss exceeds its limits the connection is broken, which is too harsh for audio and video. By comparison, UDP-based SRT is better suited to audio and video. Many companies in the industry have been testing SRT recently, and the results so far are very good.

Using BBR to optimize TCP traffic

BBR stands for Bottleneck Bandwidth and Round-trip propagation time. It is a network congestion control algorithm that Google introduced in 2016. To explain BBR, we first have to talk about TCP's traditional congestion control.

Traditional TCP congestion control is based on packet loss feedback. This is a passive mechanism: it infers congestion from packet loss events in the network. Even when the network load is very high, the protocol does not reduce its sending rate as long as no packet is lost.

TCP maintains a congestion window, cwnd, at the sender and uses it to control how much is sent. It follows AIMD (additive increase, multiplicative decrease): in the congestion avoidance phase the window grows additively, and when a packet is lost the window is cut multiplicatively.
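A few lines of Python are enough to see the AIMD sawtooth. The starting window of 10 MSS, the +1 per RTT increase, and the halving on loss are common textbook values assumed here for illustration.

```python
def aimd(loss_rounds, rounds, cwnd=10.0, increase=1.0, decrease=0.5):
    """Congestion-avoidance trace: add `increase` MSS per RTT,
    multiply by `decrease` in any round where a loss is detected."""
    trace = []
    for r in range(rounds):
        if r in loss_rounds:
            cwnd = max(1.0, cwnd * decrease)
        else:
            cwnd += increase
        trace.append(cwnd)
    return trace

# Eight round trips with a single loss in round 5.
trace = aimd(loss_rounds={5}, rounds=8)
```

One loss undoes many round trips of growth, which matters a great deal once losses are not actually caused by congestion.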

This kind of congestion control assumes that every packet loss is caused by congestion.

TCP congestion control tries to use as much of the network's spare bandwidth as possible to increase throughput. But loss-based protocols are aggressive when the network is close to saturation. On the one hand this greatly improves bandwidth utilization; on the other hand, for a loss-based protocol, pushing utilization up also means the next congestion loss is not far away. These protocols therefore raise the network's packet loss rate along with its utilization and make the jitter of the whole network worse.

The assumption is that packet loss is caused by congestion. In fact, loss has many other possible causes, such as drops caused by router policy, corrupted packets caused by WiFi interference, and a poor signal-to-noise ratio (SNR). These losses are not caused by congestion, yet they make TCP's control algorithm fluctuate widely, so the sending rate cannot climb even when the available bandwidth is good. A typical case is the long fat pipe: high bandwidth and large RTT. Such a path carries a lot of data in flight, so the chance of random loss is high, and TCP's sending rate never gets going.

Google's BBR handles this problem well. BBR is a congestion control algorithm based on bandwidth and delay feedback. It is a typical closed-loop feedback system: how much data to send and how fast to send it are adjusted continuously with each round of feedback.

The core of BBR is to find two parameters: the maximum bandwidth and the minimum delay. Their product is the BDP (bandwidth-delay product), the maximum amount of data the network link can hold. Knowing the BDP answers the question of how much data to send, and the maximum bandwidth answers the question of how fast to send it. If the network is a highway and the data is cars, the maximum bandwidth is the number of cars allowed through per minute, the minimum RTT is the time a car needs for a round trip when there is no congestion, and the BDP is the number of cars on the road.
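As a worked example of the BDP, the Python snippet below assumes a 10 Mbps bottleneck, a 120 ms minimum RTT, and a 1460-byte MSS. These values are illustrative assumptions, not figures from the article's measurements.

```python
def bdp_bytes(max_bandwidth_bps, min_rtt_ms):
    """Bandwidth-delay product: bytes that fit in the pipe without queuing."""
    return max_bandwidth_bps / 8 * min_rtt_ms / 1000

MSS = 1460  # bytes, an assumed typical value

bdp = bdp_bytes(max_bandwidth_bps=10_000_000, min_rtt_ms=120)
packets_in_flight = int(bdp // MSS)
```

Under these assumptions the pipe holds 150,000 bytes, or about 102 full-size packets; keeping more than that in flight only builds a queue.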

How BBR detects the maximum bandwidth and minimum delay

How does BBR detect the maximum bandwidth and minimum delay? First of all, the two cannot be measured at the same time.

In the figure, the horizontal axis is the amount of data in the network link and the vertical axes are RTT and bandwidth. While RTT stays flat, the bandwidth keeps rising and has not reached its maximum, because the network is not yet congested. Once the bandwidth stops rising, RTT keeps growing until packets are lost: the network has started to congest, packets pile up in router buffers, delay keeps increasing, and the bandwidth no longer grows. The vertical BDP line in the figure marks the ideal point of maximum bandwidth and minimum delay. Clearly, it is hard to measure the minimum RTT and the maximum bandwidth at the same moment, so the two must be probed separately.

To probe the maximum bandwidth, send as much data as possible and fill the network buffers; when the bandwidth stops increasing for a period of time, that value is the current maximum bandwidth.

To probe the minimum RTT, drain the buffers as far as possible so that delivery delay is as low as it can be.

For this, BBR introduces a state machine with a state for each probing phase.

The state machine has 4 states: Startup, Drain, ProbeBW, and ProbeRTT.

Startup is similar to slow start in ordinary congestion control. Its gain factor is 2/ln2, and the sending rate grows by this factor every round trip. When the bandwidth is estimated to be full, BBR enters the Drain state: if for three consecutive round trips the measured maximum bottleneck bandwidth has not grown by 25% or more over the previous round, the bandwidth is considered full.

In the Drain state the gain factor is below 1, so the sender slows down. The aim is to drain, within about one round trip, the queue that built up during Startup. How is the queue judged empty? The number of packets sent but not yet ACKed, inflight, is compared with the BDP. inflight < BDP means the queue is empty and the path is no longer overfull; inflight > BDP means BBR cannot move to the next state yet and stays in Drain.

ProbeBW is the steady state. A maximum bottleneck bandwidth has already been measured, and BBR tries not to build a queue. In every subsequent round trip BBR stays in ProbeBW (unless it has to enter ProbeRTT) and cycles through the gain factors [5/4, 3/4, 1, 1, 1, 1, 1, 1], so the estimate hovers around the point where the bottleneck bandwidth stops growing. BBR should spend most of its time in ProbeBW.

ProbeRTT can be entered from any of the first three states. If no smaller RTT has been observed for more than ten seconds, BBR enters ProbeRTT and reduces the amount it sends to clear the path and measure an RTT, staying for at least 200 ms or one round trip before leaving. On exit it checks whether the bandwidth is full: if not, it goes to Startup; if so, it goes to ProbeBW.
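The transitions described above can be summarized in a small Python sketch. It is a simplified reading of the prose, not Google's implementation: the function names, the list-based bandwidth history, and the `probe_rtt_done` flag are assumptions made for the example.

```python
def bandwidth_is_full(bw_history, growth=1.25, rounds=3):
    """True when none of the newest `rounds` samples beats the earlier best by 25%."""
    if len(bw_history) <= rounds:
        return False
    baseline = max(bw_history[:-rounds])
    return all(bw < baseline * growth for bw in bw_history[-rounds:])

def next_state(state, *, full, inflight, bdp, min_rtt_age_s, probe_rtt_done=False):
    """One step of the Startup / Drain / ProbeBW / ProbeRTT state machine."""
    if state != "ProbeRTT" and min_rtt_age_s > 10:
        return "ProbeRTT"                      # min RTT sample is stale
    if state == "Startup":
        return "Drain" if full else "Startup"
    if state == "Drain":
        return "ProbeBW" if inflight < bdp else "Drain"
    if state == "ProbeRTT":
        if not probe_rtt_done:                 # still inside the 200 ms / 1 RTT hold
            return "ProbeRTT"
        return "ProbeBW" if full else "Startup"
    return "ProbeBW"

# Bandwidth doubled per round, then flattened out for three rounds.
full = bandwidth_is_full([10, 20, 40, 41, 42, 42])
state = next_state("Startup", full=full, inflight=150, bdp=100, min_rtt_age_s=1)
```

Here the flat bandwidth samples mark the pipe as full, so the sender leaves Startup for Drain and stays there until inflight falls below the BDP.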

BBR does not cut its throughput sharply because of a single or occasional packet loss, so it tolerates loss much better than traditional TCP.

As the figure shows, CUBIC's throughput falls very quickly as the packet loss rate rises, whereas BBR's throughput only drops noticeably once the loss rate goes above 5%.

The essential difference between BBR and loss-based CUBIC or delay-based Vegas is that BBR ignores random packet loss and short-lived delay fluctuations. It samples in real time and keeps measurements over a time window, which preserves an accurate picture of the available bandwidth. In fact, packet loss does not necessarily mean less bandwidth, and neither does higher delay. CUBIC cannot tell whether a loss was caused by congestion, and Vegas is so sensitive to delay increases that it competes poorly for bandwidth.

Because BBR can tell noise loss from congestion loss, it resists packet loss better than traditional TCP congestion control.

BBR in real-time audio and video

Real-time audio and video systems need low delay and smooth playback, but real networks are complex and changeable: packet loss, delay, and bandwidth change all the time. This places high demands on the congestion control algorithm, which needs accurate bandwidth estimation and good resistance to packet loss and jitter.

Google's WebRTC currently provides the GCC control algorithm, a sender-side algorithm based on delay and packet loss. Its principle is described in detail in many places, so it is not repeated here. The main problem with GCC for real-time audio and video is that it tracks bandwidth changes slowly, so when the bandwidth changes it cannot detect the new value promptly and accurately, which can cause audio and video stalls.

Since BBR resists packet loss so well, it is natural to think of applying it to real-time audio and video. But BBR was not designed for that, so a few problems need to be addressed.

First, BBR's throughput falls off a cliff once the packet loss rate reaches 25% or more.

This follows from the fixed parameters in BBR's pacing_gain array, [5/4, 3/4, 1, 1, 1, 1, 1, 1].

In the pacing_gain array, the multiplier for the gain phase is 5/4, a gain of 25%. Put simply, BBR can send 25% more data during the gain phase.

The question is whether the packet loss rate, x%, cancels out that 25% gain during the gain phase, that is, whether x is greater than 25.

Suppose the packet loss rate is fixed at 25%. In the gain phase, the 25% gain is completely cancelled by the 25% loss, so there is no net benefit. Next comes the drain phase: with the loss rate unchanged, BBR sends 25% less data and still loses 25% of it. For the following 6 RTTs the 25% loss continues, and the sending rate, which is based purely on feedback, falls by 25% each time. Across all 8 phases of the pacing_gain cycle the amount of data sent only ever shrinks, and this keeps going, so throughput falls off a cliff.
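The collapse can be reproduced with a deliberately crude model. In the Python sketch below, each phase of a cycle delivers gain × estimate × (1 − loss), capped at the link capacity, and the next estimate is the best delivery rate seen in the cycle. This max-over-one-cycle filter is my own simplification for illustration, not BBR's actual windowed filter, and the function name is hypothetical.

```python
PACING_GAINS = [5/4, 3/4, 1, 1, 1, 1, 1, 1]

def estimate_after_cycles(loss_rate, cycles, capacity=1.0, gains=PACING_GAINS):
    """Toy model of the bandwidth estimate under a constant random loss rate."""
    bw_estimate = capacity
    for _ in range(cycles):
        # Delivery rate fed back in each phase of the cycle, capped by the link.
        samples = [min(capacity, gain * bw_estimate * (1 - loss_rate))
                   for gain in gains]
        bw_estimate = max(samples)
    return bw_estimate

light = estimate_after_cycles(loss_rate=0.05, cycles=10)   # stays at capacity
heavy = estimate_after_cycles(loss_rate=0.25, cycles=10)   # decays every cycle
```

In this toy model the estimate shrinks whenever 5/4 × (1 − loss) is below 1, because even the probing phase no longer delivers more than the current estimate. At 25% loss it falls to roughly half of capacity after ten cycles and never recovers.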

To fight packet loss, the loss rate in each cycle has to be taken into account and compensated. For example, when the loss rate reaches 25%, the gain is raised to 50%, which avoids the feedback reduction caused by loss. But how can noise loss be told apart from congestion loss? The answer lies in RTT: as long as RTT does not increase within the time window, the loss is not caused by congestion.

Second, BBR's minimum RTT has a 10 s timeout. After it expires, BBR enters ProbeRTT and stays there for at least 200 ms. In this state, to drain the congestion, only 4 packets are allowed in flight, so audio and video data pile up in the send queue during this period and delay increases.

A feasible solution is to drop the ProbeRTT state and instead drain congestion through several rounds of rate reduction before sampling the minimum RTT. That is, when inflight > bdp, set the pacing gain to 0.75 and send at 0.75 times the bandwidth for as many rounds as needed until inflight < bdp. In addition, the minimum RTT timeout is changed to 2.5 s. In other words, avoiding very aggressive probing prevents large swings in the sending rate and reduces the delay that builds up in the send queue while new bandwidth is being probed.

Third, as mentioned at the start, the probing phase of the pacing gain array runs at 1.25 times the bandwidth and is followed by a phase at 0.75 times. Between these two RTT phases the sending rate drops sharply, which can leave audio and video data stuck in the buffer and introduce unnecessary delay.

The solution is to reduce the amplitude of the probing and drain phases, for example with pacing gains of [1.1, 0.9, 1, 1, 1, 1, 1, 1]. The advantage is that the media stream is sent smoothly without large swings in the sending rate. The disadvantage is that probing takes longer when the network bandwidth improves.

Fourth, BBR converges slowly when detecting a new bandwidth.

The convergence of the original BBR algorithm is affected by the pacing gain cycle. When bandwidth drops suddenly, BBR needs several rounds to come down to the actual bandwidth, because it can only slow down once per cycle, and the 6 hold RTTs in the pacing gain array stretch this out considerably. The solution is to randomize the 6 hold phases of the pacing gain: if a phase is chosen to run at 0.75 times, the rate is reduced once more, which greatly shortens BBR's convergence time.

Finally, the BBR algorithm looks simple, but applying it to real-time audio and video is not, and takes a lot of experimental tuning. Google has also introduced BBR in WebRTC, where it is still under test. The improvements described here are some of NetEase Yunxin's attempts in this area, offered as a starting point in the hope that more interested people will help bring BBR to real-time audio and video.

https://zhuanlan.zhihu.com/p/63888741

First-frame optimization for high-RTT scenarios

As is well known, TCP congestion control includes slow start, congestion avoidance, congestion handling, and fast retransmit. In slow start, the congestion window cwnd is initialized to 1 when the connection is established, meaning one MSS of data can be sent. Each ACK received increases cwnd by one, so after every round-trip time (RTT) cwnd has doubled, which is exponential growth.

Slow start is really TCP probing how much the current network can carry, so that it does not disrupt the path straight away. From a fairness point of view this is reasonable. On links with a small RTT, say below 20 ms, the sending rate also ramps up quickly, so transmission efficiency is not affected.

On links with RTT > 100 ms, however, the problem is harder. Take users in Brazil accessing a CDN node in the United States, where the RTT is roughly 120 ms. We tested a case before optimization:

The first IDR frame was about 152k, a relatively high bitrate, and took 654 ms.

Analysis of the packet capture:

With an RTT of 120 ms, each step of rate growth takes too long. In detail, the TCP stack's default initial window is 10 MSS. The 152k of data is roughly 106 MSS, so it takes about 4 or 5 RTTs to send it all.

Changing the default from 10 MSS to 40 MSS speeds up window growth.
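The effect of the initial window is easy to estimate with an idealized slow-start model: no loss, the window doubles every round trip, and the handshake and receiver window are ignored. The Python function below is a back-of-the-envelope sketch under those assumptions, not a model of the real kernel.

```python
def rtts_to_send(total_mss, initial_cwnd):
    """Round trips needed to send total_mss segments under ideal slow start."""
    cwnd, sent, rounds = initial_cwnd, 0, 0
    while sent < total_mss:
        sent += cwnd      # one window per round trip
        cwnd *= 2         # slow start doubles the window each RTT
        rounds += 1
    return rounds

default_rounds = rtts_to_send(106, initial_cwnd=10)   # 10 + 20 + 40 + 80 -> 4 rounds
tuned_rounds = rtts_to_send(106, initial_cwnd=40)     # 40 + 80 -> 2 rounds
```

For the 106-MSS first frame this gives 4 round trips with the default window and 2 with a 40-MSS window. Real links will not match the ideal model exactly, but the direction agrees with the measured first-frame improvement.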

Specific modification points: tcp_init_cwnd(), the TCP_INIT_CWND macro, and sysctl parameters. A proc parameter that controls initcwnd is added to the kernel; this method takes effect for all TCP connections.

In the same environment, 184k of data now takes only 492 ms. The first-frame time drops by about 160 ms, an improvement of 24.4%.

Adaptive initial-window optimization:

If only the initial window size is tuned, it is necessarily a fixed value, which cannot adapt to the bit rate of each live stream or to different clients. We therefore propose a more advanced scheme built around a new dynamic proc parameter, init_win_size:

1. Collect bw (historical bandwidth) per client IP, and use it to estimate the bandwidth of new connections.

2. Update the first-frame size of each stream, first_I_size, in real time.

When a new request arrives:

if (bw_hit) {  // historical bw found for this client IP
    if (bw >= first_I_size)
        init_win_size = first_I_size / MSS;
    else
        init_win_size = bw / MSS;
} else {  // no historical bw
    init_win_size = 48;  // statistical value from the normal distribution of overall historical data
}

The overall production data show that the adaptive scheme yields a further 5% improvement on top of the default value of 48.
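The selection logic can also be written as a small runnable function. This is only a sketch: `pick_init_win_size` and `bw_history` are hypothetical names, the MSS of 1460 bytes is assumed, and the bandwidth estimate is treated as a byte budget so that it can be compared with the first-frame size in the way the pseudocode does. The lower bound of one segment is an addition that the article does not mention.

```python
DEFAULT_INIT_WIN = 48  # fallback used by the article when no history exists

def pick_init_win_size(bw_history, client_ip, first_i_size, mss=1460):
    """Choose an initial window (in MSS units) for a new connection.

    bw_history maps client IP -> historical estimate in bytes. Treating
    the estimate as a byte budget is an assumption of this sketch.
    """
    bw = bw_history.get(client_ip)
    if bw is None:                   # no history for this client IP
        return DEFAULT_INIT_WIN
    budget = min(bw, first_i_size)   # same result as the two-branch comparison
    return max(1, budget // mss)     # assumed lower bound of one segment

history = {"203.0.113.7": 200_000, "203.0.113.8": 50_000}
frame = 152 * 1024
print(pick_init_win_size(history, "203.0.113.7", frame))   # limited by frame size
print(pick_init_win_size(history, "203.0.113.8", frame))   # limited by history
print(pick_init_win_size(history, "198.51.100.9", frame))  # no history: default
```

Using `min` is equivalent to the `if`/`else` pair above: the window covers the first frame when the estimate allows it, and is otherwise capped by the estimate.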

Using TCP Fast Open for 0-RTT back-to-source

The barrier for an anchor to start broadcasting on any live platform is very low, so there are many unpopular anchors and only a few who produce quality content. Traffic is concentrated in the top 5%~10% of live rooms, which means a large proportion of rooms are unpopular and have very few viewers.

Many CDNs and origin stations are multi-layered, and most data transfer between layers goes over the public network. To reduce the cost of internal data transfer, both the edge and the middle layer stop pulling a stream when it has no audience, so only the central node holds the live stream data. When a viewer arrives, the request goes back to the source level by level, and each level creates a new back-to-source connection. TCP connection establishment takes at least one RTT, so across multiple levels the accumulated time becomes considerable.

To reduce back-to-source time, we recommend enabling TCP Fast Open when TCP connections are established.

The following figure shows normal connection establishment followed by an HTTP GET request. The HTTP GET is sent together with the ACK, so the request costs 1 RTT+.

The following figure shows the flow with TCP Fast Open enabled, which achieves 0 RTT and greatly reduces connection setup time.

The process when the client establishes a connection for the first time:

1. The client sends a SYN that carries the Fast Open option with an empty Cookie, which indicates that the client is requesting a Fast Open Cookie;

2. A server that supports TCP Fast Open generates a Cookie and returns it to the client in the Fast Open option of the SYN-ACK;

3. After receiving the SYN-ACK, the client caches the Cookie from the Fast Open option locally.

Therefore the first HTTP GET request still goes through the normal three-way handshake.

Afterwards, when the client connects to the server again:

1. The client sends a SYN that contains "data" (in an ordinary, non-TFO TCP handshake the SYN carries no data) together with the previously cached Cookie;

2. A server that supports TCP Fast Open validates the received Cookie. If the Cookie is valid, the server acknowledges both the SYN and the "data" in its SYN-ACK and then delivers the "data" to the application. If the Cookie is invalid, the server discards the "data" carried in the SYN, and the SYN-ACK acknowledges only the sequence number of the SYN;

3. If the server accepted the "data" in the SYN, it can start sending "data" before the handshake completes, which saves one RTT of handshake time;

4. The client sends an ACK to acknowledge the server's SYN and "data". If the "data" the client sent in its initial SYN was not acknowledged, the client resends that "data";

5. From then on, data transfer on the TCP connection is the same as in the normal, non-TFO case.

Therefore subsequent HTTP GET requests can bypass the three-way handshake, which saves one RTT of handshake time.
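The cookie exchange can be illustrated with a toy model of the server side. This is a sketch only: `ToyTfoServer` is a hypothetical class, the HMAC-based cookie is an assumption chosen for illustration and is not the kernel's actual cookie construction, and no real sockets are involved.

```python
import hashlib
import hmac
import os

class ToyTfoServer:
    """Toy model of the server side of the Fast Open cookie exchange."""

    def __init__(self):
        self._secret = os.urandom(16)   # hypothetical per-server secret

    def _cookie_for(self, client_ip):
        # Bind the cookie to the client IP so it cannot be replayed from elsewhere.
        digest = hmac.new(self._secret, client_ip.encode(), hashlib.sha256).digest()
        return digest[:8]

    def on_syn(self, client_ip, cookie=None, data=b""):
        """Return (cookie_to_send, accepted_data) for an incoming SYN."""
        if cookie is None:
            # First connection: an empty cookie option requests a cookie.
            return self._cookie_for(client_ip), b""
        if hmac.compare_digest(cookie, self._cookie_for(client_ip)):
            return None, data           # valid cookie: data delivered with the SYN
        return None, b""                # invalid cookie: data dropped, SYN only

server = ToyTfoServer()
request = b"GET /live/stream.flv HTTP/1.1\r\n\r\n"
cookie, first = server.on_syn("192.0.2.10")                # first connection
_, second = server.on_syn("192.0.2.10", cookie, request)   # later connection
_, forged = server.on_syn("192.0.2.10", b"badcookie", request)
print(first, second, forged)
```

The first call returns a cookie and accepts no data, the second delivers the request with the SYN, and the forged cookie causes the data to be dropped, which mirrors the steps described above.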

Compared with QUIC, TCP Fast Open needs no additional development or adaptation: it reuses the existing architecture and still achieves 0 RTT.

With three-layer back-to-source and an RTT of about 20 ms between servers, the cold-stream scenario saves about 40 ms of delay. Server clusters are usually spread across regions, in which case the gain is even more pronounced.

8.2 UDP-based improvements:

UDP can be summed up as a "fire and forget" protocol. It is stateless and provides neither congestion control nor reliable delivery, which has also led network operators to impose limits on UDP traffic. Protocols built on UDP are implemented at the application layer, so congestion control and retransmission algorithms can be optimized there for the characteristics of audio and video scenarios.

QUIC protocol

QUIC is a low-latency Internet transport-layer protocol built on UDP. The TCP/IP protocol family is the foundation of the Internet, and its transport layer mainly consists of TCP and UDP. Compared with TCP, UDP is lighter weight but does far less error checking. This means UDP is more efficient but less reliable than TCP.

As a new transport-layer protocol, QUIC addresses the respective shortcomings of TCP and UDP. It incorporates features of TCP, TLS, and HTTP/2, but runs over UDP. One of QUIC's main goals is to reduce connection latency.

Because QUIC's underlying transport is UDP, UDP performance optimizations directly affect QUIC's transmission performance.

QUIC was designed to optimize HTTP/2 semantics, with HTTP/2 as its primary application-layer protocol. QUIC provides multiplexing and flow control equivalent to HTTP/2, transport security equivalent to TLS, and reliability and congestion control equivalent to TCP. QUIC is implemented in user space, and Chrome already has built-in QUIC support. Because QUIC is a user-space transport protocol on top of UDP, updates to QUIC are not held back by end devices or middleboxes that do not support a new transport protocol or that upgrade slowly.

QUIC is functionally equivalent to TCP + TLS + HTTP/2, but is built on UDP. The advantages of QUIC over TCP + TLS + HTTP/2:

1. Less time spent on the TCP three-way handshake and the TLS handshake. Connection setup is shorter; native TCP needs 1.5 RTTs to establish a connection before data can be transferred.

2. Improved congestion control. The congestion control algorithm can be updated flexibly and tuned.

3. Multiplexing that avoids head-of-line blocking. This only helps with parallel HTTP requests.

4. Connection migration. Connections are identified by connection ID, so no reconnection is needed on a weak network.

5. Forward error correction with redundancy.
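Connection migration in the list above can be illustrated with a toy demultiplexer that looks sessions up by connection ID rather than by source address. This is a sketch under stated assumptions: `ToyQuicDemux` and its fields are hypothetical, and a real QUIC stack also validates the new path before using it, which is omitted here.

```python
class ToyQuicDemux:
    """Toy demultiplexer: sessions are keyed by connection ID, not by address."""

    def __init__(self):
        self.sessions = {}   # connection_id -> session state

    def on_packet(self, connection_id, src_addr, payload):
        session = self.sessions.setdefault(
            connection_id, {"addr": src_addr, "bytes": 0, "migrations": 0}
        )
        if session["addr"] != src_addr:
            # Same connection ID from a new address: keep the session,
            # only update the path (for example Wi-Fi to cellular).
            session["addr"] = src_addr
            session["migrations"] += 1
        session["bytes"] += len(payload)
        return session

demux = ToyQuicDemux()
demux.on_packet("c1", ("10.0.0.2", 5000), b"abc")            # over Wi-Fi
state = demux.on_packet("c1", ("172.16.0.9", 6000), b"de")   # after a network switch
print(state)
```

A TCP server keyed on the address and port 4-tuple would treat the second packet as belonging to an unknown connection, whereas here the byte counter simply continues.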

Applicable scenarios:

1. Long-distance transmission, that is, links with a large RTT (long fat pipes). This is common on overseas networks, and the improvement is obvious.

2. Mobile Internet, where clients switch networks frequently, network conditions are more complex, and jitter is frequent.

3. Pages that request a large number of resources over many concurrent connections. For more complex websites the improvement is good.

4. Encrypted transmission is required. QUIC was originally designed to improve HTTPS performance, and its optimized versions are also based on HTTPS, so data that needs encryption can use it directly with no adaptation.

Audio and video scenarios differ considerably from ordinary website requests and have distinct characteristics:

1. A large amount of data is transmitted, requiring far more bandwidth than ordinary web page text.

2. The content is not highly sensitive, so encryption is not required.

3. In the main application scenarios a terminal or user watches only one video at a time, so there are few concurrent connections.

4. After CDN acceleration the server is very close to the user, so RTTs are very small.

Domestic live video mainly uses HTTP, which accounts for more than 90%. Mobile clients basically use HTTP; some web browsers have mandatory requirements, so a portion of traffic uses HTTPS.

Various domestic live platforms have tried QUIC within China, and the improvement was not obvious. Overseas, where network RTTs are larger, the improvement is significant.

QUIC does not work well on a CDN out of the box. Some modifications and improvements are needed to achieve the optimization effect:

1. Share configuration information across server machines to raise the 0-RTT ratio. After this optimization the 0-RTT ratio can rise above 90%.

2. Improve encryption and decryption performance to reduce the overhead they cause.

3. Use BBR or another congestion control algorithm with better performance.

4. Split out the encryption logic and provide a version of QUIC without encryption and decryption.

Current QUIC deployments all accelerate the network without changing the business logic, for example RTMP over QUIC for stream pushing, and HTTP-FLV over QUIC or HLS over QUIC for playback.

At present QUIC is mainly used to solve the last-mile problem, that is, pulling live streams. At a 20% packet loss rate QUIC can still maintain stable transmission.

QUIC is more like a refined version of TCP. According to Google, after adopting QUIC, YouTube's rebuffer rate fell by up to 18%.

SRT protocol

SRT is based on the UDT transport protocol and retains UDT's core ideas and mechanisms, with strong resistance to packet loss. In recent years, as technology has developed, RTMP has become less and less adequate: it has not been updated for nearly 10 years, and this year major video websites have even banned RTMP streaming. Against this background, we found that SRT may be a more reliable way forward.

Building on UDT, SRT mainly improves latency and packet-loss resistance. In real-time audio and video, SRT paces packet sending by time, which gives it good protection against traffic bursts. SRT exposes rich congestion control statistics to the upper layer, including RTT, packet loss rate, inflight, and send/receive bitrate. With this information we can predict bandwidth and, at the encoding layer, perform adaptive dynamic encoding and congestion control as the bandwidth changes.
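How such statistics might drive the encoder can be sketched as follows. Everything here is hypothetical: `LinkStats` is not the SRT API, and the thresholds (5% loss, 300 ms RTT) and step sizes (back off to 85%, probe up by 5%) are illustrative values rather than figures from the article.

```python
from dataclasses import dataclass

@dataclass
class LinkStats:
    """Hypothetical snapshot of transport statistics (not the SRT API)."""
    rtt_ms: float
    loss_rate: float          # fraction of packets lost, 0.0 to 1.0
    send_bitrate_bps: float   # measured sending rate

def next_encoder_bitrate(current_bps, stats, floor_bps=300_000, ceil_bps=6_000_000):
    """Pick the next encoder bitrate from the latest link statistics."""
    if stats.loss_rate > 0.05 or stats.rtt_ms > 300:
        # Link looks congested: back off below what was actually being sent.
        target = 0.85 * min(current_bps, stats.send_bitrate_bps)
    else:
        # Link looks healthy: probe upward gently.
        target = 1.05 * current_bps
    return int(max(floor_bps, min(ceil_bps, target)))

healthy = LinkStats(rtt_ms=50, loss_rate=0.0, send_bitrate_bps=2_000_000)
congested = LinkStats(rtt_ms=120, loss_rate=0.12, send_bitrate_bps=1_500_000)
print(next_encoder_bitrate(2_000_000, healthy))
print(next_encoder_bitrate(2_000_000, congested))
```

The asymmetric steps (small increase, larger decrease) are a common design choice for rate adaptation: the encoder backs off quickly when the link degrades and recovers gradually.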

Our EasyDSS platform, which previously supported RTMP streaming, does not lose packets, but when the network is poor the server buffers packets and delay accumulates, usually reaching a few seconds. This is a common flaw of RTMP. With SRT, because it runs over UDP and uses an ARQ packet-loss recovery mechanism, transmission delay over the public network can be kept within 1 s.
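The idea behind ARQ loss recovery can be shown with a minimal sketch: the receiver detects gaps in the sequence numbers and asks the sender to retransmit only those packets. The function names are hypothetical, and the sketch omits the timers and latency limits that a real implementation such as SRT needs.

```python
def missing_sequences(received, highest_expected):
    """Return the sequence numbers in [0, highest_expected] that never arrived."""
    seen = set(received)
    return [seq for seq in range(highest_expected + 1) if seq not in seen]

def recover(sent_packets, received):
    """One round of ARQ: the receiver reports gaps, the sender retransmits them."""
    nak = missing_sequences(received.keys(), max(sent_packets))
    for seq in nak:
        received[seq] = sent_packets[seq]   # retransmission, assumed to arrive
    return nak

sent = {0: b"a", 1: b"b", 2: b"c", 3: b"d"}
received = {0: b"a", 3: b"d"}               # packets 1 and 2 were lost
print(recover(sent, received))              # sequence numbers that were requested
print(b"".join(received[i] for i in sorted(received)))
```

Only the lost packets are resent, so the extra bandwidth is proportional to the loss rate rather than to the whole stream.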

Low latency does not mean low-quality playback. SRT's transmission and error-correction mechanisms maximize the use of available bandwidth and eliminate network errors and interference, so a higher-bitrate video stream can be carried over the same network. Combined with codecs such as H.264 and HEVC, it can maintain high video quality under poor network conditions.

SRT mainly uses a more aggressive packet-loss retransmission algorithm, using redundant data to reduce the impact of network jitter on sending. At present it is mainly used in the first mile of video transmission, that is, to improve stream-push quality. SRT suits point-to-point streaming media transmission, quality assurance for major anchors, and mobile live streaming. Many large customers have already adopted SRT at various scales, and the upstream stall-rate data show a clear improvement. Tencent Cloud Audio and Video also uses SRT as the upstream stream in some major e-sports events. As the figure below shows, the stall rate improves noticeably after switching to SRT push. By contrast, traditional RTMP push cannot transmit stably at a 5% packet loss rate.

Compared with QUIC, SRT has a higher retransmission rate but better packet-loss resistance: with 50% packet loss at the network layer, SRT can still transmit stably and reliably. The figure below shows push quality for the same live file on the same link, with the packet loss rate raised by 5% every 5 minutes. The frame rate of RTMP over SRT is more stable.

Tencent Cloud currently supports RTMP over SRT. Tencent Cloud Audio and Video treats SRT as a protocol above the transport layer, so any application-layer protocol based on TCP can be adapted to run on SRT. Tencent Cloud also supports TS over SRT push: audio and video data are sent as a TS stream directly over SRT, and the downlink reuses the existing live broadcast system. TS over SRT is the standard push format for Haivision hardware and OBS.

WebRTC protocol

WebRTC, short for Web Real-Time Communication, lets browsers communicate directly without relaying through a server.

WebRTC advantages:

Low-latency, high-quality real-time audio and video communication;

A complete end-to-end streaming media framework with a full set of audio and video processing capabilities, and cross-platform support;