
Getting started with OpenMP

2022-06-25 07:39:00 【AI little white dragon】

OpenMP is short for Open Multi-Processing. It can be used with Visual Studio or gcc.

Hello World

Save the following code as omp.cc

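```cpp
#include <iostream>
#include <omp.h>

int main() {
    // Iterations run on several threads, so the letters may print in any
    // order and output from different threads may interleave.
#pragma omp parallel for
    for (char i = 'a'; i <= 'z'; i++)
        std::cout << i << std::endl;
    return 0;
}
```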

Then compile it with `g++ omp.cc -fopenmp` and run the resulting program.
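To see which threads actually run, here is a minimal sketch using the OpenMP runtime functions `omp_get_thread_num()` and `omp_get_num_threads()`. The function name `report_threads` is hypothetical, and the `#ifdef _OPENMP` guards are an assumption added so that the code still builds, as a single-threaded program, when `-fopenmp` is omitted.

```cpp
#include <cstdio>
#ifdef _OPENMP
#include <omp.h>
#endif

// Prints one line per thread and returns the size of the thread team.
// Without OpenMP the pragmas are ignored, the block runs once, and it returns 1.
int report_threads() {
    int team_size = 1;  // declared outside the region, so it is shared
#pragma omp parallel
    {
        int id = 0;     // declared inside the region, so each thread has its own
#ifdef _OPENMP
        id = omp_get_thread_num();
        // Only one thread records the team size; the others wait at the
        // implicit barrier at the end of the single construct.
#pragma omp single
        team_size = omp_get_num_threads();
#endif
        std::printf("hello from thread %d\n", id);
    }
    return team_size;
}
```

Called from `main` and compiled with `g++ -fopenmp`, it should print one line per thread in no particular order; the `OMP_NUM_THREADS` environment variable (for example `OMP_NUM_THREADS=4 ./a.out`) changes how many threads are used.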

Parallelization of loops

The designers of OpenMP wanted to give programmers a simple way to write multithreaded programs without having to know how to create and destroy threads. So they designed a set of pragmas, directives and functions that let the compiler insert threads in the right places. Most loops can be parallelized just by inserting a single pragma before the for statement. And by leaving these tedious details to the compiler, you can spend more time deciding where to use multithreading and how to optimize your data structures.

The following example converts 32-bit RGB color data to 8-bit grayscale data. Adding a single pragma before the for loop is enough to parallelize it:

```cpp
#pragma omp parallel for
for (int i = 0; i < pixelCount; i++) {
    grayBitmap[i] = (uint8_t)(rgbBitmap[i].r * 0.299 +
                              rgbBitmap[i].g * 0.587 +
                              rgbBitmap[i].b * 0.114);
}
```

That is all it takes. This example uses "work sharing": when work sharing is applied to a for loop, the iterations are distributed among different threads, and each iteration is guaranteed to run exactly once. OpenMP decides how many threads to start and takes care of creating and destroying them; all you have to do is tell OpenMP what to parallelize.
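The grayscale snippet above depends on definitions the article does not show. Here is a self-contained sketch of the same loop; the `RGBPixel` struct (8 bits per channel plus a padding/alpha byte, 32 bits in total) and the function name `to_gray` are assumptions for illustration. The weights 0.299, 0.587 and 0.114 are the ITU-R BT.601 luma coefficients.

```cpp
#include <cstdint>
#include <vector>

// Assumed 32-bit pixel layout; the article does not give its own definition.
struct RGBPixel {
    std::uint8_t r, g, b, a;
};

// Each iteration reads one input pixel and writes only its own output
// element, so there are no cross-iteration dependencies and the loop can be
// work-shared safely. The conversion truncates, like the original snippet.
std::vector<std::uint8_t> to_gray(const std::vector<RGBPixel>& rgbBitmap) {
    const int pixelCount = static_cast<int>(rgbBitmap.size());
    std::vector<std::uint8_t> grayBitmap(rgbBitmap.size());
#pragma omp parallel for
    for (int i = 0; i < pixelCount; i++) {
        grayBitmap[i] = static_cast<std::uint8_t>(rgbBitmap[i].r * 0.299 +
                                                  rgbBitmap[i].g * 0.587 +
                                                  rgbBitmap[i].b * 0.114);
    }
    return grayBitmap;
}
```

The output vector is allocated before the parallel loop, so threads only write into existing elements and never resize the shared container.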

OpenMP places five requirements on a loop before it can be parallelized:

1. The loop variable (`i` here) must be a signed integer. This is the OpenMP 2.0 rule, which Visual Studio implements; OpenMP 3.0 and later also accept unsigned integers, pointers and C++ random-access iterators.
2. The loop condition must compare the loop variable using one of `<`, `<=`, `>` or `>=`.
3. The increment expression must add or subtract a loop-invariant value, i.e. the step is the same on every iteration.
4. If the comparison is `<` or `<=`, the loop variable must increase on each iteration; if it is `>` or `>=`, it must decrease.
5. The loop must have a single entry and a single exit: you cannot jump out of the loop, `goto` and `break` may only transfer control within the loop body (for example, breaking out of an inner nested loop), and any exception thrown inside the loop must be caught inside the loop.

If a loop does not meet these conditions, you have to rewrite it.
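As one illustration of such a rewrite, consider a loop like `for (int i = 1; i < n; i *= 2)`. It breaks the constant-step rule, because `i *= 2` changes the step on every iteration. A minimal sketch of one possible fix (the function `mark_powers_of_two` is hypothetical) iterates over the exponent `k`, whose step is the constant 1, and derives `i` from `k` inside the body:

```cpp
#include <vector>

// Marks flags[i] = 1 for every power of two i below n.
// The original stride (i *= 2) is not canonical, so the loop runs over the
// exponent k instead and computes i = 2^k from it.
std::vector<int> mark_powers_of_two(int n) {
    std::vector<int> flags(n > 0 ? n : 0, 0);
    int count = 0;                       // number of powers of two below n
    while ((1LL << count) < n) count++;  // cheap serial pre-pass
#pragma omp parallel for
    for (int k = 0; k < count; k++) {
        int i = 1 << k;                  // i is derived from the loop variable
        flags[i] = 1;
    }
    return flags;
}
```

The same idea applies generally: find a loop variable that does advance by a constant step, and compute the "real" index from it.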

Checking whether OpenMP is supported

```cpp
// _OPENMP is only defined when OpenMP is enabled (e.g. g++ -fopenmp)
#ifndef _OPENMP
fprintf(stderr, "OpenMP not supported\n");
#endif
```
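The macro itself also carries information: when defined, `_OPENMP` expands to the release date (in `yyyymm` form) of the OpenMP specification the compiler implements, for example `201511` for OpenMP 4.5. A minimal sketch that exposes it at run time (the helper name `openmp_version` is hypothetical):

```cpp
// Returns the value of _OPENMP (yyyymm of the supported specification),
// or 0 when the program was compiled without OpenMP support.
long openmp_version() {
#ifdef _OPENMP
    return _OPENMP;
#else
    return 0;  // e.g. g++ without -fopenmp
#endif
}
```

Checking the value lets a program warn, for instance, when a feature newer than the compiler's OpenMP version is needed.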

Avoiding data dependencies and race conditions

Even when a loop satisfies the five conditions above, it may still be impossible to parallelize it correctly because of data dependencies. This happens when one iteration depends on the result of another iteration.

```cpp
// Assume that the array has been initialized to 1
#pragma omp parallel for
for (int i = 2; i < 10; i++) {
    factorial[i] = i * factorial[i-1];
}
```

The compiler will parallelize this loop, but it will not give the speedup we want, and the resulting array will contain wrong values. Because each iteration depends on the result of another iteration, the threads race to read and write the same elements; this is called a race condition. The only way to solve it is to rewrite the loop or choose a different algorithm.

Race conditions are hard to detect, because the program may still happen to execute in the order you intended.
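One way to "choose a different algorithm" for the factorial example is to compute each entry from scratch, so that no iteration reads a value written by another. A minimal sketch (the function `factorials` is hypothetical): it does O(n^2) multiplications instead of O(n), so for a table this small the plain serial loop is the better choice; the point is only to show what an independent formulation looks like.

```cpp
#include <vector>

// Returns factorial[i] = i! for 0 <= i < n.
// Each iteration computes 1 * 2 * ... * i on its own, so iterations are
// independent and the loop can be parallelized without a race.
std::vector<long long> factorials(int n) {
    std::vector<long long> factorial(n > 0 ? n : 0, 1);
#pragma omp parallel for
    for (int i = 2; i < n; i++) {
        long long f = 1;                     // private: declared inside the loop
        for (int k = 2; k <= i; k++) f *= k;
        factorial[i] = f;                    // each thread writes its own element
    }
    return factorial;
}
```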

Managing shared and private data

Almost every loop reads and writes data, and it is the programmer's responsibility to decide which data is shared among threads and which is private to each thread. When data is shared (public), all threads access the same memory location; when data is private, each thread has its own copy. By default, every variable declared outside the loop is shared, except the loop variable. There are two ways to make a variable private:

1. Declare the variable inside the loop, and make sure it is not static.

2. Declare it private with an OpenMP clause.

```cpp
// Wrong: temp is declared outside the loop, so it is shared by all threads,
// and one thread can overwrite temp before another thread has used it
int temp;
#pragma omp parallel for
for (int i = 0; i < 100; i++) {
    temp = array[i];
    array[i] = doSomething(temp);
}
```

It can be fixed in either of two ways:

```cpp
// 1. Declare the variable inside the loop
#pragma omp parallel for
for (int i = 0; i < 100; i++) {
    int temp = array[i];
    array[i] = doSomething(temp);
}
```

```cpp
// 2. Declare the variable private with an OpenMP clause
int temp;
#pragma omp parallel for private(temp)
for (int i = 0; i < 100; i++) {
    temp = array[i];
    array[i] = doSomething(temp);
}
```
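A related safeguard is the `default(none)` clause: it makes the compiler reject any variable used in the parallel region whose sharing attribute is not listed explicitly, so a forgotten `private(temp)` becomes a compile error instead of a silent race. A minimal sketch, assuming a made-up `doSomething` (the article never defines it) and a hypothetical wrapper `transform_all`:

```cpp
#include <vector>

// Hypothetical per-element work standing in for the article's doSomething().
static int doSomething(int x) { return x * x + 1; }

void transform_all(std::vector<int>& array) {
    int n = static_cast<int>(array.size());  // non-const, so it can be listed as shared
    int* data = array.data();
    int temp;
    // default(none): every variable used inside must appear in a clause.
    // The loop variable i is private automatically.
#pragma omp parallel for default(none) shared(data, n) private(temp)
    for (int i = 0; i < n; i++) {
        temp = data[i];
        data[i] = doSomething(temp);
    }
}
```

Removing `private(temp)` from the pragma should make an OpenMP-enabled compiler report that `temp` is not specified in the enclosing parallel region, which is exactly the mistake in the wrong example above.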

Reductions

Accumulating into a variable is a very common loop pattern, and OpenMP has a dedicated clause for it.

For example, consider the following program:

```cpp
int sum = 0;
for (int i = 0; i < 100; i++) {
    sum += array[i];  // sum must be private to parallelize, but shared to produce the correct result
}
```

In this program, sum can be neither shared nor private. To solve this problem, OpenMP provides the reduction clause:

```cpp
int sum = 0;
#pragma omp parallel for reduction(+:sum)
for (int i = 0; i < 100; i++) {
    sum += array[i];
}
```

Internally, OpenMP gives each thread its own private copy of sum, initialized to 0. When the threads finish, OpenMP adds the per-thread partial sums together (along with the original value of sum) to produce the final result.
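What the reduction clause does can be approximated by hand. A minimal sketch (the function name `sum_manual` is hypothetical, and a real runtime may combine partial results differently, for example in a tree): each thread accumulates into its own local variable and then adds it to the shared total exactly once, inside a critical section.

```cpp
#include <vector>

long long sum_manual(const std::vector<int>& array) {
    long long sum = 0;                  // shared result
    int n = static_cast<int>(array.size());
#pragma omp parallel
    {
        long long partial = 0;          // private: declared inside the region
#pragma omp for
        for (int i = 0; i < n; i++) {
            partial += array[i];        // no contention while accumulating
        }
#pragma omp critical
        sum += partial;                 // one synchronized update per thread
    }
    return sum;
}
```

The `reduction(+:sum)` version is shorter and lets the runtime choose how to combine results, so the manual form is mainly useful for understanding, or for combining operations the reduction clause does not support.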

Of course, OpenMP can do more than addition. In C and C++ the reduction operators are `+`, `*`, `-`, `&`, `|`, `^`, `&&` and `||`; OpenMP 3.1 added `min` and `max`.
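A minimal sketch of two other operators (the functions `product` and `all_positive` are hypothetical). Each thread's private copy starts at the operator's identity value, 1 for `*` and true (1) for `&&`, and the partial results are combined with the same operator:

```cpp
#include <vector>

// reduction(*:prod): private copies start at 1 and are multiplied together.
long long product(const std::vector<int>& v) {
    long long prod = 1;
    int n = static_cast<int>(v.size());
#pragma omp parallel for reduction(*:prod)
    for (int i = 0; i < n; i++) prod *= v[i];
    return prod;
}

// reduction(&&:ok): private copies start at 1 (true) and are combined with
// logical AND, so the result is true only if every element is positive.
bool all_positive(const std::vector<int>& v) {
    int ok = 1;
    int n = static_cast<int>(v.size());
#pragma omp parallel for reduction(&&:ok)
    for (int i = 0; i < n; i++) ok = ok && (v[i] > 0);
    return ok != 0;
}
```

Both operators are available in OpenMP 2.0, so this should also compile with Visual Studio's `/openmp`; `min` and `max` would need a compiler that supports OpenMP 3.1 or later.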

Loop scheduling

Load balancing is one of the most important factors affecting the performance of multithreaded programs: only with good load balancing are all cores kept busy without idle time. Without it, some threads finish much earlier than others, leaving processors idle and wasting the benefit of parallelization.

In loops, load imbalance usually comes from iterations whose running times differ widely. You can often tell from the source code whether the amount of work varies between iterations. In most cases every iteration takes roughly the same time; when that is not true, you may still be able to find subsets of iterations that take about the same time. For example, sometimes all even-numbered iterations take as long as all odd-numbered ones, or the first half of the loop takes about as long as the second half. On the other hand, sometimes no such grouping exists. Either way, you should give this information to OpenMP, so that it has a better chance of scheduling the loop well.

By default, OpenMP assumes that all loop iterations take the same amount of time (most implementations use a static schedule unless told otherwise). It therefore divides the iterations evenly among the cores, in contiguous blocks, to minimize memory access conflicts: loops typically access memory linearly, so giving each thread a contiguous range (with two threads, the first half and the second half) keeps their accesses apart. This may be the best layout for memory access, but not necessarily for load balancing; conversely, the best load balancing may hurt memory access. So a compromise has to be made.

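OpenMP controls load balancing with the schedule clause:

```cpp
#pragma omp parallel for schedule(kind[, chunk_size])
```

where chunk_size is optional and must be a loop-invariant positive integer, and kind is one of:

- static: the iterations are divided into chunks of chunk_size (without chunk_size, roughly equal contiguous blocks, one per thread) and assigned to threads in round-robin order before the loop starts.
- dynamic: each thread takes the next chunk (default size 1) as soon as it finishes its previous one.
- guided: like dynamic, but chunks start large and shrink as the loop proceeds, never below chunk_size (except possibly the last one).
- runtime: the schedule is read from the OMP_SCHEDULE environment variable when the program runs.
- auto (since OpenMP 3.0): the choice is left to the compiler and runtime.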
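A minimal sketch of a loop where the schedule matters (the function `triangular_work` is hypothetical): iteration `i` of the outer loop does `i + 1` units of work, so equal contiguous blocks would give the thread holding the last block far more work than the thread holding the first. `schedule(dynamic, 4)` hands out chunks of 4 iterations as threads become free; the chunk size 4 is an arbitrary choice, and the best value depends on the workload.

```cpp
// Iteration i costs i + 1 inner steps, so the work grows along the loop.
// Dynamic scheduling lets idle threads pick up the remaining chunks, and the
// reduction combines the per-thread totals.
long long triangular_work(int n) {
    long long total = 0;
#pragma omp parallel for schedule(dynamic, 4) reduction(+:total)
    for (int i = 0; i < n; i++) {
        long long row = 0;                        // private: declared inside the loop
        for (int j = 0; j <= i; j++) row += j;    // cost grows with i
        total += row;
    }
    return total;
}
```

The result is the same for any schedule (for `n = 10` it is 165); only the distribution of iterations among threads changes. A larger chunk size reduces scheduling overhead but gives the runtime less room to even out the load.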

Example

```cpp
#pragma omp parallel for
for (int i = 0; i < numElements; i++) {
    array[i] = initValue;
    initValue++;  // every iteration reads and modifies the shared initValue
}
```

This loop obviously contains a race condition: every iteration depends on the variable initValue, so that dependency has to be removed.

```cpp
#pragma omp parallel for
for (int i = 0; i < numElements; i++) {
    array[i] = initValue + i;
}
```

That works, because initValue is no longer modified inside the loop: each element now depends only on i.

So, as a rule, try to express every loop-variant variable in terms of the loop variable i.
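One detail the rewrite above drops: after the original serial loop, initValue has advanced by numElements, and code after the loop might rely on that. A minimal sketch that keeps this behavior (the function `fill_sequence` is hypothetical) computes the final value once, outside the parallel loop:

```cpp
#include <vector>

// Fills array[i] = initValue + i in parallel and returns the value initValue
// would have reached after the original serial loop.
int fill_sequence(std::vector<int>& array, int initValue) {
    int numElements = static_cast<int>(array.size());
#pragma omp parallel for
    for (int i = 0; i < numElements; i++) {
        array[i] = initValue + i;   // depends only on the loop variable
    }
    return initValue + numElements; // applied once, after the loop
}
```

The caller can then write `initValue = fill_sequence(array, initValue);` to keep the same state as the serial version.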

边栏推荐

- Weimeisi new energy rushes to the scientific innovation board: the annual revenue is 1.7 billion, and the book value of accounts receivable is nearly 400million

- 音频(五)音频特征提取

- LTpowerCAD II和LTpowerPlanner III

- Let's talk about MCU crash caused by hardware problems

- My debut is finished!

- Tupu software digital twin 3D wind farm, offshore wind power of smart wind power

- Zhugeliang vs pangtong, taking distributed Paxos

- Hanxin's trick: consistent hashing

- 13 `bs_ duixiang. Tag tag ` get a tag object

- 无“米”,也能煮“饭”利用“点云智绘”反演机载LiDAR林下缺失地面点攻略

猜你喜欢

What common APIs are involved in thread state changes

Sichuan Tuwei ca-is3105w fully integrated DC-DC converter

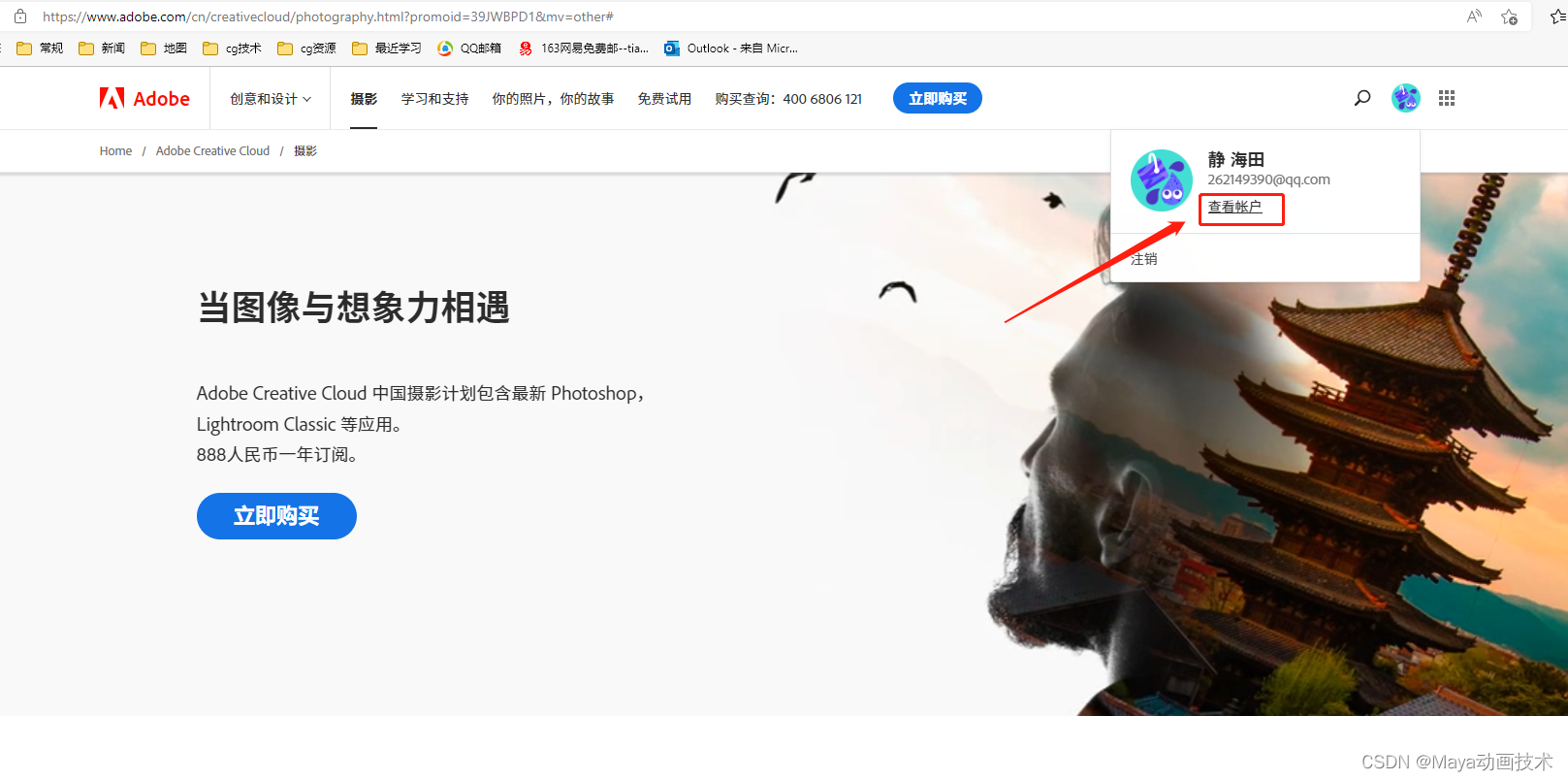

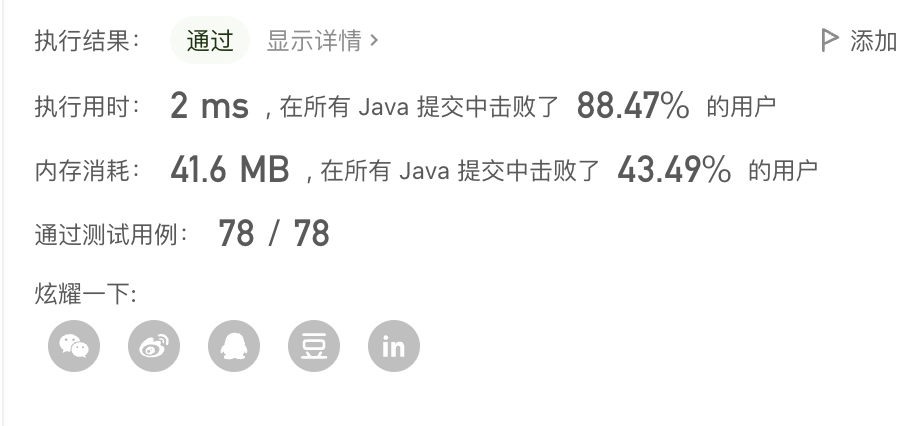

Genuine photoshop2022 purchase experience sharing

【批处理DOS-CMD命令-汇总和小结】-CMD窗口的设置与操作命令(cd、title、mode、color、pause、chcp、exit)

![[batch dos-cmd command - summary and summary] - application startup and call, service and process operation commands (start, call, and)](/img/19/b8c0fb72f1c851a6b97f2c17a18665.png)

[batch dos-cmd command - summary and summary] - application startup and call, service and process operation commands (start, call, and)

LeetCode 每日一题——515. 在每个树行中找最大值

China Mobile MCU product information

【批处理DOS-CMD命令-汇总和小结】-添加注释命令(rem或::)

【LeetCode】two num·两数之和

栅格地图(occupancy grid map)构建

随机推荐

Static bit rate (CBR) and dynamic bit rate (VBR)

Common functions of OrCAD schematic

Cocos learning diary 3 - API acquisition nodes and components

【批处理DOS-CMD命令-汇总和小结】-应用程序启动和调用、服务和进程操作命令(start、call、)

realsense d455 semantic_slam实现语义八叉树建图

My debut is finished!

Redis learning notes

Leetcode daily question - 515 Find the maximum value in each tree row

Debian introduction

我的处女作杀青啦!

[QT] shortcut key

Domestic MCU perfectly replaces STM chip model of Italy France

Chang Wei (variables and constants) is easy to understand

Unity3D邪门实现之GUI下拉菜单Dropdown设计无重复项

Authentique Photoshop 2022 expérience d'achat partage

一“石”二“鸟”,PCA有效改善机载LiDAR林下地面点部分缺失的困局

韩信大招:一致性哈希

lotus v1.16.0-rc3 calibnet

Ns32f103c8t6 can perfectly replace stm32f103c8t6

【批处理DOS-CMD命令-汇总和小结】-cmd扩展命令、扩展功能(cmd /e:on、cmd /e:off)