[Machine learning] Parameter learning and gradient descent

2022-06-25 12:57:00 【Coconut brine Engineer】

Gradient descent

We have a hypothesis function, and we have a way to measure how well it fits the data. Now we need to estimate the parameters of the hypothesis function. This is where gradient descent comes in.

Imagine that we plot the cost as a function of the parameters θ0 and θ1. We are not plotting x and y themselves; instead, we plot the range of parameter values of the hypothesis function against the cost that results from selecting a specific set of parameters.

We put θ0 on the x-axis and θ1 on the y-axis, with the cost function on the vertical z-axis. Each point on the graph is the value of the cost function for our hypothesis with those specific θ parameters. The following figure depicts such a setup:

We know we have succeeded when the cost function is at the bottom of a pit in the graph, that is, when its value is at a minimum. The red arrow in the figure points to the minimum.

We do this by taking the derivative of the cost function (the slope of the tangent line to the function). The slope of the tangent is the derivative at that point, and it tells us which direction to move in. We step down the cost function in the direction of steepest descent. The size of each step is determined by the parameter α, called the learning rate.

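The gradient descent algorithm is defined as follows. Repeat until convergence:

$$\theta_j := \theta_j - \alpha \frac{\partial}{\partial \theta_j} J(\theta_0, \theta_1) \qquad (\text{for } j = 0 \text{ and } j = 1)$$

At each iteration we update the parameters θj, where: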

- := denotes assignment; it is the assignment operator

- α is called the learning rate; it controls how large a step we take downhill

- ∂/∂θj J(θ0, θ1) is the derivative term
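As a minimal sketch of the loop described above, the Python below runs gradient descent on a hypothetical bowl-shaped cost J(θ0, θ1) = (θ0 − 1)² + (θ1 + 2)². The cost, its gradient, the starting point, the tolerance, and the function names are assumptions chosen for illustration; they do not come from the original figures.

```python
def grad_J(theta0, theta1):
    """Partial derivatives of the illustrative cost J = (theta0 - 1)^2 + (theta1 + 2)^2."""
    return 2.0 * (theta0 - 1.0), 2.0 * (theta1 + 2.0)


def gradient_descent(theta0, theta1, alpha=0.1, tol=1e-9, max_iters=10_000):
    """Repeat theta_j := theta_j - alpha * dJ/dtheta_j until the updates become tiny."""
    for _ in range(max_iters):
        d0, d1 = grad_J(theta0, theta1)
        # Both derivatives were computed from the old parameters.
        new0 = theta0 - alpha * d0
        new1 = theta1 - alpha * d1
        converged = abs(new0 - theta0) < tol and abs(new1 - theta1) < tol
        theta0, theta1 = new0, new1
        if converged:
            break
    return theta0, theta1


theta0, theta1 = gradient_descent(5.0, 5.0)
print(theta0, theta1)  # close to the minimizer (1, -2) of this illustrative cost
```

The stopping rule (update smaller than `tol`) is one common choice for "until convergence"; a fixed number of iterations would work as well.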

The subtlety in implementing gradient descent is that θ0 and θ1 must be updated simultaneously: compute the right-hand side for both parameters using the current values, and only then assign the new values. The following figure illustrates the process:

By contrast, the following is an incorrect implementation, because it does not update the parameters simultaneously:
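The difference between the two update orders can be sketched in Python. The cost J(θ0, θ1) = θ0² + θ0·θ1 + θ1² is a hypothetical example, picked because each partial derivative depends on both parameters; the function names are likewise illustrative.

```python
def dJ_dtheta0(theta0, theta1):
    # Partial derivative of J = theta0^2 + theta0*theta1 + theta1^2 w.r.t. theta0
    return 2.0 * theta0 + theta1


def dJ_dtheta1(theta0, theta1):
    # Partial derivative of the same J w.r.t. theta1
    return theta0 + 2.0 * theta1


def step_simultaneous(theta0, theta1, alpha):
    """Correct: both temporaries are computed from the old values, then assigned."""
    temp0 = theta0 - alpha * dJ_dtheta0(theta0, theta1)
    temp1 = theta1 - alpha * dJ_dtheta1(theta0, theta1)
    return temp0, temp1


def step_sequential(theta0, theta1, alpha):
    """Incorrect: theta1's update sees the already-updated theta0."""
    theta0 = theta0 - alpha * dJ_dtheta0(theta0, theta1)
    theta1 = theta1 - alpha * dJ_dtheta1(theta0, theta1)
    return theta0, theta1


correct = step_simultaneous(1.0, 1.0, 0.1)
incorrect = step_sequential(1.0, 1.0, 0.1)
print(correct)    # approximately (0.7, 0.7)
print(incorrect)  # approximately (0.7, 0.73)
```

After a single step the two versions already disagree on θ1, because the sequential version evaluates the second derivative at a mixed point (new θ0, old θ1).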

Gradient descent intuition

To build intuition, consider the case of a single parameter. Suppose we have a cost function J with only one parameter θ1. The update then uses an ordinary derivative:

$$\theta_1 := \theta_1 - \alpha \frac{d}{d\theta_1} J(\theta_1)$$

Suppose gradient descent is initialized at some value of θ1, as in the following figure:

When the slope is negative, the value of θ1 increases; when the slope is positive, the value of θ1 decreases, as the following figure shows:

On a side note, we should adjust the parameter α so that gradient descent converges in a reasonable amount of time. Failing to converge, or taking too long to reach the minimum, means that the step size is wrong, as the following figure shows:
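To make the effect of α concrete, here is a small sketch on a hypothetical one-parameter cost J(θ1) = θ1², whose derivative is 2θ1 and whose minimum is at θ1 = 0. The cost function, the three α values, and the helper name `run` are assumptions for illustration.

```python
def run(alpha, theta1=1.0, steps=50):
    """Apply theta1 := theta1 - alpha * dJ/dtheta1 for J = theta1^2, `steps` times."""
    for _ in range(steps):
        theta1 = theta1 - alpha * (2.0 * theta1)
    return theta1


too_small = run(alpha=0.001)   # barely moves away from the start in 50 steps
reasonable = run(alpha=0.1)    # ends very close to the minimum at 0
too_large = run(alpha=1.1)     # overshoots on every step and diverges

print(abs(too_small), abs(reasonable), abs(too_large))
```

With α too small the iterate is still near its starting value after 50 steps; with α too large each step overshoots the minimum by more than the previous one, so the distance from 0 grows instead of shrinking.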

As we approach a local minimum, gradient descent automatically takes smaller steps. This is because the derivative is 0 at a local minimum, so the closer we get, the smaller the derivative becomes, and the update α · dJ/dθ1 shrinks with it. There is therefore no need to decrease α over time.

This also explains why gradient descent can converge to a local optimum even when the learning rate α is fixed.

The following figure illustrates this. Suppose we have a cost function J of θ1 that we want to minimize, and we initialize the algorithm at some point.

With each step of gradient descent we move to a new point, and the slope there is less steep, because the closer we get to the minimum, the closer the derivative gets to 0. As we approach the optimum, each new derivative is smaller, so each step is naturally a little smaller than the last, until we converge to the minimum.
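The shrinking steps can be checked numerically. The sketch below keeps α fixed and records the size of each update on a hypothetical cost J(θ1) = (θ1 − 3)²; the cost, the starting point, and the variable names are illustrative assumptions.

```python
alpha = 0.1          # fixed learning rate, never decreased
theta1 = 0.0         # illustrative starting point
step_sizes = []

for _ in range(10):
    derivative = 2.0 * (theta1 - 3.0)   # dJ/dtheta1 for J = (theta1 - 3)^2
    update = alpha * derivative
    step_sizes.append(abs(update))
    theta1 = theta1 - update

# Each step is smaller than the one before, although alpha never changed.
print(step_sizes)
print(theta1)  # moving toward the minimizer at 3
```

On this particular cost every step is 0.8 times the previous one, since the derivative shrinks in proportion to the remaining distance to the minimum.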

This is the gradient descent algorithm. You can use it to try to minimize any differentiable cost function J.

Gradient descent for linear regression

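Recall the three formulas covered earlier: the gradient descent algorithm, the linear regression model with its linear hypothesis, and the squared error cost function:

$$\theta_j := \theta_j - \alpha \frac{\partial}{\partial \theta_j} J(\theta_0, \theta_1), \qquad h_\theta(x) = \theta_0 + \theta_1 x, \qquad J(\theta_0, \theta_1) = \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2$$

Next, we use gradient descent to minimize the squared error cost function. To do so, we need to work out the partial derivative term in the gradient descent update. The following figure shows the calculation: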
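The calculation can be sketched as follows, assuming the linear hypothesis h_θ(x) = θ0 + θ1·x and the squared error cost with the conventional 1/(2m) factor over m training examples (x^(i), y^(i)). The chain rule brings down a factor of 2 that cancels the 1/2:

$$
\begin{aligned}
\frac{\partial}{\partial \theta_j} J(\theta_0, \theta_1)
&= \frac{\partial}{\partial \theta_j} \frac{1}{2m} \sum_{i=1}^{m} \left( \theta_0 + \theta_1 x^{(i)} - y^{(i)} \right)^2 \\
j = 0: \quad \frac{\partial}{\partial \theta_0} J(\theta_0, \theta_1)
&= \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) \\
j = 1: \quad \frac{\partial}{\partial \theta_1} J(\theta_0, \theta_1)
&= \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x^{(i)}
\end{aligned}
$$

The only difference between the two cases is the inner derivative: the error term differentiates to 1 with respect to θ0 and to x^(i) with respect to θ1.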

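These derivative terms are the slopes of the cost function J. Substituting them back into gradient descent gives the version of the algorithm specific to linear regression. Repeat the following updates until convergence:

$$
\begin{aligned}
\theta_0 &:= \theta_0 - \alpha \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) \\
\theta_1 &:= \theta_1 - \alpha \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x^{(i)}
\end{aligned}
$$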

In fact, it can also be simplified into the following formula:

So this is simply gradient descent on the original cost function J. Because this method looks at every example in the entire training set at each step, it is called batch gradient descent. When we compute the derivative, we evaluate a sum over all m training examples, that is, over the whole batch.
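A minimal sketch of batch gradient descent for one-variable linear regression follows. It assumes the hypothesis h(x) = θ0 + θ1·x and the squared error cost with a 1/(2m) factor; the tiny data set (generated from y = 1 + 2x), the learning rate, the iteration count, and the function name are made up for illustration.

```python
def batch_gradient_descent(xs, ys, alpha=0.05, iterations=5000):
    """Fit h(x) = theta0 + theta1 * x by batch gradient descent on squared error."""
    m = len(xs)
    theta0, theta1 = 0.0, 0.0
    for _ in range(iterations):
        # Every step uses the whole training set (the "batch").
        errors = [theta0 + theta1 * x - y for x, y in zip(xs, ys)]
        grad0 = sum(errors) / m
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m
        # Simultaneous update: both gradients came from the old parameters.
        theta0, theta1 = theta0 - alpha * grad0, theta1 - alpha * grad1
    return theta0, theta1


# Illustrative data lying exactly on the line y = 1 + 2x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
theta0, theta1 = batch_gradient_descent(xs, ys)
print(theta0, theta1)  # close to 1 and 2 for this data
```

Each iteration costs one pass over all m examples, which is what distinguishes the batch variant from methods that update after looking at only part of the data.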

Note that although gradient descent can be susceptible to local minima in general, the optimization problem posed here for linear regression has only one global optimum and no other local optima. Gradient descent therefore always converges to the global minimum (assuming the learning rate α is not too large). In fact, J is a convex quadratic function. Here is an example of gradient descent as it runs to minimize a quadratic function.

The ellipses shown above are the contours of a quadratic function. Also shown is the trajectory taken by gradient descent, which was initialized at (48, 30). The x's in the figure (joined by straight lines) mark the successive values of θ that gradient descent passed through as it converged to the minimum.

边栏推荐

- 康威定律,作为架构师还不会灵活运用?

- PPT绘论文图之导出分辨率

- 原生js---无限滚动

- leetcode - 384. 打乱数组

- Slice() and slice() methods of arrays in JS

- Why are databases cloud native?

- Methods of strings in JS charat(), charcodeat(), fromcharcode(), concat(), indexof(), split(), slice(), substring()

- @Scheduled implementation of scheduled tasks (concurrent execution of multiple scheduled tasks)

- Slice and slice methods of arrays in JS

- nacos无法修改配置文件Mysql8.0的解决方法

猜你喜欢

![Select randomly by weight [prefix and + dichotomy + random target]](/img/84/7f930f55f8006a4bf6e23ef05676ac.png)

Select randomly by weight [prefix and + dichotomy + random target]

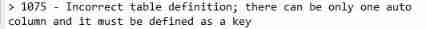

MySQL writes user-defined functions and stored procedure syntax (a detailed case is attached, and the problem has been solved: errors are reported when running user-defined functions, and errors are r

线上服务应急攻关方法论

出手即不凡,这很 Oracle!

2021-09-02

![[Visio]平行四边形在Word中模糊问题解决](/img/04/8a1de2983d648e67f823b5d973c003.png)

[Visio]平行四边形在Word中模糊问题解决

My first experience of go+ language -- a collection of notes on learning go+ design architecture

MySQL adds, modifies, and deletes table fields, field data types, and lengths (with various actual case statements)

使用Visio画立方体

剑指 Offer II 025. 链表中的两数相加

随机推荐

(6) Pyqt5--- > window jump (registration login function)

20220620 interview reply

剑指 Offer II 029. 排序的循环链表

Flutter automatically disappears after receiving push

visual studio2019链接opencv

Write regular isosceles triangle and inverse isosceles triangle with for loop in JS

MySQL 学习笔记

Resolved: could not find artifact XXX

Summer Ending

Event triggered when El select Clear clears content

J2EE从入门到入土01.MySQL安装

5 kinds of viewer for browser

更新pip&下载jupyter lab

2021-09-22

Match regular with fixed format beginning and fixed end

The amount is verified, and two zeros are spliced by integers during echo

重装cuda/cudnn/pytorch

Capabilities required by architects

剑指offer 第 3 天字符串(简单)

20220620 面试复盘