当前位置:网站首页>Visual slam lecture notes-10-1

Visual slam lecture notes-10-1

2022-06-11 18:43:00 【Sixi xiaoyibing】

Vision SLAM Lecture notes -10-1

List of articles

10 Back end

10.1 Sliding window filtering and optimization

10.1.1 In the actual environment BA structure

Graph optimization with camera pose and space points is called BA, It can effectively solve large-scale positioning and mapping problems . This is in SfM Very useful in questions . But in SLAM In the process , It is often necessary to control BA The scale of , Keep computing real-time . In reality , The back-end computing time must be limited . such as BA The scale cannot exceed 1 10000 road markings , Iterations do not exceed 20 Time , No more than 0.5 Seconds, etc . image SfM The algorithm of reconstructing a city map in a week , stay SLAM It doesn't always work .

There are many ways to control the scale of computing , For example, extract a part from a continuous video as Keyframes , Only the between key frame and road marking is constructed BA, therefore Non key frames are only used for positioning , No contribution to the construction of drawings . But even so , as time goes on , The number of keyframes will increase , The scale of the map will continue to grow . image BA Such a batch optimization method , Computing efficiency will continue to decline . To avoid that , Some means are needed to control the backend BA The scale of . These methods can be theoretical , It can also be engineering .

for example , The simplest control BA The idea of scale , Is to keep only the most recent... At the current time N Key frames , Remove keys that are earlier in time . therefore , BA Will be fixed in a time window , Those who leave this window are thrown away . This method is called Sliding window method . This kind of take N There may be some changes to the specific method of making key frames , For example, it is not necessary to take the most recent , And according to certain principles , Take time to approach , Spatially expandable keyframes , Avoid stopping the camera ,BA The structure of will shrink into a ball . If you think more deeply , image ORB-SLAM2 in , Define a type called ==“ Common view ” (Covisibility graph)==

Structure . The so-called common view , It means that “ There is a picture composed of key frames observed together with the current camera ”.

So in BA To optimize the , According to some principles, take some key frames and road signs in the common view for optimization , Only the optimization is related to the current frame 20 Key frames of more than common view landmarks , The rest is fixed . When the common view relationship can be correctly constructed , The optimization based on common view will also remain optimal for a longer time .

Sliding windows are also good , Common view is good , In fact, they are all some kind of engineering for real-time computing . But it also introduces a new problem in theory : Just mentioned that only keyframe information , So for non key frame information , How to deal with non key frame data ? We will discuss it carefully next .

10.1.2 Sliding window method

Consider a sliding window . Suppose this window has N N N Key frames , Their pose is expressed as :

x 1 , x 2 , . . . , x N x_1,x_2,...,x_N x1,x2,...,xN

Suppose they can be expressed by Lie algebra in vector space , So about these key frames , What can we talk about ?

obviously , Care about these key frames Where is the location , And their What is the uncertainty , this Corresponding to their mean covariance under the assumption of Gaussian distribution .

If these key frames also correspond to a local map , Then we can ask what the mean and variance of the whole local system should be . Set this sliding window to have M A road mark : y 1 , y 2 , . . . , y M y_1,y_2,...,y_M y1,y2,...,yM, They are associated with N N N Key frames make up the local map . Obviously, it can be used in the previous chapter BA Method to handle this sliding window , Including the establishment of graph optimization model , Build the whole Hessian matrix , Then edge all the road markings to speed up the solution .

At the time of marginalization , Consider the pose of keyframes , namely :

[ x 1 , x 2 , . . . , x N ] T ∼ N ( [ μ 1 , μ 2 , . . . , μ N ] , Σ ) [x_1,x_2,...,x_N]^T\sim N([\mu_1, \mu_2,...,\mu_N],\Sigma) [x1,x2,...,xN]T∼N([μ1,μ2,...,μN],Σ)

among μ k \mu_k μk For the first time k k k Position and pose mean value of key frames , Σ \Sigma Σ Covariance matrix for all keyframes . So clearly , The mean part means BA The result of the iteration , and Σ \Sigma Σ That's the whole thing BA Of H H H The result of the marginalization of the matrix , to H H H Matrix after Shure elimination S S S.

In the sliding window , When the window structure changes , How these state variables should change ? The matter can be discussed in two parts :

1. You need to add a new key frame in the window , And the road markings it observed .

2. You need to delete an old key frame in the window , It may also delete the road markings it observes .

At this time , Sliding window method and traditional BA The difference between .

In traditional BA Solving , This only corresponds to two different structures BA, There is no difference in the solution . But in the case of sliding windows , We need to discuss some specific details .

Add a new key frame and road marking

Consider at the last moment , The sliding window has been created N N N Key frames , It is also known that they obey a Gaussian distribution , The mean and variance are as described above . here , There is a new key frame x N + 1 x_{N+1} xN+1, Then the variable in the whole problem becomes N + 1 N+1 N+1 A collection of keys and more path punctuation points .

Delete an old keyframe

When considering deleting old keys , A theoretical problem will emerge . for example , To delete old keys x 1 x_1 x1, however x 1 x_1 x1 Not isolated , He will observe the same road signs as other frames . take x 1 x_1 x1 After marginalization, the whole problem will no longer be sparse .

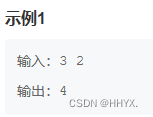

In the example above , hypothesis x 1 x_1 x1 See the road markings y 1 y_1 y1 To y 4 y_4 y4, So before processing , BA The problem of Hesian The matrix should look like the left side of the figure above , stay x 1 x_1 x1 Yes y 1 y_1 y1 To y 4 y_4 y4 Column has non-zero block , Express x 1 x_1 x1 See them . Consider marginalization at this time x 1 x_1 x1 , So Shure Xiaoyuan (Schur) The process is equivalent to eliminating several non-zero matrix blocks at the non diagonal line through matrix row and column operations , Obviously, this will result in the road punctuation matrix block in the lower right corner no longer being an off diagonal matrix . This process is called marginalization fill (Fill in).

In Chapter 9, marginalization is introduced , When marginalizing road markings , Fill in It will appear in the pose block in the upper left corner . however , because BA Don't ask the pose block to be a diagonal block , So sparse BA The solution is still feasible . however , When you edge keys , The diagonal block structure between the road markings at the lower right corner will be destroyed , At this time BA It cannot be solved iteratively in the previous sparse way . This is obviously a very bad problem . actually , Early EKF In the back end of the filter , People do maintain a dense Hessian matrix , It also makes EKF The back end cannot handle large sliding windows .

however , If the process of marginalization is transformed , You can also keep sliding windows BA The sparsity of . for example , When you edge an old keyframe , At the same time, it marginalizes the road markings it observes . such , The information of the landmark points will be converted into the common view information between the remaining key frames , So as to maintain the diagonal block structure of the lower right corner . In some SLAM In the frame , Marginalization strategies will be more complex . For example, in OKVIS in , Will determine which key frame to edge , Whether the road markings it sees are still visible in the latest key frame . If not, we will directly marginalize this road marking ; If you can , The observation of the road punctuation by the marginal key frame is discarded , So as to maintain BA The sparsity of .

SWF A direct explanation of marginalization in

The meaning of marginalization in probability is conditional probability . therefore , When you edge a key frame , namely " Keep the current estimate of this key frame , Find the conditional probability of other state variables under the condition of this key frame ". therefore , When a key frame is edged , The road markings it observes will produce a “ Where should these road signs be “ The prior information of , Thus affecting the estimated value of the rest . If you re edge these waypoint values , Then their observer will get a ” Observe where their keyframes should be “ The prior information of .

Mathematically , When you edge a key frame , The description of state variables in the whole window will change from joint distribution to a conditional probability distribution . Take the example above , That is to say :

p ( x 1 , … x 4 , y 1 , … y 6 ) = p ( x 2 , … , x 4 , y 1 , … y 6 ∣ x 1 ) p ( x 1 ) ⏟ House Go to p\left(\boldsymbol{x}_{1}, \ldots \boldsymbol{x}_{4}, \boldsymbol{y}_{1}, \ldots \boldsymbol{y}_{6}\right)=p\left(\boldsymbol{x}_{2}, \ldots, \boldsymbol{x}_{4}, \boldsymbol{y}_{1}, \ldots \boldsymbol{y}_{6} \mid \boldsymbol{x}_{1}\right) \underbrace{p\left(\boldsymbol{x}_{1}\right)}_{\text { House Go to }} p(x1,…x4,y1,…y6)=p(x2,…,x4,y1,…y6∣x1) House Go to p(x1)

Then the information of the marginalized part is discarded . After variables are marginalized , It should not be used in engineering . therefore Sliding window method is more suitable for VO System , But not suitable for large-scale mapping system .

Because now g2o and Ceres The edge operation in sliding window method is not directly supported , Omit the corresponding experiment section in this section .

10.2 Pose diagram

10.2.1 The meaning of the pose diagram

Feature points occupy most of the optimization problems . actually , After several observations , The position of the convergent characteristic point changes very little , The outer points of divergence have been eliminated . Then optimize the convergence point , It seems to be a little thankless . therefore , They prefer to fix the feature points after several optimizations , Consider them only as constraints for pose estimation , Instead of actually optimizing their position estimation .

Continue to think along this line , Think of : Whether we can completely ignore the road signs and just focus on the tracks ? It is completely possible to build a graph optimization with only trajectories , And the edges between pose nodes , The initial value can be given by the motion estimation obtained after feature matching between two key frames . The difference is , Once the initial estimate is completed , No longer optimize the location of those road markings , And only care about the relationship between all camera pose . In this way , It saves a lot of calculation of feature point optimization , Only keyframe tracks are retained , Thus the so-called pose graph is constructed (Pose Graph). The schematic diagram of posture is shown in the following figure , When no longer optimized BA Road markings in , When only regarding them as constraints on attitude nodes , We get a much smaller calculation scale of the pose diagram .

stay BA The number of features in is much larger than that of pose nodes . A key frame is often associated with hundreds of keys , And real time BA Maximum computational scale of , That is, using sparsity , In the current mainstream CPU In general, it is about tens of thousands of points . That's the limit SLAM Application scenarios . therefore , When the robot moves in a larger range of time and space , Some solutions must be considered : Or like the sliding window method , Discard some historical data ; Or do it the same way as the seat graph , Abandon the optimization of road markings , Only keep Pose The edge between . Besides , If there are additional measurements Pose Sensor , So pose graph is also a common fusion Pose Method of measurement .

10.2.2 Optimization of position and posture diagram

What do nodes and edges mean in pose graph optimization ? The nodes here are camera pose , With T 1 T_1 T1, T 2 T_2 T2,…, T n T_n Tn To express . And the edge , Is the estimation of relative motion between two pose nodes , The motion estimation can come from the feature point method or the direct method , It can also come from GPS perhaps IMU integral . Either way , Suppose that T i T_i Ti and T j T_j Tj A movement between Δ T i j \Delta T_{ij} ΔTij. The motion can be expressed in several ways , Take the more natural one :

Δ ξ i j = ξ i − 1 ∘ ξ j = l n ( T i − 1 T j ) ∨ \Delta \xi_{ij} = \xi_i^{-1} \circ \xi_j = ln(T_i^{-1}T_j)^{\vee} Δξij=ξi−1∘ξj=ln(Ti−1Tj)∨

Or according to the way of Li Qun :

T i j = T i − 1 T j T_{ij} = T_{i}^{-1}T_{j} Tij=Ti−1Tj

According to the idea of graph optimization , In practice, this equation will not be exact , Therefore, the least square error is established , And then as usual , Discuss the derivative of the error with respect to the optimized variable . here , Put the above type T i j T_{ij} Tij Move to the right of the equation , Construction error e i j e_{ij} eij:

e i j = Δ ξ i j l n ( T i j − 1 T i − 1 T j ) ∨ e_{ij} = \Delta \xi_{ij} ln(T_{ij}^{-1}T_i^{-1}T_j)^{\vee} eij=Δξijln(Tij−1Ti−1Tj)∨

Note that there are two optimization variables : ξ i \xi_i ξi and ξ j \xi_j ξj. therefore , seek e i j e_{ij} eij About the derivative of these two variables . According to the derivation of Lie algebra , to ξ i \xi_i ξi and ξ j \xi_j ξj One disturbance each : δ ξ i \delta \xi_i δξi and δ ξ j \delta \xi_j δξj. therefore , The error becomes :

e ^ i j = ln ( T i j − 1 T i − 1 exp ( ( − δ ξ i ) ∧ ) exp ( δ ξ j ∧ ) T j ) ∨ \hat{\boldsymbol{e}}_{i j}=\ln \left(\boldsymbol{T}_{i j}^{-1} \boldsymbol{T}_{i}^{-1} \exp \left(\left(-\boldsymbol{\delta} \boldsymbol{\xi}_{i}\right)^{\wedge}\right) \exp \left(\delta \boldsymbol{\xi}_{j}^{\wedge}\right) \boldsymbol{T}_{j}\right)^{\vee} e^ij=ln(Tij−1Ti−1exp((−δξi)∧)exp(δξj∧)Tj)∨

In this formula, two disturbances are sandwiched in the middle . In order to take advantage of BCH The approximate , You want to move the perturbation term to the left or right of the equation .

According to the exercises after class in Chapter 4 Formula 4.55( The law of exchange ):

exp ( ξ ∧ ) T = T exp ( ( Ad ( T − 1 ) ξ ) ∧ ) \exp \left(\boldsymbol{\xi}^{\wedge}\right) \boldsymbol{T}=\boldsymbol{T} \exp \left(\left(\operatorname{Ad}\left(\boldsymbol{T}^{-1}\right) \boldsymbol{\xi}\right)^{\wedge}\right) exp(ξ∧)T=Texp((Ad(T−1)ξ)∧)

The formula shows that , By introducing an adjoint term , can ” In exchange for “ The left and right sides of the perturbation term T T T. Take advantage of it , You can move the disturbance to the far right , The Jacobian matrix in the form of right multiplication is derived :

e ^ i j = ln ( T i j − 1 T i − 1 exp ( ( − δ ξ i ) ∧ ) exp ( δ ξ j ∧ ) T j ) ∨ = ln ( T i j − 1 T i − 1 T j exp ( ( − Ad ( T j − 1 ) δ ξ i ) ∧ ) exp ( ( Ad ( T j − 1 ) δ ξ j ) ∧ ) ∨ ≈ ln ( T i j − 1 T i − 1 T j [ I − ( Ad ( T j − 1 ) δ ξ i ) ∧ + ( Ad ( T j − 1 ) δ ξ j ) ∧ ] ) ∨ ≈ e i j + ∂ e i j ∂ δ ξ i δ ξ i + ∂ e i j ∂ δ ξ j δ ξ j \begin{aligned} \hat{\boldsymbol{e}}_{i j} &=\ln \left(\boldsymbol{T}_{i j}^{-1} \boldsymbol{T}_{i}^{-1} \exp \left(\left(-\boldsymbol{\delta} \boldsymbol{\xi}_{i}\right)^{\wedge}\right) \exp \left(\delta \boldsymbol{\xi}_{j}^{\wedge}\right) \boldsymbol{T}_{j}\right)^{\vee} \\ &=\ln \left(\boldsymbol{T}_{i j}^{-1} \boldsymbol{T}_{i}^{-1} \boldsymbol{T}_{j} \exp \left(\left(-\operatorname{Ad}\left(\boldsymbol{T}_{j}^{-1}\right) \boldsymbol{\delta} \boldsymbol{\xi}_{i}\right)^{\wedge}\right) \exp \left(\left(\operatorname{Ad}\left(\boldsymbol{T}_{j}^{-1}\right) \boldsymbol{\delta} \boldsymbol{\xi}_{j}\right)^{\wedge}\right)^{\vee}\right.\\ & \approx \ln \left(\boldsymbol{T}_{i j}^{-1} \boldsymbol{T}_{i}^{-1} \boldsymbol{T}_{j}\left[\boldsymbol{I}-\left(\operatorname{Ad}\left(\boldsymbol{T}_{j}^{-1}\right) \boldsymbol{\delta} \boldsymbol{\xi}_{i}\right)^{\wedge}+\left(\operatorname{Ad}\left(\boldsymbol{T}_{j}^{-1}\right) \boldsymbol{\delta} \boldsymbol{\xi}_{j}\right)^{\wedge}\right]\right)^{\vee} \\ & \approx \boldsymbol{e}_{i j}+\frac{\partial \boldsymbol{e}_{i j}}{\partial \delta \xi_{i}} \boldsymbol{\delta} \boldsymbol{\xi}_{i}+\frac{\partial \boldsymbol{e}_{i j}}{\partial \boldsymbol{\delta} \boldsymbol{\xi}_{j}} \boldsymbol{\delta} \boldsymbol{\xi}_{j} \end{aligned} e^ij=ln(Tij−1Ti−1exp((−δξi)∧)exp(δξj∧)Tj)∨=ln(Tij−1Ti−1Tjexp((−Ad(Tj−1)δξi)∧)exp((Ad(Tj−1)δξj)∧)∨≈ln(Tij−1Ti−1Tj[I−(Ad(Tj−1)δξi)∧+(Ad(Tj−1)δξj)∧])∨≈eij+∂δξi∂eijδξi+∂δξj∂eijδξj

therefore , According to the derivation rule on Lie Algebra , The Jacobian matrix of the error with respect to the two positions is obtained . About T i T_i Ti And about T j T_j Tj Of :

∂ e i j ∂ δ ξ i = − J r − 1 ( e i j ) Ad ( T j − 1 ) ∂ e i j ∂ δ ξ j = J r − 1 ( e i j ) Ad ( T j − 1 ) \begin{aligned} \frac{\partial \boldsymbol{e}_{i j}}{\partial \boldsymbol{\delta} \boldsymbol{\xi}_{i}} &=-\mathcal{J}_{r}^{-1}\left(\boldsymbol{e}_{i j}\right) \operatorname{Ad}\left(\boldsymbol{T}_{j}^{-1}\right) \\ \frac{\partial \boldsymbol{e}_{i j}}{\partial \boldsymbol{\delta} \boldsymbol{\xi}_{j}} &=\mathcal{J}_{r}^{-1}\left(\boldsymbol{e}_{i j}\right) \operatorname{Ad}\left(\boldsymbol{T}_{j}^{-1}\right) \end{aligned} ∂δξi∂eij∂δξj∂eij=−Jr−1(eij)Ad(Tj−1)=Jr−1(eij)Ad(Tj−1)

because se(3) Jacobian matrix on J r \mathcal{J}_{r} Jr The form is too complicated , Usually take their approximation . If the error is close to zero , Let them be approximately I I I perhaps :

J r − 1 ( e i j ) ≈ I + 1 2 [ ϕ e ∧ ρ e ∧ 0 ϕ e ∧ ] \mathcal{J}_{r}^{-1}\left(\boldsymbol{e}_{i j}\right) \approx \boldsymbol{I}+\frac{1}{2}\left[\begin{array}{cc} \phi_{e}^{\wedge} & \boldsymbol{\rho}_{e}^{\wedge} \\ 0 & \phi_{e}^{\wedge} \end{array}\right] Jr−1(eij)≈I+21[ϕe∧0ρe∧ϕe∧]

Theoretically , Even after optimization , Since the observation data given by each side are not consistent , The error is not necessarily close to zero , So simply put here J r \mathcal{J}_{r} Jr Set to I I I There will be some loss .

After knowing Jacobi's derivation , The rest is the same as normal graph optimization . In short , All pose vertices and poses — Pose edges constitute a graph optimization , It is essentially a least square problem , The optimization variable is the pose of each vertex , The edges are derived from pose observation constraints . remember ε \varepsilon ε For the collection of all edges , Then the overall objective function is :

min ξ 1 2 ∑ i , j ∈ E e i j T Σ i j − 1 e i j \min _{\boldsymbol{\xi}} \frac{1}{2} \sum_{i, j \in \mathcal{E}} \boldsymbol{e}_{i j}^{T} \boldsymbol{\Sigma}_{i j}^{-1} \boldsymbol{e}_{i j} ξmin21i,j∈E∑eijTΣij−1eij

Gauss Newton method can still be used 、 Levenberg - Marquardt method to solve this problem , In addition to using Lie algebra to represent the optimal pose , Everything else is similar . Based on previous experience , It can be used Ceres perhaps g2o To solve the , There is no need to discuss the detailed process .

边栏推荐

- Two methods for matlab to save imshow drawing pictures to a specified folder

- 视觉SLAM十四讲笔记-10-1

- Specific methods for JS to realize full screen display

- Mysql深入完全学习---阶段1---学习总述

- 力扣39题组合总和

- 牛客刷题——part8

- 牛客刷题——合法括号序列判断

- 添加自己喜欢的背景音乐

- MySQL in-depth and complete learning - stage 1 - overview of learning

- . Net core redis hyperloglog type

猜你喜欢

Niu Ke brushes the question - no two

基于TI AM5728 + Artix-7 FPGA开发板(DSP+ARM) 5G通信测试手册

Niu Ke swipes the question -- converting a string to an integer

Ti am64x - the latest 16nm processing platform, designed for industrial gateways and industrial robots

v-for循环遍历

力扣刷题——根据二叉树创建字符串

Monitoring loss functions using visdom

使用Visdom对损失函数进行监控

Niu Ke's question -- Fibonacci series

金融银行_催收系统简介

随机推荐

Specific methods for JS to realize full screen display

力扣23题,合并K个升序链表

SAP BTP 上 workflow 和 Business Service 的 project 管理

Mysql从0到1的完全深入学习--阶段二---基础篇

Common interview questions of network and concurrent programming

MySQL in-depth and complete learning - stage 1 - overview of learning

Ubuntu installs PSQL and runs related commands

Monitoring loss functions using visdom

Financial bank_ Introduction to collection system

Force deduction 33 questions, search rotation sorting array

2023年西安交通大学管理学院MPAcc提前批面试网报通知

使用Visdom對損失函數進行監控

Combination sum of 39 questions

视觉SLAM十四讲笔记-10-2

实现可以继续上局

新项目 搭建环境方法

Balanced search binary tree -- AVL tree

Labelme for image data annotation

高并发架构设计

Niu Ke's question -- finding the least common multiple