当前位置:网站首页>[paper reading | deep reading] line: large scale information network embedding

[paper reading | deep reading] line: large scale information network embedding

2022-06-25 10:19:00 【Haihong Pro】

Catalog

Preface

Hello!

Thank you very much for reading Haihong's article , If there are mistakes in the text , You are welcome to point out ~

Self introduction. ଘ(੭ˊᵕˋ)੭

nickname : Sea boom

label : Program the ape |C++ player | Student

brief introduction : because C Language and programming , Then I turned to computer science , Won a National Scholarship , I was lucky to win some national awards in the competition 、 Provincial award … It has been insured .

Learning experience : Solid foundation + Take more notes + Knock more code + Think more + Learn English well !

Only hard work

Know what it is Know why !

This article only records what you are interested in

ABSTRACT

This paper studies the problem of embedding a very large information network into a low dimensional vector space ( Large scale network embedding )

We propose a new network embedding method , be called “LINE”, it Suitable for any type of information network : Undirected 、 Directed sum / Or weighted

- This method optimizes a well-designed objective function , The local and global network structures are preserved at the same time

- Aiming at the limitation of classical random gradient descent algorithm , Put forward a kind of Edge sampling algorithm , It improves the effectiveness and efficiency of reasoning .

Experimental proof LINE In the language network 、 Social networks 、 Citation network and other real information networks are effective

The algorithm is very efficient , You can learn how to embed a network with millions of vertices and billions of edges in a few hours on a typical single machine ( It is suitable for efficient network embedding in large networks )

1. INTRODUCTION

LINE Optimize the target while preserving the local and global network structure

Naturally , The local structure is represented by the connections observed in the network , It reflects the first-order proximity between vertices

In real networks , many ( If not most of them ) Legitimate connections are not actually observed . Therefore, the first-order proximity observed in real-world data is not enough to preserve the global network structure

Supplement , We explore the second-order proximity between vertices , This is not by looking at the strength of the connections between nodes , But through Shared neighborhood structure of vertices To determine the

The general concept of second-order proximity can be explained as Nodes sharing neighbors may be similar

This intuition can be found in sociological and linguistic theories .

The following is an example

- Because of the vertex 6 and 7 The weights of the edges between are large , namely 6 and 7 It has high first-order proximity , Therefore, they should be represented closely in the embedded space .

- The vertices 5 And vertex 6 Although there is no connection between , But they have many common neighbors , That is, they have a high degree of second-order approximation , Therefore, they should also be closely expressed

We expect Second order proximity considerations It effectively complements the sparsity of the first-order proximity , And better preserve the global structure of the network .

Even if a reasonable objective optimization function is found , Optimizing for a very large network is also a challenge

Random gradient descent method (SGD) It is an optimization method that has attracted much attention in recent years . However , Direct deployment of random gradient descent is problematic for real-world information networks

- Because in many networks , The edges are weighted

- And the weight usually shows a high variance

- For example, consider a word co-occurrence network , The weight of word pairs ( Altogether ) from 1 To hundreds of thousands .

- The weights of these edges are multiplied into the gradient , Cause a gradient burst , Which affects performance .

To solve this problem , We proposed A new edge sampling method , It improves the validity and efficiency of inference

- We use Probability proportional to its weight Sample edges , Then the sampled edge is used as a binary edge to update the model .

- During this sampling process , The objective function remains unchanged , The weight of the edges no longer affects the gradient

Contribution of this paper

- We propose a new network embedding model , be called “LINE”, It is suitable for any type of information network , It can be easily extended to millions of nodes . It has a well-designed objective function , Keep the first-order and second-order proximity at the same time .

- We propose an edge sampling algorithm to optimize the target . This algorithm solves the limitation of classical random gradient algorithm , It improves the effectiveness and efficiency of reasoning .

- We conduct extensive experiments on real-world information networks . The result of the experiment proves that LINE Effectiveness and efficiency of the model .

2. RELATED WORK

General classical methods of graph embedding or dimensionality reduction , Such as MDS[4]、IsoMap[20]、LLE[18] and Laplacian Eigenmap[2]

- Usually, the affinity graph is constructed by using the feature vectors of data points , For example, data k A picture of the neighborhood

- Then the affinity graph [22] Embedded in low dimensional space

However , These algorithms usually rely on solving the dominant eigenvectors of the affinity matrix , Its complexity is at least quadratic of the number of nodes , This makes them inefficient when dealing with large-scale networks

Graph decomposition [1] technology

- Find the low dimensional embedding of large graph by matrix decomposition

- And the random gradient descent method is used for optimization

However , The goal of matrix decomposition is not designed for networks , therefore It may not be able to maintain the global structure of the network

Intuitively , Graph decomposition requires that nodes with high first-order proximity be represented closely .

The graph decomposition method is only applicable to undirected graphs

DeepWalk[16], It deploys a truncated random walk for social network embedding .

Although empirically effective , but DeepWalk Did not provide a clear goal , Explain which network attributes are reserved .

- Intuitively ,DeepWalk It is expected that nodes with higher second-order proximity will produce similar low dimensional representations

- and LINE Keep the first and second order proximity at the same time

difference :

- DeepWalk Use random walk to expand the neighborhood of vertices , This is similar to depth first search , and LINE Adopt width first search strategy , This is a reasonable second-order approximation method .

- DeepWalk Only for unweighted networks , and LINE It is suitable for networks with both weighted and unweighted edges .

3. PROBLEM DEFINITION

Definition 1. (Information Network)

G = ( V , E ) G=(V,E) G=(V,E)

e = ( u , v ) , e ∈ E , u , v ∈ V e=(u,v), e\in E,u,v\in V e=(u,v),e∈E,u,v∈V

w u , v w_{u,v} wu,v: node u、v Weight between the sides

In reality , Information networks can be directional ( Such as reference to the network ), It can also be non directional ( Such as Facebook Social networks of users in ). The weights of these edges can be binary , You can also take any real number

- for example , In citation networks and social networks , w u v w_{uv} wuv Take two values (0 or 1);

- In the co-occurrence network between different objects , w u v w_{uv} wuv You can take any nonnegative value .

Be careful , Although negative weighted edges are possible , But in this study, we only consider the nonnegative weighted edge .

In some networks , When some objects appear together many times while others may appear only a few times , The weights of edges may differ .( Did not understand )

Definition 2. (First-order Proximity)

The first-order proximity in a network is the local pairwise proximity between two vertices .

- For each pair of edges ( u , v ) (u, v) (u,v) Connected vertices , The weight of the edge w u v w_{uv} wuv Express u u u and v v v First order approximation between .

- If u u u and v v v There is no edge between , Then their first order approximation is 0.

First order proximity usually means that The similarity of the two nodes .

for example , People who are friends with each other in social networks tend to have similar interests ; Linked pages on the World Wide Web tend to talk about similar topics .

Because of this importance , Many existing graph embedding algorithms , Such as ISOMAP、LLE、 Laplace feature mapping and graph factorization , Both aim to maintain first-order proximity .

However , In the real world information network , Only a small part of the observed connections , There are many other links missing [10].

A pair of nodes on the missing link have zero first-order proximity ( Missing edge , The first order proximity is 0), Even if they are essentially very similar to each other .

therefore , Only the first-order proximity is not enough to preserve the network structure , It is important to Seek another concept of proximity to solve the sparsity problem .

A natural intuition is , Vertices sharing similar neighbors are often similar to each other .

for example

- In social networks , People who have similar friends often have similar interests , To become friends ;

- In the word co-occurrence network , Words that always co-exist with the same group of words often have similar meanings .

therefore , We define the second-order proximity , it First order proximity is added , And maintain the network structure .

Definition 3. (Second-order Proximity)

A pair of vertices in a network ( u , v ) (u, v) (u,v) The second order proximity between is The similarity between the neighborhood network structures .

set up p u = ( w u , 1 , … , w u , ∣ V ∣ ) p_u = (w_{u,1},…, w_{u,|V |}) pu=(wu,1,…,wu,∣V∣) Express u u u First order proximity to all other vertices

be u u u And v v v The second order approximation of is given by p u p_u pu And p v p_v pv The similarity determines

If no vertex is connected to u and v, that u and v The second-order adjacency between is 0.

Definition 4. (Large-scale Information Network Embedding)

Given a large network G = ( V , E ) G = (V, E) G=(V,E), The goal of large-scale information network embedding problem is to embed each vertex v ∈ V v∈V v∈V To a low dimensional space R d R^d Rd in

Learning function f G : V → R d f_G: V→R^d fG:V→Rd, among d < < ∣ V ∣ d << |V | d<<∣V∣.

In the space R d R^d Rd in , The first-order proximity and the second-order proximity between vertices remain unchanged .

4. LINE: LARGE-SCALE INFORMATION NETWORK EMBEDDING

An ideal real world information network embedding model must satisfy the following conditions :

- First of all , It must be able to maintain the first-order and second-order proximity between vertices at the same time

- secondly , It must be suitable for very large networks , Like millions of vertices and billions of edges ;

- Third , It can handle networks with any type of edge : There is a directional side 、 Undirected edges and / Or weighted edges

4.1 Model Description

We described LINE Model , Keep the first-order proximity and the second-order proximity respectively , Then a simple method combining two order proximity is introduced .

4.1.1 LINE with First-order Proximity

First order approximation refers to the local pairwise approximation between vertices in a network .

In order to simulate the first order approximation , For each undirected edge (i, j), We define vertices v i v_i vi and v j v_j vj The joint probability between them is as follows

among u ⃗ i ∈ R d \vec u_i \in R^d ui∈Rd, It means a vertex v i v_i vi Vector representation of

equation (1) Defined V × V V × V V×V A distribution in space p ( ⋅ , ⋅ ) p(·,·) p(⋅,⋅), Its empirical probability can be defined as p 1 ^ ( i , j ) = w i j W \hat{p_1}(i, j) = \frac{w_{ij}}{W} p1^(i,j)=Wwij, among W = ∑ ( i , j ) ∈ E w i j W =\sum_{(i,j)∈E}w_{ij} W=∑(i,j)∈Ewij

In order to keep the first order close , A direct approach is to minimize the following objective functions

d ( ⋅ , ⋅ ) d(·,·) d(⋅,⋅) Is the distance between two distributions

We choose to minimize Of two probability distributions KL The divergence (KL-divergence)

KL-divergence:https://zhuanlan.zhihu.com/p/425693597

use K L − scattered degree KL - The divergence KL− scattered degree Replace d ( ⋅ , ⋅ ) d(·,·) d(⋅,⋅), And take out some constants , We have

Be careful , The first order approximation is only applicable to undirected graphs , Not for directed graphs .

By finding { u ^ i } i = 1... ∣ V ∣ \{\hat u_i \} _{i=1...|V|} { u^i}i=1...∣V∣ Minimize the equation (3)

4.1.2 LINE with Second-order Proximity

Second order adjacency It is applicable to digraph , It also applies to undirected graphs

Given a network , Without losing generality , We Suppose it is directed

An undirected edge can be thought of as having two opposite directions 、 Directed edges with equal weights

Second order approximation assumes that there are many connections between vertices , They are similar to each other . under these circumstances , Each vertex is also treated as a specific “ Context ”, stay “ Context ” Vertices with similar distributions on are considered to be similar .

Personal understanding : If the context distribution of vertices is similar , Then these nodes are likely to be similar

therefore , Each vertex plays two roles : The vertex itself and Specific to other vertices “ Context ”.

We introduce two vectors u ⃗ i \vec u_i ui and u ⃗ i ′ \vec u_{i}^{'} ui′

- among u ⃗ i \vec u_i ui Yes, it will v i v_i vi The representation when regarded as a vertex

- u ⃗ i ′ \vec u_{i}^{'} ui′ Yes, it will v i v_i vi As specific “ Context ” Representation of time

For every directed edge (i, j), Let's first define The vertices v i v_i vi Generate “ Context ” v j v_j vj Probability by :

- among ∣ V ∣ |V| ∣V∣ Is a vertex or “ Context ” The number of

- here v i v_i vi The role of “ Node itself ”, v j v_j vj The role is the context of other nodes

For each vertex v i v_i vi, equation (4) It actually defines a context based conditional distribution p 2 ( ⋅ ∣ v i ) p_2(·|v_i) p2(⋅∣vi)

As mentioned above , Second order approximation hypothesis Vertices with similar distributions in the context are similar to each other

In order to keep the second order close , We should make the low dimensional representation of the specified context p 2 ( ⋅ ∣ v i ) p_2(·|v_i) p2(⋅∣vi) The conditional distribution of is close to the empirical distribution p ^ 2 ( ⋅ ∣ v i ) \hat p_2(·|v_i) p^2(⋅∣vi)

therefore , We minimize the following objective function :

- d ( ⋅ , ⋅ ) d(·,·) d(⋅,⋅) Is the distance between two distributions , It also uses KL The divergence

- λ i λ_i λi To represent vertices in the network i The prestige of , λ i = d i λ_i = d_i λi=di, d i d_i di For the top i Of The degree of

Empirical distribution p ^ 2 ( ⋅ ∣ v i ) \hat p_2(·|v_i) p^2(⋅∣vi) Defined as p 2 ( v j ∣ v i ) = w i j d i p_2(v_j |v_i) = \frac{w_{ij}}{d_i} p2(vj∣vi)=diwij

- w i j w_{ij} wij For edge (i, j) A weight ,

- d i d_i di For the top i The degree of , namely d i = ∑ k ∈ N ( i ) w i k d_i=\sum_{k \in N(i)}w_{ik} di=∑k∈N(i)wik, among N ( i ) N (i) N(i) by v i v_i vi A collection of neighbors

Get the final objective optimization function :

Through the study u ⃗ i \vec u_i ui and u ⃗ i ′ \vec u_{i}^{'} ui′ To minimize the equation (6)

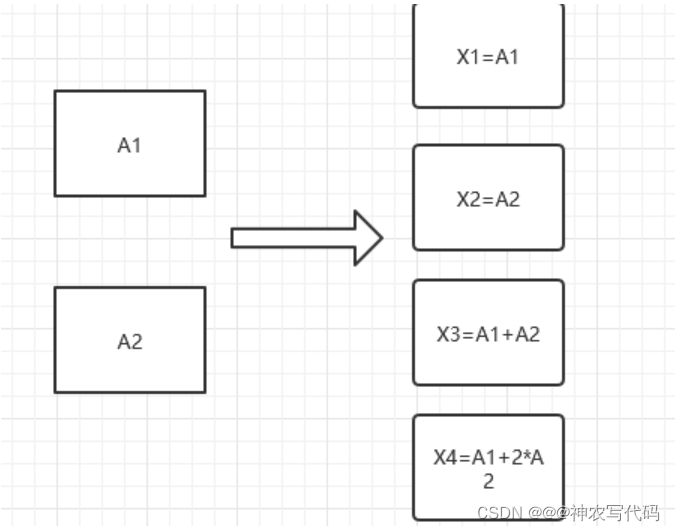

4.1.3 Combining first-order and second-order proximities

In order to maintain the first-order and second-order proximity at the same time, the network is embedded , We have found a simple and effective method in practice , namely

- The first-order and second-order proximity preserving LINE Model

- Then, for each vertex, the embedding trained by the two methods is connected

4.2 Model Optimization

Optimization objectives (6) The cost of computing is very high , In calculating conditional probabilities p 2 ( ⋅ ∣ v i ) p_2(·|v_i) p2(⋅∣vi) You need to sum the entire vertex set .

To solve this problem , We use [13] The negative sampling method proposed in , For each side (i, j) Sampling multiple negative edges according to a certain noise distribution .

say concretely , It's for each side (i, j) The following objective functions are specified :

among σ ( x ) = 1 1 + e − x \sigma(x) = \frac{1}{1+e^{-x}} σ(x)=1+e−x1

The first item models the observed edges , The second item models the negative edge extracted from the noise distribution ,k Is the number of negative edges .

We set up P n ( v ) ∝ d v 3 / 4 P_n(v)∝d_v^{3/4} Pn(v)∝dv3/4, d v d_v dv For the top v v v The degree of

For the objective function (3), There is a trivial solution : u i k = ∞ u_{ik} =∞ uik=∞, about i = 1 , … , ∣ V ∣ i=1,…, |V| i=1,…,∣V∣, k = 1 , … , d k = 1,…, d k=1,…,d.

To avoid trivial solutions , We can still use the negative sampling method (7), Just put the u ⃗ j ′ T \vec u_j^{'T} uj′T Change it to u ⃗ j T \vec u_j^T ujT

We use asynchronous random gradient algorithm (ASGD)[17] On the equation (7) To optimize

At each step ,ASGD The algorithm will sample a small number of edges , Then update the model parameters .

If an edge is sampled (i, j), Then the gradient w.r.t Compute vertices i Embedding vector of u ⃗ i \vec u_i ui by :

Be careful , The gradient will be multiplied by the weight of the edge

When the weight variance of the edge is large , There will be problems

for example , In a word co-occurrence network , Some words appear many times ( for example , Tens of thousands of times ), Some words only appear a few times . In such a network , The scale of the gradient is divergent , It's hard to find a good learning rate .

If we choose a large learning rate according to the edge with small weight , Then the gradient on the weighted edge will explode , And if we choose the learning rate according to the edge with great weight , The gradient will be too small .

4.2.1 Optimization via Edge Sampling

The intuition to solve the above problem is , If all edges have equal weights ( for example , A network with two valued edges ), Then there is no problem in choosing the appropriate learning rate

therefore , A simple way to deal with this is to put A weighted edge is expanded into multiple binary edges

for example , A weight is w w w The edges of are expanded to w w w Two valued edges

This will solve the problem , But it will significantly increase memory requirements , Especially when the weight of the edge is very large .

To solve this problem , We can sample from the original edge , The sampled edges are treated as binary edges , The sampling probability is proportional to the weight of the original edge .

Through this edge sampling processing , The overall objective function remains unchanged .

This problem boils down to how to sample the edges according to their weights

set up W = ( w 1 , w 2 , … , w ∣ E ∣ ) W = (w1, w2,…, w|E|) W=(w1,w2,…,w∣E∣) A sequence of edge weights . We can simply calculate the sum of the weights w s u m = ∑ i = 1 ∣ E ∣ w_{sum} =\sum_{i=1}^{|E|} wsum=∑i=1∣E∣, And then in [ 0 , w s u m ] [0,w_{sum}] [0,wsum] Sampling a random value in the range , See which range the random value belongs to [ ∑ j = 0 i − 1 w j , ∑ j = 0 i w j ) [\sum_{j=0}^{i-1}w_j, \sum_{j=0}^{i}w_j) [∑j=0i−1wj,∑j=0iwj)

This method requires O ( ∣ E ∣ ) O(|E|) O(∣E∣) Time to draw a sample , When the edge ∣ E ∣ |E| ∣E∣ When the number is large , This method is very expensive .

We use the alias table method [9] Draw the sample according to the weight of the edge , When the sample is drawn repeatedly from the same discrete distribution , It only needs O(1) Time .

It takes constant time to sample an edge from the alias table O ( 1 ) O(1) O(1), Negative sampling optimization requires O ( d ( K + 1 ) ) O(d(K+ 1)) O(d(K+1)) Time , among K K K Is the number of negative samples .

therefore , in general , Each step takes time O ( d K ) O(dK) O(dK).

In practice , We find that the number of steps used for optimization is usually related to the number of edges O ( ∣ E ∣ ) O(|E|) O(∣E∣) In direct proportion to . therefore ,LINE The overall time complexity of is O ( d K ∣ E ∣ ) O(dK|E|) O(dK∣E∣), And the number of sides ∣ E ∣ |E| ∣E∣ linear , And the number of vertices ∣ V ∣ |V| ∣V∣ irrelevant .

Edge sampling processing improves the effectiveness of random gradient descent algorithm without affecting the efficiency .

4.3 Discussion

Low degree vertices

A practical problem is How to accurately embed vertices with very small degree . Because the number of neighbors of such a node is very small , So it is difficult to infer its representation accurately , In particular, use relies heavily on “ Context ” Second order proximity method of quantity .

An intuitive solution is to expand the neighbors of these vertices by adding higher-order neighbors , Like a neighbor's neighbor .

In this paper , Let's just think about Add a second-order neighborhood on each vertex , That is, the neighborhood of the neighborhood .

The vertices i i i With its second-order neighbors j j j The measurement of the weight between is

In practice , We can only add one vertex j {j} j Subset , The subset is related to the vertex i i i Of w i j w_{ij} wij Maximum

New vertices

Another practical problem is how to find the representation of the newly arrived vertex .

For a new vertex i, If you know its relationship to an existing vertex , We can get the empirical distribution on the existing vertex p ^ 1 ( ⋅ , v i ) \hat p_1(·, v_i) p^1(⋅,vi) and p ^ 2 ( ⋅ ∣ v i ) \hat p_2(·|v_i) p^2(⋅∣vi).

According to the objective function equation (3) or (6) Get the embedding of the new vertex

A direct approach is to minimize any of the following objective functions

By updating the embedding of new vertices , Keep existing vertices embedded .

If no connection between the new vertex and the existing vertex is observed , We must turn to other information , For example, the text information of the vertex , We leave it to future work

5. EXPERIMENTS

We are right. LINE The effectiveness and efficiency of . We apply this method to many different types of large-scale real-world networks , Including a language network 、 Two social networks and two citation networks .

5.1 Experiment Setup

Data sets

Baseline approach

- GF

- Deep Walk

- Skip Gram

- LINE-SDD(1st)

- LINE-SDD(2nd)

- LINE(1st)

- LINE(2nd)

5.2 Quantitative Results

5.2.1 Language Network

Word Analogy.

Document Classification

Use first-order and second-order approximations to compare most similar words .

5.2.2 Social Network

Flickr Multi label classification results on the network

Youtube Multi label classification results on the network

5.2.3 Citation Network

be based on DBLP(AuthorCitation) Multi label classification results of the network

be based on DBLP(PaperCitation) Multi label classification results of the network .

5.3 Network Layouts

A visual network of co authors . The author is mapped to two-dimensional space using t-SNE Package learning is embedded as input .

The color of the node represents the author's community

- Red :“ data mining ”

- Blue :“ machine learning ”

- green :“ Computer vision ”.

Conclusion

The article is only used as a personal study note , Record from 0 To 1 A process of

Hope to help you a little bit , If you have any mistakes, you are welcome to correct them

边栏推荐

- Flutter replaces the default icon of Gaud positioning

- Rxjs TakeUntil 操作符的学习笔记

- Free platform for wechat applet making, steps for wechat applet making

- How do wechat sell small commodity programs do? How to open wechat apps to sell things?

- Fluent creates, reads and writes JSON files

- Solutions using protobuf in TS projects

- NetCore性能排查

- tokenizers>=0.11.1,!=0.11.3,<0.13 is required for a normal functioning of this module,

- Puzzle (019.2) hexagonal lock

- P2P network core technology: Gossip protocol

猜你喜欢

【论文阅读|深读】LINE: Large-scale Information Network Embedding

The path of Architects

Redis(二)分布式锁与Redis集群搭建

Basic use and cluster construction of consult

How much does a small program cost? How much does a small program cost? It's clear at a glance

浅谈二叉树

链表 删除链表中的节点

![[MySQL learning notes 21] storage engine](/img/3a/a3cd573281efc689cafdb7d7562ce0.png)

[MySQL learning notes 21] storage engine

Minio基本使用与原理

Deep understanding of JVM - JVM memory model

随机推荐

【论文阅读|深读】LINE: Large-scale Information Network Embedding

Get started quickly with jetpack compose Technology

CyCa children's physical etiquette Yueqing City training results assessment successfully concluded

Basic use and cluster construction of consult

我希望按照我的思路盡可能將canvas基礎講明白

Use evo

Learning notes of rxjs takeuntil operator

WebApi性能优化

字符串 实现 strStr()

Can two Mitsubishi PLC adopt bcnettcp protocol to realize wireless communication of network interface?

How much does a small program cost? How much does a small program cost? It's clear at a glance

Flask blog practice - archiving and labeling of sidebar articles

Solutions using protobuf in TS projects

我希望按照我的思路尽可能将canvas基础讲明白

The problem of automatic page refresh after the flyer WebView pops up the soft keyboard

虚幻引擎图文笔记:使用VAT(Vertex Aniamtion Texture)制作破碎特效(Houdini,UE4/UE5)上 Houdini端

Floating window --- create an activity floating window (can be dragged)

BUG-00x bug description + resolve ways

Neat Syntax Design of an ETL Language (Part 2)

字符串 最长公共前缀