Simple but easy to use: using keras 2 to realize multi-dimensional time series prediction based on LSTM

2022-07-24 06:14:00 【A small EZ】

Hello, friends! After a long break, I'm writing about time series again.

It's a new year. My previous time series prediction posts were implemented with Keras 2.2 and tensorflow-gpu 1.13.1.

This post aims to:

- Provide an LSTM time series prediction model for beginners
- Be effective and easy to use
- Use Keras 2 + TensorFlow 2 and cover the whole process from prediction to validation

Versions:

- CUDA 10.1
- cuDNN 8.0.5
- Keras 2.4.3
- tensorflow-gpu 2.3.0

Keras 2.4 supports only TensorFlow as its backend (see the Keras 2.4 release notes). It has truly become TensorFlow's Keras, and the imports have changed somewhat compared with earlier versions.

Data introduction

The dataset is a pollution dataset. We use the multidimensional time series to predict the pollution dimension, using 80% of the data as the training set and 20% as the test set.
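For time series, a common way to do an 80/20 split is to keep chronological order (no shuffling), so the test set is the most recent 20% of the data. The post does not show its splitting code, so the following is only a minimal NumPy sketch under that assumption: `chronological_split` is a hypothetical helper, and the toy array stands in for the real pollution data.

```python
import numpy as np

def chronological_split(data, train_ratio=0.8):
    """Hypothetical helper: split a (time, features) array without shuffling.

    The earliest train_ratio of the rows become the training set,
    the remaining (most recent) rows become the test set.
    """
    data = np.asarray(data)
    n_train = int(len(data) * train_ratio)
    return data[:n_train], data[n_train:]

# Toy data (made up): 10 time steps, 3 features
series = np.arange(30, dtype=float).reshape(10, 3)
train, test = chronological_split(series, 0.8)
print(train.shape, test.shape)  # (8, 3) (2, 3)
```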

Getting started

The first step of deep learning: importing the libraries.

```python
import tensorflow as tf
# Compared with the old standalone keras package, everything is now imported from tensorflow.keras
from tensorflow.keras import Sequential
from tensorflow.keras.layers import LSTM, Dense, Activation, Dropout
from tensorflow.keras.callbacks import History, Callback, EarlyStopping
import numpy as np
```

Model implementation

The model uses the simplest Sequential structure: two stacked LSTM layers followed by a fully connected (Dense) layer that produces the prediction. I also added an early stopping mechanism.

The specific structure is as follows:

```python
def lstm_model(train_x, train_y, config):
    model = Sequential()
    # First LSTM layer returns the whole sequence so the second LSTM can consume it
    model.add(LSTM(config.lstm_layers[0],
                   input_shape=(train_x.shape[1], train_x.shape[2]),
                   return_sequences=True))
    model.add(Dropout(config.dropout))
    # Second LSTM layer returns only the output of the last time step
    model.add(LSTM(config.lstm_layers[1], return_sequences=False))
    model.add(Dropout(config.dropout))
    # Output layer: one unit per target column of train_y
    model.add(Dense(train_y.shape[1]))
    model.add(Activation("relu"))
    model.summary()

    # Stop training once val_loss stops improving
    cbs = [History(), EarlyStopping(monitor='val_loss',
                                    patience=config.patience,
                                    min_delta=config.min_delta,
                                    verbose=0)]

    model.compile(loss=config.loss_metric, optimizer=config.optimizer)
    model.fit(train_x,
              train_y,
              batch_size=config.lstm_batch_size,
              epochs=config.epochs,
              validation_split=config.validation_split,
              callbacks=cbs,
              verbose=True)
    return model
```
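To see where the parameter counts printed by `model.summary()` come from, here is a small sketch of the standard Keras formulas. An LSTM layer has 4 gates, each with an input kernel, a recurrent kernel and a bias, giving `4 * (units * (input_dim + units) + units)` weights; a Dense layer has `input_dim * units + units`; Dropout and Activation layers have none. The helper names and the feature count of 8 in the usage line are assumptions for illustration, not values taken from the post.

```python
def lstm_params(input_dim, units):
    # 4 gates x (input kernel + recurrent kernel + bias)
    return 4 * (units * (input_dim + units) + units)

def dense_params(input_dim, units):
    # Weight matrix plus one bias per unit
    return input_dim * units + units

def count_params(n_features, lstm_layers=(80, 80), n_outputs=1):
    """Hypothetical helper: per-layer trainable parameters of the two-LSTM + Dense model."""
    first = lstm_params(n_features, lstm_layers[0])
    second = lstm_params(lstm_layers[0], lstm_layers[1])
    out = dense_params(lstm_layers[1], n_outputs)
    return first, second, out

# Assumed: 8 input features, the post's [80, 80] LSTM sizes, 1 output
print(count_params(8))  # (28480, 51520, 81)
```

Note that only the first layer depends on the number of input features, which is why the same code adapts to any `train_x`.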

The input and output sizes of the model are taken from the shapes of train_x and train_y, so the model adapts to the data.

As long as train_x is 3-dimensional, with shape (samples, time steps, features), and train_y is 2-dimensional, with shape (samples, outputs), it will run smoothly.
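One way to build arrays with these shapes is a sliding window that follows the config's `n_predictions` idea (use the previous n steps to predict the next one). This is a sketch, not the author's preprocessing: `make_windows` is a hypothetical helper, and it assumes the target (pollution) is column 0 of a (time, features) array.

```python
import numpy as np

def make_windows(data, n_steps, target_col=0):
    """Hypothetical helper: turn a (time, features) array into LSTM samples.

    x[i] holds rows i .. i+n_steps-1 (all features);
    y[i] holds the target column of row i+n_steps (the next step).
    """
    data = np.asarray(data, dtype=float)
    xs, ys = [], []
    for i in range(len(data) - n_steps):
        xs.append(data[i:i + n_steps, :])
        ys.append(data[i + n_steps, target_col])
    x = np.array(xs)                 # (samples, n_steps, features) -> 3 dimensions
    y = np.array(ys).reshape(-1, 1)  # (samples, 1) -> 2 dimensions
    return x, y

series = np.arange(30, dtype=float).reshape(10, 3)  # toy data
x, y = make_windows(series, n_steps=3)
print(x.shape, y.shape)  # (7, 3, 3) (7, 1)
```

With the post's setting, `n_steps` would be `config.n_predictions` (30).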

Parameter settings

```python
# A class that serves as the configuration file
class Config:
    def __init__(self):
        self.path = './Model/'
        self.dimname = 'pollution'
        # Use the previous n_predictions steps to predict the next step
        self.n_predictions = 30
        # EarlyStopping: if val_loss does not decrease by at least min_delta,
        # training continues for at most `patience` more epochs before stopping,
        # i.e. how many epochs without improvement we tolerate
        self.patience = 10
        self.min_delta = 0.00001
        # Number of units in each of the two LSTM layers
        self.lstm_layers = [80, 80]
        self.dropout = 0.2
        self.lstm_batch_size = 64
        self.optimizer = 'adam'
        self.loss_metric = 'mse'
        self.validation_split = 0.2
        self.verbose = 1
        self.epochs = 200

    # layers is a list, e.g. [64, 64]
    def change_lstm_layers(self, layers):
        self.lstm_layers = layers
```
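The `patience` / `min_delta` rule can be simulated on a list of validation losses. The sketch below is a simplified re-implementation of the documented `EarlyStopping` behaviour for `monitor='val_loss'` (it ignores options such as `baseline` and `restore_best_weights`) and is not Keras's own code; `early_stop_epoch` is a hypothetical name.

```python
def early_stop_epoch(val_losses, patience, min_delta):
    """Return the 1-indexed epoch after which training would stop.

    An epoch counts as an improvement only if val_loss drops below the
    best value so far by more than min_delta; after `patience` consecutive
    epochs without improvement, training stops.
    """
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return len(val_losses)  # never triggered: all epochs were run

# Made-up losses: improvement for 3 epochs, then a plateau of 10 epochs
print(early_stop_epoch([1.0, 0.9, 0.8] + [0.8] * 10, patience=10, min_delta=0.00001))  # 13
```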

The structure of the model is shown in the figure.

Thanks to the early stopping mechanism, training ended after 28 epochs:

```
Epoch 28/200
438/438 [==============================] - 10s 23ms/step - loss: 8.4697e-04 - val_loss: 4.9450e-04
```

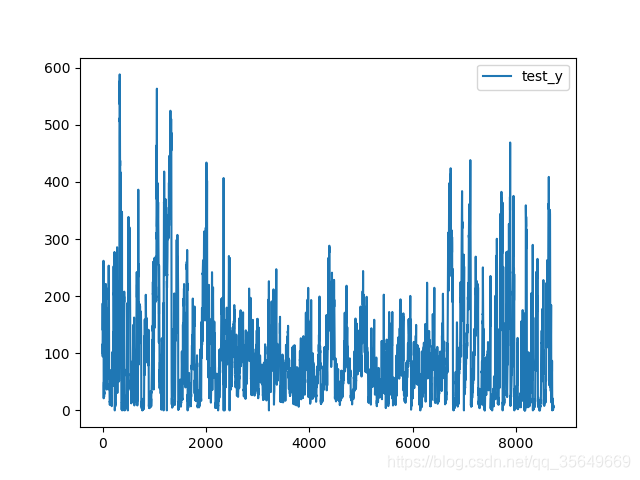

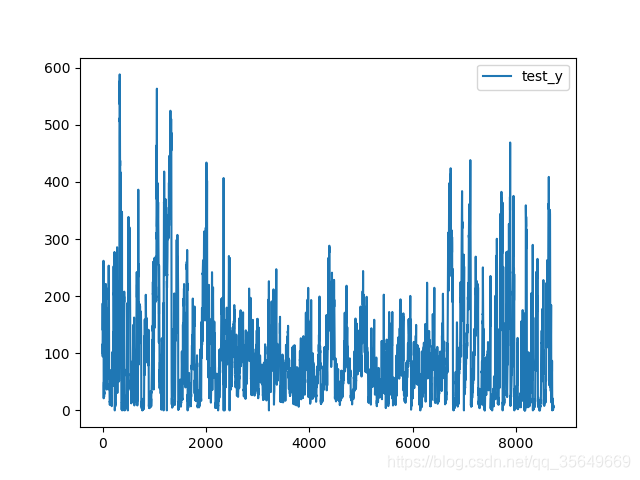

Results

Let's look at the results:

- RMSE: 24.096020043963737
- MAE: 13.384563587562422
- MAPE: 25.183164455025054

As we can see, the method is simple, yet the prediction results are very good.
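The post does not show how the three metrics were computed. Below is a sketch using their standard definitions; reporting MAPE as a percentage and skipping points where the true value is zero (where MAPE is undefined) are assumptions, and `evaluate` is a hypothetical helper.

```python
import numpy as np

def evaluate(y_true, y_pred):
    """Hypothetical helper returning (RMSE, MAE, MAPE in percent)."""
    y_true = np.asarray(y_true, dtype=float).ravel()
    y_pred = np.asarray(y_pred, dtype=float).ravel()
    err = y_pred - y_true
    rmse = np.sqrt(np.mean(err ** 2))
    mae = np.mean(np.abs(err))
    # MAPE is undefined where y_true == 0, so those points are skipped (assumption)
    nonzero = y_true != 0
    mape = np.mean(np.abs(err[nonzero] / y_true[nonzero])) * 100
    return rmse, mae, mape

rmse, mae, mape = evaluate([100, 200], [110, 180])  # made-up values
print(f"RMSE={rmse:.2f} MAE={mae:.2f} MAPE={mape:.2f}")  # RMSE=15.81 MAE=15.00 MAPE=10.00
```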

Notes

The code has been uploaded to my GitHub.

An older version of this tutorial is also included (the grid search part may contain a small bug, so please check it carefully if you use it).

If this post reaches 1,000 likes, or this GitHub project passes 100 stars, I will open-source a new, better implementation of "Advancing with LSTM: Simple Time Series Anomaly Detection Using LSTM".

References

Anomaly Detection in Time Series Data Using LSTMs and Automatic Thresholding

边栏推荐

- JUC并发编程基础(9)--线程池

- 配置固定的远程桌面地址【内网穿透、无需公网IP】

- Channel attention and spatial attention module

- QT char to qstring hexadecimal and char to hexadecimal integer

- day1-jvm+leetcode

- HoloLens 2 开发:开发环境部署

- JSON. Dumps() function parsing

- What is monotone stack

- Find the ArrayList < double > with the most occurrences in ArrayList < ArrayList < double >

- Unity基础知识及一些基本API的使用

猜你喜欢

![[deep learning] handwritten neural network model preservation](/img/4a/27031f29598564cf585b3af20fe27b.png)

[deep learning] handwritten neural network model preservation

PDF Text merge

Hit the wall record (continuously updated)

头歌 平台作业

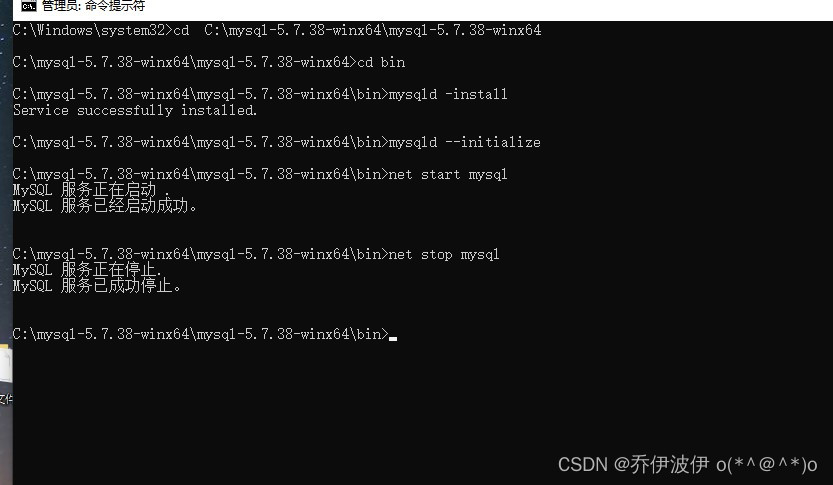

MySQL download and installation environment settings

简单却好用:使用Keras 2实现基于LSTM的多维时间序列预测

Installation of tensorflow and pytorch frames and CUDA pit records

Kernel pwn 基础教程之 Heap Overflow

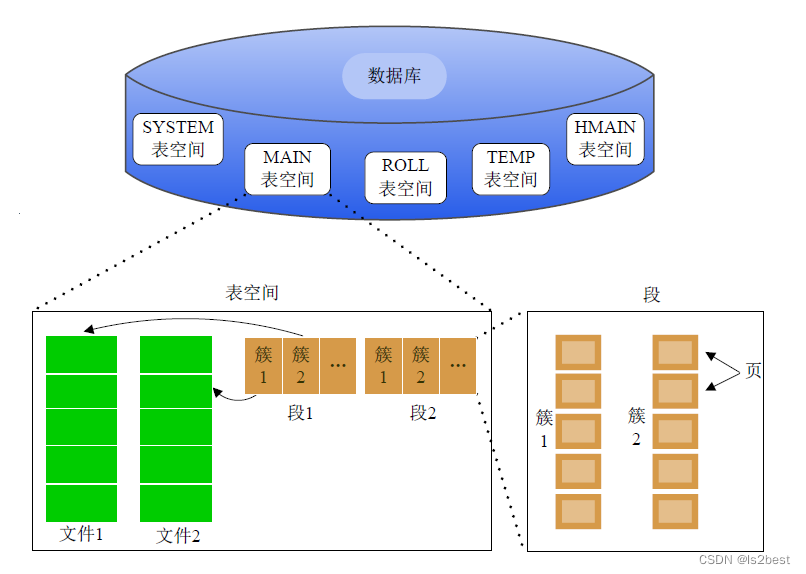

Dameng database_ Logical architecture foundation

Dameng database_ Various methods of connecting databases and executing SQL and scripts under disql

随机推荐

ue4 物品随机生成

Force buckle: 1-sum of two numbers

不租服务器,自建个人商业网站(3)

ue4 换装系统3.最终成果

QT novice entry level calculator addition, subtraction, multiplication, division, application

Search of two-dimensional array of "sword finger offer" C language version

Thymeleaf快速入门学习

头歌 平台作业

Dameng database_ Logical backup

Unity 3D帧率统计脚本

UE4 replacement system 3. Final results

day1-jvm+leetcode

Calculation steps of principal component analysis

day5-jvm

JUC并发编程基础(4)--线程组和线程优先级

Better CV link collection (dynamic update)

Openpose Unity 插件部署教程

常见AR以及MR头戴显示设备整理

Unity基础知识及一些基本API的使用

How to download videos on the web