
Unity shader screen post-processing

2022-07-28 17:06:00 【Morita Rinko】

Screen brightness, saturation, and contrast

Result:

(Figure: a Unity scene after adjusting screen brightness, saturation, and contrast in post-processing.)

Implementation code:

Script:

using System.Collections;

using System.Collections.Generic;

using UnityEngine;

// Inherit from the base class PostEffectsBase, which provides CheckShaderAndCreateMaterial

public class BrightnessSaturationAndContrast : PostEffectsBase

{

// The shader and the material created from it

public Shader briSatConShader;

private Material briSatConMaterial;

// brightness

[Range(0.0f, 3.0f)]

public float brightness = 1.0f;

// saturation

[Range(0.0f, 3.0f)]

public float saturation = 1.0f;

// Contrast

[Range(0.0f, 3.0f)]

public float contrast = 1.0f;

// Start is called before the first frame update

void Start()

{

}

// Update is called once per frame

void Update()

{

}

// Called by Unity after the camera finishes rendering: apply the effect to the screen image

private void OnRenderImage(RenderTexture source, RenderTexture destination)

{

if (material != null)

{

material.SetFloat("_Brightness", brightness);

material.SetFloat("_Saturation", saturation);

material.SetFloat("_Contrast", contrast);

Graphics.Blit(source, destination, material);

}

else

{

Graphics.Blit(source, destination);

}

}

// Public accessor for the private material

public Material material

{

get

{

// Create briSatConMaterial from briSatConShader (reused if it already exists)

briSatConMaterial = CheckShaderAndCreateMaterial(briSatConShader, briSatConMaterial);

return briSatConMaterial;

}

}

}

Shader:

// Upgrade NOTE: replaced 'mul(UNITY_MATRIX_MVP,*)' with 'UnityObjectToClipPos(*)'

Shader "Custom/Chapter12-BrightnessSaturationAndContrast"

{

Properties

{

_MainTex ("Albedo (RGB)", 2D) = "white" {}

}

SubShader

{

Pass{

// Standard post-processing state: always pass the depth test, no culling, no depth writes

ZTest Always Cull Off ZWrite Off

CGPROGRAM

#pragma vertex vert

#pragma fragment frag

#include "UnityCG.cginc"

sampler2D _MainTex;

half _Brightness;

half _Saturation;

half _Contrast;

struct v2f{

float4 pos:SV_POSITION;

half2 uv:TEXCOORD0;

};

// appdata_img is a built-in struct that contains only the vertex position and texture coordinates

v2f vert(appdata_img v){

v2f i;

i.pos=UnityObjectToClipPos(v.vertex);

i.uv=v.texcoord;

return i;

}

fixed4 frag(v2f i):SV_Target{

fixed4 renderTex=tex2D(_MainTex,i.uv);

// Brightness: scale the original color directly

fixed3 finalColor =renderTex.rgb*_Brightness;

// Saturation: first compute the luminance of the original color

fixed luminance =renderTex.r*0.2125+renderTex.g*0.7154+renderTex.b*0.0721;

fixed3 luminanceColor =fixed3(luminance,luminance,luminance);

// Interpolate between the grayscale color and finalColor by _Saturation

finalColor=lerp(luminanceColor,finalColor,_Saturation);

// Contrast: interpolate between mid-gray (0.5) and finalColor by _Contrast

fixed3 avgColor=fixed3(0.5,0.5,0.5);

finalColor=lerp(avgColor,finalColor,_Contrast);

// Keep the alpha of the sampled color

return fixed4(finalColor,renderTex.a);

}

ENDCG

}

}

FallBack Off

}
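To sanity-check the per-pixel math away from the GPU, here is a minimal Python sketch that mirrors the fragment shader above for one RGB pixel with channels in [0, 1]. The function names are hypothetical; `lerp` reproduces HLSL's `lerp(a, b, t) = a + (b - a) * t`. Like the shader, it computes the luminance from the original color and does not clamp the result.

```python
# Luminance weights used by the shader
LUMA = (0.2125, 0.7154, 0.0721)


def lerp(a, b, t):
    """HLSL-style linear interpolation: a + (b - a) * t."""
    return a + (b - a) * t


def bri_sat_con(rgb, brightness=1.0, saturation=1.0, contrast=1.0):
    """Apply brightness, saturation and contrast to one (r, g, b) tuple."""
    # Brightness: scale the original color
    color = [c * brightness for c in rgb]
    # Saturation: luminance of the ORIGINAL color, then lerp gray -> color
    lum = sum(w * c for w, c in zip(LUMA, rgb))
    color = [lerp(lum, c, saturation) for c in color]
    # Contrast: lerp from mid-gray 0.5 -> color
    color = [lerp(0.5, c, contrast) for c in color]
    return tuple(color)


print(bri_sat_con((0.8, 0.4, 0.2), saturation=0.0))
```

Setting saturation to 0 yields a gray pixel equal to the luminance, and contrast 0 collapses every pixel to mid-gray (0.5, 0.5, 0.5).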

Edge detection

Principle:

Convolve the image with an edge-detection operator (a convolution kernel).

Common edge-detection convolution kernels:

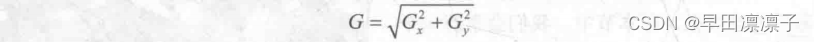

An edge is a place where neighboring pixels differ sharply, so each operator uses two kernels, one for the horizontal direction and one for the vertical direction, giving two gradients, Gx and Gy.

The overall gradient G is usually computed in one of two ways: G = sqrt(Gx² + Gy²), or, to avoid the square root, the approximation G = |Gx| + |Gy|, which is what the shader below uses.

The larger the gradient, the more likely the pixel lies on an edge.
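The two-kernel idea can be sketched in Python for a single 3x3 neighborhood. This minimal sketch assumes the luminance values are already computed and listed row by row, in the same order as `uv[0]`..`uv[8]` in the shader; `sobel_edge` is a hypothetical helper that, like the shader's `Sobel` function, uses |Gx| + |Gy| and returns `1 - |Gx| - |Gy|`, so 1 means "no edge".

```python
# Row-major 3x3 kernels, identical to Gx and Gy in the shader
GX = (-1, -2, -1, 0, 0, 0, 1, 2, 1)
GY = (-1, 0, 1, -2, 0, 2, -1, 0, 1)


def sobel_edge(lum9):
    """lum9: 9 luminance values of the 3x3 neighborhood, row by row."""
    edge_x = sum(l * k for l, k in zip(lum9, GX))
    edge_y = sum(l * k for l, k in zip(lum9, GY))
    # Cheap gradient magnitude |Gx| + |Gy|, inverted like the shader
    return 1 - abs(edge_x) - abs(edge_y)


flat = [0.5] * 9                    # uniform area: no gradient
step = [0, 0, 0, 0, 0, 0, 1, 1, 1]  # bright bottom row: a horizontal edge
print(sobel_edge(flat), sobel_edge(step))
```

A uniform patch gives 1 (no edge); a hard step gives a value below 0, which the shader passes straight to `lerp` without clamping.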

Script:

using System.Collections;

using System.Collections.Generic;

using UnityEngine;

public class EdgeDetection : PostEffectsBase

{

public Shader edgeDetectShader;

private Material edgeDetectMaterial;

// Adjustable effect parameters that are passed to the shader

// Edges-only factor: 0 draws the edges over the original rendered image, 1 shows only the edges on the background color

[Range(0.0f, 1.0f)]

public float edgesOnly = 0.0f;

// Edge line color

public Color edgeColor = Color.black;

// The background color

public Color backgroundColor = Color.white;

public Material material

{

get

{

edgeDetectMaterial = CheckShaderAndCreateMaterial(edgeDetectShader, edgeDetectMaterial);

return edgeDetectMaterial;

}

}

// Start is called before the first frame update

void Start()

{

}

// Update is called once per frame

void Update()

{

}

private void OnRenderImage(RenderTexture source, RenderTexture destination)

{

if (material != null)

{

material.SetFloat("_EdgeOnly", edgesOnly);

material.SetColor("_EdgeColor", edgeColor);

material.SetColor("_BackgroundColor", backgroundColor);

Graphics.Blit(source, destination, material);

}

else

{

Graphics.Blit(source, destination);

}

}

}

Shader:

// Upgrade NOTE: replaced 'mul(UNITY_MATRIX_MVP,*)' with 'UnityObjectToClipPos(*)'

Shader "Custom/Chapter12_EdgeDetection"

{

Properties

{

_MainTex ("Base (RGB)", 2D) = "white" {}

}

SubShader

{

Pass{

ZTest Always Cull Off ZWrite Off

CGPROGRAM

#pragma vertex vert

#pragma fragment frag

#include "UnityCG.cginc"

sampler2D _MainTex;

// Texel size of _MainTex, filled in by Unity: (1/width, 1/height, width, height)

half4 _MainTex_TexelSize;

// 0: edges drawn over the original image; 1: edges only

half _EdgeOnly;

half4 _EdgeColor;

// Background color used when only the edges are shown

half4 _BackgroundColor;

struct v2f{

float4 pos:SV_POSITION;

half2 uv[9]:TEXCOORD0;

};

v2f vert(appdata_img i){

v2f o;

o.pos=UnityObjectToClipPos(i.vertex);

half2 uv=i.texcoord;

// Normally only one texture coordinate is needed, but the convolution kernel samples the 3x3 neighborhood, so store 9 texture coordinates

o.uv[0]=uv+_MainTex_TexelSize.xy*half2(-1,-1);

o.uv[1]=uv+_MainTex_TexelSize.xy*half2(0,-1);

o.uv[2]=uv+_MainTex_TexelSize.xy*half2(1,-1);

o.uv[3]=uv+_MainTex_TexelSize.xy*half2(-1,0);

o.uv[4]=uv+_MainTex_TexelSize.xy*half2(0,0);

o.uv[5]=uv+_MainTex_TexelSize.xy*half2(1,0);

o.uv[6]=uv+_MainTex_TexelSize.xy*half2(-1,1);

o.uv[7]=uv+_MainTex_TexelSize.xy*half2(0,1);

o.uv[8]=uv+_MainTex_TexelSize.xy*half2(1,1);

return o;

}

// Calculate the brightness value of the color

fixed luminance(fixed4 color){

return 0.2125*color.r+0.7154*color.g+0.0721*color.b;

}

half Sobel(v2f i){

// Sobel convolution kernels (3x3, stored row by row)

const half Gx[9]={

-1,-2,-1,0,0,0,1,2,1};

const half Gy[9]={

-1,0,1,-2,0,2,-1,0,1};

// Luminance of the current texel

half texColor;

// Horizontal and vertical gradient values

half edgeX=0;

half edgeY=0;

for(int j=0;j<9;j++){

texColor=luminance(tex2D(_MainTex,i.uv[j]));

edgeX+=texColor*Gx[j];

edgeY+=texColor*Gy[j];

}

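// Approximate the gradient as |Gx| + |Gy| and invert it: the larger the gradient, the smaller the returned value

return 1-abs(edgeX)-abs(edgeY);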

}

fixed4 frag(v2f i):SV_Target{

half edge =Sobel(i);

// A larger edge value means a smaller gradient, so more of the original image color is kept

fixed4 withEdgeColor =lerp(_EdgeColor,tex2D(_MainTex,i.uv[4]),edge);

// A larger edge value means a smaller gradient, so more of the background color is used

fixed4 onlyEdgeColor =lerp(_EdgeColor,_BackgroundColor,edge);

// Finally, interpolate between the two results by _EdgeOnly to get the final color

fixed4 c=lerp(withEdgeColor,onlyEdgeColor,_EdgeOnly);

return c;

}

ENDCG

}

}

FallBack "Diffuse"

}
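The final mixing in `frag` is three `lerp` calls. Here is a minimal Python sketch of that logic for one RGBA color, assuming colors are 4-tuples in [0, 1] and `edge` is in [0, 1]; the helper names are hypothetical.

```python
def lerp(a, b, t):
    """HLSL-style linear interpolation: a + (b - a) * t."""
    return a + (b - a) * t


def lerp_rgba(a, b, t):
    return tuple(lerp(x, y, t) for x, y in zip(a, b))


def composite(edge, src, edge_color, background, edge_only):
    # Edges drawn over the original image
    with_edge = lerp_rgba(edge_color, src, edge)
    # Edges drawn over a flat background color
    only_edge = lerp_rgba(edge_color, background, edge)
    # _EdgeOnly blends between the two versions
    return lerp_rgba(with_edge, only_edge, edge_only)
```

With `edge_only = 0`, a pixel with no edge (`edge = 1`) keeps its original color; with `edge_only = 1`, the same pixel becomes the background color.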

Gaussian blur

There are several ways to blur an image:

- Median blur: sort the pixels in the neighborhood and use the median as this pixel's color.

- Mean (box) blur: add up the pixels in the neighborhood and divide by their count; the mean becomes this pixel's color.

Gaussian blur:

It uses a Gaussian kernel whose weights come from the Gaussian function G(x, y) = 1/(2πσ²) · e^(-(x² + y²)/(2σ²)), where σ is the standard deviation. The weights are normalized to sum to 1, so the blur does not change the overall brightness of the image.

Here x and y are the integer distances from the current position to the center of the kernel: the farther a pixel is from the center, the smaller its influence; the closer, the larger.
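A minimal Python sketch that builds a 5x5 Gaussian kernel by sampling G(x, y) = e^(-(x² + y²)/(2σ²)) / (2πσ²) at integer offsets x, y in [-2, 2] and then renormalizing, since the raw samples of a truncated kernel do not sum exactly to 1. σ = 1 is an assumption; the article does not specify it.

```python
import math


def gaussian_kernel_2d(size=5, sigma=1.0):
    """Sample G(x, y) at integer offsets from the center, then normalize to sum 1."""
    half = size // 2
    norm = 1.0 / (2 * math.pi * sigma * sigma)  # cancels out after renormalizing
    k = [[norm * math.exp(-(x * x + y * y) / (2 * sigma * sigma))
          for x in range(-half, half + 1)]
         for y in range(-half, half + 1)]
    total = sum(sum(row) for row in k)
    return [[v / total for v in row] for row in k]


k = gaussian_kernel_2d()
print(round(k[2][2], 4), round(sum(map(sum, k)), 6))
```

The center weight is the largest and the weights fall off symmetrically with distance, which is the "closer means more influence" behavior described above.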

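Because the 2D Gaussian is separable, the square kernel can be replaced by one horizontal and one vertical 1D kernel applied one after the other, with the same result. For a 5×5 kernel this cuts the samples per pixel from 25 to 10.

Each weight of the horizontal kernel is the sum of one column of the 2D kernel, and each weight of the vertical kernel is the sum of one row.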
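Separability can be checked numerically. This Python sketch (assuming σ = 1 and a 5-tap kernel) builds the normalized 1D Gaussian kernel, forms the 5x5 kernel as its outer product, and confirms that summing each column gives back the 1D weights. With σ = 1 the rounded weights come out as 0.0545, 0.2442, 0.4026, which match the weights used in the shader below.

```python
import math


def gaussian_kernel_1d(size=5, sigma=1.0):
    """Normalized 1D Gaussian weights at integer offsets -size//2 .. size//2."""
    half = size // 2
    w = [math.exp(-(x * x) / (2 * sigma * sigma)) for x in range(-half, half + 1)]
    total = sum(w)
    return [v / total for v in w]


def outer(a, b):
    """Outer product: the 2D kernel implied by vertical weights a and horizontal weights b."""
    return [[ai * bj for bj in b] for ai in a]


w1 = gaussian_kernel_1d()
k2 = outer(w1, w1)
# Summing each column of the 2D kernel recovers the horizontal 1D weights
col_sums = [sum(row[j] for row in k2) for j in range(len(w1))]
print([round(v, 4) for v in w1])
```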

Script:

using System.Collections;

using System.Collections.Generic;

using UnityEngine;

public class GaussianBlur : PostEffectsBase

{

public Shader gaussianBlurShader;

private Material gaussianBlurMaterial = null;

// Number of blur iterations

[Range(0, 4)]

public int iterations = 3;

// Blur spread: how much the sampling distance grows with each iteration

[Range(0.2f, 3.0f)]

public float blurSpread = 0.6f;

// Downsampling factor: larger values mean fewer pixels to process and a stronger blur, but too large a value can make the image look pixelated

[Range(1, 8)]

public int downSample = 2;

public Material material

{

get

{

gaussianBlurMaterial = CheckShaderAndCreateMaterial(gaussianBlurShader, gaussianBlurMaterial);

return gaussianBlurMaterial;

}

}

// Start is called before the first frame update

void Start()

{

}

// Update is called once per frame

void Update()

{

}

public void OnRenderImage(RenderTexture source, RenderTexture destination)

{

if (material != null)

{

int rtW = source.width / downSample;

int rtH = source.height / downSample;

// Allocate a temporary, downsampled render texture buffer0

RenderTexture buffer0 = RenderTexture.GetTemporary(rtW, rtH, 0);

// Set the filtering mode to bilinear

buffer0.filterMode = FilterMode.Bilinear;

// Copy (and downsample) the screen image from source into buffer0

Graphics.Blit(source, buffer0);

for(int i = 0; i < iterations; i++)

{

// Pass this iteration's blur size to the shader

material.SetFloat("_BlurSize", 1.0f + i * blurSpread);

// Allocate buffer1 and render the vertical blur pass (pass 0) from buffer0 into it

RenderTexture buffer1 = RenderTexture.GetTemporary(rtW, rtH, 0);

Graphics.Blit(buffer0, buffer1, material,0);

// Release buffer0 and make the blurred result the new buffer0

RenderTexture.ReleaseTemporary(buffer0);

buffer0 = buffer1;

// Allocate a new buffer1 and render the horizontal blur pass (pass 1) into it

buffer1 = RenderTexture.GetTemporary(rtW, rtH, 0);

Graphics.Blit(buffer0, buffer1, material,1);

RenderTexture.ReleaseTemporary(buffer0);

// buffer0 now holds this iteration's result, which is the input to the next iteration

buffer0 = buffer1;

}

// Copy the blurred result to the destination (the screen)

Graphics.Blit(buffer0, destination);

RenderTexture.ReleaseTemporary(buffer0);

}

else

{

Graphics.Blit(source, destination);

}

}

}
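The buffer juggling in `OnRenderImage` is a ping-pong pattern: each pass renders buffer0 into a fresh buffer1, releases buffer0, and swaps. The Python simulation below is only a sketch: `TempPool` and `blur_schedule` are hypothetical stand-ins for `RenderTexture.GetTemporary`/`ReleaseTemporary` and the script's loop. They count live buffers, so you can check that every temporary is released, and record the pass index and `_BlurSize` of each blit.

```python
class TempPool:
    """Hypothetical stand-in for RenderTexture.GetTemporary/ReleaseTemporary."""

    def __init__(self):
        self.live = set()
        self.next_id = 0

    def get(self, w, h):
        self.next_id += 1
        buf = (self.next_id, w, h)
        self.live.add(buf)
        return buf

    def release(self, buf):
        self.live.remove(buf)  # raises KeyError on a double release


def blur_schedule(src_w, src_h, iterations=3, blur_spread=0.6, down_sample=2):
    pool = TempPool()
    rt_w, rt_h = src_w // down_sample, src_h // down_sample
    blits = []                      # (pass index, _BlurSize) for each blur blit
    buffer0 = pool.get(rt_w, rt_h)  # Blit(source, buffer0): downsample
    for i in range(iterations):
        blur_size = 1.0 + i * blur_spread
        buffer1 = pool.get(rt_w, rt_h)
        blits.append((0, blur_size))  # vertical pass
        pool.release(buffer0)
        buffer0 = buffer1
        buffer1 = pool.get(rt_w, rt_h)
        blits.append((1, blur_size))  # horizontal pass
        pool.release(buffer0)
        buffer0 = buffer1
    pool.release(buffer0)           # after Blit(buffer0, destination)
    return (rt_w, rt_h), blits, len(pool.live)
```

For a 1920x1080 source with the default settings, the blur runs at 960x540 with `_BlurSize` values of about 1.0, 1.6 and 2.2, and no temporary buffers are left allocated.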

Shader:

// Upgrade NOTE: replaced 'mul(UNITY_MATRIX_MVP,*)' with 'UnityObjectToClipPos(*)'

Shader "Custom/Chapter12-GaussianBlur"

{

Properties

{

_MainTex ("Base (RGB)", 2D) = "white" {}

_BlurSize("_BlurSize",Float)=1.0

}

SubShader

{

// Code between CGINCLUDE and ENDCG is shared by all passes, similar to a header file

CGINCLUDE

#include "UnityCG.cginc"

sampler2D _MainTex;

half4 _MainTex_TexelSize;

float _BlurSize;

// Vertex-to-fragment struct

struct v2f{

float4 pos:SV_POSITION;

// The five sampling coordinates

half2 uv[5]:TEXCOORD0;

};

// Vertex shader for the vertical blur pass

v2f vertBlurVertical(appdata_img v){

v2f o;

o.pos = UnityObjectToClipPos(v.vertex);

half2 uv=v.texcoord;

// Store five texture coordinates instead of one: the center plus offsets of 1 and 2 texels on each side

o.uv[0] = uv;

o.uv[1] = uv + float2(0.0, _MainTex_TexelSize.y * 1.0) * _BlurSize;

o.uv[2] = uv - float2(0.0, _MainTex_TexelSize.y * 1.0) * _BlurSize;

o.uv[3] = uv + float2(0.0, _MainTex_TexelSize.y * 2.0) * _BlurSize;

o.uv[4] = uv - float2(0.0, _MainTex_TexelSize.y * 2.0) * _BlurSize;

return o;

}

// Vertex shader for the horizontal blur pass

v2f vertBlurHorizontal(appdata_img v){

v2f o;

o.pos = UnityObjectToClipPos(v.vertex);

half2 uv=v.texcoord;

o.uv[0] = uv;

o.uv[1] = uv + float2(_MainTex_TexelSize.x * 1.0,0.0) * _BlurSize;

o.uv[2] = uv - float2(_MainTex_TexelSize.x * 1.0,0.0) * _BlurSize;

o.uv[3] = uv + float2(_MainTex_TexelSize.x * 2.0,0.0) * _BlurSize;

o.uv[4] = uv - float2(_MainTex_TexelSize.x * 2.0,0.0) * _BlurSize;

return o;

}

// Fragment shader shared by both passes

fixed4 frag(v2f i):SV_Target{

// The 1D Gaussian kernel is symmetric, so only 3 of its 5 weights need to be stored (center, ±1, ±2)

float weight[3]={

0.4026,0.2442,0.0545};

// The center weight has no mirrored partner, so apply it separately

fixed3 sum=tex2D(_MainTex,i.uv[0]).rgb*weight[0];

// Add the remaining four samples in mirrored pairs: uv[1]/uv[2] use weight[1], uv[3]/uv[4] use weight[2]

for(int it=1;it<3;it++){

sum +=tex2D(_MainTex,i.uv[it*2-1]).rgb*weight[it];

sum +=tex2D(_MainTex,i.uv[it*2]).rgb*weight[it];

}

return fixed4(sum,1.0);

}

ENDCG

ZTest Always Cull Off ZWrite Off

Pass{

// Name the pass so other shaders can reuse it with UsePass

NAME "GAUSSIAN_BLUR_VERTICAL"

CGPROGRAM

#pragma vertex vertBlurVertical

#pragma fragment frag

ENDCG

}

Pass{

// Name the pass so other shaders can reuse it with UsePass

NAME "GAUSSIAN_BLUR_HORIZONTAL"

CGPROGRAM

#pragma vertex vertBlurHorizontal

#pragma fragment frag

ENDCG

}

}

FallBack Off

}
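In the fragment shader, uv[0] is the center, uv[1]/uv[2] are ±1 texel and uv[3]/uv[4] are ±2 texels, so iteration `it` of the loop has to read `i.uv[it*2-1]` and `i.uv[it*2]`. The Python sketch below uses hypothetical helpers `tap_indices` and `blur_1d` that mirror the shader's indexing and list which coordinates each scheme samples: the `i.uv[it]` form reads uv[2] twice and never reads uv[3].

```python
WEIGHTS = (0.4026, 0.2442, 0.0545)  # center, +-1 texel, +-2 texels (from the shader)


def tap_indices(fixed=True):
    """Return (uv index, weight index) pairs visited by the fragment loop."""
    taps = [(0, 0)]  # the center sample uses weight[0]
    for it in range(1, 3):
        # fixed: uv[1] then uv[3]; unfixed: uv[1] then uv[2] (uv[2] repeats)
        first = it * 2 - 1 if fixed else it
        taps.append((first, it))
        taps.append((it * 2, it))
    return taps


def blur_1d(samples, fixed=True):
    """samples: values at uv[0..4] = center, +1, -1, +2, -2 texels."""
    return sum(samples[u] * WEIGHTS[w] for u, w in tap_indices(fixed))
```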

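Code defined between CGINCLUDE and ENDCG does not belong to any single Pass; Unity includes it in every Pass of the SubShader, so each Pass only has to name the functions it uses in its #pragma vertex and #pragma fragment directives.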

Bloom effect

Implementation steps:

- Using a threshold, extract the brighter areas of the image into a render texture.

- Blur this render texture with a Gaussian blur so that the bright areas spread outward.

- Blend the blurred texture with the original image .
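The three steps above can be sketched in Python on a single row of pixels, assuming linear RGB tuples in a plain list. It reuses the shader's luminance weights, its threshold formula, and the five blur weights; the single 5-tap blur with clamped borders is a simplification of the downsampled, iterated two-pass blur in the script, and all function names are hypothetical.

```python
LUMA = (0.2125, 0.7154, 0.0721)
WEIGHTS = (0.0545, 0.2442, 0.4026, 0.2442, 0.0545)  # 5-tap Gaussian, sigma = 1


def luminance(rgb):
    return sum(w * c for w, c in zip(LUMA, rgb))


def extract_bright(rgb, threshold):
    # Like fragExtractBright: scale by clamp(luminance - threshold, 0, 1)
    val = min(max(luminance(rgb) - threshold, 0.0), 1.0)
    return tuple(c * val for c in rgb)


def blur_row(row):
    # 5-tap blur; indices outside the row are clamped to the border pixel
    n = len(row)
    out = []
    for i in range(n):
        acc = [0.0, 0.0, 0.0]
        for k, w in enumerate(WEIGHTS):
            j = min(max(i + k - 2, 0), n - 1)
            for ch in range(3):
                acc[ch] += row[j][ch] * w
        out.append(tuple(acc))
    return out


def bloom_row(row, threshold=0.6):
    bright = [extract_bright(p, threshold) for p in row]  # step 1
    glow = blur_row(bright)                                # step 2
    # Step 3, like fragBloom: add the blurred bright areas to the original
    return [tuple(a + b for a, b in zip(p, g)) for p, g in zip(row, glow)]
```

Pixels below the threshold contribute nothing, so a fully dark row comes back unchanged, while a single bright pixel brightens its neighbors.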

Script:

using System.Collections;

using System.Collections.Generic;

using UnityEngine;

public class Bloom : PostEffectsBase

{

public Shader bloomShader;

private Material bloomMaterial = null;

public Material material

{

get

{

bloomMaterial = CheckShaderAndCreateMaterial(bloomShader, bloomMaterial);

return bloomMaterial;

}

}

// Number of blur iterations

[Range(0, 4)]

public int iterations = 3;

// Blur spread: how much the sampling distance grows with each iteration

[Range(0.2f, 3.0f)]

public float blurSpread = 0.6f;

// Downsampling factor

[Range(1, 8)]

public int downSample = 2;

// Luminance threshold above which pixels are extracted as bright areas

[Range(0.0f,4.0f)]

public float luminanceThreshold = 0.6f;

// Start is called before the first frame update

void Start()

{

}

// Update is called once per frame

void Update()

{

}

public void OnRenderImage(RenderTexture source, RenderTexture destination)

{

if (material != null)

{

// Pass parameters to the shader

material.SetFloat("_LuminanceThreshold", luminanceThreshold);

int rtW = source.width / downSample;

int rtH = source.height / downSample;

RenderTexture buffer0 = RenderTexture.GetTemporary(rtW, rtH, 0);

buffer0.filterMode = FilterMode.Bilinear;

// Use the shader's first pass (pass 0) to extract the bright areas into buffer0

Graphics.Blit(source, buffer0, material, 0);

for(int i = 0; i < iterations; i++)

{

material.SetFloat("_BlurSize", 1.0f + i * blurSpread);

RenderTexture buffer1 = RenderTexture.GetTemporary(rtW, rtH, 0);

Graphics.Blit(buffer0, buffer1, material, 1);

RenderTexture.ReleaseTemporary(buffer0);

buffer0 = buffer1;

buffer1 = RenderTexture.GetTemporary(rtW, rtH, 0);

Graphics.Blit(buffer0, buffer1, material, 2);

RenderTexture.ReleaseTemporary(buffer0);

buffer0 = buffer1;

}

// Pass the blurred bright-area image to the shader as _Bloom

material.SetTexture("_Bloom", buffer0);

// Use the fourth pass (pass 3) to blend the blurred image with the original

Graphics.Blit(source, destination, material, 3);

RenderTexture.ReleaseTemporary(buffer0);

}

else

{

Graphics.Blit(source, destination);

}

}

}

Shader:

// Upgrade NOTE: replaced 'mul(UNITY_MATRIX_MVP,*)' with 'UnityObjectToClipPos(*)'

Shader "Custom/Chapter12-Bloom"

{

Properties

{

_MainTex ("Base (RGB)", 2D) = "white" {}

_Bloom("Bloom (RGB)",2D)="black" {}

_LuminanceThreshold("Luminance Threshold",Float)=0.5

_BlurSize("Blur Size",Float)=1.0

}

SubShader

{

CGINCLUDE

#include "UnityCG.cginc"

sampler2D _MainTex;

half4 _MainTex_TexelSize;

sampler2D _Bloom;

float _LuminanceThreshold;

float _BlurSize;

struct v2f{

float4 pos:SV_POSITION;

half2 uv:TEXCOORD0;

};

// Vertex shader for the bright-area extraction pass

v2f vertExtractBright(appdata_img v){

v2f o;

o.pos =UnityObjectToClipPos(v.vertex);

o.uv = v.texcoord;

return o;

}

float luminance(fixed4 color){

return color.r*0.2125+color.g*0.7154+color.b*0.0721;

}

// Fragment shader for the bright-area extraction pass

fixed4 fragExtractBright(v2f i):SV_Target{

fixed4 c =tex2D(_MainTex,i.uv);

// val = luminance - threshold, clamped to [0, 1]: pixels below the threshold become black, brighter pixels keep more of their color, up to the full original color

fixed val=clamp(luminance(c)-_LuminanceThreshold,0.0,1.0);

return c*val;

}

// Vertex-to-fragment struct for the blend pass

struct v2fbloom{

float4 pos:SV_POSITION;

half4 uv:TEXCOORD0;

};

// Vertex shader for the blend pass: uv.xy samples _MainTex, uv.zw samples _Bloom

v2fbloom vertBloom(appdata_img v){

v2fbloom o;

o.pos =UnityObjectToClipPos(v.vertex);

o.uv.xy =v.texcoord;

o.uv.zw =v.texcoord;

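// On platforms where UV (0,0) is at the top (e.g. Direct3D), flip the _Bloom UV when the source texture is flipped (negative texel height)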

#if UNITY_UV_STARTS_AT_TOP

if(_MainTex_TexelSize.y<0.0){

o.uv.w=1.0-o.uv.w;

}

#endif

return o;

}

// Fragment shader: add the blurred bright areas to the original image

fixed4 fragBloom(v2fbloom i):SV_Target{

return tex2D(_MainTex,i.uv.xy)+tex2D(_Bloom,i.uv.zw);

}

ENDCG

ZTest Always Cull Off ZWrite Off

// Pass 0: extract the bright areas

Pass{

CGPROGRAM

#pragma vertex vertExtractBright

#pragma fragment fragExtractBright

ENDCG

}

// Passes 1 and 2: reuse the vertical and horizontal Gaussian blur passes

UsePass "Custom/Chapter12-GaussianBlur/GAUSSIAN_BLUR_VERTICAL"

UsePass "Custom/Chapter12-GaussianBlur/GAUSSIAN_BLUR_HORIZONTAL"

// Pass 3: blend the blurred bright areas with the original image

Pass{

CGPROGRAM

#pragma vertex vertBloom

#pragma fragment fragBloom

ENDCG

}

}

FallBack Off

}
