Practice Data Lake Iceberg, Lesson 37: ENFORCED vs. NOT ENFORCED Primary Key Test on an Iceberg Table Written from Kafka

2022-07-23 21:36:00 【*Spark*】

List of articles

Practice Data Lake Iceberg, Lesson 1: Introduction
Practice Data Lake Iceberg, Lesson 2: Iceberg's underlying data format on Hadoop
Practice Data Lake Iceberg, Lesson 3: Reading data from Kafka into Iceberg with SQL in the SQL client
Practice Data Lake Iceberg, Lesson 4: Reading data from Kafka into Iceberg with SQL in the SQL client (upgraded to Flink 1.12.7)
Practice Data Lake Iceberg, Lesson 5: Hive catalog features
Practice Data Lake Iceberg, Lesson 6: Fixing failures when writing from Kafka to Iceberg
Practice Data Lake Iceberg, Lesson 7: Writing to Iceberg in real time
Practice Data Lake Iceberg, Lesson 8: Integrating Hive with Iceberg
Practice Data Lake Iceberg, Lesson 9: Merging small files
Practice Data Lake Iceberg, Lesson 10: Deleting snapshots
Practice Data Lake Iceberg, Lesson 11: Testing the complete partitioned-table workflow (generating data, creating the table, merging, deleting snapshots)
Practice Data Lake Iceberg, Lesson 12: What is a catalog?
Practice Data Lake Iceberg, Lesson 13: Metadata many times larger than the data files
Practice Data Lake Iceberg, Lesson 14: Merging metadata (fixing metadata growth over time)
Practice Data Lake Iceberg, Lesson 15: Installing Spark and integrating Iceberg (Jersey package conflict)
Practice Data Lake Iceberg, Lesson 16: Opening the door to Iceberg with Spark 3
Practice Data Lake Iceberg, Lesson 17: Configuring Hadoop 2.7 and Spark 3 on YARN to run Iceberg
Practice Data Lake Iceberg, Lesson 18: Startup commands for interacting with Iceberg from multiple clients (common commands)
Practice Data Lake Iceberg, Lesson 19: Flink count on Iceberg returns no result
Practice Data Lake Iceberg, Lesson 20: Flink + Iceberg CDC scenario (version issues, test failed)
Practice Data Lake Iceberg, Lesson 21: Flink 1.13.5 + Iceberg 0.13.1 CDC (INSERT test succeeded, update operations failed)
Practice Data Lake Iceberg, Lesson 22: Flink 1.13.5 + Iceberg 0.13.1 CDC (CRUD test succeeded)
Practice Data Lake Iceberg, Lesson 23: Restarting Flink SQL from a checkpoint
Practice Data Lake Iceberg, Lesson 24: Detailed analysis of Iceberg metadata
Practice Data Lake Iceberg, Lesson 25: Running Flink SQL inserts, deletes and updates in the background
Practice Data Lake Iceberg, Lesson 26: How to configure checkpoints
Practice Data Lake Iceberg, Lesson 27: Restarting a failed Flink CDC test job and resuming from the last checkpoint
Practice Data Lake Iceberg, Lesson 28: Deploying packages missing from public repositories to the local repository
Practice Data Lake Iceberg, Lesson 29: Getting a Flink job's jobId cleanly and efficiently
Practice Data Lake Iceberg, Lesson 30: mysql->iceberg, time zone issues across different clients
Practice Data Lake Iceberg, Lesson 31: Using the flink-streaming-platform-web tool from GitHub to manage Flink job flows and test CDC restart scenarios
Practice Data Lake Iceberg, Lesson 32: Persisting DDL statements through the Hive catalog
Practice Data Lake Iceberg, Lesson 33: Upgrading Flink to 1.14, whose built-in functions support JSON
Practice Data Lake Iceberg, Lesson 34: Iceberg-based unified streaming/batch architecture: streaming architecture test
Practice Data Lake Iceberg, Lesson 35: Iceberg-based unified streaming/batch architecture: testing whether incremental reads are full reads or incremental only
Practice Data Lake Iceberg, Lesson 36: Iceberg-based unified streaming/batch architecture: testing whether the update mysql select from iceberg syntax performs incremental updates
Practice Data Lake Iceberg, Lesson 37: ENFORCED and NOT ENFORCED primary key tests on an Iceberg table written from Kafka

Practice Data Lake Iceberg: full table of contents


Preface

This lesson tests the following scenario. Iceberg reads data from Kafka. Can it automatically update existing Iceberg rows, based on the id carried in the Kafka records, as the data enters the lake?

Test result: it cannot.

1. Test Approach

Produce data in Kafka and write it into Iceberg, with a primary key declared on the Iceberg table. Then observe whether the rows are appended or updated.
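The two possible outcomes can be stated precisely with a toy model. This is a minimal Python sketch using plain lists and dicts, not Iceberg or Flink. In append mode every incoming record becomes a new row. In upsert mode a record replaces any existing row with the same id. The function names and the sample records are hypothetical and chosen only for illustration.

```python
from typing import List, Tuple

Row = Tuple[int, str]  # (id, data), mirroring the test table's columns


def append_write(table: List[Row], records: List[Row]) -> List[Row]:
    """Append mode: every incoming record becomes a new row, even for a repeated id."""
    return table + records


def upsert_write(table: List[Row], records: List[Row]) -> List[Row]:
    """Upsert mode: a record replaces the existing row with the same id."""
    rows = dict(table)  # id -> data; assumes ids in `table` are already unique
    for row_id, data in records:
        rows[row_id] = data  # the latest record for an id wins
    return list(rows.items())


# Hypothetical input: id 1 arrives twice with different data.
records = [(1, "a"), (2, "b"), (1, "a2")]
print(append_write([], records))  # [(1, 'a'), (2, 'b'), (1, 'a2')]
print(upsert_write([], records))  # [(1, 'a2'), (2, 'b')]
```

Under append semantics a repeated id shows up as multiple rows. Under upsert semantics it shows up once, with the latest data. That difference is what the query in the results section looks for.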

2. Testing NOT ENFORCED

2.1 Test code

Approach:
1. Select from Kafka.
2. Insert into Iceberg.

The code is as follows:

-- Kafka source table: reads "id,data" CSV records from topic test2_xxzh, starting at the latest offset
CREATE TABLE IF NOT EXISTS KafkaTableTest2_XXZH (
  `id` bigint,
  `data` STRING
) WITH (
  'connector' = 'kafka',
  'topic' = 'test2_xxzh',
  'properties.bootstrap.servers' = 'hadoop101:9092,hadoop102:9092,hadoop103:9092',
  'properties.group.id' = 'testGroup',
  'scan.startup.mode' = 'latest-offset',
  'csv.ignore-parse-errors'='true',
  'format' = 'csv'
);

-- Iceberg catalog backed by the Hive metastore
CREATE CATALOG hive_iceberg_catalog WITH (
  'type'='iceberg',
  'catalog-type'='hive',
  'uri'='thrift://hadoop101:9083',
  'clients'='5',
  'property-version'='1',
  'warehouse'='hdfs:///user/hive/warehouse/hive_iceberg_catalog'
);

use catalog hive_iceberg_catalog;

-- Iceberg format v2 table with a NOT ENFORCED primary key on id
CREATE TABLE IF NOT EXISTS ods_base.IcebergTest2_XXZH (
  `id` bigint,
  `data` STRING,
  primary key (id) not enforced
) with (
  'write.metadata.delete-after-commit.enabled'='true',
  'write.metadata.previous-versions-max'='5',
  'format-version'='2'
);

-- Stream every Kafka record into the Iceberg table
insert into hive_iceberg_catalog.ods_base.IcebergTest2_XXZH select * from default_catalog.default_database.KafkaTableTest2_XXZH;

2.2 Producing Test Data

kafka-console-producer.sh --broker-list hadoop101:9092,hadoop102:9092,hadoop103:9092 --topic test2_xxzh
>1,abc
[2022-07-22 14:55:51,643] WARN [Producer clientId=console-producer] Error while fetching metadata with correlation id 3 : {test2_xxzh=LEADER_NOT_AVAILABLE} (org.apache.kafka.clients.NetworkClient)
>2,bb
>3,cc
>4,dd
>5,ee
>3,cccc
>6,666
>4,ddddd
>
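Before looking at the results, count what was sent: eight records but only six distinct ids, because ids 3 and 4 are each sent twice with different data. If Iceberg upserted by primary key, each repeated id would collapse into one row. In append mode they stay separate. This is a minimal Python sketch. It assumes each console line maps to (id, data) following the CSV layout of KafkaTableTest2_XXZH, and parse_records is a hypothetical helper, not part of Flink or Kafka.

```python
import csv
from typing import List, Tuple


def parse_records(lines: List[str]) -> List[Tuple[int, str]]:
    """Parse "id,data" lines into (id, data) tuples for the (id BIGINT, data STRING) schema.

    Simplification: a line that does not have exactly two fields, or whose id
    is not an integer, is skipped. Flink's 'csv.ignore-parse-errors' differs in
    detail (it can keep the row and set the bad fields to null).
    """
    rows = []
    for fields in csv.reader(lines):
        if len(fields) != 2:
            continue
        try:
            rows.append((int(fields[0]), fields[1]))
        except ValueError:
            continue
    return rows


# The eight lines typed into kafka-console-producer.sh in section 2.2.
produced = ["1,abc", "2,bb", "3,cc", "4,dd", "5,ee", "3,cccc", "6,666", "4,ddddd"]
rows = parse_records(produced)
ids = [row_id for row_id, _ in rows]
print(len(rows), len(set(ids)))  # 8 6
```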

2.3 Results

spark-sql (default)> select * from ods_base.IcebergTest2_XXZH;
22/07/22 15:12:28 WARN HiveConf: HiveConf of name hive.metastore.event.db.notification.api.auth does not exist
id  data
3   cc
4   ddddd
5   ee
3   cccc
6   666
4   dd
Time taken: 0.405 seconds, Fetched 6 row(s)
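A quick way to confirm append behavior is to count rows per primary-key value. Any id with more than one row means no upsert took place. The minimal Python sketch below runs that check over the six rows returned by the spark-sql query above. On the real table the same check can be written in SQL as a GROUP BY id HAVING count(*) > 1 query.

```python
from collections import Counter

# The six rows returned by the spark-sql query in section 2.3, as (id, data).
result = [(3, "cc"), (4, "ddddd"), (5, "ee"), (3, "cccc"), (6, "666"), (4, "dd")]

# Count rows per primary-key value; more than one row per id means no upsert happened.
counts = Counter(row_id for row_id, _ in result)
duplicate_ids = sorted(row_id for row_id, n in counts.items() if n > 1)
print(duplicate_ids)  # [3, 4]
```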

Results in the Flink SQL client:

2.4 Conclusion

Iceberg did not update rows according to the declared primary key (the id coming from Kafka): ids 3 and 4 each appear twice. Iceberg wrote the data in append mode.

3. Switching to ENFORCED: Error

3.1 Test code

Change the Iceberg table's primary key to ENFORCED and rerun:

Flink SQL> CREATE TABLE IF NOT EXISTS KafkaTableTest3_XXZH (
> `id` bigint,
> `data` STRING
> ) WITH (
> 'connector' = 'kafka',
> 'topic' = 'test2_xxzh',
> 'properties.bootstrap.servers' = 'hadoop101:9092,hadoop102:9092,hadoop103:9092',
> 'properties.group.id' = 'testGroup',
> 'scan.startup.mode' = 'latest-offset',
> 'csv.ignore-parse-errors'='true',
> 'format' = 'csv'
> );
>
[INFO] Execute statement succeed.

Flink SQL> CREATE CATALOG hive_iceberg_catalog WITH (
> 'type'='iceberg',
> 'catalog-type'='hive',
> 'uri'='thrift://hadoop101:9083',
> 'clients'='5',
> 'property-version'='1',
> 'warehouse'='hdfs:///user/hive/warehouse/hive_iceberg_catalog'
> );
[INFO] Execute statement succeed.

Flink SQL> use catalog hive_iceberg_catalog;
[INFO] Execute statement succeed.

Flink SQL> CREATE TABLE IF NOT EXISTS ods_base.IcebergTest3_XXZH (
> `id` bigint,
> `data` STRING,
> primary key (id) enforced
> )with(
> 'write.metadata.delete-after-commit.enabled'='true',
> 'write.metadata.previous-versions-max'='5',
> 'format-version'='2'
> );
[ERROR] Could not execute SQL statement. Reason:
org.apache.flink.table.api.ValidationException: Flink doesn't support ENFORCED mode for PRIMARY KEY constraint. ENFORCED/NOT ENFORCED controls if the constraint checks are performed on the incoming/outgoing data. Flink does not own the data therefore the only supported mode is the NOT ENFORCED mode


In short, Flink does not own the data, so NOT ENFORCED is the only supported mode.

Result: the CREATE TABLE statement is rejected, so the ENFORCED variant cannot be run at all.

Conclusion: Iceberg does not update data based on the primary key.

Summary

Data flowing from Kafka into Iceberg is written in append mode.

边栏推荐

- BroadCast(广播)

- C - documents

- 壹沓数字机器人入选Gartner《中国AI市场指南》

- -2021 sorting and sharing of the latest required papers related to comparative learning

- Yushu A1 robot dog gesture control

- The third slam Technology Forum - Professor wuyihong

- Minimum spanning tree: prim

- Day109.尚医通:集成Nacos、医院列表、下拉列表查询、医院上线功能、医院详情查询

- Chapter 2 Regression

- It's good to change jobs for a while, and it's good to change jobs all the time?

猜你喜欢

Basic principle of synchronized lock

How to use cesium knockout?

Protocol buffers 的问题和滥用

Synchro esp32c3 Hardware Configuration Information serial port Print Output

Openlayers instance advanced view positioning advanced view positioning

2022-7-23 12点 程序爱生活 小时线顶背离出现,保持下跌趋势,等待反弹信号出现。

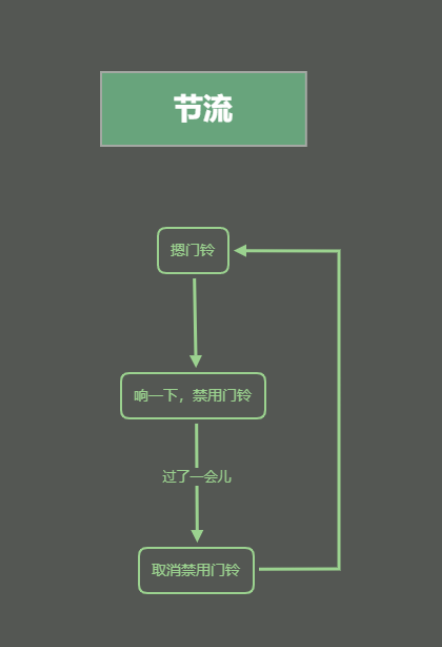

节流和防抖的说明和实现

-2021 sorting and sharing of the latest required papers related to comparative learning

Vite3 learning records

uniapp使用canvas写环形进度条

随机推荐

& 9 nodemon automatic restart tool

Kuberntes云原生实战六 使用Rook搭建Ceph集群

Green Tao theorem (4): energy increment method

The total ranking of blogs is 918

[complex overloaded operator]

High numbers | calculation of double integral 3 | high numbers | handwritten notes

Openlayers instances advanced mapbox vector tiles advanced mapbox vector maps

Proof of green Tao theorem (1): preparation, notation and Gowers norm

博客总排名为918

Protocol buffers 的问题和滥用

Use code to set activity to transparent

[Yugong series] June 2022.Net architecture class 084- micro service topic ABP vNext micro service communication

1309_ Add GPIO flip on STM32F103 and schedule test with FreeRTOS

(note) learning rate setting of optimizer Adam

Scala programming (intermediate advanced experimental application)

Chapter1 数据清洗

BroadCast(广播)

MySql的DDL和DML和DQL的基本语法

Unity—3D数学-Vector3

启牛是什么?请问一下手机开户股票开户安全吗?