当前位置:网站首页>Overview of self attention acceleration methods: Issa, CCNET, cgnl, linformer

Overview of self attention acceleration methods: Issa, CCNET, cgnl, linformer

2022-06-11 04:55:00 【Shenlan Shenyan AI】

Attention The mechanism first came into being NLP The field is proposed , be based on attention Of transformer Structure in recent years NLP On the various tasks of . In visual tasks ,attention Also received a lot of attention , Well known methods include Non-Local Network, Can be in time and space volume Modeling the global relationship in , Good results have been achieved . But in visual tasks self-attention Modules usually require matrix multiplication of large matrices , Video memory takes up a lot and takes a lot of time . So there have been a lot of optimizations in recent years self-attention The method of module speed , This note mainly discusses several related methods , If there is any mistake, please correct it .

Self-Attention brief introduction

Attention Mechanisms can usually be expressed in the form of

among ,

by query, by key, by value. From the perspective of retrieval tasks ,query It's the content to be retrieved ,key It's the index ,value Is the value to be retrieved .attention The process is to calculate query and key The correlation between , get attention map, Then based on the attention map To get value The eigenvalues in . And in the picture below self-attention in ,Q K V All of them are the same feature map.

The image above is a self-attention The basic structure of the module , Input is

, Pass respectively 1x1 Convolution obtains . You can get attention map by . Finally and Do matrix multiplication to get and input shape I want to be the same self-attention feature map.

stay self-attention in , The main reason for the large amount of computation and memory consumption is to generate attention map At the time of the

and final Two steps . about 64 The size of feature map, The size is . therefore ,self-attention Modules are usually placed in the lower resolution features of the second half of the network .

How to optimize attention Memory and computational efficiency within , The method introduced today has two main directions :

change attention In the form of , Avoid direct whole picture attention

Long + Short range attention:Interlaced Sparse Self-Attention

level + vertical attention:Ccnet: Criss-cross attention for semantic segmentation

A2-Nets: Double Attention Networks

Reduce attention A dimension in the calculation process

Reduce N dimension :Linformer: Self-Attention with Linear Complexity

Reduce C dimension : In common use , It's usually C/2 perhaps C/4

other

Optimize GNL:Compact generalized non-local network

Attention Form optimization

ISSA: Interlaced Sparse Self-Attention

The basic idea of the paper : The basic idea of this paper is “ staggered ”. As shown in the figure below , First, through permute take feature To disturb with certain regularity , And then feature map Divide it into several pieces and do it separately self-attention, What you get is long-range Of attention Information ; thereafter , Do it again permute Restore to the original feature location , Block again attention, To obtain the short-range Of attention. By dismantling long/short range Of attention, It can greatly reduce the amount of calculation .

The specific performance is shown in the figure below , It can be seen that , The most obvious decline is the occupancy of video memory , Mainly because of avoiding attention The large matrix in the process . And because the permute,divide It doesn't take up flop, But in inference It takes a certain amount of time , So the actual speed is not flops So much promotion . But overall , On the premise that the effect does not decrease significantly , This speed / The optimization of video memory is excellent .

When reading this article, I feel that I have a strong sense of vision , Then I thought that this was not hw Upper shufflenet Well .

CCNet: Criss-cross attention for semantic segmentation

The main idea of this paper is : The difference with Non-Local The overall situation in attention, In this paper, we propose that we should only do it on the cross corresponding to the feature points attention. Thus, the complexity is reduced from

Down to

CCNet The specific approach is , about

A point on , We can all get the corresponding eigenvectors , For the cross region of this point , We can Extract the corresponding features from , constitute , in the light of and Matrix multiplication , You can get attention map by . Finally, In the same way, cross features are extracted and matrix multiplication is performed , You can get the final result .

So how to get from the cross attention Transition to the big picture attention Well , The method is actually very simple , Just make two crosses attention, Each point can get the global information .

CCNet The theoretical calculation amount of (Flops and memory) Compared with Non-Local It's very advantageous . But the efficiency of cross feature extraction may not be very high , There is no specific code implementation in the paper .

A2-Nets: Double Attention Networks

Of this paper attention Look at the figure below

first feautre gathering, Can be understood as for each channel,softmax Find the most important position , Go again gathering all channel The most important feature in this position ; obtain CxC

the second feautre distribution, Can be understood as for each channel,softmax Find the most important position , And then to each channel This position is assigned a feature .

Of this article attention The way is interesting , It's worth pondering . But in terms of speed NL It should not have been improved much .

Attention Dimension optimization

Linformer: Self-Attention with Linear Complexity

Attention As mentioned above , It can be seen as

, This article is about N Do dimension reduction , take attention Turn into , stay K In the case of constant value , From the complexity of Down to

Most of this article is , Is to prove that this reduction of dimension is similar to the original result , I didn't understand the proof part

Experimental part ,K The bigger the effect, the better , But it's not obvious . That is, dimensionality reduction will have a very slight effect on the effect , At the same time, it's very efficient to increase the speed .

other

CGNL: Compact generalized non-local network

This article is mainly to optimize a more computationally expensive Self-attention Method :Generalized Non-local (GNL). It's not just about doing H W Two spatial On the scale of non-local attention, There's an extra consideration for C dimension . So the complexity is

.

The main idea of this article is : Using Taylor expansion , take

It's like . So we can calculate the last two terms first , Reduced complexity from

This article in the video understanding 、 The experimental results of target detection and other tasks are good , But the speed and experimental results are not analyzed .

author : Lin Tianwei

| About Deep extension technology |

Shenyan technology was founded in 2018 year 1 month , Zhongguancun High tech enterprise , It is an enterprise with the world's leading artificial intelligence technology AI Service experts . In computer vision 、 Based on the core technology of natural language processing and data mining , The company launched four platform products —— Deep extension intelligent data annotation platform 、 Deep extension AI Development platform 、 Deep extension automatic machine learning platform 、 Deep extension AI Open platform , Provide data processing for enterprises 、 Model building and training 、 Privacy computing 、 One stop shop for Industry algorithms and solutions AI Platform services .

边栏推荐

- Leetcode question brushing series - mode 2 (datastructure linked list) - 19:remove nth node from end of list (medium) delete the penultimate node in the linked list

- Minor problems encountered in installing the deep learning environment -- the jupyter service is busy

- Tianchi - student test score forecast

- C语言试题三(程序选择题进阶_含知识点详解)

- Leetcode classic guide

- Yolov5 training personal data set summary

- Simple knowledge distillation

- 华为设备配置BGP/MPLS IP 虚拟专用网地址空间重叠

- International qihuo: what are the risks of Zhengda master account

- Unzip Imagenet after downloading

猜你喜欢

Tianchi - student test score forecast

Huawei equipment is configured with bgp/mpls IP virtual private network address space overlap

What are the similarities and differences between the data center and the data warehouse?

华为设备配置跨域虚拟专用网

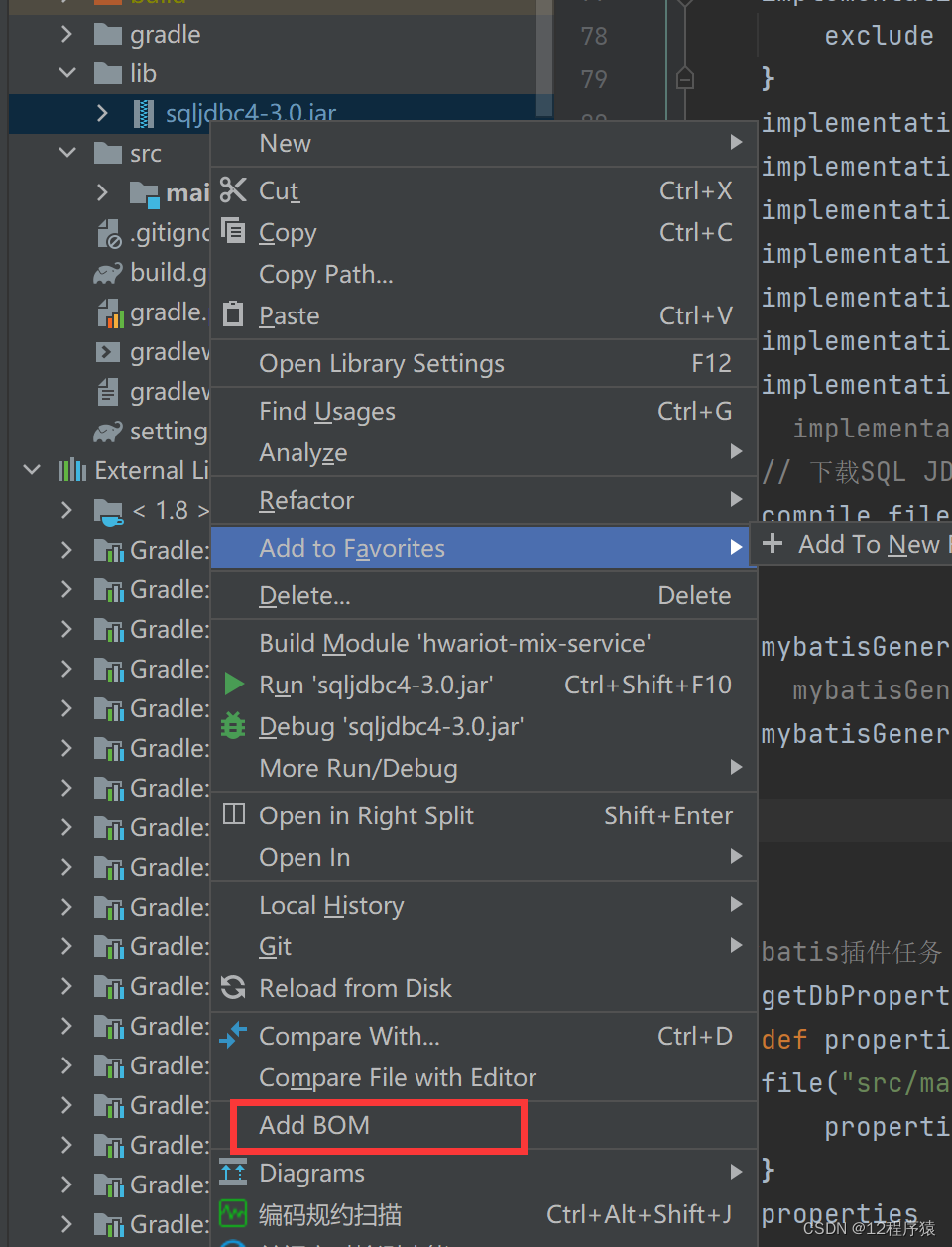

How the idea gradle project imports local jar packages

oh my zsh正确安装姿势

Deep extension technology: intelligent OCR recognition technology based on deep learning has great potential

Emlog new navigation source code / with user center

四大MQ的区别

Support vector machine -svm+ source code

随机推荐

How the idea gradle project imports local jar packages

Sorting out relevant programming contents of renderfeature of unity's URP

一大厂95后程序员对部门领导不满,删库跑路被判刑

Lianrui: how to rationally see the independent R & D of domestic CPU and the development of domestic hardware

PHP phone charge recharge channel website complete operation source code / full decryption without authorization / docking with the contract free payment interface

[Transformer]MViTv2:Improved Multiscale Vision Transformers for Classification and Detection

Network adapter purchase guide

What is the difference between a wired network card and a wireless network card?

Huawei equipment configuration MCE

精益产品开发体系最佳实践及原则

USB转232 转TTL概述

How to calculate the handling charge of international futures gold?

codesys 獲取系統時間

New library goes online | cnopendata immovable cultural relic data

Zhengda international qihuo: trading market

Deep extension technology: intelligent OCR recognition technology based on deep learning has great potential

Leetcode question brushing series - mode 2 (datastructure linked list) - 19:remove nth node from end of list (medium) delete the penultimate node in the linked list

Lianrui electronics made an appointment with you with SIFA to see two network cards in the industry's leading industrial automation field first

Relational database system

Leetcode question brushing series - mode 2 (datastructure linked list) - 160:intersection of two linked list