NIPS 2014 | Two-Stream Convolutional Networks for Action Recognition in Videos reading notes

2022-06-25 08:26:00 【ybacm】

Two-Stream Convolutional Networks for Action Recognition in Videos

Author Unit: Visual Geometry Group, University of Oxford

Authors: Karen Simonyan, Andrew Zisserman

Conference: NIPS 2014

Paper address: https://proceedings.neurips.cc/paper/2014/hash/00ec53c4682d36f5c4359f4ae7bd7ba1-Abstract.html

The following are notes taken while watching a video from Li Mu's team (https://www.bilibili.com/video/BV1mq4y1x7RU?spm_id_from=333.1007.top_right_bar_window_history.content.click&vd_source=9a9f9a00848a88972d0fcfd341e9e738).

Why does the title say action recognition when the task is really video classification? Because videos involving human actions are the most common, so in terms of both practical significance and dataset collection, action recognition is the more valuable way to frame video classification.

Motivation: the authors find that convolutional networks are good at learning local appearance information from images but do not learn the motion information between frames well. They therefore propose a two-stream network: a spatial stream ConvNet (takes a single frame as input, to learn spatial information) and a temporal stream ConvNet (takes stacked multi-frame optical flow as input, to learn motion information, which amounts to handing the motion features directly to the network), so that the model can learn motion information explicitly.

Optical flow: it effectively describes the motion of objects between frames. By extracting optical flow, the background and people's clothing can be ignored, so the model can focus on the action itself. In optical flow visualizations, the larger an object's motion, the brighter the color.

Another reason for using optical flow is that the best hand-crafted features at the time were based on optical flow trajectories.

Abstract

There are three contributions. First, a two-stream network is proposed. Second, it is shown that a ConvNet trained on multi-frame dense optical flow can achieve very good performance despite limited training data. Third, multi-task learning is used, i.e. training on two datasets at the same time, which improves accuracy.

1 Introduction

Additionally, video provides natural data augmentation (jittering) for single image (video frame) classification.

Because objects in a video naturally undergo various deformations, displacements and changes in optical flow, this works much better than hand-designed data augmentation.

Late fusion means merging at the end of the network, at the logits level; early fusion means merging the outputs of intermediate layers.

The two-stream approach has two benefits: first, the spatial stream can be initialized from a model pre-trained on ImageNet; second, the temporal stream is trained on optical flow alone, which makes it easier to train.

1.1 Related work

Paper [14] found that feeding multiple stacked frames into the network performs about the same as a single-frame input, even on the huge Sports-1M dataset.

![image](/img/91/b98b1afd12db95e367f56221380c56.png)

2 Two-stream architecture for video recognition

The overall framework is shown in Figure 1. The authors consider two fusion methods: the first is direct averaging; the second is training a multi-class linear SVM [6] on stacked L2-normalised softmax scores as features.

Spatial stream ConvNet. Although the spatial stream network is very simple, it is also important, because the appearance of objects in the video (shape, size, color, etc.) is a strong cue. For example, recognizing a basketball in the video already suggests that the action is very likely playing basketball.
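The averaging variant of late fusion can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the logits are made-up values, the function names are hypothetical, and the two streams are weighted equally. The SVM variant is not shown.

```python
import numpy as np

def softmax(logits):
    # Subtract the row-wise max for numerical stability.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def fuse_by_averaging(spatial_logits, temporal_logits):
    """Late fusion: average the class probabilities of the two streams."""
    return 0.5 * (softmax(spatial_logits) + softmax(temporal_logits))

# Hypothetical logits for one video over 3 classes (not from the paper).
spatial_logits = np.array([[2.0, 1.0, 0.1]])
temporal_logits = np.array([[0.5, 3.0, 0.2]])

fused = fuse_by_averaging(spatial_logits, temporal_logits)
predicted_class = int(fused.argmax(axis=-1)[0])
```

Here the spatial stream alone would pick class 0, but the temporal stream is confident enough about class 1 that the fused prediction follows it.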

3 Optical flow ConvNets

![image](/img/d0/fb700ce984f7b3b8c0e84b7afeb1c4.png)

Optical flow is computed from pairs of consecutive frames: if a video has L frames, we get L-1 optical flow fields. If the two input frames are each H×W×3, the resulting flow field is H×W×2, where the 2 refers to the horizontal and vertical components of the flow, as in Figure 2(d) and (e).

3.1 ConvNet input configurations

So how should this optical flow be used? As shown in Figure 3, the authors propose two ways: the first stacks the optical flow fields directly at the same pixel position; the second uses the flow to find where a point has moved to and samples the subsequent flow fields along that trajectory. Although the second way seems clearly more reasonable, in practice it performs worse than the first.

![image](/img/ba/4e8c112e60570949ce07d8457e09e8.png)

Bi-directional optical flow. With bi-directional optical flow, forward flow (frame i to frame i+1) is used for the first half of the stacked frames and backward flow (frame i+1 to frame i) for the second half, so the input dimensionality stays the same.

The overall structure of the temporal stream network is the same as that of the spatial stream; only the input dimensions differ: the spatial stream takes H×W×3, the temporal stream H×W×2L (the dimension after stacking the optical flow fields).
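To make the difference between the two input configurations concrete, here is a rough NumPy sketch. It assumes the L flow fields are already computed and stored as an array of shape (L, H, W, 2) holding (dx, dy) per pixel. The function names, the nearest-neighbour lookup and the clipping at the image border are simplifications of mine, not details from the paper.

```python
import numpy as np

def optical_flow_stacking(flows):
    """Stack L flow fields at the same pixel position.

    flows: (L, H, W, 2) array of (dx, dy) displacements.
    Returns (H, W, 2L): channel 2k is dx of flow k, channel 2k+1 is dy.
    """
    L, H, W, _ = flows.shape
    return flows.transpose(1, 2, 0, 3).reshape(H, W, 2 * L)

def trajectory_stacking(flows):
    """Sample each flow field along the trajectory starting at every pixel."""
    L, H, W, _ = flows.shape
    rows, cols = np.meshgrid(np.arange(H), np.arange(W), indexing="ij")
    pos_r = rows.astype(np.float64)
    pos_c = cols.astype(np.float64)
    out = np.empty((H, W, 2 * L), dtype=flows.dtype)
    for k in range(L):
        # Nearest-neighbour lookup, clipped to the image (a simplification).
        r = np.clip(np.rint(pos_r), 0, H - 1).astype(int)
        c = np.clip(np.rint(pos_c), 0, W - 1).astype(int)
        d = flows[k, r, c]                # (H, W, 2): flow at the current trajectory point
        out[:, :, 2 * k:2 * k + 2] = d
        pos_c += d[..., 0]                # dx moves the point along columns
        pos_r += d[..., 1]                # dy moves the point along rows
    return out

# Tiny hand-made example: 2 flow fields on a 1x3 image.
flows = np.zeros((2, 1, 3, 2))
flows[0, 0, 0, 0] = 1.0                   # pixel (0, 0) moves one column to the right
flows[1, 0, :, 0] = [10.0, 20.0, 30.0]    # second flow field differs per column

same_position = optical_flow_stacking(flows)
along_trajectory = trajectory_stacking(flows)
```

For pixel (0, 0), plain stacking reads the second flow at column 0, while trajectory stacking follows the point to column 1 and reads the flow there. Both produce an H×W×2L input volume.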

5 Implementation details

Points worth noting:

Testing. At test time, the authors sample a fixed number of frames (25 here) at equal temporal intervals from the video. For example, if a video contains 100 frames, one frame is taken every 4 frames, giving 25 frames. Each of the 25 frames is then turned into 10 crops (the four corners and the center, plus the same five crops of the horizontally flipped frame), giving 250 views. These 250 views are fed into the spatial stream network (the temporal stream is handled similarly) and the scores are averaged.

Optical flow. The optical flow is pre-computed before training. Rather than storing the displacement fields as floats, the authors linearly rescale the horizontal and vertical flow components to the [0, 255] range and compress them with JPEG, which saves a lot of storage space.
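The test-time procedure above can be sketched as follows. This is a minimal NumPy illustration: `score_fn` stands in for a trained network and is hypothetical, as are the function names, and the frame and crop sizes in the usage lines are arbitrary small values chosen to keep the example light.

```python
import numpy as np

def sample_frame_indices(num_frames, num_samples=25):
    """Pick num_samples frame indices with equal temporal spacing."""
    step = max(num_frames // num_samples, 1)
    return [min(i * step, num_frames - 1) for i in range(num_samples)]

def ten_crop(frame, size):
    """Four corners and centre, plus the same five crops of the flipped frame."""
    H, W = frame.shape[:2]
    top, left = (H - size) // 2, (W - size) // 2
    offsets = [(0, 0), (0, W - size), (H - size, 0), (H - size, W - size), (top, left)]
    crops = []
    for img in (frame, frame[:, ::-1]):   # original, then horizontally flipped
        for r, c in offsets:
            crops.append(img[r:r + size, c:c + size])
    return np.stack(crops)

def video_level_scores(frames, score_fn, size):
    """Average class scores over 25 frames x 10 crops = 250 views."""
    indices = sample_frame_indices(len(frames))
    views = np.concatenate([ten_crop(frames[i], size) for i in indices])
    return score_fn(views).mean(axis=0)

# Usage with a dummy scorer that returns uniform scores over 3 classes.
frames = np.zeros((100, 64, 80, 3), dtype=np.float32)
dummy_score_fn = lambda views: np.full((len(views), 3), 1.0 / 3.0)
scores = video_level_scores(frames, dummy_score_fn, size=56)
```

For a 100-frame video, `sample_frame_indices` returns frames 0, 4, 8, ..., 96, matching the "every 4 frames" example in the notes.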
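As a rough illustration of how a flow field can be stored compactly as 8-bit images, the sketch below linearly rescales it to [0, 255] and maps it back. The choice of range (per-field min and max) and the function names are assumptions of mine, and the JPEG encoding step itself is left out because it needs an image library.

```python
import numpy as np

def quantize_flow(flow):
    """Linearly rescale a float flow field to uint8 in [0, 255].

    Returns the quantized field plus the (lo, hi) range needed to undo it.
    Using the per-field min/max as the range is an assumption of this sketch.
    """
    lo, hi = float(flow.min()), float(flow.max())
    scale = (hi - lo) if hi > lo else 1.0
    q = np.rint((flow - lo) / scale * 255.0).astype(np.uint8)
    return q, lo, hi

def dequantize_flow(q, lo, hi):
    """Map the uint8 field back to the original floating-point range."""
    return q.astype(np.float32) / 255.0 * (hi - lo) + lo

# Usage on a synthetic flow field with displacements in [-20, 20] pixels.
rng = np.random.default_rng(0)
flow = rng.uniform(-20.0, 20.0, size=(32, 32, 2))
q, lo, hi = quantize_flow(flow)
restored = dequantize_flow(q, lo, hi)
max_error = float(np.abs(restored - flow).max())
```

The round trip loses at most half a quantization step, i.e. about (hi - lo) / 510 pixels, before any JPEG loss is added on top.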

6 Evaluation

![image](/img/03/42bbd31a9251e808a1671197985298.png)

![image](/img/fa/6ea2d4109eb21d9e4234226b8b10d3.png)

The temporal stream network alone already achieves very good results, which shows how important motion information is for video understanding!

7 Conclusions and directions for improvement

The paper ends with a summary and future work. The authors mention that they do not understand why trajectory-based optical flow stacking does not perform better; this was resolved by a CVPR 2015 paper.

The contribution of this paper is not just adding a temporal stream network. Its main lesson is this: when a neural network cannot solve a problem, heavily modifying the network architecture may not bring much improvement, and adding another input modality to help the network learn may be a better way. The two-stream network can therefore also be seen as a precedent for multimodal learning.

边栏推荐

- Electronics: Lesson 008 - Experiment 6: very simple switches

- The first game of 2021 ICPC online game

- Stm32cubemx Learning (5) Input capture Experiment

- Can I grant database tables permission to delete column objects? Why?

- Bluecmsv1.6- code audit

- Prepare these before the interview. The offer is soft. The general will not fight unprepared battles

- How do I install the software using the apt get command?

- [unexpected token o in JSON at position 1 causes and solutions]

- CVPR 2022 oral 2D images become realistic 3D objects in seconds

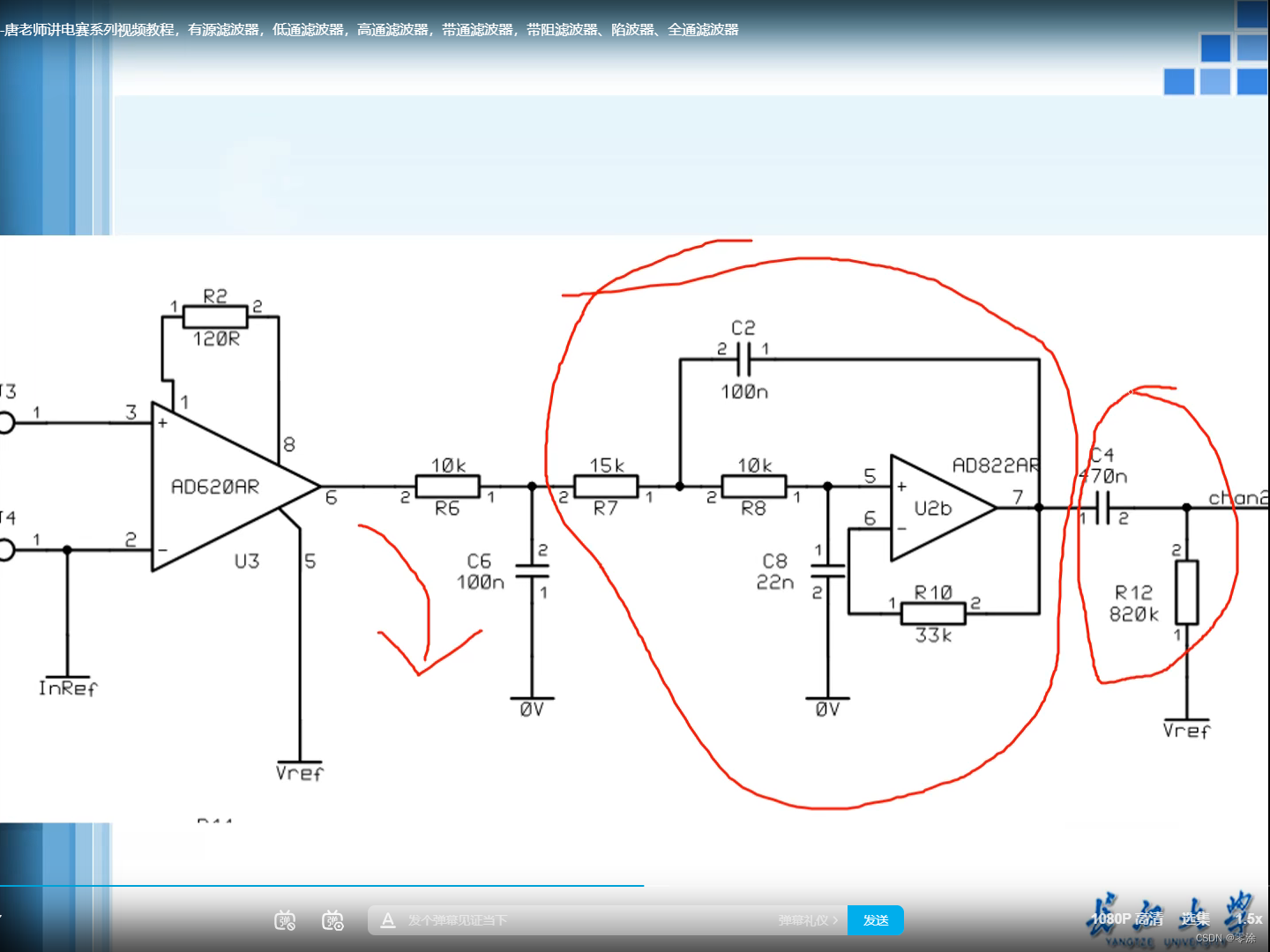

- Mr. Tang's lecture on operational amplifier (Lecture 7) -- Application of operational amplifier

猜你喜欢

NIPS 2014 | Two-Stream Convolutional Networks for Action Recognition in Videos 阅读笔记

家庭服务器门户Easy-Gate

Data-centric vs. Model-centric. The Answer is Clear!

Mr. Tang's lecture on operational amplifier (Lecture 7) -- Application of operational amplifier

Use pytorch to build mobilenetv2 and learn and train based on migration

leetcode. 13 --- Roman numeral to integer

Prepare these before the interview. The offer is soft. The general will not fight unprepared battles

Home server portal easy gate

堆栈认知——栈溢出实例(ret2libc)

每日刷题记录 (三)

随机推荐

[supplementary question] 2021 Niuke summer multi school training camp 6-n

Daily question brushing record (III)

Use Adobe Acrobat pro to resize PDF pages

TCP MIN_ A dialectical study of RTO

初体验完全托管型图数据库 Amazon Neptune

Mr. Tang's lecture on operational amplifier (Lecture 7) -- Application of operational amplifier

[supplementary question] 2021 Niuke summer multi school training camp 1-3

How to calculate the information entropy and utility value of entropy method?

Electronics: Lesson 008 - Experiment 6: very simple switches

测一测现在的温度

打新债安不安全 有风险吗

How to analyze the grey prediction model?

Free SSL certificate acquisition tutorial

Luogu p2839 [national training team]middle (two points + chairman tree + interval merging)

是否可以给数据库表授予删除列对象的权限?为什么?

Ffmpeg+sdl2 for audio playback

Apache CouchDB Code Execution Vulnerability (cve-2022-24706) batch POC

2022年毕业生求职找工作青睐哪个行业?

Unity Addressable批量管理

Allgero reports an error: program has encoded a problem and must exit The design will be saved as a . SAV file