
torch DDP Training

2022-06-22 04:26:00 【Love CV】

01

There are three ways to do distributed training:

1. Model parallelism: the model is split across different GPUs. This is only needed when the model is too big for a single GPU, so it is hardly ever used.

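2. Data parallelism in a single process (`torch.nn.DataParallel`): the model is placed on one GPU and each batch of data is split across the GPUs.

It basically never throws bugs.

Synchronized BatchNorm (sync bn) is not built in for this mode; you have to bring your own implementation.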

3. Distributed data parallelism (`torch.nn.parallel.DistributedDataParallel`): every GPU holds its own copy of the model and its own share of the data.

It is much more bug-prone: data is not shared between processes and the order in which processes access files is nondeterministic, so logging, dataset preprocessing, and placing the model and the loss on the designated CUDA device all need careful design.

Sync BN is already provided by PyTorch (`torch.nn.SyncBatchNorm`).

In principle and in effect it is theoretically the same as option 2, since both train with a larger effective batch size, but in practice it is faster than option 2; the time spent moving data to CUDA seems to drop significantly.

It supports training across multiple machines.

When only a few cards are used and the network's running time is short, it is in practice no better than option 2.
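A minimal sketch of option 2 for comparison, assuming a hypothetical `ToyModel`: `nn.DataParallel` wraps an existing model inside a single process, and on each forward call it scatters the batch over the visible GPUs, runs a replica on each, and gathers the outputs on the first device. Option 3 instead needs one process per GPU and an initialized process group, as in the DDP script of this post.

```python
import torch
import torch.nn as nn


class ToyModel(nn.Module):
    # Hypothetical toy network, used only for illustration.
    def __init__(self):
        super().__init__()
        self.net = nn.Sequential(nn.Linear(10, 10), nn.ReLU(), nn.Linear(10, 5))

    def forward(self, x):
        return self.net(x)


device = "cuda" if torch.cuda.is_available() else "cpu"
model = ToyModel().to(device)

# Option 2: a single process. DataParallel splits each input batch across all
# visible GPUs, replicates the model on them and gathers outputs on device 0.
# Without any GPU it simply calls the wrapped module.
dp_model = nn.DataParallel(model)

batch = torch.randn(32, 10, device=device)
out = dp_model(batch)
print(out.shape)  # torch.Size([32, 5])
```

The wrapped model stays reachable as `dp_model.module`, the same attribute name DDP uses.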

02

Principle

Increasing the batch size (bs) also brings the drawbacks associated with a large batch size:

Overfitting (weaker generalization): use warm-up to mitigate it, and experiment to find how far the batch size can be increased without hurting generalization.

The learning rate must be scaled accordingly: with n processes, one epoch takes n times fewer iterations, so the learning rate is multiplied by n.
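The learning-rate adjustment above can be sketched as linear scaling by the number of processes combined with a linear warm-up. The function name, the base rate and the warm-up length are hypothetical choices for illustration, not values from the original post.

```python
def scaled_lr(base_lr, world_size, step, warmup_steps):
    """Learning rate for a given optimizer step (hypothetical helper).

    base_lr is the rate tuned for a single process. With world_size processes
    the effective batch is world_size times larger, so the target rate is
    multiplied by world_size. During the first warmup_steps steps the rate
    ramps up linearly towards that target.
    """
    target = base_lr * world_size
    if warmup_steps > 0 and step < warmup_steps:
        return target * (step + 1) / warmup_steps
    return target


# Example: 4 processes, base rate 0.1, 5 warm-up steps.
for step in range(7):
    print(step, round(scaled_lr(0.1, 4, step, 5), 4))
```

In PyTorch the same factor, divided by `base_lr`, could be fed to `torch.optim.lr_scheduler.LambdaLR`, which multiplies the optimizer's initial rate by the lambda's return value at each scheduler step.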

DP aggregates gradients, but BN statistics are computed from the data on each single GPU, which can be inaccurate (especially when the per-GPU batch is small); use sync BN instead.
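PyTorch's built-in option is `torch.nn.SyncBatchNorm`, and `convert_sync_batchnorm` swaps every BatchNorm layer of an existing model. A minimal sketch with a hypothetical small CNN; the conversion itself needs no process group, but statistics are only synchronized when the model later runs in training mode under DDP with an initialized process group.

```python
import torch.nn as nn

# Hypothetical model with an ordinary BatchNorm layer.
model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.BatchNorm2d(8),
    nn.ReLU(),
)

# Replace every BatchNorm*d layer with SyncBatchNorm (running statistics are
# copied over). Do this before wrapping the model in DistributedDataParallel.
sync_model = nn.SyncBatchNorm.convert_sync_batchnorm(model)

print(type(sync_model[1]).__name__)  # SyncBatchNorm
```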

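Gradients are synchronized with a map-reduce style ring (ring all-reduce): every GPU receives data from the previous GPU and passes it on to the next one.

There are two rounds in total: after the first round, each card holds the fully reduced result for one chunk of the data; the second round synchronizes these chunks to all cards.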
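The two rounds can be simulated in plain Python. This is a sketch of a sum all-reduce over N simulated GPUs arranged in a ring, each holding a vector split into N chunks; it only illustrates the data movement and is not how NCCL is implemented internally.

```python
def ring_allreduce(data):
    """Sum all-reduce over a simulated ring of len(data) GPUs.

    data[i] is the list held by GPU i; its length must be divisible by n.
    Returns the per-GPU buffers afterwards (all equal to the element-wise sum).
    """
    n = len(data)
    size = len(data[0])
    assert size % n == 0, "vector length must be divisible by the number of GPUs"
    c = size // n
    buf = [list(v) for v in data]  # copy so the input is left untouched

    def chunk(i, k):
        return buf[i][k * c:(k + 1) * c]  # slicing returns a copy

    # Round 1 (scatter-reduce), n-1 steps: GPU i sends chunk (i - s) % n to
    # GPU i+1, which adds it to its own copy of that chunk.
    for s in range(n - 1):
        sends = [(i, (i - s) % n) for i in range(n)]
        sends = [(i, k, chunk(i, k)) for i, k in sends]  # all send before any receive
        for i, k, payload in sends:
            dst = (i + 1) % n
            for j, x in enumerate(payload):
                buf[dst][k * c + j] += x
    # Now GPU i holds the fully reduced chunk (i + 1) % n.

    # Round 2 (all-gather), n-1 steps: GPU i forwards the complete chunk
    # (i + 1 - s) % n to GPU i+1, which overwrites its stale copy.
    for s in range(n - 1):
        sends = [(i, (i + 1 - s) % n) for i in range(n)]
        sends = [(i, k, chunk(i, k)) for i, k in sends]
        for i, k, payload in sends:
            buf[(i + 1) % n][k * c:(k + 1) * c] = payload
    return buf


gpus = [[1, 2, 3, 4, 5, 6], [10, 20, 30, 40, 50, 60], [100, 200, 300, 400, 500, 600]]
result = ring_allreduce(gpus)
print(result[0])  # [111, 222, 333, 444, 555, 666]
```

With 3 GPUs the loop runs 2 + 2 = 4 = 2N-2 steps, and in each step every GPU sends a single chunk of length size/N.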

Each step only transfers 1/N of the data and 2N-2 steps are needed, so in theory the amount of data each GPU has to send is almost independent of the number of GPUs.
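Written out, assuming N GPUs and a gradient of total size K (a symbol introduced here for illustration) split into N chunks of size K/N, with each GPU sending one chunk per step:

```latex
\text{data sent per GPU}
  = \underbrace{2(N-1)}_{\text{steps}} \cdot \frac{K}{N}
  = 2K\left(1 - \frac{1}{N}\right)
  \;\xrightarrow{\,N \to \infty\,}\; 2K
```

The bandwidth cost per GPU is therefore bounded by 2K whatever N is; only the number of sequential steps, and with it the latency, grows with N.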

Model buffers are not parameters: they are updated not by back-propagation but by other means, for example the running mean and running variance of BN.
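The difference is visible on a plain `nn.BatchNorm1d`: `weight` and `bias` are parameters trained by the optimizer, while `running_mean`, `running_var` and `num_batches_tracked` are buffers that a training-mode forward pass updates without any backward pass. By default DDP broadcasts buffers from rank 0 to the other processes at the start of each forward (`broadcast_buffers=True`).

```python
import torch
import torch.nn as nn

bn = nn.BatchNorm1d(4)

# Parameters: learned through back-propagation and the optimizer.
print([name for name, _ in bn.named_parameters()])  # ['weight', 'bias']
# Buffers: saved in the state_dict, but never touched by the optimizer.
print([name for name, _ in bn.named_buffers()])
# ['running_mean', 'running_var', 'num_batches_tracked']

before = bn.running_mean.clone()
bn.train()
with torch.no_grad():  # no gradients are computed at all
    bn(torch.randn(16, 4) + 3.0)
# The forward pass alone moved the running mean towards the batch mean.
print(torch.equal(before, bn.running_mean))  # False
```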

03

How to write DDP code in practice

Call `dist.get_rank()` to find the current rank; tasks that must not be repeated, such as logging, should run only on rank 0.

When distributed training is not in use, treat the rank as 0 by default.
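A small sketch that follows both rules: it returns the real rank when a process group has been initialized and falls back to rank 0 otherwise, so the same script also runs without DDP. The helper names are hypothetical.

```python
import torch.distributed as dist


def get_rank():
    # Outside DDP (no process group), behave as the single master process.
    if dist.is_available() and dist.is_initialized():
        return dist.get_rank()
    return 0


def is_main_process():
    return get_rank() == 0


def log(msg):
    # Only rank 0 prints, so N processes do not produce N copies of each line.
    if is_main_process():
        print(msg)


log("epoch 0 done")
```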

Debug on a single card first.
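One way to debug the DDP code path without several GPUs is a process group of size 1 on the CPU `gloo` backend, so wrapping, `dist.get_rank()` and the rank-0 checks can be stepped through in a single process. The file-based rendezvous and the tiny linear model are illustrative assumptions.

```python
import os
import tempfile

import torch
import torch.distributed as dist
import torch.nn as nn
from torch.nn.parallel import DistributedDataParallel as DDP

# Rendezvous through a temporary file, so MASTER_ADDR/MASTER_PORT are not needed.
init_file = os.path.join(tempfile.mkdtemp(), "ddp_init")
dist.init_process_group("gloo", init_method=f"file://{init_file}", rank=0, world_size=1)

model = DDP(nn.Linear(10, 2))  # CPU module, so no device_ids
opt = torch.optim.SGD(model.parameters(), lr=0.1)

x, y = torch.randn(8, 10), torch.randint(0, 2, (8,))
loss = nn.functional.cross_entropy(model(x), y)
loss.backward()  # DDP all-reduces gradients (trivially, with world_size=1)
opt.step()

print(dist.get_rank(), dist.get_world_size())  # 0 1
dist.destroy_process_group()
```

Once this runs cleanly, switching to `nccl`, one process per GPU and a real `world_size` changes the setup but not the training loop.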

If you use wandb, you need to call `wandb.finish()` explicitly.

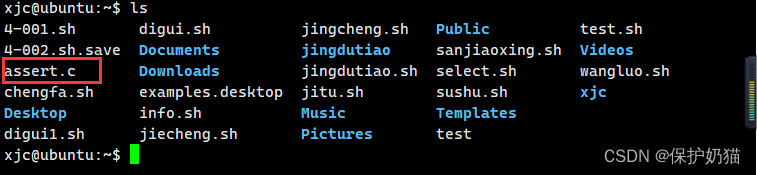

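```python
import os

import torch
import torch.distributed as dist
import torch.multiprocessing as mp
import torch.nn as nn
from torch.nn.parallel import DistributedDataParallel as DDP
from torch.utils.data import TensorDataset


class ToyModel(nn.Module):
    # Placeholder model (hypothetical, for illustration only).
    def __init__(self):
        super().__init__()
        self.net = nn.Sequential(nn.Linear(10, 10), nn.ReLU(), nn.Linear(10, 5))

    def forward(self, x):
        return self.net(x)


def demo_fn(rank, world_size, num_epochs=2):
    # Single machine, one process per GPU: the local rank equals the global rank.
    local_rank = rank
    os.environ.setdefault("MASTER_ADDR", "127.0.0.1")
    os.environ.setdefault("MASTER_PORT", "29500")
    torch.cuda.set_device(local_rank)
    dist.init_process_group("nccl", rank=rank, world_size=world_size)

    # lots of code.
    # Placeholder dataset (hypothetical): 1024 random samples, 5 classes.
    my_trainset = TensorDataset(torch.randn(1024, 10), torch.randint(0, 5, (1024,)))
    # DDP: every process needs its own sampler and loader, not only rank 0.
    # batch_size is per process; the total batch size is batch_size * world_size.
    train_sampler = torch.utils.data.distributed.DistributedSampler(my_trainset)
    trainloader = torch.utils.data.DataLoader(
        my_trainset, batch_size=16, num_workers=2, sampler=train_sampler)

    model = ToyModel().to(local_rank)
    # DDP: load the checkpoint before building the DDP model. Loading it on the
    # master alone is enough, because DDP broadcasts rank 0's weights when built.
    ckpt_path = None
    if dist.get_rank() == 0 and ckpt_path is not None:
        model.load_state_dict(torch.load(ckpt_path, map_location=f"cuda:{local_rank}"))
    model = DDP(model, device_ids=[local_rank], output_device=local_rank)

    loss_func = nn.CrossEntropyLoss().to(local_rank)
    optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
    for epoch in range(num_epochs):
        # Reseed the sampler so every epoch uses a different shuffle.
        trainloader.sampler.set_epoch(epoch)
        for data, label in trainloader:
            data, label = data.to(local_rank), label.to(local_rank)
            optimizer.zero_grad()
            loss = loss_func(model(data), label)
            loss.backward()  # gradients are all-reduced across processes here
            optimizer.step()
        # Save once, from rank 0; model.module is the unwrapped model.
        if dist.get_rank() == 0:
            torch.save(model.module.state_dict(), "%d.ckpt" % epoch)

    dist.destroy_process_group()


def run_demo(demo_fn, world_size):
    mp.spawn(demo_fn, args=(world_size,), nprocs=world_size, join=True)


if __name__ == "__main__":
    run_demo(demo_fn, torch.cuda.device_count())
```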
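To see how the `DistributedSampler` in the script splits the data, it can be built directly with explicit `num_replicas` and `rank`, which needs no process group. This sketch uses a 10-element list as a hypothetical stand-in for `my_trainset` and simulates two processes; the helper `shards` is also hypothetical.

```python
from torch.utils.data.distributed import DistributedSampler

dataset = list(range(10))  # hypothetical stand-in: the sampler only uses len()

# Without shuffling, rank r takes every world_size-th index starting at r.
for rank in range(2):
    sampler = DistributedSampler(dataset, num_replicas=2, rank=rank, shuffle=False)
    print(rank, list(sampler))
# 0 [0, 2, 4, 6, 8]
# 1 [1, 3, 5, 7, 9]


def shards(epoch):
    # With shuffling, the permutation is seeded with seed + epoch, so all ranks
    # agree on it for a given epoch (their shards stay disjoint) and it changes
    # from one epoch to the next. Skipping set_epoch repeats the same order.
    out = []
    for rank in range(2):
        s = DistributedSampler(dataset, num_replicas=2, rank=rank, shuffle=True, seed=0)
        s.set_epoch(epoch)
        out.append(list(s))
    return out


print(shards(0))
print(shards(1))
```

Each rank sees 5 of the 10 samples per epoch, which is why the per-process `batch_size` multiplied by `world_size` gives the effective batch size.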
