当前位置:网站首页>Troubleshooting of datanode entering stale status

Troubleshooting of datanode entering stale status

2022-06-23 17:04:00 【Java OTA】

First say DataNode Why are you in Stale state

By default ,DataNode Every time 3s towards NameNode Send a heartbeat , If NameNode continued 30s No heartbeat received , Just put DataNode Marked as Stale state ; In another 10 I haven't received my heartbeat for minutes , Just mark it as dead state

NameNode There is one jmx indicators hadoop_namenode_numstaledatanodes, Get into statle State of DataNode Number , Normally, this value should be 0, If not 0 The alarm should be triggered

DataNode There is one jmx indicators hadoop_datanode_heartbeatstotalnumops, Indicates the number of heartbeats sent , adopt prometheus function increase(hadoop_datanode_heartbeatstotalnumops[1m]), We can draw 1 Number of heartbeats sent in minutes

The monitoring finds that there are nodes with heartbeat times of 0 The situation of :

Observe this period of time DataNode Of JVM state , Find out GC Very often ,1 Minutes up to 90 Time :

Check the log of this node , Found a warning log :

The main code is org.apache.hadoop.hdfs.server.datanode.DirectoryScanner.scan()

Is roughly DataNode It will scan the data blocks on the disk regularly , Check whether it is consistent with the data block information in memory . Get the lock before starting the comparison , When the lock is released after completion, a check will be performed , If the lock is held longer than the threshold (300ms), The warning log will be printed

Here the lock is held for 36s, It's a little too long , Guess why DataNode Storage configuration is unreasonable , Only one disk is configured , And the amount of data is large , There are many data blocks , The comparison takes a long time

And this time and DataNode The time of missing heartbeat is just the same

Spot check the time points of abnormal heartbeat transmission for several times , Have found this warning log

The high probability is that this affects the heartbeat transmission

The official also has the corresponding issue:

https://www.mail-archive.com/[email protected]/msg43698.html

https://issues.apache.org/jira/browse/HDFS-16013

https://issues.apache.org/jira/browse/HDFS-15415

stay 3.2.2, 3.3.1, 3.4.0 This problem is solved in version , In addition to optimizing performance , The key is to remove the lock , Timely and time-consuming , It will not be affected by holding the lock for a long time DataNode A healthy state

It is difficult for us to upgrade the version

First, continue to observe , Let's see if this situation will have a greater impact

In addition to the upgraded version , hold DataNode Change to multiple directories , One smaller disk per directory , It should also have an optimization effect

Reference article :

How to identify datanode stale

To talk about DataNode How to talk to NameNode Sending heartbeat

DataNode And DirectoryScanner analysis

边栏推荐

- R language uses colorblinr package to simulate color blind vision, and uses edit to visualize the image of ggplot2_ The colors function is used to edit and convert color blindness into visual results

- 【网络通信 -- WebRTC】WebRTC 源码分析 -- PacingController 相关知识点补充

- NLP paper reading | improving semantic representation of intention recognition: isotropic regularization method in supervised pre training

- What are the risks of opening a fund account? Is it safe to open an account

- Google Play Academy 组队 PK 赛,火热进行中!

- 三分钟学会如何找回mysql密码

- Now I want to buy stocks. How do I open an account? Is it safe to open a mobile account?

- Talk about the difference between redis cache penetration and cache breakdown, and the avalanche effect caused by them

- 什么是抽象类?怎样定义抽象类?

- 炒股买股票需要怎么选择呢?安全性不错的?

猜你喜欢

官方零基础入门 Jetpack Compose 的中文课程来啦

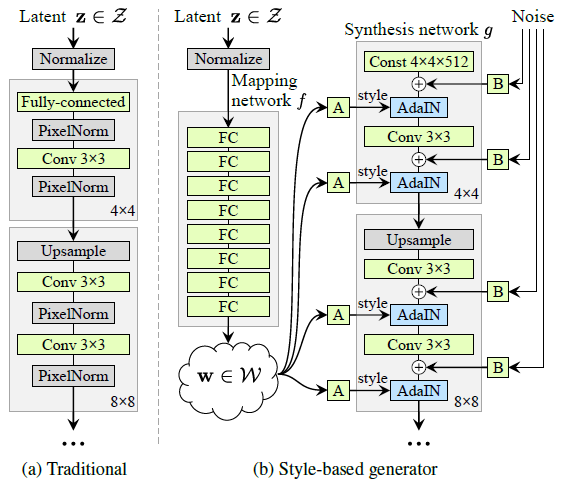

stylegan1: a style-based henerator architecture for gemerative adversarial networks

leetcode:30. 串联所有单词的子串【Counter匹配 + 剪枝】

Apache foundation officially announced Apache inlong as a top-level project

ASEMI超快恢复二极管ES1J参数,ES1J封装,ES1J规格

ASEMI肖特基二极管和超快恢复二极管在开关电源中的对比

Can the asemi fast recovery diodes RS1M, us1m and US1G be replaced with each other

Network remote access raspberry pie (VNC viewer)

Robot Orientation and some misunderstandings in major selection in college entrance examination

ASEMI快恢复二极管RS1M、US1M和US1G能相互代换吗

随机推荐

stylegan3:alias-free generative adversarial networks

What can the accelerated implementation of digital economy bring to SMEs?

亚朵更新招股书:继续推进纳斯达克上市,已提前“套现”2060万元

Six stone programming: the subtlety of application

How do you choose to buy stocks? Good security?

三分钟学会如何找回mysql密码

The R language uses the RMSE function of the yardstick package to evaluate the performance of the regression model, the RMSE of the regression model on each fold of each cross validation (or resamplin

科大讯飞神经影像疾病预测方案!

CoAtNet: Marrying Convolution and Attention for All Data Sizes翻译

什么是抽象类?怎样定义抽象类?

[untitled] Application of laser welding in medical treatment

供求两端的对接将不再是依靠互联网时代的平台和中心来实现的

NLP paper reading | improving semantic representation of intention recognition: isotropic regularization method in supervised pre training

Spdlog logging example - create a logger using sink

官方零基础入门 Jetpack Compose 的中文课程来啦!

JS常见的报错及异常捕获

ADC digital DGND, analog agnd mystery!

ADC数字地DGND、模拟地AGND的谜团!

leetcode:面試題 08.13. 堆箱子【自頂而下的dfs + memory or 自底而上的排序 + dp】

Comparison of asemi Schottky diode and ultrafast recovery diode in switching power supply