当前位置:网站首页>[machinetranslation] - Calculation of Bleu value

[machinetranslation] - Calculation of Bleu value

2022-06-26 02:44:00 【Muasci】

Preface

Recently, I am still stuck in the process of reproducing the results of my work . say concretely , I use the script provided by that job , It uses fairseq-generate To complete the evaluation of the results . Then I found that the results I got were completely inconsistent with those in the paper .

First , In the pretreatment stage , Such as Remember the training of a multilingual machinetranslation model Shown , I use moses Of tokenizer Accomplished tokenize, And then use moses Of lowercase Lowercase completed , Last use subword-nmt bpelearn and apply Subwords of . Of course , One side , Lowercase is not conducive to model performance comparison ( From senior brother ); On the other hand , have access to sentencepiece Use this tool to learn directly bpe, Without having to do extra tokenize, But this article will not consider .

How others do it ?

Main reference of this part :Computing and reporting BLEU scores

Consider the following : Training and testing data of machinetranslation , All through tokenize and truecased Preprocessing , as well as bpe Word segmentation . And in the post-processing stage , In ensuring the correct answer (ref) And model output (hyp) After the same post-processing operation , Different operations bring BLEU The values are very different . As shown in the figure below :

The main findings are as follows :

- 【 That's ok 1vs That's ok 2】 stay bpe Level calculated BLEU High value . explain : That's ok 1 No post-treatment has been taken , in other words ,hyp and ref All are tokenized、truecased Of bpe Sub word . here , Due to finer particle size , Original word The level is the wrong output , Break into finer grained subwords , May produce “ correct ” The results of the .

- 【 That's ok 3vs That's ok 4】 If not used sacreBLEU Provided standard tokenization, result BLEU High value . explain : That's ok 3 Didn't do detokenize, meanwhile , hold sacreBLEU Medium standard tokenization also ban It fell off ; Go ahead 4 I did it first. detokenize( That's ok 4 Medium tokenization To be understood as detokenize), And then used sacreBLEU Medium standard tokenization( Default should be 13a tokenizier). Generally speaking , That's ok 3 go by the name of tokenized BLEU(wrong), That's ok 4 go by the name of detokenized BLEU(right). But I don't know why the two are so different .

- 【 That's ok 5】 If you don't consider case , That is, completely lowercase ,BLEU High value .

- 【 That's ok 6】 This category should also be regarded as tokenized BLEU, in other words , It did it first detokenize, Just calculating BLEU when , It's going to happen again tokenize When , Using other third-party tokenizer instead of sacreBLEU Medium tokenizer, This practice also has an impact on the final result .

summary :

- use SacreBLEU!

- In the calculation BLEU Before , Be completely post-preprocess(undo BPE\truecase\detokenize wait )!

My mistake

After the previous part , You can see , The problem with my preprocessing steps is not so big ( Lower case results in higher results ), My main problem is , Running fairseq-generate when , I didn't provide it bpe\bpe-codes\tokenizer\scoring\post-process Parameters , in other words , I didn't do anything post-process. among , The functions of each parameter are as follows :

- bpe: adopt bpe.decode(x) Statement to do undo bpe. With bpe=subword_nmt For example , What I do is :

(x + " ").replace(self.bpe_symbol, "").rstrip(), among [email protected]@ - tokenizer: adopt tokenizer.decode(x) Statement to do detokenize. With tokenzier=moses For example , What I do is :

MosesDetokenizer.detokenize(inp.split()) - scoring: adopt scorer = scoring.build_scorer(cfg.scoring, tgt_dict) Statement to create a calculation BLEU The object of . If scoring=‘bleu’( Default ), The specific calculation statement is

scorer.add(target_tokens, hypo_tokens), among ,target\hypo_tokens After some pretreatment ( Maybe after undo bpe and detokenize, It is also possible that nothing has been done : Just use " " This separator puts sentence All of the tokenized bpe The subwords are connected into a string ), obtain target\hypo_str after , Do it again fairseq The simple tokenize(fairseq/fairseq/tokenizer.py), And then calculate BLEU value . And if the scoring=‘sacrebleu’, The specific calculation statement isscorer.add_string(target_str, detok_hypo_str), under these circumstances , If we do what we should do post-process,target_str Is the pure original text ,detok_hypo_str So it is . And calculating BLEU When the value of , We use it again sacrebleu Of standard tokenization, The comparability will be much higher . - post-process: The sum of this parameter bpe Parameters repeat

( Probably ) The correct approach

CUDA_VISIBLE_DEVICES=5 fairseq-generate ../../data/iwslt14/data-bin --path checkpoints/iwslt14/baseline/evaluate_bleu/checkpoint_best.pt --task translation_multi_simple_epoch --source-lang ar --target-lang en --encoder-langtok "src" --decoder-langtok --bpe subword_nmt --tokenizer moses --scoring sacrebleu --bpe-codes /home/syxu/data/iwslt14/code --lang-pairs "ar-en,de-en,en-ar,en-de,en-es,en-fa,en-he,en-it,en-nl,en-pl,es-en,fa-en,he-en,it-en,nl-en,pl-en" --quiet

It mainly provides bpe\bpe-codes\tokenizer\scoring, These four parameters . give the result as follows .

TBC

in addition , It's OK not to look at fairseq-generate Provided BLEU result , Instead, it generates hyp.txt and ref.txt file , And then use sacrebleu Tool to calculate scores .

TBC

Questions remain

- Why? tokenized\detokenized BLEU So different ?----> Said the elder martial brother , There may be some languages , Such as the Latin alphabet , In this case ,tokenized BLEU signify , Use the special word separator of Latin alphabet to segment words , It is possible to divide the text into characters .

- fairseq-generate Of –sacrebleu What's the usage? ?----> It seems useless .

Reference material

TBC

边栏推荐

猜你喜欢

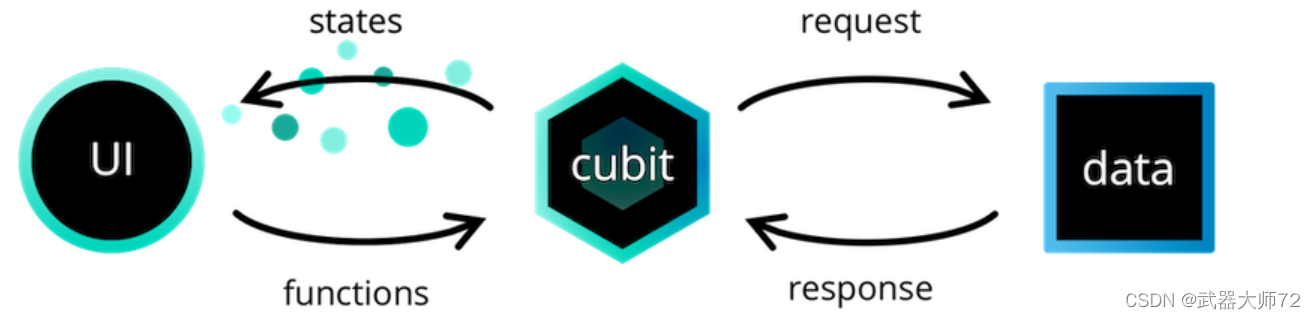

Introduction to bloc: detailed explanation of cube

55 pictures make you feel a bit B-tree at one time

@Query difficult and miscellaneous diseases

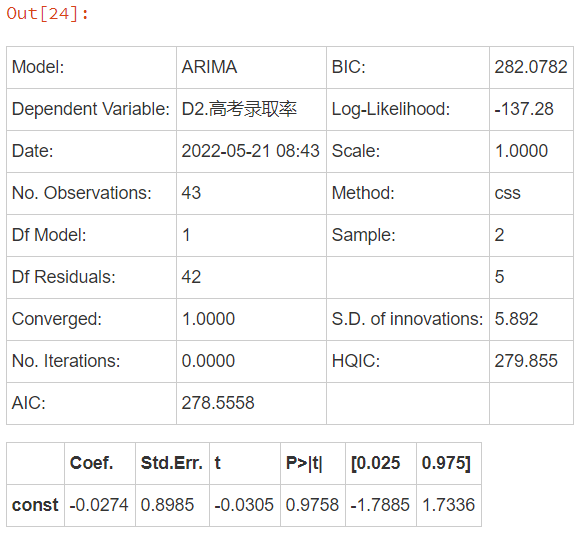

【机器学习】基于多元时间序列对高考预测分析案例

vulhub复现一 activemq

MySQL必須掌握4種語言!

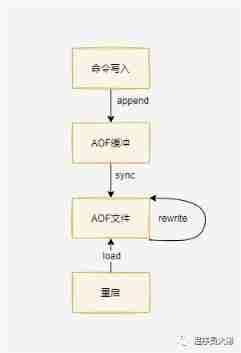

Redis classic 20 questions

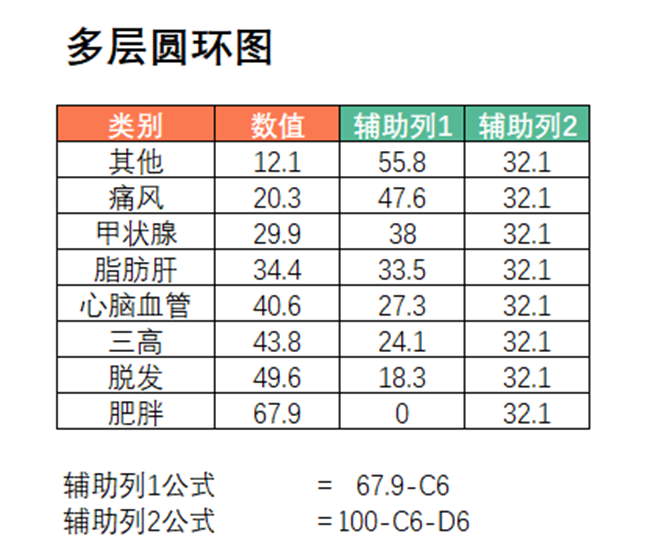

Pie chart metamorphosis record, the liver has 3000 words, collection is to learn!

DF reports an error stale file handle

Redis6.0新特性——ACL(权限控制列表)实现限制用户可执行命令和KEY

随机推荐

Breadth first traversal based on adjacency table

在 R 中创建非线性最小二乘检验

第一章:渗透测试的本质信息收集

Termux install openssh

Binary search

Technology is to be studied

数字商品DGE--数字经济的财富黑马

Simple use example of Aidl

财富自由技能:把自己产品化

# 云原生训练营毕业总结

PyQt theme

Wechat launched a web version transmission assistant. Is it really easy to use?

MySQL必須掌握4種語言!

How to default that an app is not restricted by traffic

UTONMOS坚持“藏品、版权”双优原则助力传统文化高质量发展

饼图变形记,肝了3000字,收藏就是学会!

Here comes the official zero foundation introduction jetpack compose Chinese course!

A few simple ways for programmers to exercise their waist

为 ServiceCollection 实现装饰器模式

Chapter I: essential information collection of penetration test