Summary of Baimian machine learning

2022-07-24 14:20:00 【Strong fight】

One Feature Engineering

1 Feature normalization

Why normalize numerical features: to make different indicators comparable by bringing all features into roughly the same numerical range.

Common methods :

① Min-max scaling (linear function normalization): linearly rescale the original data so that the results fall in the range [0, 1].

X_norm = (X - X_min) / (X_max - X_min)

② Zero-mean normalization (z-score): map the raw data to a distribution with mean 0 and standard deviation 1.

z = (X - μ) / σ

where μ is the mean and σ is the standard deviation of the raw feature.

(Models trained with gradient descent usually need normalization. Decision trees do not: when splitting a node, a decision tree mainly looks at the information gain ratio of data set D with respect to feature x, and that ratio does not depend on whether the feature has been normalized.)
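The two normalizations can be sketched in plain Python as follows. This is a minimal illustration, not a library implementation: the function names and the sample heights are assumptions, and mapping a constant feature to 0.0 is an arbitrary choice made here to avoid dividing by zero.

```python
from statistics import mean, pstdev


def min_max_normalize(values):
    """Min-max scaling: subtract the minimum, divide by the range."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Constant feature: assumption made here, map everything to 0.0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def z_score_normalize(values):
    """Zero-mean normalization: subtract the mean, divide by the std."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation
    if sigma == 0:
        # Constant feature: same assumption as above.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Hypothetical sample data (heights in centimetres).
heights_cm = [150.0, 160.0, 170.0, 180.0, 190.0]
scaled = min_max_normalize(heights_cm)
standardized = z_score_normalize(heights_cm)
```

After the call, `scaled` lies in [0, 1] and `standardized` has mean 0 and standard deviation 1, up to floating-point error.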

2 Categorical features

① Ordinal encoding: usually used for categories that have an ordering, e.g. high income (3), middle income (2), low income (1).

② One-hot encoding: used for features whose categories have no ordering, e.g. male (1, 0), female (0, 1).

③ Binary encoding: first assign each category an ID using ordinal encoding, then use the binary representation of that ID as the result. (Binary encoding essentially hashes the ID with binary digits, producing a 0/1 feature vector with fewer dimensions than one-hot encoding, which saves storage space.)
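A small sketch of the three encodings, assuming categories are passed as Python lists. The function names and the blood-type example are illustrative assumptions; the income and gender examples follow the text.

```python
def ordinal_encode(value, ordered_categories):
    """Ordinal encoding: position in an ordered list, starting at 1."""
    return ordered_categories.index(value) + 1


def one_hot_encode(value, categories):
    """One-hot encoding: a 1 in the slot of the category, 0 elsewhere."""
    return [1 if category == value else 0 for category in categories]


def binary_encode(value, categories):
    """Binary encoding: the ordinal ID written as fixed-width bits."""
    width = max(1, len(categories).bit_length())  # bits for the largest ID
    category_id = categories.index(value) + 1
    return [int(bit) for bit in format(category_id, f"0{width}b")]


income_levels = ["low", "middle", "high"]  # ordered from small to large
genders = ["male", "female"]
blood_types = ["A", "B", "AB", "O"]        # hypothetical extra example

high_income_code = ordinal_encode("high", income_levels)
female_code = one_hot_encode("female", genders)
ab_code = binary_encode("AB", blood_types)
```

With four blood types, one-hot encoding needs four dimensions while the binary code here needs three, and the gap widens as the number of categories grows.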

3 Combined features / handling high-dimensional combined features

To improve fitting ability, two features can be combined pairwise into a second-order feature. This works without trouble for ordinary features, but a problem arises once ID-type features are introduced.

If the number of users is m and the number of items is n, the number of parameters to learn is m×n. On the Internet, users and items can each number in the tens of millions, so learning m×n parameters is almost impossible. Instead, represent each user and each item by a k-dimensional low-dimensional vector; the number of parameters to learn becomes m×k + n×k. (This is equivalent to matrix factorization.)
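To make the saving concrete, here is a rough sketch of the parameter counts and of how a user-item weight is recovered from two low-dimensional vectors. The function names, the sizes (ten million users and items, k = 32), and the toy vectors are assumptions chosen for illustration.

```python
def full_parameter_count(m, n):
    """One weight per (user, item) pair."""
    return m * n


def factorized_parameter_count(m, n, k):
    """One k-dimensional vector per user plus one per item."""
    return m * k + n * k


def interaction_weight(user_vector, item_vector):
    """Weight of a (user, item) pair as the dot product of their vectors."""
    return sum(u * v for u, v in zip(user_vector, item_vector))


# Hypothetical sizes: ten million users, ten million items, k = 32.
m, n, k = 10_000_000, 10_000_000, 32
full = full_parameter_count(m, n)
factorized = factorized_parameter_count(m, n, k)

# Toy 2-dimensional vectors, made up for the example.
weight = interaction_weight([1.0, 2.0], [0.5, -1.0])
```

Under these assumed sizes the full table has 10^14 weights, while the factorized form has 6.4 × 10^8.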

Given the original input, how can the decision trees be constructed effectively? One option is the gradient boosting decision tree (GBDT): each new tree is built on the residual left by the previously built trees.
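The residual idea can be sketched with a heavily simplified stand-in: one numeric feature, squared-error loss, and depth-1 trees (stumps) instead of full decision trees. All names, the learning rate parameter, and the toy data are assumptions; this is not how a production GBDT library is implemented.

```python
def fit_stump(xs, residuals):
    """Pick the threshold on a 1-D feature that minimizes squared error."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # keep both sides non-empty
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return threshold, left_mean, right_mean


def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def fit_boosted_stumps(xs, ys, n_trees=3, learning_rate=1.0):
    """Fit each new stump to the residual of the current ensemble."""
    base = sum(ys) / len(ys)          # start from the mean prediction
    predictions = [base] * len(ys)
    stumps = []
    for _ in range(n_trees):
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        predictions = [p + learning_rate * predict_stump(stump, x)
                       for p, x in zip(predictions, xs)]
    return base, stumps, predictions


# Toy data, made up for the example.
xs = [1, 2, 3, 4]
ys = [1.0, 1.0, 3.0, 5.0]
base, stumps, predictions = fit_boosted_stumps(xs, ys, n_trees=3)
```

Each round shrinks the training error because the new stump only has to explain what the earlier ones missed.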

4 Text representation model

① Bag-of-words model and N-gram model

Split the whole text into words. Each article can then be represented as a long vector in which each dimension stands for one word, and the weight of that dimension reflects how important the word is in the original article.

TF-IDF(t, d) = TF(t, d) × IDF(t)

where TF(t, d) is the frequency of word t in document d, and IDF(t) is the inverse document frequency:

IDF(t) = log( total number of articles / (number of articles containing t + 1) )

Usually, groups of n consecutive words (n ≤ N), called N-grams, are also treated as separate features in the vector representation; this gives the N-gram model.
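A minimal sketch of the TF-IDF weight and of N-gram extraction, assuming documents are already tokenized into lists of words and that TF is a raw count. The function names and the four-document corpus are made up for illustration; the IDF uses the +1 in the denominator as in the formula above.

```python
import math


def term_frequency(term, document):
    """TF(t, d): number of times the term occurs in the document."""
    return document.count(term)


def inverse_document_frequency(term, corpus):
    """IDF(t) = log(N / (number of documents containing t + 1))."""
    containing = sum(1 for document in corpus if term in document)
    return math.log(len(corpus) / (containing + 1))


def tf_idf(term, document, corpus):
    return (term_frequency(term, document)
            * inverse_document_frequency(term, corpus))


def ngrams(tokens, max_n):
    """All groups of n consecutive words for n = 1 .. max_n."""
    return [
        " ".join(tokens[i:i + n])
        for n in range(1, max_n + 1)
        for i in range(len(tokens) - n + 1)
    ]


# Hypothetical tokenized corpus.
corpus = [
    ["machine", "learning", "is", "fun"],
    ["deep", "learning", "models"],
    ["decision", "tree", "models"],
    ["feature", "engineering", "is", "useful"],
]
rare_weight = tf_idf("machine", corpus[0], corpus)     # in 1 of 4 documents
common_weight = tf_idf("learning", corpus[0], corpus)  # in 2 of 4 documents
bigram_features = ngrams(corpus[1], 2)
```

A word that appears in fewer documents gets a larger weight, so `rare_weight` exceeds `common_weight` here.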

② Topic model

③ Word embedding and deep learning models

Word embedding is a family of models that turn words into vectors. The core idea is to map each word to a dense vector in a low-dimensional space (usually K = 50 to 300 dimensions).

If a document has N words, it can be represented by an N×K matrix.

Deep learning models offer a way to do feature engineering automatically: each hidden layer in the model can be seen as corresponding to features at a different level of abstraction.
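As a toy illustration of the N×K representation, the sketch below looks up one vector per word and stacks them. The embedding values and K = 3 are invented for the example; real embeddings are learned and much wider.

```python
# Hypothetical 3-dimensional embeddings (K = 3); real models learn these.
embedding_table = {
    "decision": [0.21, -0.10, 0.43],
    "tree": [0.05, 0.33, -0.27],
    "model": [-0.14, 0.08, 0.19],
}


def document_matrix(tokens, table):
    """Stack the K-dimensional vector of each word into an N x K matrix."""
    return [list(table[token]) for token in tokens]


tokens = ["decision", "tree", "model", "tree"]  # N = 4 words
matrix = document_matrix(tokens, embedding_table)
n_words, k_dims = len(matrix), len(matrix[0])
```

Row i of `matrix` is the vector of the i-th word, so a repeated word contributes identical rows.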

Three Classical Algorithms

3 Decision tree

A decision tree classifies sample data top-down through a tree structure made of nodes and directed edges. Nodes are either internal nodes or leaf nodes: each internal node represents a feature or attribute, and each leaf node represents a class.

Applying ensemble learning to decision trees yields models such as random forests and gradient boosting decision trees.

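What are the common heuristic functions for decision trees?

ID3: maximum information gain, g(D, A) = H(D) - H(D|A)

C4.5: maximum information gain ratio, g_R(D, A) = g(D, A) / H_A(D), where H_A(D) is the entropy of D with respect to the values of feature A

CART: minimum Gini index after the split (equivalently, the largest reduction in Gini impurity)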
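The three criteria can be computed for a categorical feature as sketched below. The function names and the four-sample data set are assumptions; entropy uses base-2 logarithms, and for the Gini index lower values mean purer child nodes.

```python
import math
from collections import Counter


def entropy(labels):
    """H(D): entropy of a list of labels, in bits."""
    total = len(labels)
    return -sum((count / total) * math.log2(count / total)
                for count in Counter(labels).values())


def gini(labels):
    """Gini impurity of a list of labels."""
    total = len(labels)
    return 1.0 - sum((count / total) ** 2
                     for count in Counter(labels).values())


def partition(feature_values, labels):
    """Group labels by the value the feature takes."""
    groups = {}
    for value, label in zip(feature_values, labels):
        groups.setdefault(value, []).append(label)
    return list(groups.values())


def information_gain(feature_values, labels):
    """g(D, A) = H(D) - H(D|A), the ID3 criterion."""
    total = len(labels)
    conditional = sum(len(group) / total * entropy(group)
                      for group in partition(feature_values, labels))
    return entropy(labels) - conditional


def information_gain_ratio(feature_values, labels):
    """g(D, A) / H_A(D), the C4.5 criterion."""
    split_entropy = entropy(feature_values)  # H_A(D)
    if split_entropy == 0:
        return 0.0  # feature takes a single value; assumption: no gain
    return information_gain(feature_values, labels) / split_entropy


def gini_index(feature_values, labels):
    """Weighted Gini impurity of the children, the CART criterion."""
    total = len(labels)
    return sum(len(group) / total * gini(group)
               for group in partition(feature_values, labels))


# Hypothetical data: feature_a separates the classes, feature_b does not.
labels = ["yes", "yes", "no", "no"]
feature_a = ["a", "a", "b", "b"]
feature_b = ["x", "y", "x", "y"]
```

On this data all three criteria prefer `feature_a`: it has the higher information gain and gain ratio and the lower Gini index.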

How is a decision tree pruned?

Pre-pruning stops the growth of the decision tree early. Post-pruning prunes a fully grown, overfitted decision tree.

Pre-pruning can decide when to stop growing the tree in the following ways:

1. Stop when the tree reaches a certain depth.

2. Stop when the number of samples reaching the current node falls below a threshold.

3. Compute the improvement in test-set accuracy for each split, and stop expanding when it falls below a threshold.
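The three stopping rules can be combined into a single check, sketched below. The function name and the default thresholds are hypothetical values picked for the example, not recommendations from the text.

```python
def should_stop_growing(depth, n_samples, accuracy_gain,
                        max_depth=5, min_samples=10, min_accuracy_gain=0.01):
    """Return True if any pre-pruning rule says to stop splitting."""
    if depth >= max_depth:
        return True   # rule 1: the tree has reached the depth limit
    if n_samples < min_samples:
        return True   # rule 2: too few samples reach this node
    if accuracy_gain < min_accuracy_gain:
        return True   # rule 3: the split barely improves accuracy
    return False


# A shallow node with plenty of samples and a useful split keeps growing.
keep_growing = not should_stop_growing(depth=2, n_samples=200,
                                       accuracy_gain=0.05)
```

A tree builder would call this check before splitting each node and turn the node into a leaf when it returns True.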

边栏推荐

- String -- 28. Implement strstr()

- Detailed explanation of IO model (easy to understand)

- Not configured in app.json (uni releases wechat applet)

- Cocoapod installation problems

- Detailed analysis of common command modules of ansible service

- C# unsafe 非托管对象指针转换

- Beijing all in one card listed and sold 68.45% of its equity at 352.888529 million yuan, with a premium rate of 84%

- Maotai ice cream "bucked the trend" and became popular, but its cross-border meaning was not "selling ice cream"

- C operator priority memory formula

- Summary of week 22-07-23

猜你喜欢

After reading this article, I found that my test cases were written in garbage

对话框管理器第二章:创建框架窗口

天然气潮流计算matlab程序

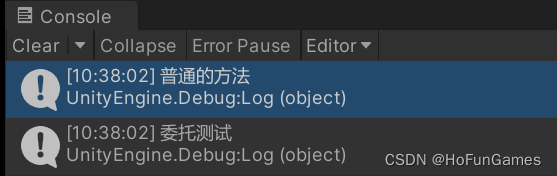

Simple understanding and implementation of unity delegate

茅台冰淇淋“逆势”走红,跨界之意却并不在“卖雪糕”

使用树莓派做Apache2 HA实验

【C语言笔记分享】——动态内存管理malloc、free、calloc、realloc、柔性数组

Typo in static class property declarationeslint

Introduction to Xiaoxiong school

Not configured in app.json (uni releases wechat applet)

随机推荐

【NLP】下一站,Embodied AI

天然气潮流计算matlab程序

北京一卡通以35288.8529万元挂牌出让68.45%股权,溢价率为84%

Nmap security testing tool tutorial

Detailed explanation of address bus, data bus and control bus

Rasa 3.x 学习系列-Rasa FallbackClassifier源码学习笔记

String -- 28. Implement strstr()

Class loading mechanism and parental delegation mechanism

Complete set of JS array common operations

SQL subquery

本机异步网络通信执行快于同步指令

JS judge whether it is an integer

C# 多线程锁整理记录

Csp2021 T3 palindrome

CSDN garbage has no bottom line!

Mmdrawercontroller first loading sidebar height problem

C language -- three ways to realize student information management

IntelliSense of Visual Studio: 'no members available'

Stack and queue - 225. Implement stack with queue

Noip2021 T2 series