Sqoop [Environment Setup 01] Installing and configuring sqoop-1.4.7 on CentOS Linux release 7.5, resolving warnings, and verifying (with the latest Sqoop 1 + Sqoop 2 installation packages + MySQL driver package res

2022-07-26 09:45:00 【Wind】

Sqoop currently comes in two versions, Sqoop1 and Sqoop2. So far Sqoop2 is officially not recommended, because it is not compatible with Sqoop1 and its feature set is incomplete, so Sqoop1 is the preferred choice. This installation uses the latest Sqoop1 release, sqoop-1.4.7.bin-hadoop-2.6.0.tar.gz, and everything below refers to that version.

1. Resource sharing

Link: https://pan.baidu.com/s/1XRZs2PngAnrMczuD7Dn7Kg

Extraction code: w7b9

Included resources: (latest Sqoop1) sqoop-1.4.7.bin-hadoop-2.6.0.tar.gz and sqoop-1.4.7.tar.gz

(latest Sqoop2) sqoop-1.99.7-bin-hadoop200.tar.gz and sqoop-1.99.7.tar.gz

2. Brief introduction

Sqoop is a widely used data migration tool, mainly for importing and exporting data between different storage systems:

- Import: bring data from relational databases such as MySQL and Oracle into distributed storage systems such as HDFS, Hive, and HBase;

- Export: write data from a distributed file system back to a relational database.

Sqoop1 works by converting each command into a MapReduce job that carries out the data migration.
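To make the import direction concrete, here is a minimal sketch of what a Sqoop1 import invocation can look like. The host `db.example.com`, database `shop`, table `orders`, user `etl`, the password file path, and the target directory are all hypothetical placeholders, not values from this setup. The helper `build_import_cmd` is also made up for illustration; it only prints the command so it can be reviewed before being run.

```shell
# Sketch: assemble a `sqoop import` command line and print it for review.
# Every connection detail below is a hypothetical placeholder.
build_import_cmd() {
  jdbc_url="$1"; table="$2"; target_dir="$3"; mappers="${4:-1}"
  # --connect, --username, --password-file, --table, --target-dir and
  # --num-mappers are standard Sqoop1 import options.
  printf '%s ' sqoop import \
    --connect "$jdbc_url" \
    --username etl \
    --password-file /user/etl/.mysql.password \
    --table "$table" \
    --target-dir "$target_dir" \
    --num-mappers "$mappers"
  printf '\n'
}

# Print an example command (nothing is executed against a database).
build_import_cmd "jdbc:mysql://db.example.com:3306/shop" orders /data/shop/orders 1
```

Each such command is submitted as a MapReduce job. With more than one mapper, Sqoop needs a primary key on the table or an explicit `--split-by` column to divide the work.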

3. Prerequisites

Because Sqoop1 converts commands into MapReduce jobs to migrate data, Hadoop must be installed. I installed version 3.1.3; for the installation, see the tutorial 《Hadoop 3.1.3 Standalone Installation and Configuration》

hadoop version

Hadoop 3.1.3
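The prerequisite check above can also be scripted. The following is a minimal sketch, assuming that `hadoop` (and the `java` it depends on) should be reachable through `PATH`; the helper name `require_cmd` is hypothetical.

```shell
# Sketch: report whether a required command is available on PATH.
require_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "ok: $1 found at $(command -v "$1")"
  else
    echo "missing: $1 is not on PATH" >&2
    return 1
  fi
}

# Check the tools this guide relies on without aborting the shell.
for tool in hadoop java; do
  require_cmd "$tool" || echo "install $tool or add it to PATH before continuing"
done
```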

4. Installation and configuration

# 1. Extract and move to /usr/local/sqoop/

tar -zxvf sqoop-1.4.7.bin-hadoop-2.6.0.tar.gz

# Note: the package downloaded from the official site is named 【bin__hadoop】; I renamed the archive to use -, so the extracted directory still has the double underscore

mv sqoop-1.4.7.bin__hadoop-2.6.0/ /usr/local/sqoop/

# 2. Configure environment variables:

vim /etc/profile.d/my_env.sh

# Add

export SQOOP_HOME=/usr/local/sqoop

export PATH=$SQOOP_HOME/bin:$PATH

# Make the environment variables take effect immediately:

# First grant execute permission 【only needed once】

chmod +x /etc/profile.d/my_env.sh

source /etc/profile.d/my_env.sh

# Check

echo $SQOOP_HOME # Printing /usr/local/sqoop means success

# 3. Sqoop configuration

# In ${SQOOP_HOME}/conf, copy sqoop-env-template.sh to sqoop-env.sh and edit the copy

cd ${SQOOP_HOME}/conf

cp sqoop-env-template.sh sqoop-env.sh

vim sqoop-env.sh

# HADOOP_COMMON_HOME and HADOOP_MAPRED_HOME must be set; configure the others when you need them

# Set Hadoop-specific environment variables here.

#Set path to where bin/hadoop is available

export HADOOP_COMMON_HOME=/usr/local/hadoop-3.1.3

#Set path to where hadoop-*-core.jar is available

export HADOOP_MAPRED_HOME=/usr/local/hadoop-3.1.3

#set the path to where bin/hbase is available

#export HBASE_HOME=

#Set the path to where bin/hive is available

#export HIVE_HOME=

#Set the path for where zookeper config dir is

#export ZOOCFGDIR=
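The extract, move, and sqoop-env.sh steps above can be combined into one small function. This is only a sketch under a few assumptions: `install_sqoop` is a hypothetical helper name, the tar in use supports `--strip-components` (GNU tar does), and the two Hadoop variables are appended to the copied template instead of being edited in by hand. Because the archive's top-level directory is stripped, it no longer matters whether it is named with a hyphen or a double underscore.

```shell
# Sketch: unpack a Sqoop tarball into a target directory and create
# conf/sqoop-env.sh from the bundled template.
install_sqoop() {
  archive="$1"; dest="$2"; hadoop_home="$3"
  mkdir -p "$dest" || return 1
  # --strip-components=1 drops the archive's top-level directory.
  tar -xzf "$archive" -C "$dest" --strip-components=1 || return 1
  cp "$dest/conf/sqoop-env-template.sh" "$dest/conf/sqoop-env.sh" || return 1
  # Append the two required settings to the copied template.
  {
    echo "export HADOOP_COMMON_HOME=$hadoop_home"
    echo "export HADOOP_MAPRED_HOME=$hadoop_home"
  } >> "$dest/conf/sqoop-env.sh"
}

# Usage with the values from this guide (needs write access to /usr/local):
# install_sqoop sqoop-1.4.7.bin-hadoop-2.6.0.tar.gz /usr/local/sqoop /usr/local/hadoop-3.1.3
```

The environment variables in /etc/profile.d/my_env.sh still have to be set as described in step 2.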

5. Copy the database driver

Copy the MySQL driver package into the ${SQOOP_HOME}/lib/ directory of the Sqoop1 installation. mysql-connector-java-5.1.47.jar is shared on Baidu Netdisk:

Link: https://pan.baidu.com/s/1X15dNrH-B-U5oxw-H6sn8A

Extraction code: ibaj
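The copy step can be sketched as follows. The helper `install_driver` is a hypothetical name; it assumes the jar has already been downloaded to the current machine and simply refuses to continue if either the jar or the lib directory is missing.

```shell
# Sketch: copy a JDBC driver jar into Sqoop's lib directory and confirm it landed.
install_driver() {
  jar="$1"; sqoop_home="$2"
  [ -f "$jar" ] || { echo "driver jar not found: $jar" >&2; return 1; }
  [ -d "$sqoop_home/lib" ] || { echo "no lib directory under $sqoop_home" >&2; return 1; }
  cp "$jar" "$sqoop_home/lib/" || return 1
  # List the copied file so the result is visible.
  ls -l "$sqoop_home/lib/$(basename "$jar")"
}

# Usage with the file named in this guide:
# install_driver mysql-connector-java-5.1.47.jar "$SQOOP_HOME"
```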

6. Verification

Since Sqoop's bin directory has been added to the PATH environment variable, run the following command directly to verify that the configuration succeeded:

sqoop version

Warning: /usr/local/sqoop/../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /usr/local/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /usr/local/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

2021-09-08 16:52:22,191 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

Sqoop 1.4.7

git commit id 2328971411f57f0cb683dfb79d19d4d19d185dd8

Compiled by maugli on Thu Dec 21 15:59:58 STD 2017

Seeing the version information means the configuration succeeded:

2021-09-08 16:52:22,191 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

Sqoop 1.4.7

The three warnings appear because $HBASE_HOME, $HCAT_HOME, and $ACCUMULO_HOME are not configured; if you do not use HBase, HCatalog, or Accumulo, they can be ignored. At startup Sqoop checks whether these components are configured in the environment variables. To remove the warnings, edit ${SQOOP_HOME}/bin/configure-sqoop and comment out the checks you do not need.

## Moved to be a runtime check in sqoop.

#if [ ! -d "${HBASE_HOME}" ]; then

# echo "Warning: $HBASE_HOME does not exist! HBase imports will fail."

# echo 'Please set $HBASE_HOME to the root of your HBase installation.'

#fi

## Moved to be a runtime check in sqoop.

#if [ ! -d "${HCAT_HOME}" ]; then

# echo "Warning: $HCAT_HOME does not exist! HCatalog jobs will fail."

# echo 'Please set $HCAT_HOME to the root of your HCatalog installation.'

#fi

#if [ ! -d "${ACCUMULO_HOME}" ]; then

# echo "Warning: $ACCUMULO_HOME does not exist! Accumulo imports will fail."

# echo 'Please set $ACCUMULO_HOME to the root of your Accumulo installation.'

#fi
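The same edit can be scripted instead of done by hand. The sketch below assumes the checks in configure-sqoop look exactly like the ones quoted above (an `if [ ! -d "${VAR}" ]; then` line followed later by a bare `fi` line); if your copy of the file differs, the pattern will simply not match and nothing is changed. `comment_out_check` is a hypothetical helper name, and a pristine copy is kept as `configure-sqoop.orig` the first time the file is touched.

```shell
# Sketch: comment out one "does not exist" check in configure-sqoop.
comment_out_check() {
  var="$1"; file="$2"
  # Keep one untouched copy the first time the file is modified.
  [ -f "$file.orig" ] || cp -p "$file" "$file.orig" || return 1
  # Prefix every line from the matching `if` up to the next bare `fi` with '#'.
  sed "/^if \[ ! -d \"\${${var}}\" ]; then\$/,/^fi\$/ s/^/#/" "$file" > "$file.tmp" || return 1
  # Write back through the original file so its permissions are preserved.
  cat "$file.tmp" > "$file" && rm -f "$file.tmp"
}

# Usage for the three checks discussed above:
# for v in HBASE_HOME HCAT_HOME ACCUMULO_HOME; do
#   comment_out_check "$v" "$SQOOP_HOME/bin/configure-sqoop"
# done
```

Running it a second time is harmless, because the already commented `if` line no longer matches the pattern.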

Verify again; the output is now clean:

sqoop version

2021-09-08 17:03:51,446 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

Sqoop 1.4.7

git commit id 2328971411f57f0cb683dfb79d19d4d19d185dd8

Compiled by maugli on Thu Dec 21 15:59:58 STD 2017
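For scripted setups, the version check can be turned into a pass/fail test. This is a small sketch; `has_sqoop_version` is a hypothetical helper that reads `sqoop version` output on standard input and looks for the exact `Sqoop <version>` line shown above.

```shell
# Sketch: succeed only if the input contains the exact line "Sqoop <version>".
has_sqoop_version() {
  grep -qxF "Sqoop $1"
}

# Example using two lines of the output captured in this guide.
printf '%s\n' \
  'INFO sqoop.Sqoop: Running Sqoop version: 1.4.7' \
  'Sqoop 1.4.7' | has_sqoop_version 1.4.7 && echo "version check passed"

# Against a real installation:
# sqoop version 2>&1 | has_sqoop_version 1.4.7 && echo "Sqoop 1.4.7 is installed"
```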

7. Summary

At this point Sqoop1 is installed and configured. Quite a few problems come up in actual use; they will be covered in detail later. Thank you for your support ~

边栏推荐

- Modern medicine in the era of "Internet +"

- Learning notes: what are the common array APIs that change the original array or do not change the original array?

- Development to testing: a six-year road to automation starting from 0

- [untitled]

- 2022年中科磐云——服务器内部信息获取 解析flag

- Use of OpenCV class

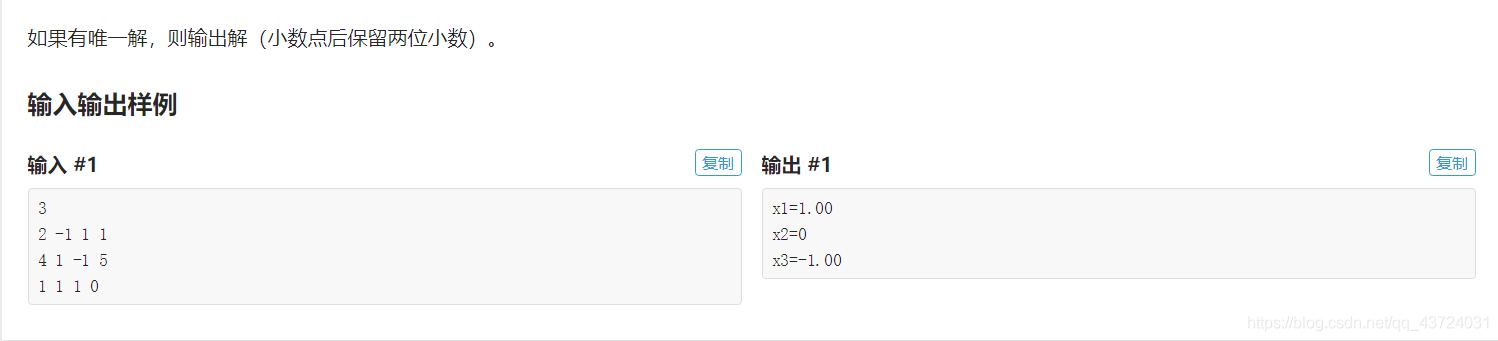

- 2019 ICPC Asia Yinchuan Regional(水题题解)

- Mo team learning summary (II)

- 【Datawhale】【机器学习】糖尿病遗传风险检测挑战赛

- 官方颁发的SSL证书与自签名证书结合实现网站双向认证

猜你喜欢

Fuzzy PID control of motor speed

Fiddler packet capturing tool for mobile packet capturing

新增市场竞争激烈,中国移动被迫推出限制性超低价5G套餐

Neural network and deep learning-6-support vector machine 1-pytorch

CSV data file settings of JMeter configuration components

附加到进程之后,断点显示“当前不会命中断点 还没有为该文档加载任何符号”

The diagram of user login verification process is well written!

SSG framework Gatsby accesses the database and displays it on the page

Gauss elimination

Alibaba cloud technology expert haochendong: cloud observability - problem discovery and positioning practice

随机推荐

Audio and video knowledge

asp. Net using redis cache (2)

m进制数str转n进制数

莫队学习笔记(一)

OFDM Lecture 16 - OFDM

The combination of officially issued SSL certificate and self signed certificate realizes website two-way authentication

2021年山东省中职组“网络空间安全”B模块windows渗透(解析)

如何添加一个PDB

uni-app学习总结

Application of Gauss elimination

Qt随手笔记(二)Edit控件及float,QString转化、

面试题目大赏

In the same CONDA environment, install pytroch first and then tensorflow

How to add a PDB

Does volatile rely on the MESI protocol to solve the visibility problem? (top)

Neural network and deep learning-6-support vector machine 1-pytorch

The problem of accessing certsrv after configuring ADCs

在同一conda环境下先装Pytroch后装TensorFlow

反射机制的原理是什么?

JS one line code to obtain the maximum and minimum values of the array