Summary of the differences between copy_(), detach(), .data, and clone() in PyTorch

2022-06-22 01:40:00 【Not late not late】

1. clone

b = a.clone()

Creates a tensor with the same shape, dtype, and device as the source tensor. The new tensor does not share memory with the source, but gradients flowing into the new tensor (b) are accumulated onto the source tensor (a). Note that after b = a.clone(), b is not a leaf node, so b.grad is not populated by default.

import torch

a = torch.tensor([1.,2.,3.],requires_grad=True)

b = a.clone()

print('=========================== Do not share address =========================')

print(type(a), a.data_ptr())

print(type(b), b.data_ptr())

print('===========================clone Then output... Respectively =========================')

print('a: ', a) # a: tensor([1., 2., 3.], requires_grad=True)

print('b: ', b) #b: tensor([1., 2., 3.], grad_fn=<CloneBackward0>)

c = a ** 2

d = b ** 3

print('=========================== Back propagation =========================')

c.sum().backward()  # dc/da = 2 * a

print('a.grad: ', a.grad)  # a.grad: tensor([2., 4., 6.])

d.sum().backward()  # dd/db = 3 * b**2, which flows back through clone() to a

print('a.grad: ', a.grad)  # a.grad: tensor([ 5., 16., 33.]); the gradient from b is accumulated onto a

# print('b.grad: ', b.grad)  # b.grad: None; b is not a leaf of the computation graph, so its gradient is not retained

Output:

=========================== Do not share address =========================

<class 'torch.Tensor'> 93899916787840

<class 'torch.Tensor'> 93899917014528

===========================clone Then output... Respectively =========================

a: tensor([1., 2., 3.], requires_grad=True)

b: tensor([1., 2., 3.], grad_fn=<CloneBackward0>)

=========================== Back propagation =========================

a.grad: tensor([2., 4., 6.])

a.grad: tensor([ 5., 16., 33.])
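If the gradient of the cloned tensor itself is needed, one option is to ask autograd to keep it with retain_grad(). The snippet below is a minimal sketch and not part of the original example; it reuses the names a, b, and d and only backpropagates through d, so a.grad holds just the contribution that came through the clone.

```python
import torch

a = torch.tensor([1., 2., 3.], requires_grad=True)
b = a.clone()
b.retain_grad()  # keep the gradient of this non-leaf tensor after backward()

d = b ** 3
d.sum().backward()  # dd/db = 3 * b**2

print(b.is_leaf)  # False
print(b.grad)     # tensor([ 3., 12., 27.])
print(a.grad)     # tensor([ 3., 12., 27.]), passed through clone() unchanged
```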

2. copy_

b = torch.empty_like(a).copy_(a)

copy_() needs a target tensor: you must first construct b and then copy a into it, whereas clone() does not need one.

copy_() does a job similar to clone(), but with some differences. The object that calls copy_() is the target tensor, the argument is the source tensor to copy from, and the call returns the target tensor. For clone(), the calling object is the source tensor and a new tensor is returned. clone() can also be called as torch.clone(), with the source tensor passed as the argument.

import torch

a = torch.tensor([1., 2., 3.],requires_grad=True)

b = torch.empty_like(a).copy_(a)

print('====================copy_ Memory is different ======================')

print(a.data_ptr())

print(b.data_ptr())

print('====================copy_ Print ======================')

print(a)

print(b)

c = a ** 2

d = b ** 3

print('===================c Back propagation =======================')

c.sum().backward()

print(a.grad)  # tensor([2., 4., 6.])

print('===================d Back propagation =======================')

d.sum().backward()

print(a.grad)  # tensor([ 5., 16., 33.]); the gradient from b is accumulated onto the source tensor

# print(b.grad)  # None, because b is not a leaf tensor

Output:

====================copy_ Memory is different ======================

94358408685568

94358463065088

====================copy_ Print ======================

tensor([1., 2., 3.], requires_grad=True)

tensor([1., 2., 3.], grad_fn=<CopyBackwards>)

===================c Back propagation =======================

tensor([2., 4., 6.])

===================d Back propagation =======================

tensor([ 5., 16., 33.])
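Because copy_() writes into a tensor that already exists, it can also fill a pre-allocated buffer whose dtype or shape differs from the source. The sketch below illustrates this; the names src and buf are made up for the illustration and do not come from the example above.

```python
import torch

src = torch.tensor([1, 2, 3])                  # int64 source
buf = torch.zeros(2, 3, dtype=torch.float32)   # pre-allocated float32 target

ptr_before = buf.data_ptr()
out = buf.copy_(src)  # src is broadcast to shape (2, 3) and cast to float32

print(out.data_ptr() == ptr_before)  # True: the target's storage is reused
print(buf.dtype)                     # torch.float32
print(buf)                           # both rows hold 1., 2., 3.
```

clone(), by contrast, always allocates a new tensor with the dtype and shape of its source.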

3. detach

detach() returns a new tensor related to the tensor it is called on. The new tensor shares data memory with the source tensor (so the two always hold the same values), but its requires_grad is False and it carries none of the source tensor's computation graph information.

import torch

a = torch.tensor([1., 2., 3.],requires_grad=True)

b = a.detach()

print('========================= Shared memory ==============================')

print(a.data_ptr())

print(b.data_ptr())

print('========================= Original value and detach==============================')

print(a)

print(b)

c = a * 2

d = b * 3  # b is detached, so d has no grad_fn and cannot be backpropagated

print('========================= Original value back propagation ==============================')

c.sum().backward()

print(a.grad)

print('=========================detach No back propagation ==============================')

# d.sum().backward()  # would raise a RuntimeError, because d does not require grad

Output:

========================= Shared memory ==============================

94503766034432

94503766034432

========================= Original value and detach==============================

tensor([1., 2., 3.], requires_grad=True)

tensor([1., 2., 3.])

========================= Original value back propagation ==============================

tensor([2., 2., 2.])

=========================detach No back propagation ==============================

Because b has been detached from the computation graph, PyTorch does not track the computations that follow from it. To bring b back into a computation graph, call b.requires_grad_().

PyTorch then tracks computations on b again, but gradients do not flow back from b to a: the gradient is cut off at b. Because b and a share memory, however, a and b always hold the same values.
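A short sketch of this behaviour, reusing the names a and b from the example above (the torch.no_grad() block is only there so that the in-place write to a leaf tensor is allowed):

```python
import torch

a = torch.tensor([1., 2., 3.], requires_grad=True)
b = a.detach().requires_grad_()  # b is a new leaf that shares storage with a

(b * 3).sum().backward()
print(b.grad)  # tensor([3., 3., 3.])
print(a.grad)  # None: the gradient stops at b and never reaches a

with torch.no_grad():
    b[0] = 10.  # in-place write through b
print(a)        # tensor([10.,  2.,  3.], requires_grad=True): a sees the change
```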

4. data

The .data attribute returns a tensor's underlying data. What it returns is the same as what detach() returns: a tensor that shares memory with the source and carries no gradient information. However, .data can be unsafe. Because the two tensors share memory, changing one changes the other, and autograd does not notice a change made through .data, so backpropagation silently produces a wrong gradient. With detach(), the same in-place change makes backpropagation raise an error instead.

import torch

x = torch.FloatTensor([[1., 2.]])  # x.requires_grad is False by default; only floating-point tensors can require gradients

w1 = torch.FloatTensor([[2.], [1.]])

w2 = torch.FloatTensor([3.])

w1.requires_grad = True

w2.requires_grad = True

d = torch.matmul(x, w1)  # d.requires_grad is True because w1 requires grad (the same holds for addition)

d_ = d.data  # d and d_ share memory; d_.requires_grad is False

# d_ = d.detach()  # d and d_ also share memory, but the in-place write below then makes backward() raise an error

f = torch.matmul(d, w2)

d_[:] = 1  # changing d_ in place also changes the value of d

f.backward()  # with .data this silently yields a wrong gradient; with detach() it raises an error
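To see both outcomes side by side, the example above can be wrapped in a small helper. This is only a sketch: the function name run and its use_data flag are made up for the comparison, and torch.tensor is used in place of torch.FloatTensor.

```python
import torch

def run(use_data: bool) -> torch.Tensor:
    x = torch.tensor([[1., 2.]])
    w1 = torch.tensor([[2.], [1.]], requires_grad=True)
    w2 = torch.tensor([3.], requires_grad=True)
    d = torch.matmul(x, w1)                  # d holds the value 4.
    d_ = d.data if use_data else d.detach()  # both share memory with d
    f = torch.matmul(d, w2)                  # autograd needs d later to compute w2.grad
    d_[:] = 1                                # in-place write through the shared memory
    f.backward()
    return w2.grad

print(run(use_data=True))  # tensor([1.]): silently wrong, the correct gradient is 4.

try:
    run(use_data=False)
except RuntimeError as err:
    print('detach():', type(err).__name__)  # backward() refuses to use the modified d
```

The write through .data goes unnoticed by autograd, while the write through the detached tensor is detected during backward(), which is why detach() is generally the safer choice.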

Reference resources :

边栏推荐

- Brief introduction to jpom: simple and light low intrusive online construction, automatic deployment, daily operation and maintenance, and project monitoring software

- Find find files with different extensions

- How to modify the dictionary

- 技术探秘: 360数科夺得ICDAR OCR竞赛世界第一

- [number theory] leetcode1010 Pairs of Songs With Total Durations Divisible by 60

- Documenter l'utilisation de webcraper

- Application of C language dynamic memory function

- 容器云是什么意思?与堡垒机有什么区别?

- 亚马逊测评浏览器,亚马逊测评风控核心知识点

- Point cloud registration -- 4pcs principle and Application

猜你喜欢

机器学习 Pytorch实现案例 LSTM案例(航班人数预测)

对标Copilot,国内首个:自然语言一键生成方法级代码aiXcoder XL来了

![[解决方案] 明厨亮灶视频边缘计算网关解决方案](/img/67/20a9ece2dc7d3a842aff1fc651e4fc.png)

[解决方案] 明厨亮灶视频边缘计算网关解决方案

Shardingsphere-proxy-5.0.0 implementation of distributed hash modulo fragmentation (4)

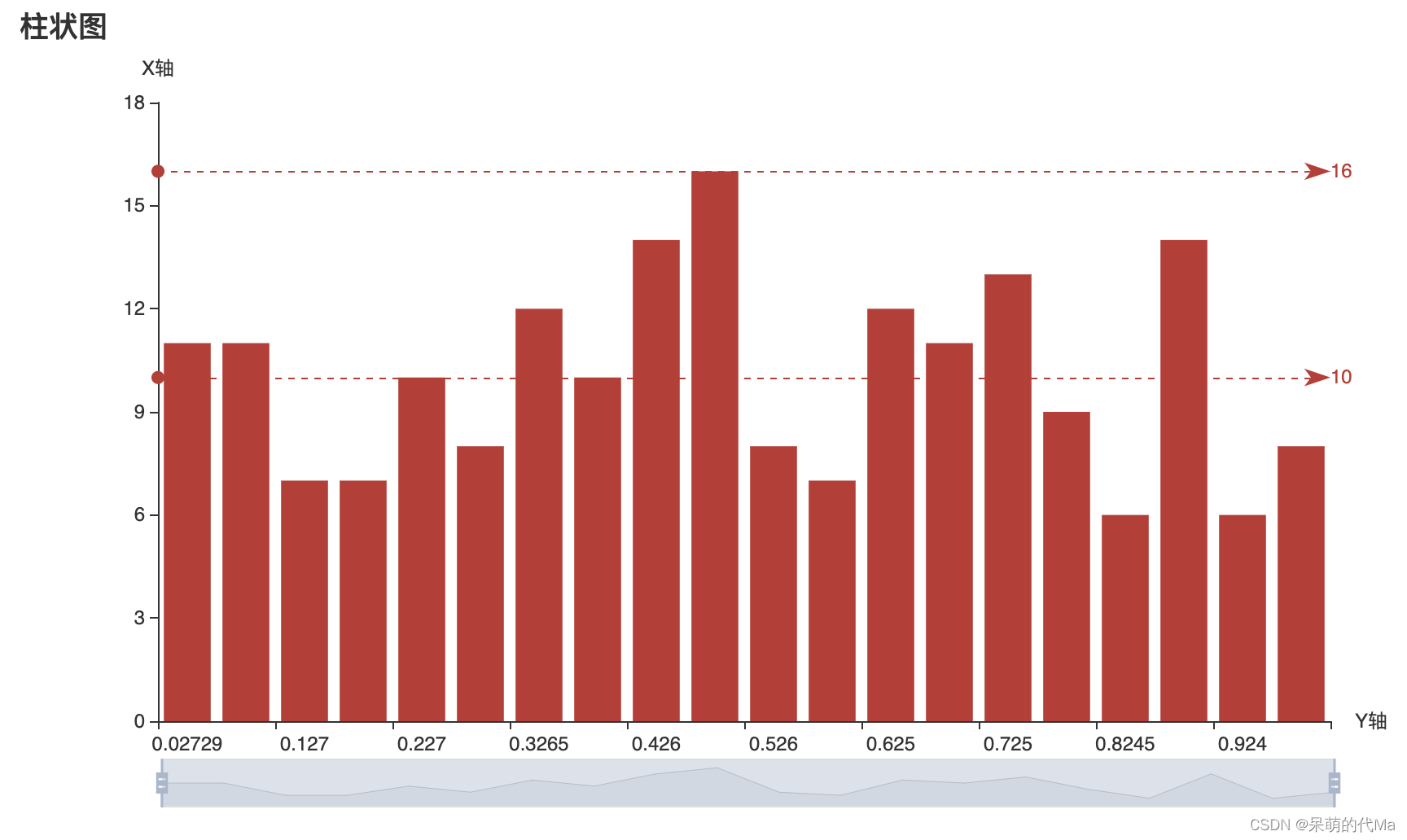

Making unequal interval histogram with Matplotlib

将列表分箱,并通过Pyechart绘制柱状图

LeetCode 5242. 兼具大小写的最好英文字母

Counter完之后,想统计字符串长度大于2的结果

数学知识复习:三重积分

DAST 黑盒漏洞扫描器 第四篇:扫描性能

随机推荐

有没有亚马逊跨境电商适合用的浏览器排名

Standing at the digital tuyere, how can tooling enterprises "fly"

Use of listctl virtual mode under wince

Jpom 简介: 简而轻的低侵入式在线构建、自动部署、日常运维、项目监控软件

Riscv cache

Google multi user anti Association tool

2011. variable value after operation

Winform项目控制台调试方式

亚马逊测评系统哪个好?

Creating a successful paradigm for cross-border e-commerce: Amazon cloud technology helps sellers lay out the next growth point

Brief description of advantages and disadvantages of cloud fortress distributed cluster deployment

技术探秘: 360数科夺得ICDAR OCR竞赛世界第一

How to make your website quickly found by search engines

Example and description of lvgl Demo 1

Interview question catalog collection

google多用户防止关联工具

LeetCode 5242. Best English letters with both upper and lower case

第 24 章 基于 Simulink 进行图像和视频处理--matlab深度学习实战整理

How to modify the dictionary

第八届“互联网+”大赛|百度杰出架构师毕然解读产业赛道命题