
What is a zipper table in a MySQL database

2022-06-21 07:31:00 【Yisu cloud】


This article explains what a zipper table is and how to build one in a MySQL-based data warehouse. The method is simple and practical, and the examples below walk through it step by step.

Background of zipper tables

When designing the data model of a data warehouse, the following requirements often come together:

1. The table holds a large amount of data;

2. Some fields in the table are updated over time, such as a user's address, a product description, or an order status;

3. You need to view historical snapshots for a point in time or a period, for example the status of an order at a given moment in the past, or how many times a user's record was updated during a past period;

4. The proportion and frequency of changes are small, for example 10 million members in total with about 100,000 new or changed records per day;

5. Keeping a full copy of the table every day would store a large amount of unchanged information in every copy, which wastes a great deal of storage.

There are several options for such a table:

Option 1: Keep only the latest full copy, for example by using Sqoop to extract the latest full data into Hive every day.

Option 2: Keep a full snapshot (slice) of the data every day.

Option 3: Use a zipper table.

Comparison of the options

Option 1

This option needs little explanation. It is easy to implement: every day, drop the previous day's data and extract the latest full copy again.

The advantages are obvious: it saves space, and everyday use is convenient because you do not need to filter on a time partition when querying the table.

The disadvantage is just as obvious: there is no historical data. To look up old records you have to go through some other source, such as a transaction log table.

Option 2

A full snapshot every day is a relatively safe approach, and the historical data is all there.

The disadvantage is that it takes up far too much storage. If you keep a full copy of the table every day, each copy stores a large amount of unchanged information, which is a huge waste of storage.

Of course, some trade-offs are possible, for example keeping only the most recent month of data. However, requirements are unpredictable, and the life cycle of the data is not something we can fully control.

Option 3: zipper table

A zipper table basically covers all of these needs.

First, it is a compromise on space. It is not as small as option 1, but its daily increment may be only one thousandth or even one ten-thousandth of that of option 2.

It also meets the needs that option 2 serves: you can get the latest data, and by adding filter conditions you can also get historical data.

So a zipper table is well worth using here.

Zipper table concept

A zipper table is a data model, named after the way the table stores data in data warehouse design. As the name suggests, a zipper table records history: it records every change to an entity from its initial state up to its current state. A zipper table avoids the massive storage problem caused by storing all records every day, and it is also a common way to handle slowly changing data (SCD type 2).

Baidu Encyclopedia's explanation: a zipper table maintains both the historical states and the latest state of the data. Depending on the zipper granularity, a zipper table is effectively a snapshot that has been optimized by removing the records that did not change. With a zipper table it is easy to restore the customer records as of any zipper time point.

Zipper table algorithm

1. Collect the full data of the current day into the ND (NowDay, current day) table;

2. Take yesterday's full data from the history table and store it in the OD (OldDay, previous day) table;

3. Compare all fields of the two tables. (ND - OD) is the data that is new or changed on the current day, that is, the day's increment, denoted W_I;

4. Compare all fields of the two tables. (OD - ND) is the data whose state has ended and whose chain must be closed, that is, whose END_DATE must be modified, denoted W_U;

5. Insert the entire contents of W_I into the history table as new records, with start_date set to the current day and end_date set to the maximum value, for example '9999-12-31';

6. Update the W_U part of the history table: start_date stays unchanged and end_date is changed to the current day. This is the chain-closing operation. Compare the open records of the history table (OD) with W_U on all fields except START_DATE and END_DATE; for the records they have in common, change END_DATE to the current day, which marks the record as expired.
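The six steps above can be sketched in Python with plain sets. This is a minimal illustration rather than the article's implementation: the function names `diff_snapshots` and `apply_day`, and the in-memory list standing in for the history table, are assumptions made for the example.

```python
from datetime import date

MAX_DATE = date(9999, 12, 31)  # open-ended end_date, as in step 5


def diff_snapshots(nd, od):
    """Full-field comparison of two sets of row tuples (steps 3 and 4)."""
    w_i = nd - od  # new or changed today: rows to insert
    w_u = od - nd  # state ended: rows whose chain must be closed
    return w_i, w_u


def apply_day(history, nd, today):
    """Apply one day's full snapshot `nd` to `history` (hypothetical helper).

    `history` is a list of dicts with keys row, start_date, end_date.
    """
    # Step 2: yesterday's full data is the set of still-open history rows.
    od = {h["row"] for h in history if h["end_date"] == MAX_DATE}
    w_i, w_u = diff_snapshots(nd, od)
    # Step 6: close the chain for rows that are no longer current.
    for h in history:
        if h["end_date"] == MAX_DATE and h["row"] in w_u:
            h["end_date"] = today
    # Step 5: insert the increment as new open rows.
    for row in sorted(w_i):
        history.append({"row": row, "start_date": today, "end_date": MAX_DATE})
    return history


history = []
apply_day(history, {("001", "Create order"), ("002", "Create order")}, date(2012, 6, 20))
apply_day(
    history,
    {("001", "Pay to complete"), ("002", "Create order"), ("004", "Create order")},
    date(2012, 6, 21),
)
for h in history:
    print(h["row"], h["start_date"], h["end_date"])
```

Only order 001 changes between the two days, so only its old row is closed; order 002 keeps its single open row.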

Zipper table example 1

A simple example: suppose there is an order table.

On June 20 it has 3 records:

| Order creation date | Order no. | Order status |
|---|---|---|
| 2012-06-20 | 001 | Create order |
| 2012-06-20 | 002 | Create order |
| 2012-06-20 | 003 | Pay to complete |

By June 21 the table has 5 records:

| Order creation date | Order no. | Order status |
|---|---|---|
| 2012-06-20 | 001 | Pay to complete |
| 2012-06-20 | 002 | Create order |
| 2012-06-20 | 003 | Pay to complete |
| 2012-06-21 | 004 | Create order |
| 2012-06-21 | 005 | Create order |

By June 22 the table has 6 records:

| Order creation date | Order no. | Order status |
|---|---|---|
| 2012-06-20 | 001 | Pay to complete |
| 2012-06-20 | 002 | Create order |
| 2012-06-20 | 003 | Shipped |
| 2012-06-21 | 004 | Create order |
| 2012-06-21 | 005 | Pay to complete |
| 2012-06-22 | 006 | Create order |

How to keep this table in the data warehouse:

1. Keep only one full copy. The data is then the same as the records of June 22, and a question such as "what was the status of order 001 on June 21" cannot be answered;

2. Keep a full copy every day. The table in the data warehouse then has 14 records, but many records are stored repeatedly without any change, such as orders 002 and 004. With a large data volume this wastes a great deal of storage.

If a historical zipper table is designed in the data warehouse to store this table, it looks like this:

| Order creation date | Order no. | Order status | dw_begin_date | dw_end_date |
|---|---|---|---|---|
| 2012-06-20 | 001 | Create order | 2012-06-20 | 2012-06-20 |
| 2012-06-20 | 001 | Pay to complete | 2012-06-21 | 9999-12-31 |
| 2012-06-20 | 002 | Create order | 2012-06-20 | 9999-12-31 |
| 2012-06-20 | 003 | Pay to complete | 2012-06-20 | 2012-06-21 |
| 2012-06-20 | 003 | Shipped | 2012-06-22 | 9999-12-31 |
| 2012-06-21 | 004 | Create order | 2012-06-21 | 9999-12-31 |
| 2012-06-21 | 005 | Create order | 2012-06-21 | 2012-06-21 |
| 2012-06-21 | 005 | Pay to complete | 2012-06-22 | 9999-12-31 |
| 2012-06-22 | 006 | Create order | 2012-06-22 | 9999-12-31 |

Notes:

1. dw_begin_date is the start of the record's life cycle, and dw_end_date is the end of the record's life cycle. In this example dw_end_date is inclusive: it is the last day on which the record was valid;

2. dw_end_date = '9999-12-31' means the record is currently valid;

3. To query all currently valid records: select * from order_his where dw_end_date = '9999-12-31';

4. To query the historical snapshot for 2012-06-21: select * from order_his where dw_begin_date <= '2012-06-21' and dw_end_date >= '2012-06-21'. This statement returns the following records:

| Order creation date | Order no. | Order status | dw_begin_date | dw_end_date |
|---|---|---|---|---|
| 2012-06-20 | 001 | Pay to complete | 2012-06-21 | 9999-12-31 |
| 2012-06-20 | 002 | Create order | 2012-06-20 | 9999-12-31 |
| 2012-06-20 | 003 | Pay to complete | 2012-06-20 | 2012-06-21 |
| 2012-06-21 | 004 | Create order | 2012-06-21 | 9999-12-31 |
| 2012-06-21 | 005 | Create order | 2012-06-21 | 2012-06-21 |

This matches the source table's records for June 21 exactly:

| Order creation date | Order no. | Order status |
|---|---|---|
| 2012-06-20 | 001 | Pay to complete |
| 2012-06-20 | 002 | Create order |
| 2012-06-20 | 003 | Pay to complete |
| 2012-06-21 | 004 | Create order |
| 2012-06-21 | 005 | Create order |

As this shows, a historical zipper table meets the need for historical data while saving a great deal of storage.
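To check the two queries from the notes, the zipper table of this example can be loaded into an in-memory SQLite database. This is only a sketch: SQLite stands in for MySQL, `order_his`, `dw_begin_date`, and `dw_end_date` come from the article, and the column names `create_date`, `order_id`, and `status` as well as the helper `snapshot` are assumed for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE order_his (create_date TEXT, order_id TEXT, status TEXT, "
    "dw_begin_date TEXT, dw_end_date TEXT)"
)
conn.executemany(
    "INSERT INTO order_his VALUES (?, ?, ?, ?, ?)",
    [
        ("2012-06-20", "001", "Create order", "2012-06-20", "2012-06-20"),
        ("2012-06-20", "001", "Pay to complete", "2012-06-21", "9999-12-31"),
        ("2012-06-20", "002", "Create order", "2012-06-20", "9999-12-31"),
        ("2012-06-20", "003", "Pay to complete", "2012-06-20", "2012-06-21"),
        ("2012-06-20", "003", "Shipped", "2012-06-22", "9999-12-31"),
        ("2012-06-21", "004", "Create order", "2012-06-21", "9999-12-31"),
        ("2012-06-21", "005", "Create order", "2012-06-21", "2012-06-21"),
        ("2012-06-21", "005", "Pay to complete", "2012-06-22", "9999-12-31"),
        ("2012-06-22", "006", "Create order", "2012-06-22", "9999-12-31"),
    ],
)


def snapshot(day):
    """Rows valid on `day`; dw_end_date is inclusive in this example."""
    return conn.execute(
        "SELECT order_id, status FROM order_his "
        "WHERE dw_begin_date <= ? AND dw_end_date >= ? ORDER BY order_id",
        (day, day),
    ).fetchall()


# Currently valid records: the open-ended rows.
current = conn.execute(
    "SELECT order_id, status FROM order_his "
    "WHERE dw_end_date = '9999-12-31' ORDER BY order_id"
).fetchall()

print(snapshot("2012-06-21"))
print(current)
```

Because the dates are stored as `YYYY-MM-DD` strings, plain string comparison orders them correctly, which is what both queries rely on.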

Zipper table example 2

The history table may hold only a few records for a person's whole life, which avoids the massive storage caused by recording the customer's status every day:

| Name | Start date | End date | Status |
|---|---|---|---|
| client | 19000101 | 19070901 | H At home |
| client | 19070901 | 19130901 | A Primary school |
| client | 19130901 | 19160901 | B Junior high school |
| client | 19160901 | 19190901 | C High school |
| client | 19190901 | 19230901 | D University |
| client | 19230901 | 19601231 | E Company |
| client | 19601231 | 29991231 | H Retired at home |

Each record above excludes its end date. For example, on 19070901 the client is already in status A, not H. So, except for the last record, whose status has not changed so far, every record is no longer in effect on its own end date. This can be understood as "counting the head but not the tail": the interval includes the start date and excludes the end date.
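The "head but not the tail" rule is a half-open interval test, `start <= day < end`. A small sketch of a lookup over the records in the table above; the function name `status_on` is an assumption for illustration.

```python
# (start date, end date, status) rows from the table above.
RECORDS = [
    ("19000101", "19070901", "H At home"),
    ("19070901", "19130901", "A Primary school"),
    ("19130901", "19160901", "B Junior high school"),
    ("19160901", "19190901", "C High school"),
    ("19190901", "19230901", "D University"),
    ("19230901", "19601231", "E Company"),
    ("19601231", "29991231", "H Retired at home"),
]


def status_on(day, records=RECORDS):
    """Return the status valid on `day` (a YYYYMMDD string), or None."""
    for start, end, status in records:
        # Half-open interval: the start date counts, the end date does not.
        if start <= day < end:
            return status
    return None


print(status_on("19070831"))  # last day of the first record
print(status_on("19070901"))  # the boundary day belongs to the next record
```

With half-open intervals, each date matches at most one record per entity, so consecutive records can share a boundary date without overlapping.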

Zipper table implementation method

The statements below are templates in Teradata-style SQL (volatile tables); xx stands for the column list and xxxx for the table name.

Step 1. Define two temporary tables, one for the full data of the current day and one for the data that needs to be inserted or updated:

    CREATE VOLATILE TABLE VT_xxxx_NEW AS xxxx WITH NO DATA ON COMMIT PRESERVE ROWS;
    CREATE VOLATILE SET TABLE VT_xxxx_CHG, NO LOG AS xxxx WITH NO DATA ON COMMIT PRESERVE ROWS;

Step 2. Load the full data of the current day:

    INSERT INTO VT_xxxx_NEW (xx) SELECT xx, cur_date, max_date FROM xxxx_source;

Step 3. Extract the new or changed data from the VT_xxxx_NEW temporary table into the VT_xxxx_CHG temporary table:

    INSERT INTO VT_xxxx_CHG (xx)
    SELECT xx FROM VT_xxxx_NEW
    WHERE (xx) NOT IN (SELECT xx FROM xxxx_HIS WHERE end_date = 'max_date');

Step 4. Close the expired records in the history table by setting their end_date to the current date:

    UPDATE A1 FROM xxxx_HIS A1, VT_xxxx_CHG A2
    SET End_Date = 'current_date'
    WHERE A1.xx = A2.xx AND A1.End_Date = 'max_date';

Step 5. Insert the new or changed data into the target table:

    INSERT INTO xxxx_HIS SELECT * FROM VT_xxxx_CHG;
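The five steps can be tried end to end with Python's built-in sqlite3 module. This is a sketch under stated assumptions, not the article's code: SQLite temporary tables stand in for the volatile tables, and the names `his`, `vt_new`, `vt_chg`, `load_day`, and the two-column row shape are hypothetical.

```python
import sqlite3

MAX_DATE = "9999-12-31"

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE his (goods_id TEXT, status TEXT, start_date TEXT, end_date TEXT)"
)


def load_day(source_rows, today):
    """Run the five steps for one day; source_rows is [(goods_id, status), ...]."""
    cur = conn.cursor()
    # Step 1: two empty work tables shaped like the history table.
    cur.execute("DROP TABLE IF EXISTS vt_new")
    cur.execute("DROP TABLE IF EXISTS vt_chg")
    cur.execute("CREATE TEMP TABLE vt_new AS SELECT * FROM his WHERE 0")
    cur.execute("CREATE TEMP TABLE vt_chg AS SELECT * FROM his WHERE 0")
    # Step 2: today's full data, stamped with today and the max date.
    cur.executemany(
        "INSERT INTO vt_new VALUES (?, ?, ?, ?)",
        [(gid, status, today, MAX_DATE) for gid, status in source_rows],
    )
    # Step 3: rows without an identical open history row are new or changed.
    cur.execute(
        """INSERT INTO vt_chg
           SELECT n.* FROM vt_new n
           WHERE NOT EXISTS (
               SELECT 1 FROM his h
               WHERE h.end_date = ?
                 AND h.goods_id = n.goods_id
                 AND h.status = n.status)""",
        (MAX_DATE,),
    )
    # Step 4: close the open history rows that are being replaced.
    cur.execute(
        "UPDATE his SET end_date = ? WHERE end_date = ? "
        "AND goods_id IN (SELECT goods_id FROM vt_chg)",
        (today, MAX_DATE),
    )
    # Step 5: append the new or changed rows to the history table.
    cur.execute("INSERT INTO his SELECT * FROM vt_chg")
    conn.commit()


load_day([("001", "To audit"), ("002", "For sale")], "2019-12-20")
load_day([("001", "For sale"), ("002", "For sale"), ("005", "To audit")], "2019-12-21")
rows = conn.execute("SELECT * FROM his ORDER BY goods_id, start_date").fetchall()
for row in rows:
    print(row)
```

Like the template above, this sketch closes a row only when a changed version of the same key arrives; rows deleted from the source stay open and would need separate handling.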

Product data as an example

There is a product table t_product with the following structure:

| Column | Type | Description |
|---|---|---|
| goods_id | varchar(50) | Product id |
| goods_status | varchar(50) | Product status (To audit, For sale, On sale, deleted) |
| createtime | varchar(50) | Product creation date |
| modifytime | varchar(50) | Product modification date |

The data on December 20, 2019 is as follows:

| goods_id | goods_status | createtime | modifytime |
|---|---|---|---|
| 001 | To audit | 2019-12-18 | 2019-12-20 |
| 002 | For sale | 2019-12-19 | 2019-12-20 |
| 003 | On sale | 2019-12-20 | 2019-12-20 |
| 004 | deleted | 2019-12-15 | 2019-12-20 |

A product's status changes over time, and we need to keep the full history of those changes.

Option 1: snapshot each day's data into the warehouse

This option keeps a full copy every day and synchronizes all the data into the data warehouse. Many records are stored repeatedly without any change.

December 20 (4 rows)

| goods_id | goods_status | createtime | modifytime |
|---|---|---|---|
| 001 | To audit | 2019-12-18 | 2019-12-20 |
| 002 | For sale | 2019-12-19 | 2019-12-20 |
| 003 | On sale | 2019-12-20 | 2019-12-20 |
| 004 | deleted | 2019-12-15 | 2019-12-20 |

December 21 (10 rows)

| goods_id | goods_status | createtime | modifytime |
|---|---|---|---|
| Snapshot data for December 20 | | | |
| 001 | To audit | 2019-12-18 | 2019-12-20 |
| 002 | For sale | 2019-12-19 | 2019-12-20 |
| 003 | On sale | 2019-12-20 | 2019-12-20 |
| 004 | deleted | 2019-12-15 | 2019-12-20 |
| Snapshot data for December 21 | | | |
| 001 | For sale (changed from To audit) | 2019-12-18 | 2019-12-21 |
| 002 | For sale | 2019-12-19 | 2019-12-20 |
| 003 | On sale | 2019-12-20 | 2019-12-20 |
| 004 | deleted | 2019-12-15 | 2019-12-20 |
| 005 (new product) | To audit | 2019-12-21 | 2019-12-21 |
| 006 (new product) | To audit | 2019-12-21 | 2019-12-21 |

December 22 (18 rows)

| goods_id | goods_status | createtime | modifytime |
|---|---|---|---|
| Snapshot data for December 20 | | | |
| 001 | To audit | 2019-12-18 | 2019-12-20 |
| 002 | For sale | 2019-12-19 | 2019-12-20 |
| 003 | On sale | 2019-12-20 | 2019-12-20 |
| 004 | deleted | 2019-12-15 | 2019-12-20 |
| Snapshot data for December 21 | | | |
| 001 | For sale (changed from To audit) | 2019-12-18 | 2019-12-21 |
| 002 | For sale | 2019-12-19 | 2019-12-20 |
| 003 | On sale | 2019-12-20 | 2019-12-20 |
| 004 | deleted | 2019-12-15 | 2019-12-20 |
| 005 | To audit | 2019-12-21 | 2019-12-21 |
| 006 | To audit | 2019-12-21 | 2019-12-21 |
| Snapshot data for December 22 | | | |
| 001 | For sale | 2019-12-18 | 2019-12-21 |
| 002 | For sale | 2019-12-19 | 2019-12-20 |
| 003 | deleted (changed from On sale) | 2019-12-20 | 2019-12-22 |
| 004 | deleted | 2019-12-15 | 2019-12-20 |
| 005 | To audit | 2019-12-21 | 2019-12-21 |
| 006 | deleted (changed from To audit) | 2019-12-21 | 2019-12-22 |
| 007 | To audit | 2019-12-22 | 2019-12-22 |
| 008 | To audit | 2019-12-22 | 2019-12-22 |

MySQL data warehouse code implementation

MySQL initialization

In MySQL, create the lalian database and the product table to serve as the raw data layer:

    -- Create the database
    create database if not exists lalian;
    -- Create the product table
    create table if not exists `lalian`.`t_product`(
        goods_id varchar(50),      -- Product id
        goods_status varchar(50),  -- Product status
        createtime varchar(50),    -- Product creation time
        modifytime varchar(50)     -- Product modification time
    );

In MySQL, create the ods and dw layer tables to simulate the data warehouse:

    -- ods layer product table
    create table if not exists `lalian`.`ods_t_product`(
        goods_id varchar(50),      -- Product id
        goods_status varchar(50),  -- Product status
        createtime varchar(50),    -- Product creation time
        modifytime varchar(50),    -- Product modification time
        cdat varchar(10)           -- Simulates a Hive partition
    ) default character set = 'utf8';
    -- dw layer product table
    create table if not exists `lalian`.`dw_t_product`(
        goods_id varchar(50),      -- Product id
        goods_status varchar(50),  -- Product status
        createtime varchar(50),    -- Product creation time
        modifytime varchar(50),    -- Product modification time
        cdat varchar(10)           -- Simulates a Hive partition
    ) default character set = 'utf8';

Incremental import of the December 20 data

Load the December 20 data into the raw data layer (4 rows):

    insert into `lalian`.`t_product`(goods_id, goods_status, createtime, modifytime) values
        ('001', 'To audit', '2019-12-18', '2019-12-20'),
        ('002', 'For sale', '2019-12-19', '2019-12-20'),
        ('003', 'On sale', '2019-12-20', '2019-12-20'),
        ('004', 'deleted', '2019-12-15', '2019-12-20');

Note: MySQL is used here to simulate the data warehouse, so the data is imported directly with insert into. In an enterprise, Hive might serve as the data warehouse, with Kettle, Sqoop, DataX, or similar tools synchronizing the data.

    -- Import from the raw data layer into the ods layer
    insert into lalian.ods_t_product
    select *, '20191220' from lalian.t_product;
    -- Synchronize from the ods layer to the dw layer
    insert into lalian.dw_t_product
    select * from lalian.ods_t_product where cdat = '20191220';

View the results in the dw layer:

    select * from lalian.dw_t_product where cdat = '20191220';

| goods_id | goods_status | createtime | modifytime | cdat |
|---|---|---|---|---|
| 001 | To audit | 2019-12-18 | 2019-12-20 | 20191220 |
| 002 | For sale | 2019-12-19 | 2019-12-20 | 20191220 |
| 003 | On sale | 2019-12-20 | 2019-12-20 | 20191220 |
| 004 | deleted | 2019-12-15 | 2019-12-20 | 20191220 |

Incremental import of the December 21 data

Load the December 21 data into the raw data layer (6 rows):

    UPDATE `lalian`.`t_product` SET goods_status = 'For sale', modifytime = '2019-12-21' WHERE goods_id = '001';
    INSERT INTO `lalian`.`t_product`(goods_id, goods_status, createtime, modifytime) VALUES
        ('005', 'To audit', '2019-12-21', '2019-12-21'),
        ('006', 'To audit', '2019-12-21', '2019-12-21');

Import the data into the ods and dw layers:

    -- Import from the raw data layer into the ods layer
    insert into lalian.ods_t_product
    select *, '20191221' from lalian.t_product;
    -- Synchronize from the ods layer to the dw layer
    insert into lalian.dw_t_product
    select * from lalian.ods_t_product where cdat = '20191221';

View the results in the dw layer:

    select * from lalian.dw_t_product where cdat = '20191221';

| goods_id | goods_status | createtime | modifytime | cdat |
|---|---|---|---|---|
| 001 | For sale | 2019-12-18 | 2019-12-21 | 20191221 |
| 002 | For sale | 2019-12-19 | 2019-12-20 | 20191221 |
| 003 | On sale | 2019-12-20 | 2019-12-20 | 20191221 |
| 004 | deleted | 2019-12-15 | 2019-12-20 | 20191221 |
| 005 | To audit | 2019-12-21 | 2019-12-21 | 20191221 |
| 006 | To audit | 2019-12-21 | 2019-12-21 | 20191221 |

Incremental import of the December 22 data

Load the December 22 data into the raw data layer (8 rows):

    UPDATE `lalian`.`t_product` SET goods_status = 'deleted', modifytime = '2019-12-22' WHERE goods_id = '003';
    UPDATE `lalian`.`t_product` SET goods_status = 'deleted', modifytime = '2019-12-22' WHERE goods_id = '006';
    INSERT INTO `lalian`.`t_product`(goods_id, goods_status, createtime, modifytime) VALUES
        ('007', 'To audit', '2019-12-22', '2019-12-22'),
        ('008', 'To audit', '2019-12-22', '2019-12-22');

Import the data into the ods and dw layers:

    -- Import from the raw data layer into the ods layer
    insert into lalian.ods_t_product
    select *, '20191222' from lalian.t_product;
    -- Synchronize from the ods layer to the dw layer
    insert into lalian.dw_t_product
    select * from lalian.ods_t_product where cdat = '20191222';

View the results in the dw layer:

    select * from lalian.dw_t_product where cdat = '20191222';

| goods_id | goods_status | createtime | modifytime | cdat |
|---|---|---|---|---|
| 001 | For sale | 2019-12-18 | 2019-12-21 | 20191222 |
| 002 | For sale | 2019-12-19 | 2019-12-20 | 20191222 |
| 003 | deleted | 2019-12-20 | 2019-12-22 | 20191222 |
| 004 | deleted | 2019-12-15 | 2019-12-20 | 20191222 |
| 005 | To audit | 2019-12-21 | 2019-12-21 | 20191222 |
| 006 | deleted | 2019-12-21 | 2019-12-22 | 20191222 |
| 007 | To audit | 2019-12-22 | 2019-12-22 | 20191222 |
| 008 | To audit | 2019-12-22 | 2019-12-22 | 20191222 |

View all the data in the dw layer:

    select * from lalian.dw_t_product;

| goods_id | goods_status | createtime | modifytime | cdat |
|---|---|---|---|---|
| 001 | To audit | 2019-12-18 | 2019-12-20 | 20191220 |
| 002 | For sale | 2019-12-19 | 2019-12-20 | 20191220 |
| 003 | On sale | 2019-12-20 | 2019-12-20 | 20191220 |
| 004 | deleted | 2019-12-15 | 2019-12-20 | 20191220 |
| 001 | For sale | 2019-12-18 | 2019-12-21 | 20191221 |
| 002 | For sale | 2019-12-19 | 2019-12-20 | 20191221 |
| 003 | On sale | 2019-12-20 | 2019-12-20 | 20191221 |
| 004 | deleted | 2019-12-15 | 2019-12-20 | 20191221 |
| 005 | To audit | 2019-12-21 | 2019-12-21 | 20191221 |
| 006 | To audit | 2019-12-21 | 2019-12-21 | 20191221 |
| 001 | For sale | 2019-12-18 | 2019-12-21 | 20191222 |
| 002 | For sale | 2019-12-19 | 2019-12-20 | 20191222 |
| 003 | deleted | 2019-12-20 | 2019-12-22 | 20191222 |
| 004 | deleted | 2019-12-15 | 2019-12-20 | 20191222 |
| 005 | To audit | 2019-12-21 | 2019-12-21 | 20191222 |
| 006 | deleted | 2019-12-21 | 2019-12-22 | 20191222 |
| 007 | To audit | 2019-12-22 | 2019-12-22 | 20191222 |
| 008 | To audit | 2019-12-22 | 2019-12-22 | 20191222 |

As the example above shows, keeping a full copy of the table every day stores a large amount of unchanged information in every copy. With a large data volume this is a huge waste of storage. The table can instead be designed as a zipper table, which both reflects the historical states of the data and saves as much storage as possible.
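The storage difference can be made concrete by counting rows for the three days of this example. The sketch below is illustrative only: `DAILY_STATE` restates the daily snapshots shown above, and the zipper count assumes one row per distinct version of a product, which includes the change of product 006 on December 22.

```python
# Status of every product on each day, taken from the daily snapshots above.
DAILY_STATE = {
    "2019-12-20": {"001": "To audit", "002": "For sale", "003": "On sale", "004": "deleted"},
    "2019-12-21": {"001": "For sale", "002": "For sale", "003": "On sale", "004": "deleted",
                   "005": "To audit", "006": "To audit"},
    "2019-12-22": {"001": "For sale", "002": "For sale", "003": "deleted", "004": "deleted",
                   "005": "To audit", "006": "deleted", "007": "To audit", "008": "To audit"},
}

# Full daily snapshots store every product every day.
snapshot_rows = sum(len(day) for day in DAILY_STATE.values())

# A zipper table stores a row only when a product is new or its status changed.
zipper_rows = 0
previous = {}
for day in sorted(DAILY_STATE):
    current = DAILY_STATE[day]
    zipper_rows += sum(
        1 for goods_id, status in current.items() if previous.get(goods_id) != status
    )
    previous = current

print(snapshot_rows, zipper_rows)  # 18 11
```

The gap widens with time: the snapshot count grows by the full table size every day, while the zipper count grows only by the number of new or changed rows.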

Option 2: use a zipper table to store historical snapshots

A zipper table does not store redundant data. A row is stored only when its data changes, which saves storage compared with a full synchronization every day.

Historical snapshots can still be queried.

Two extra columns (dw_start_date and dw_end_date) are added to record the life cycle of each data row.

Product zipper table data on December 20

| goods_id | goods_status | createtime | modifytime | dw_start_date | dw_end_date |
|---|---|---|---|---|---|
| 001 | To audit | 2019-12-18 | 2019-12-20 | 2019-12-20 | 9999-12-31 |
| 002 | For sale | 2019-12-19 | 2019-12-20 | 2019-12-20 | 9999-12-31 |
| 003 | On sale | 2019-12-20 | 2019-12-20 | 2019-12-20 | 9999-12-31 |
| 004 | deleted | 2019-12-15 | 2019-12-20 | 2019-12-20 | 9999-12-31 |

The December 20 data is entirely new data imported into the dw table.

dw_start_date is the start of a row's life cycle, that is, the date from which the row is valid (the effective date).

dw_end_date is the end of a row's life cycle, that is, the row is valid up to but not including this date (the expiration date).

A dw_end_date of 9999-12-31 means that the row is the latest data; it does not expire until 9999-12-31.

Product zipper table data on December 21

| goods_id | goods_status | createtime | modifytime | dw_start_date | dw_end_date |
|---|---|---|---|---|---|
| 001 | To audit | 2019-12-18 | 2019-12-20 | 2019-12-20 | 2019-12-21 |
| 002 | For sale | 2019-12-19 | 2019-12-20 | 2019-12-20 | 9999-12-31 |
| 003 | On sale | 2019-12-20 | 2019-12-20 | 2019-12-20 | 9999-12-31 |
| 004 | deleted | 2019-12-15 | 2019-12-20 | 2019-12-20 | 9999-12-31 |
| 001 (changed) | For sale | 2019-12-18 | 2019-12-21 | 2019-12-21 | 9999-12-31 |
| 005 (new) | To audit | 2019-12-21 | 2019-12-21 | 2019-12-21 | 9999-12-31 |
| 006 (new) | To audit | 2019-12-21 | 2019-12-21 | 2019-12-21 | 9999-12-31 |

The zipper table stores no redundant data: as long as a row does not change, it does not need to be synchronized.

The status of product 001 changed (To audit → For sale). The dw_end_date of the original row is changed from 9999-12-31 to 2019-12-21, meaning the To audit status was valid from 2019-12-20 (inclusive) to 2019-12-21 (exclusive).

The new status of product 001 is saved as a new row with dw_start_date 2019-12-21 and dw_end_date 9999-12-31.

The new products 005 and 006 get dw_start_date 2019-12-21 and dw_end_date 9999-12-31.

Product zipper table data on December 22

| goods_id | goods_status | createtime | modifytime | dw_start_date | dw_end_date |
|---|---|---|---|---|---|
| 001 | To audit | 2019-12-18 | 2019-12-20 | 2019-12-20 | 2019-12-21 |
| 002 | For sale | 2019-12-19 | 2019-12-20 | 2019-12-20 | 9999-12-31 |
| 003 | On sale | 2019-12-20 | 2019-12-20 | 2019-12-20 | 2019-12-22 |
| 004 | deleted | 2019-12-15 | 2019-12-20 | 2019-12-20 | 9999-12-31 |
| 001 | For sale | 2019-12-18 | 2019-12-21 | 2019-12-21 | 9999-12-31 |
| 005 | To audit | 2019-12-21 | 2019-12-21 | 2019-12-21 | 9999-12-31 |
| 006 | To audit | 2019-12-21 | 2019-12-21 | 2019-12-21 | 2019-12-22 |
| 003 (changed) | deleted | 2019-12-20 | 2019-12-22 | 2019-12-22 | 9999-12-31 |
| 006 (changed) | deleted | 2019-12-21 | 2019-12-22 | 2019-12-22 | 9999-12-31 |
| 007 (new) | To audit | 2019-12-22 | 2019-12-22 | 2019-12-22 | 9999-12-31 |
| 008 (new) | To audit | 2019-12-22 | 2019-12-22 | 2019-12-22 | 9999-12-31 |

The zipper table stores no redundant data: as long as a row does not change, it does not need to be synchronized.

The statuses of products 003 (On sale → deleted) and 006 (To audit → deleted) changed. The dw_end_date of each original row is changed from 9999-12-31 to 2019-12-22. For product 003, for example, this means the On sale status was valid from 2019-12-20 (inclusive) to 2019-12-22 (exclusive).

The new statuses of products 003 and 006 are saved as new rows with dw_start_date 2019-12-22 and dw_end_date 9999-12-31.

The new products 007 and 008 get dw_start_date 2019-12-22 and dw_end_date 9999-12-31.
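Because dw_end_date is exclusive in this product example, a snapshot query needs `dw_end_date > day` rather than `>=`. The sketch below checks this against a few of the rows described above, using an in-memory SQLite table as a stand-in for MySQL; the table name `dw_zipper` and the helper `as_of` are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE dw_zipper (goods_id TEXT, goods_status TEXT, "
    "dw_start_date TEXT, dw_end_date TEXT)"
)
conn.executemany(
    "INSERT INTO dw_zipper VALUES (?, ?, ?, ?)",
    [
        ("001", "To audit", "2019-12-20", "2019-12-21"),
        ("001", "For sale", "2019-12-21", "9999-12-31"),
        ("002", "For sale", "2019-12-20", "9999-12-31"),
        ("003", "On sale", "2019-12-20", "2019-12-22"),
        ("003", "deleted", "2019-12-22", "9999-12-31"),
    ],
)


def as_of(day):
    """Snapshot for `day`: start date inclusive, end date exclusive."""
    return conn.execute(
        "SELECT goods_id, goods_status FROM dw_zipper "
        "WHERE dw_start_date <= ? AND dw_end_date > ? ORDER BY goods_id",
        (day, day),
    ).fetchall()


for day in ("2019-12-20", "2019-12-21", "2019-12-22"):
    print(day, as_of(day))
```

Using `>=` here would return both versions of product 001 for 2019-12-21, since the old row ends and the new row starts on that date.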

MySQL data warehouse zipper table implementation

Procedure:

Add two extra columns to the original dw table.

Synchronize only the data modified on the current day to the ods layer.

Zipper table algorithm

The zipper table data is: the latest data of the current day UNION ALL the historical data.

Code implementation

In MySQL, create the lalian database and the product table to serve as the raw data layer:

    -- Create the database
    create database if not exists lalian;
    -- Create the product table
    create table if not exists `lalian`.`t_product2`(
        goods_id varchar(50),      -- Product id
        goods_status varchar(50),  -- Product status
        createtime varchar(50),    -- Product creation time
        modifytime varchar(50)     -- Product modification time
    ) default character set = 'utf8';

In MySQL, create the ods and dw layer tables to simulate the data warehouse:

    -- ods layer product table
    create table if not exists `lalian`.`ods_t_product2`(
        goods_id varchar(50),      -- Product id
        goods_status varchar(50),  -- Product status
        createtime varchar(50),    -- Product creation time
        modifytime varchar(50),    -- Product modification time
        cdat varchar(10)           -- Simulates a Hive partition
    ) default character set = 'utf8';
    -- dw layer product table
    create table if not exists `lalian`.`dw_t_product2`(
        goods_id varchar(50),      -- Product id
        goods_status varchar(50),  -- Product status
        createtime varchar(50),    -- Product creation time
        modifytime varchar(50),    -- Product modification time
        dw_start_date varchar(12), -- Effective date
        dw_end_date varchar(12),   -- Expiration date
        cdat varchar(10)           -- Simulates a Hive partition
    ) default character set = 'utf8';

Full import of the December 20, 2019 data

Load the December 20 data into the raw data layer (4 rows):

    insert into `lalian`.`t_product2`(goods_id, goods_status, createtime, modifytime) values
        ('001', 'To audit', '2019-12-18', '2019-12-20'),
        ('002', 'For sale', '2019-12-19', '2019-12-20'),
        ('003', 'On sale', '2019-12-20', '2019-12-20'),
        ('004', 'deleted', '2019-12-15', '2019-12-20');

Import the data into the ods layer of the data warehouse:

    insert into lalian.ods_t_product2
    select *, '20191220' from lalian.t_product2 where modifytime >= '2019-12-20';

Import the data from the ods layer into the dw layer:

    insert into lalian.dw_t_product2
    select goods_id, goods_status, createtime, modifytime, modifytime, '9999-12-31', cdat
    from lalian.ods_t_product2
    where cdat = '20191220';

Incremental import of the December 21, 2019 data

Load the December 21 data into the raw data layer (6 rows):

    UPDATE `lalian`.`t_product2` SET goods_status = 'For sale', modifytime = '2019-12-21' WHERE goods_id = '001';
    INSERT INTO `lalian`.`t_product2`(goods_id, goods_status, createtime, modifytime) VALUES
        ('005', 'To audit', '2019-12-21', '2019-12-21'),
        ('006', 'To audit', '2019-12-21', '2019-12-21');

Synchronize the raw data layer to the ods layer:

    insert into lalian.ods_t_product2
    select *, '20191221' from lalian.t_product2 where modifytime >= '2019-12-21';

Write the query that merges the ods layer into the dw layer and recalculates dw_end_date:

    select t1.goods_id, t1.goods_status, t1.createtime, t1.modifytime, t1.dw_start_date,
           case when (t2.goods_id is not null and t1.dw_end_date > '2019-12-21')
                then '2019-12-21'
                else t1.dw_end_date
           end as dw_end_date,
           t1.cdat
    from lalian.dw_t_product2 t1
    left join (select * from lalian.ods_t_product2 where cdat = '20191221') t2
           on t1.goods_id = t2.goods_id
    union
    select goods_id, goods_status, createtime, modifytime, modifytime, '9999-12-31', cdat
    from lalian.ods_t_product2
    where cdat = '20191221';

The results are as follows:

| goods_id | goods_status | createtime | modifytime | dw_start_date | dw_end_date | cdat |
|---|---|---|---|---|---|---|
| 001 | To audit | 2019-12-18 | 2019-12-20 | 2019-12-20 | 2019-12-21 | 20191220 |
| 002 | For sale | 2019-12-19 | 2019-12-20 | 2019-12-20 | 9999-12-31 | 20191220 |
| 003 | On sale | 2019-12-20 | 2019-12-20 | 2019-12-20 | 9999-12-31 | 20191220 |
| 004 | deleted | 2019-12-15 | 2019-12-20 | 2019-12-20 | 9999-12-31 | 20191220 |
| 001 | For sale | 2019-12-18 | 2019-12-21 | 2019-12-21 | 9999-12-31 | 20191221 |
| 005 | To audit | 2019-12-21 | 2019-12-21 | 2019-12-21 | 9999-12-31 | 20191221 |
| 006 | To audit | 2019-12-21 | 2019-12-21 | 2019-12-21 | 9999-12-31 | 20191221 |
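The merge can be reproduced with Python's sqlite3 module to confirm the seven rows shown above. This is a sketch under assumptions, not the article's code: SQLite stands in for MySQL, the tables are named `ods` and `dw` for brevity, and the helper `merge_day`, which rewrites the dw table with the query result, is hypothetical (the article shows only the SELECT).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE ods (goods_id TEXT, goods_status TEXT, createtime TEXT,
                  modifytime TEXT, cdat TEXT);
CREATE TABLE dw  (goods_id TEXT, goods_status TEXT, createtime TEXT,
                  modifytime TEXT, dw_start_date TEXT, dw_end_date TEXT, cdat TEXT);
""")

# Same shape as the article's query, with the date and partition as parameters.
MERGE_SQL = """
SELECT t1.goods_id, t1.goods_status, t1.createtime, t1.modifytime, t1.dw_start_date,
       CASE WHEN t2.goods_id IS NOT NULL AND t1.dw_end_date > :day
            THEN :day ELSE t1.dw_end_date END AS dw_end_date,
       t1.cdat
FROM dw t1
LEFT JOIN (SELECT * FROM ods WHERE cdat = :cdat) t2 ON t1.goods_id = t2.goods_id
UNION
SELECT goods_id, goods_status, createtime, modifytime, modifytime, '9999-12-31', cdat
FROM ods WHERE cdat = :cdat
"""


def merge_day(day, changed_rows):
    """Load one day's changed rows into ods, then rebuild dw from the merge."""
    cdat = day.replace("-", "")
    conn.executemany(
        "INSERT INTO ods VALUES (?, ?, ?, ?, ?)",
        [(g, s, c, m, cdat) for g, s, c, m in changed_rows],
    )
    merged = conn.execute(MERGE_SQL, {"day": day, "cdat": cdat}).fetchall()
    conn.execute("DELETE FROM dw")
    conn.executemany("INSERT INTO dw VALUES (?, ?, ?, ?, ?, ?, ?)", merged)
    conn.commit()


merge_day("2019-12-20", [
    ("001", "To audit", "2019-12-18", "2019-12-20"),
    ("002", "For sale", "2019-12-19", "2019-12-20"),
    ("003", "On sale", "2019-12-20", "2019-12-20"),
    ("004", "deleted", "2019-12-15", "2019-12-20"),
])
merge_day("2019-12-21", [
    ("001", "For sale", "2019-12-18", "2019-12-21"),
    ("005", "To audit", "2019-12-21", "2019-12-21"),
    ("006", "To audit", "2019-12-21", "2019-12-21"),
])
result = conn.execute(
    "SELECT goods_id, goods_status, dw_start_date, dw_end_date "
    "FROM dw ORDER BY dw_start_date, goods_id"
).fetchall()
for row in result:
    print(row)
```

The first call works on an empty dw table, so the same merge covers the initial full load as well as the daily increments.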

A zipper history table both reflects the historical states of the data and saves as much storage as possible. When building a zipper table, decide on its granularity first. For example, a zipper table may keep only one status per day: if a record changes status 3 times in one day, only the last status is kept. A day-granularity table like this solves most problems.
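Day granularity can be sketched as a small reduction step that runs before the zipper merge. The event format (goods id, timestamp string, status) and the function name `last_state_per_day` are assumptions made for this illustration.

```python
def last_state_per_day(events):
    """Keep only the final status of each product on each day.

    `events` is an iterable of (goods_id, 'YYYY-MM-DD HH:MM:SS', status).
    """
    latest = {}
    # Process events in time order so later changes overwrite earlier ones.
    for goods_id, changed_at, status in sorted(events, key=lambda e: e[1]):
        day = changed_at[:10]
        latest[(goods_id, day)] = status
    return latest


events = [
    ("001", "2019-12-21 09:00:00", "To audit"),
    ("001", "2019-12-21 18:45:00", "On sale"),
    ("001", "2019-12-21 12:30:00", "For sale"),
    ("002", "2019-12-21 10:00:00", "To audit"),
]
daily = last_state_per_day(events)
print(daily)
```

Product 001 changes three times on the same day, but only its last status reaches the zipper table; the intermediate statuses are not recoverable at this granularity.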


边栏推荐

- mysql不是内部命令如何解决

- Use js to switch between clickable and non clickable page buttons

- Pat class B 1031 checking ID card (15 points)

- 数学是用于解决问题的工具

- How to start wireless network service after win10 system installation

- Easyexcel introduction-01

- 什么是Eureka?Eureka能干什么?Eureka怎么用?

- Google Earth engine (GEE) - US native lithology data set

- Simulate long press event of mobile device

- Minesweeping - C language - Advanced (recursive automatic expansion + chess mark)

猜你喜欢

Postman发布API文档

Wechat applet_ 6. Network data request

Wechat applet_ 4. Wxss template style

mysql如何关闭事务

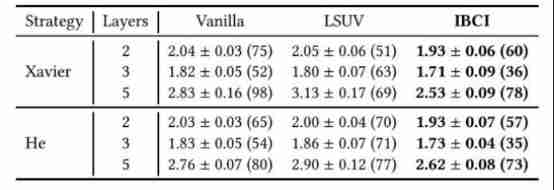

dried food! Neuron competitive initialization strategy based on information bottleneck theory

MATLAB 三维图(非常规)

mysql分页查询如何优化

![[OSG] OSG development (03) -- build the osgqt Library of MSVC version](/img/54/602cc5e92d8bfafbef346d6fbb96e3.png)

[OSG] OSG development (03) -- build the osgqt Library of MSVC version

![[QT] article summarizes the MSVC compilation suite in qtcreator](/img/c6/640ea7f06aa9582d125b3c3c7c3ee9.png)

[QT] article summarizes the MSVC compilation suite in qtcreator

Necessary free artifact for remote assistance todesk remote control software (defense, remote, debugging, office) necessary remote tools

随机推荐

Google Earth engine (GEE) - Global farmland organic soil carbon and nitrogen emissions (1992-2018) data set

Wechat applet_ 4. Wxss template style

源代码加密产品的分析

MATLAB快速入门

Digital twin smart server: information security monitoring platform

怎么看小程序是谁开发的(查看小程序开发公司方法)

app安全滲透測試詳細方法流程

Wechat applet_ 5. Page configuration

Research Report on market supply and demand and strategy of oil-free scroll compressor industry in China

On June 13, 2022, interview questions were asked

mysql中执行存储过程的语句怎么写

[OSG] OSG development (02) - build osgqt Library Based on MinGW compilation

20 statistics and their sampling distribution -- Sampling Distribution of sample proportion

19 statistics and its sampling distribution -- distribution of sample mean and central limit theorem

部署Zabbix企业级分布式监控

Best practice | how to use Tencent cloud micro build to develop enterprise portal applications from 0 to 1

Detailed method and process of APP security penetration test

Hisilicon series mass production hardware commissioning record

Wechat applet_ 5. Global configuration

Root cause analysis | inventory of nine scenarios with abnormal status of kubernetes pod