
Detecting 68 facial landmarks with Dlib and training an SVM-based smile recognition model with sklearn

2022-06-23 22:32:00 【AnieaLanie】

1. Task

1.1 Training goal

Use Dlib to extract facial features and train a binary classifier (smile, nosmile) that recognizes smiling facial expressions.

1.2 Dataset

The dataset contains 4000 pictures in two classes: smile and no smile. The photos are in jpg format and are named file + number; the code below uses a four-digit, zero-padded number, for example file0001.jpg.
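As a small illustration of the naming scheme, the following sketch builds the path of an image from its 0-based index. The helper name `image_path` is hypothetical, and the default folder is taken from the paths used later in this article.

```python
def image_path(index, folder="./train_dir/face/"):
    """Return the path of the image with 0-based index `index`.

    Files are numbered from 1, zero-padded to four digits.
    """
    return "{}file{:04d}.jpg".format(folder, index + 1)


print(image_path(0))     # ./train_dir/face/file0001.jpg
print(image_path(3999))  # ./train_dir/face/file4000.jpg
```

Keeping the index 0-based matches the extraction loop below, which writes the same index into the feature file so that labels can be looked up by row later.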

2. Code

2.1 Extract the landmark data for the 4000 face images

import sys
import os
import dlib

Step 1: read the image files. Define the image directory and the path of the pretrained landmark model shape_predictor_68_face_landmarks.dat.

predictor_path = "./train_dir/shape_predictor_68_face_landmarks.dat"
faces_folder_path = "./train_dir/face/"

Step 2: load the face detector and the landmark predictor.

detector = dlib.get_frontal_face_detector()
predictor = dlib.shape_predictor(predictor_path)

Step 3: detect the 68 landmarks of each face and store them in a file:

fi=open("./train_dir/face_feature3.txt","a")

for i in range(4000):
    f=faces_folder_path+'file'+'{:0>4d}'.format(i+1)+".jpg"
    img = dlib.load_rgb_image(f)
    # Ask the detector to find the bounding box of each face.
    # The 1 in the second argument means the image is upsampled once.
    # This makes everything bigger and lets us detect more faces.
    dets = detector(img, 1)
    #print("Number of faces detected: {}".format(len(dets)))
    won=False
    for k, d in enumerate(dets):
        # Get the landmarks/parts for the face in box d.
        shape = predictor(img, d)
        # Normalize: coordinates relative to the face box, scaled by its size
        rect_w=shape.rect.width()
        rect_h=shape.rect.height()
        top=shape.rect.top()
        left=shape.rect.left()
        # Write the image index first so the label can be looked up later
        fi.write('{},'.format(i))
        won=True
        # Use p here: reusing i would overwrite the image index
        for p in range(shape.num_parts):
            fi.write("{},{},".format((shape.part(p).x-left)/rect_w,(shape.part(p).y-top)/rect_h))
    # Only images with at least one detected face get a line
    if(won):
        fi.write("\n")
fi.close()

Complete code:

import sys
import os
import dlib

predictor_path = "./train_dir/shape_predictor_68_face_landmarks.dat"
faces_folder_path = "./train_dir/face/"
detector = dlib.get_frontal_face_detector()
predictor = dlib.shape_predictor(predictor_path)
fi=open("./train_dir/face_feature3.txt","a")
for i in range(4000):
    f=faces_folder_path+'file'+'{:0>4d}'.format(i+1)+".jpg"
    img = dlib.load_rgb_image(f)
    # Ask the detector to find the bounding box of each face.
    # The 1 in the second argument means the image is upsampled once.
    # This makes everything bigger and lets us detect more faces.
    dets = detector(img, 1)
    #print("Number of faces detected: {}".format(len(dets)))
    won=False
    for k, d in enumerate(dets):
        # Get the landmarks/parts for the face in box d.
        shape = predictor(img, d)
        rect_w=shape.rect.width()
        rect_h=shape.rect.height()
        top=shape.rect.top()
        left=shape.rect.left()
        fi.write('{},'.format(i))
        won=True
        # Use p here: reusing i would overwrite the image index
        for p in range(shape.num_parts):
            fi.write("{},{},".format((shape.part(p).x-left)/rect_w,(shape.part(p).y-top)/rect_h))
    if(won):
        fi.write("\n")
fi.close()

2.2 Process the face data: add the smile / nosmile labels provided in advance to the face dataset

In the label column, 1 means a smiling face and 0 means no smile.
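To make the label convention concrete, here is a minimal sketch in plain Python of the lookup that the processing code performs: read the first field of each label line, pick the entries for the images in which a face was found, and map 1/0 to +1/-1. The sample text and both helper names are made up for illustration; the article does not say what the other three columns of labels.txt contain.

```python
# Hypothetical excerpt of labels.txt: one line per image, space-separated,
# the first field is the smile flag (1 = smile, 0 = no smile).
labels_text = "1 0.1 0.2 0.3\n0 0.0 0.1 0.2\n1 0.3 0.1 0.0\n"


def load_smile_flags(text):
    """Return the first field of every non-empty line as an int."""
    return [int(line.split(" ")[0]) for line in text.splitlines() if line.strip()]


def labels_for(indices, flags):
    """Look up the flag of each image index and map 1 -> +1, 0 -> -1."""
    return [1 if flags[k] == 1 else -1 for k in indices]


flags = load_smile_flags(labels_text)
print(labels_for([0, 2, 1], flags))  # [1, 1, -1]
```

Looking labels up by image index matters because images without a detected face produce no row in the feature file, so the feature rows and the label lines are not aligned one to one.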

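Complete code for processing the data:

import pandas as pd
import numpy as np

def dataprocess():
    # Landmark table in CSV form with a header row (Column1, Column2, ...):
    # Column1 is the image index, followed by the 136 landmark features
    iris = pd.read_csv('./train_dir/face_feature4.csv')
    # labels.txt is space-separated without a header; the first column is the smile label
    result = pd.read_csv('./labels.txt',header=None,sep=' ')
    result.columns=['smile','2','3','4']
    smile=[]
    # Look up the label of each image by its index
    for k in iris['Column1']:
        smile.append(result['smile'][k])
    iris['Column138']=smile
    detectable=iris['Column1']
    iris.drop(columns=['Column1'],inplace=True)
    # Convert the labels to binary +1/-1 (assign the result back instead of
    # calling replace in place on a selected column)
    iris['Column138'] = iris['Column138'].replace(to_replace=[1,0],value=[+1,-1])
    return iris

2.3 Train and test on the existing data with sklearn's SVM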

from dataprocess import dataprocess
import numpy as np
import matplotlib.pyplot as plt
from sklearn.svm import SVC
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

data = dataprocess()
data = data.to_numpy()
#print(data)
# The first 136 columns are the features x, the last column is the label y
x, y = np.split(data, (136,), axis=1)
x = x[:, :]
x_train, x_test, y_train, y_test = train_test_split(x, y, random_state=1, train_size=0.6)
# Train the SVM classifier
clf = SVC(C=0.8, kernel='rbf', gamma=1, decision_function_shape='ovr')
clf.fit(x_train, y_train.ravel())
# Compute the accuracy of the SVC classifier
print(clf.score(x_train, y_train)) # accuracy on the training set
y_hat = clf.predict(x_train)
#print(y_hat)
print(clf.score(x_test, y_test)) # accuracy on the test set
y_hat = clf.predict(x_test)

The two printed values are the accuracy of the final model on the training set and on the test set, in that order.
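To make the feature/label split concrete, here is a minimal sketch that runs the same steps on synthetic data. The random features, the rule used to generate labels, and the name `clf_demo` are assumptions for illustration only; the printed accuracies say nothing about the real dataset.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

rng = np.random.default_rng(0)
n_samples, n_features = 200, 136

# Synthetic stand-in for the landmark table: 136 features in [0, 1)
# plus one label column holding +1 or -1.
features = rng.random((n_samples, n_features))
labels = np.where(features[:, 0] > 0.5, 1.0, -1.0)
data = np.column_stack([features, labels])

# Same split as in the training script: columns 0..135 are x, the rest is y.
x, y = np.split(data, (136,), axis=1)
x_train, x_test, y_train, y_test = train_test_split(
    x, y, random_state=1, train_size=0.6)

clf_demo = SVC(C=0.8, kernel='rbf', gamma=1)
clf_demo.fit(x_train, y_train.ravel())
train_acc = clf_demo.score(x_train, y_train.ravel())
test_acc = clf_demo.score(x_test, y_test.ravel())

print(x.shape, y.shape, x_train.shape, x_test.shape)
print(train_acc, test_acc)
```

A large gap between the two values would suggest overfitting, in which case C and gamma are the usual parameters to revisit.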

2.4 Save and load the model

# Save the model
import pickle
# clf is the SVC classifier trained above
pkl_filename = "smile_detect.model"
with open(pkl_filename, 'wb') as file:
    pickle.dump(clf, file)

# Load the model
with open(pkl_filename, 'rb') as file:
    clf = pickle.load(file)

2.5 Detect smiling faces in real time with the camera

For real-time smile detection, the key step is converting the cv2 image into an image array that the dlib detector can process:

# Detector
detector = dlib.get_frontal_face_detector()
...
# Grab a single frame of video (a cv2 image)
ret, frame = video_capture.read()
# Convert to a PIL Image
picture = Image.fromarray(cv2.cvtColor(frame,cv2.COLOR_BGR2RGB))
# Convert to a numpy array
img=np.array(picture)
# Run the detector
dets = detector(img, 1)
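The conversion itself only reorders the color channels, so it can also be sketched with NumPy alone. The helper name `bgr_to_rgb` is hypothetical, and the explicit copy is a precaution: a reversed slice is a non-contiguous view, which some image libraries reject.

```python
import numpy as np


def bgr_to_rgb(frame):
    """Reverse the channel axis (BGR -> RGB) and return a contiguous copy."""
    return np.ascontiguousarray(frame[:, :, ::-1])


# A 1x2 dummy "frame": one pure-blue and one pure-red pixel, in BGR order.
frame = np.array([[[255, 0, 0], [0, 0, 255]]], dtype=np.uint8)
rgb = bgr_to_rgb(frame)
print(rgb[0, 0].tolist(), rgb[0, 1].tolist())  # [0, 0, 255] [255, 0, 0]
```

Since `cv2.cvtColor` already returns a NumPy array, the round trip through a PIL Image is optional; it is kept in the article's code as written.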

Then use dlib to detect the 68 landmarks (d is one of the detected face boxes; left, top, rect_w and rect_h come from shape.rect, as in section 2.1):

shape = predictor(img, d)
# The 68 landmarks
for i in range(shape.num_parts):
    print("{},{},".format((shape.part(i).x-left)/rect_w,(shape.part(i).y-top)/rect_h))

Complete code:

# Video detection
import cv2
import numpy as np
from PIL import Image, ImageDraw
import os
import time
import dlib
import face_recognition  # used below to locate the faces for drawing

# clf is the classifier trained (or loaded from smile_detect.model) above
video_capture = cv2.VideoCapture(0)
j=0
detector = dlib.get_frontal_face_detector()
predictor_path = "./train_dir/shape_predictor_68_face_landmarks.dat"
predictor = dlib.shape_predictor(predictor_path)
faces=[]
issmile=""
# Stop after 100 frames in which a face was found
while j<100:
    # Grab a single frame of video
    ret, frame = video_capture.read()
    if not ret:
        break
    picture = Image.fromarray(cv2.cvtColor(frame,cv2.COLOR_BGR2RGB))
    img=np.array(picture)
    dets = detector(img, 1)
    for k, d in enumerate(dets):
        # Get the landmarks/parts for the face in box d.
        shape = predictor(img, d)
        rect_w=shape.rect.width()
        rect_h=shape.rect.height()
        top=shape.rect.top()
        left=shape.rect.left()
        # Build one 136-value feature vector per face
        face=[]
        for i in range(shape.num_parts):
            #print("{},{},".format((shape.part(i).x-left)/rect_w,(shape.part(i).y-top)/rect_h))
            face.append((shape.part(i).x-left)/rect_w)
            face.append((shape.part(i).y-top)/rect_h)
        faces.append(face)
    #img = dlib.convert_image(picture)
    # Predict only when at least one face was found
    if(len(faces)!=0):
        j+=1
        smile=clf.predict(faces)
        # The label shown is the prediction for the first detected face
        if(smile[0]==1):
            print("smile")
            issmile="smile"
        else:
            print("nosmile")
            issmile="nosmile"
        faces.clear()
    #cv2.imshow('face',cv2.cvtColor(frame,cv2.COLOR_BGR2RGB))
    # face_recognition expects RGB; img is already the RGB version of the frame
    rgb_frame = img
    # Find all the faces and face encodings in the video frame
    face_locations = face_recognition.face_locations(rgb_frame)
    face_encodings = face_recognition.face_encodings(rgb_frame, face_locations)
    # Loop over each face in this frame
    for (top, right, bottom, left), face_encoding in zip(face_locations, face_encodings):
        # Draw a box around the face
        cv2.rectangle(frame, (left, top), (right, bottom), (0, 0, 255), 2)
        # Draw a label with the result below the face
        cv2.rectangle(frame, (left, bottom - 35), (right, bottom), (0, 0, 255), cv2.FILLED)
        font = cv2.FONT_HERSHEY_DUPLEX
        cv2.putText(frame, issmile, (left + 6, bottom - 6), font, 1.0, (255, 255, 255), 1)
    # Display the resulting image
    cv2.imshow('Smile detection', frame)
    # Press "q" on the keyboard to quit
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break
# Release the handle to the webcam
video_capture.release()
cv2.destroyAllWindows()

3. Complete code

Extracting the 68 facial landmarks, feature_process.py:

The landmark model file shape_predictor_68_face_landmarks.dat can be downloaded from: http://dlib.net/files/shape_predictor_68_face_landmarks.dat.bz2.
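The download is bz2-compressed, while the scripts load the uncompressed .dat file. A minimal sketch of unpacking it with Python's standard library follows; the helper name `decompress_bz2` is hypothetical, and the paths in the example call are assumptions based on the directory layout used in this article.

```python
import bz2
import shutil


def decompress_bz2(src_path, dst_path):
    """Decompress the .bz2 file at src_path into dst_path, streaming in chunks."""
    with bz2.open(src_path, "rb") as src, open(dst_path, "wb") as dst:
        shutil.copyfileobj(src, dst)


# Example call (assumed paths):
# decompress_bz2("./train_dir/shape_predictor_68_face_landmarks.dat.bz2",
#                "./train_dir/shape_predictor_68_face_landmarks.dat")
```

Streaming with `shutil.copyfileobj` avoids loading the whole model file into memory at once.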

Put the 4000 photos in ./train_dir/face/.

#!/usr/bin/python
# The contents of this file are in the public domain. See LICENSE_FOR_EXAMPLE_PROGRAMS.txt
#
# This example program shows how to find frontal human faces in an image and
# estimate their pose. The pose takes the form of 68 landmarks. These are
# points on the face such as the corners of the mouth, along the eyebrows, on
# the eyes, and so forth.
#
# The face detector we use is made using the classic Histogram of Oriented
# Gradients (HOG) feature combined with a linear classifier, an image pyramid,
# and sliding window detection scheme. The pose estimator was created by
# using dlib's implementation of the paper:
#   One Millisecond Face Alignment with an Ensemble of Regression Trees by
#   Vahid Kazemi and Josephine Sullivan, CVPR 2014
# and was trained on the iBUG 300-W face landmark dataset (see
# https://ibug.doc.ic.ac.uk/resources/facial-point-annotations/):
#   C. Sagonas, E. Antonakos, G. Tzimiropoulos, S. Zafeiriou, M. Pantic.
#   300 faces In-the-wild challenge: Database and results.
#   Image and Vision Computing (IMAVIS), Special Issue on Facial Landmark Localisation "In-The-Wild". 2016.
# You can get the trained model file from:
# http://dlib.net/files/shape_predictor_68_face_landmarks.dat.bz2.
# Note that the license for the iBUG 300-W dataset excludes commercial use.
# So you should contact Imperial College London to find out if it's OK for
# you to use this model file in a commercial product.
#
# Also, note that you can train your own models using dlib's machine learning
# tools.
#
# COMPILING/INSTALLING THE DLIB PYTHON INTERFACE
# You can install dlib using the command:
#   pip install dlib
#
# Alternatively, if you want to compile dlib yourself then go into the dlib
# root folder and run:
#   python setup.py install
#
# Compiling dlib should work on any operating system so long as you have
# CMake installed. On Ubuntu, this can be done easily by running the
# command:
#   sudo apt-get install cmake
#
# Also note that this example requires Numpy which can be installed
# via the command:
#   pip install numpy

import sys
import os
import dlib
import glob

predictor_path = "./train_dir/shape_predictor_68_face_landmarks.dat"
faces_folder_path = "./train_dir/face/"
detector = dlib.get_frontal_face_detector()
predictor = dlib.shape_predictor(predictor_path)
#win = dlib.image_window()
fi=open("./train_dir/face_feature3.txt","a")
#i=0
#for f in glob.glob(os.path.join(faces_folder_path, "*.jpg")):
for i in range(4000):
    f=faces_folder_path+'file'+'{:0>4d}'.format(i+1)+".jpg"
    img = dlib.load_rgb_image(f)
    # Ask the detector to find the bounding box of each face.
    # The 1 in the second argument means the image is upsampled once.
    # This makes everything bigger and lets us detect more faces.
    dets = detector(img, 1)
    won=False
    for k, d in enumerate(dets):
        # Get the landmarks/parts for the face in box d.
        shape = predictor(img, d)
        # Feature normalization
        rect_w=shape.rect.width()
        rect_h=shape.rect.height()
        top=shape.rect.top()
        left=shape.rect.left()
        fi.write('{},'.format(i))
        won=True
        # Use p here: reusing i would overwrite the image index
        for p in range(shape.num_parts):
            fi.write("{},{},".format((shape.part(p).x-left)/rect_w,(shape.part(p).y-top)/rect_h))
    if(won):
        fi.write("\n")
fi.close()

Data processing, dataprocess.py:

import pandas as pd
import numpy as np

def dataprocess():
    # Landmark table in CSV form with a header row (Column1, Column2, ...):
    # Column1 is the image index, followed by the 136 landmark features
    iris = pd.read_csv('./train_dir/face_feature4.csv')
    # labels.txt is space-separated without a header; the first column is the smile label
    result = pd.read_csv('./labels.txt',header=None,sep=' ')
    result.columns=['smile','2','3','4']
    smile=[]
    # Look up the label of each image by its index
    for k in iris['Column1']:
        smile.append(result['smile'][k])
    iris['Column138']=smile
    detectable=iris['Column1']
    iris.drop(columns=['Column1'],inplace=True)
    # Convert the labels to binary +1/-1 (assign the result back instead of
    # calling replace in place on a selected column)
    iris['Column138'] = iris['Column138'].replace(to_replace=[1,0],value=[+1,-1])
    return iris

# Use your own face as test data
def getmyself():
    myface = pd.read_csv('./train_dir/myfeatures.csv')
    myface.drop(columns=['Column1'],inplace=True)
    myface.drop(columns=['Column138'],inplace=True)
    return myface

Smile model training and real-time smile detection:

from dataprocess import dataprocess
from dataprocess import getmyself
import numpy as np
import matplotlib.pyplot as plt
import face_recognition
from sklearn.svm import SVC
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Get the data of the 4000 faces
data = dataprocess()
data = data.to_numpy()
# The first 136 columns are the features x, the last column is the label y
x, y = np.split(data, (136,), axis=1)
x = x[:, :]
x_train, x_test, y_train, y_test = train_test_split(x, y, random_state=1, train_size=0.6)
# Train the SVM classifier
clf = SVC(C=0.8, kernel='rbf', gamma=1, decision_function_shape='ovr')
clf.fit(x_train, y_train.ravel())
# Compute the accuracy of the SVC classifier
print(clf.score(x_train, y_train)) # accuracy on the training set
y_hat = clf.predict(x_train)
print(clf.score(x_test, y_test)) # accuracy on the test set
y_hat = clf.predict(x_test)

# Run detection on your own face data
#myself=getmyself()
#myself=myself.to_numpy()
#print(myself)
#AmISmile=clf.predict(myself)
#print(AmISmile)

# Video detection

import cv2

import numpy as np

from PIL import Image, ImageDraw

import os

import time

import dlib

video_capture = cv2.VideoCapture(0)

j=0

detector = dlib.get_frontal_face_detector()

predictor_path = "./train_dir/shape_predictor_68_face_landmarks.dat"

predictor = dlib.shape_predictor(predictor_path)

faces=[]

while j<100:

# Grab a frame of video

ret, frame = video_capture.read()

picture = Image.fromarray(cv2.cvtColor(frame,cv2.COLOR_BGR2RGB))

img=np.array(picture)

dets = detector(img, 1)

face=[]

for k, d in enumerate(dets):

# Landmarks obtained / For face in frame d Part of .

shape = predictor(img, d)

rect_w=shape.rect.width()

rect_h=shape.rect.height()

top=shape.rect.top()

left=shape.rect.left()

for i in range(shape.num_parts):

face=np.append(face,(shape.part(i).x-left)/rect_w)

face=np.append(face,(shape.part(i).y-top)/rect_h)

print(type(face))

print(len(face))

if(len(face)!=0):

faces.append(face)

print(faces)

j+=1

smile=clf.predict(faces)

issmile=""

if(smile[0]==1):

print("smile")

issmile="smile"

else:

print("nosmile")

issmile="nosmile"

faces.clear()

#cv2.imshow('face',cv2.cvtColor(frame,cv2.COLOR_BGR2RGB))

# Take the image from BGR Color (OpenCV The use of ) Convert to RGB Color (face_recognition The use of )

rgb_frame = frame[:, :, ::-1]

# Find all faces and face codes in the video frame

face_locations = face_recognition.face_locations(rgb_frame)

face_encodings = face_recognition.face_encodings(rgb_frame, face_locations)

# Traverse each face in this frame of video

for (top, right, bottom, left), face_encoding in zip(face_locations, face_encodings):

# Draw a frame around your face

cv2.rectangle(frame, (left, top), (right, bottom), (0, 0, 255), 2)

# Draw a label with a name under your face

cv2.rectangle(frame, (left, bottom - 35), (right, bottom), (0, 0, 255), cv2.FILLED)

font = cv2.FONT_HERSHEY_DUPLEX

cv2.putText(frame, issmile, (left + 6, bottom - 6), font, 1.0, (255, 255, 255), 1)

# Display the result image

cv2.imshow(' Smiling face detection ', frame)

# Press on the keyboard “q” sign out !

if cv2.waitKey(1) & 0xFF == ord('q'):

break

# Release the handle of the webcam

video_capture.release()

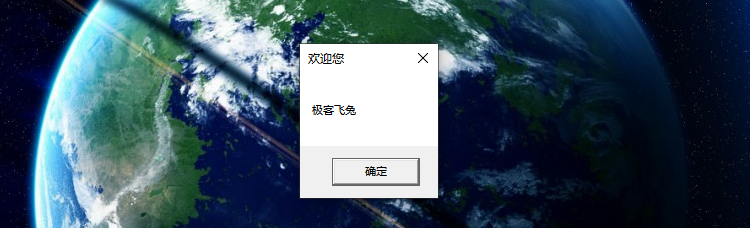

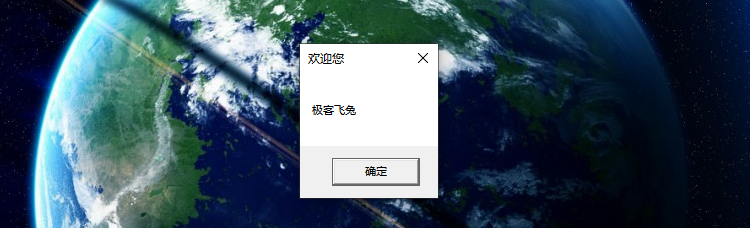

cv2.destroyAllWindows()Detection effect :
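Both the extraction script and the real-time loop rely on the same normalization: each landmark is shifted by the top-left corner of the face box and divided by the box width and height, so the features do not depend on where the face sits in the image or how large it is. A minimal sketch of that step in isolation follows; the helper name `normalize_landmarks` and the sample numbers are made up for illustration.

```python
def normalize_landmarks(points, left, top, width, height):
    """Flatten (x, y) points into [x0, y0, x1, y1, ...] relative to the face box."""
    features = []
    for x, y in points:
        features.append((x - left) / width)
        features.append((y - top) / height)
    return features


# Made-up box at (100, 50), 200 px wide and 100 px high, with three made-up points.
box = dict(left=100, top=50, width=200, height=100)
pts = [(100, 50), (200, 100), (300, 150)]
print(normalize_landmarks(pts, **box))  # [0.0, 0.0, 0.5, 0.5, 1.0, 1.0]
```

With 68 landmarks this yields the 136 values per face that the classifier is trained on. Landmarks can fall slightly outside the detection box, so values below 0 or above 1 are possible.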

