DO280: persistent storage for the internal registry and chapter lab

2022-06-30 03:46:00 【It migrant worker brother goldfish】

Personal profile: Hello everyone, I am Brother Goldfish, a CSDN rising-star creator in the operations and maintenance field, a Huawei Cloud sharing expert, and an Alibaba Cloud community expert blogger.

Personal qualifications: CCNA, HCNP, CSNA (Network Analyst), Soft Exam junior and intermediate network engineer, RHCSA, RHCE, RHCA, RHCI, ITIL

Maxim: Hard work doesn't guarantee success, but if you want to succeed you must work hard.

Persistent storage for the internal registry

Creating a persistent volume for the internal registry

The OCP internal registry is an important component of the source-to-image (S2I) process, which is used to create pods. The final output of the S2I process is a container image that is pushed to the OCP internal registry and can then be used for deployments.

In production, it is generally recommended to give the internal registry persistent storage. Otherwise, pods created by S2I may fail to start after the registry pod is re-created, for example after a master node restarts.

The OpenShift installer configures and starts a default persistent registry. The registry uses an NFS share defined by the openshift_hosted_registry_storage_* variables in the inventory file. In production, Red Hat recommends that persistent storage be provided by an external, dedicated storage server configured for resilience and high availability.

The advanced installer configures the NFS server to use persistent storage on an external NFS server, namely the one defined in the list of NFS servers in the [nfs] group. That server is used together with the openshift_hosted_registry_storage_* variables to configure the NFS server.

Sample configuration :

[OSEv3:vars]

openshift_hosted_registry_storage_kind=nfs # Storage back end for the OCP registry

openshift_hosted_registry_storage_access_modes=['ReadWriteMany'] # Access mode; the default, ReadWriteMany, lets multiple nodes mount the volume read-write

openshift_hosted_registry_storage_nfs_directory=/exports # NFS storage directory on the NFS server

openshift_hosted_registry_storage_nfs_options='*(rw,root_squash)' # NFS options for the storage volume, added to /etc/exports.d/openshift-ansible.exports. rw allows read-write access to the NFS volume; root_squash prevents a remotely connected root user from having root privileges and maps it to the nfsnobody user ID

openshift_hosted_registry_storage_volume_name=registry # Name of the NFS directory used for the persistent registry

openshift_hosted_registry_storage_volume_size=40Gi # Size of the persistent volume

... output omitted ...

[nfs]

services.lab.example.com
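To review which registry storage settings an inventory actually carries, the variables can be filtered out of the file. The following is a minimal sketch; the function name `registry_storage_vars` and its single path argument are assumptions made for illustration and are not part of the OpenShift installer.

```shell
# Print the registry storage settings found in an Ansible inventory file.
# $1 is the path to the inventory (a hypothetical argument for this sketch).
registry_storage_vars() {
    # Keep only the openshift_hosted_registry_storage_* assignments and
    # strip any trailing "# ..." annotation from each line.
    grep '^openshift_hosted_registry_storage_' "$1" | sed 's/[[:space:]]*#.*$//'
}

# Example (the inventory path is an assumption):
#   registry_storage_vars /home/student/do280-ansible/inventory
```

Filtering on the variable prefix keeps the check independent of where the variables sit inside the [OSEv3:vars] section.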

After the installation has configured storage for the persistent registry, OpenShift creates a persistent volume named registry-volume. The persistent volume has a capacity of 40Gi and, as defined, a reclaim policy of Retain. A persistent volume claim in the default project claims this PV.

[[email protected] ~]$ oc describe pv registry-volume

Name: registry-volume # Name of the persistent volume

Labels: <none>

Annotations: pv.kubernetes.io/bound-by-controller=yes

StorageClass:

Status: Bound

Claim: default/registry-claim # Claim that uses the persistent volume

Reclaim Policy: Retain # Default reclaim policy; a volume with the Retain policy is not erased after it is released from its claim

Access Modes: RWX # Access mode of the persistent volume, set by the openshift_hosted_registry_storage_access_modes=['ReadWriteMany'] variable in the Ansible inventory file

Capacity: 40Gi # Size of the persistent volume, set by the openshift_hosted_registry_storage_volume_size variable in the Ansible inventory file

Message:

Source: # Location of the storage back end and the NFS share

Type: NFS (an NFS mount that lasts the lifetime of a pod)

Server: services.lab.example.com

Path: /exports/registry

ReadOnly: false

Events: <none>
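When only one or two of these fields matter, such as the reclaim policy or the capacity, they can be pulled out of the describe output with a small filter. This is a sketch under the assumption that the output keeps the `Label: value` layout shown above; `pv_field` is a hypothetical helper name.

```shell
# Print the value of one field from `oc describe pv` text read on stdin.
# $1 is the field label exactly as printed, for example "Reclaim Policy".
pv_field() {
    # Remove the label, the colon and the padding, then keep the first match.
    sed -n "s/^[[:space:]]*$1:[[:space:]]*//p" | head -n 1
}

# Example:
#   oc describe pv registry-volume | pv_field "Reclaim Policy"
```

For scripting, `oc get pv registry-volume -o jsonpath='{.spec.persistentVolumeReclaimPolicy}'` reads the same value from the API object without parsing text.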

Run the following command to confirm that the OpenShift internal registry is configured to use registry-volume through the default PersistentVolumeClaim.

[[email protected] ~]$ oc describe dc/docker-registry | grep -A4 Volumes

Volumes:

registry-storage:

Type: PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)

ClaimName: registry-claim

ReadOnly: false

The OCP internal registry stores images and metadata as ordinary files and folders, so you can inspect the PV's backing storage to check whether the registry has written files to it.

In production, you would do this by accessing the external NFS server. In this classroom environment, however, the NFS share is configured on the services VM, so SSH to services to verify that the OCP internal registry has stored images in persistent storage.

Example: an application named hello is running in the default namespace. The following command verifies that its image is stored in persistent storage.

[[email protected] ~]$ ssh [email protected] ls -l \

/var/export/registryvol/docker/registry/v2/repositories/default/
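The same check can be widened to every project by listing all repositories under the registry's storage root. The sketch below assumes the directory layout suggested by the path above, `docker/registry/v2/repositories/<namespace>/<name>`; the function name and its argument are hypothetical, and it relies on the `-mindepth` and `-maxdepth` options found in GNU and BSD find.

```shell
# List image repositories as "namespace/name" under a registry storage root.
# $1 is the storage root, for example the directory exported over NFS.
list_registry_repos() {
    repos_dir="$1/docker/registry/v2/repositories"
    # Fail early if the registry has not written anything yet.
    [ -d "$repos_dir" ] || return 1
    # Each repository sits two levels below the repositories directory.
    ( cd "$repos_dir" && find . -mindepth 2 -maxdepth 2 -type d | sed 's|^\./||' | sort )
}

# Example, run on the NFS server:
#   list_registry_repos /var/export/registryvol
```

An empty result, or a non-zero exit status, suggests that no image has been pushed to persistent storage yet.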

Chapter lab

Preparing the environment

[[email protected] ~]$ lab install-prepare setup

[[email protected] ~]$ cd /home/student/do280-ansible

[[email protected] do280-ansible]$ ./install.sh

Tip: skip this step if you already have a complete environment.

Preparing for this lab

[[email protected] ~]$ lab storage-review setup

Configuring NFS

This lab does not explain in detail how NFS is configured and created. It uses the /root/DO280/labs/storage-review/config-review-nfs.sh script directly; the script's contents can be viewed as shown below.

The NFS share is provided by the services node.

[[email protected] ~]# less -FiX /root/DO280/labs/storage-review/config-review-nfs.sh

[[email protected] ~]# /root/DO280/labs/storage-review/config-review-nfs.sh # Create the NFS export

[[email protected] ~]# showmount -e # Verify the exports

Export list for services.lab.example.com:

/var/export/dbvol *

/var/export/review-dbvol *

/exports/prometheus-alertbuffer *

/exports/prometheus-alertmanager *

/exports/prometheus *

/exports/etcd-vol2 *

/exports/logging-es-ops *

/exports/logging-es *

/exports/metrics *

/exports/registry *
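With this many exports, it is easy to miss the one the lab needs. A small filter can confirm that a given path is present in the list. This is a sketch that assumes the `showmount -e` layout shown above, with one header line followed by one export per line; `export_is_listed` is a hypothetical helper name.

```shell
# Succeed if the given path appears in `showmount -e` text read on stdin.
# $1 is the exported path to look for, for example /var/export/review-dbvol.
export_is_listed() {
    # Skip the "Export list for ..." header and compare the path field exactly,
    # so /var/export/dbvol does not match /var/export/review-dbvol.
    awk -v p="$1" 'NR > 1 && $1 == p { found = 1 } END { exit !found }'
}

# Example:
#   showmount -e services.lab.example.com | export_is_listed /var/export/review-dbvol \
#       && echo "export is available"
```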

Creating the persistent volume

[[email protected] ~]$ oc login -u admin -p redhat https://master.lab.example.com

[[email protected] ~]$ less -FiX /home/student/DO280/labs/storage-review/review-volume-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: review-pv
spec:
  capacity:
    storage: 3Gi
  accessModes:
    - ReadWriteMany
  nfs:
    path: /var/export/review-dbvol
    server: services.lab.example.com
  persistentVolumeReclaimPolicy: Recycle

[[email protected] ~]$ oc create -f /home/student/DO280/labs/storage-review/review-volume-pv.yaml

[[email protected] ~]$ oc get pv # List the persistent volumes

NAME               CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM                                   STORAGECLASS   REASON   AGE
etcd-vol2-volume   1G         RWO            Retain           Bound       openshift-ansible-service-broker/etcd                           6d
registry-volume    40Gi       RWX            Retain           Bound       default/registry-claim                                          6d
review-pv          3Gi        RWX            Recycle          Available                                                                   5s
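A volume in the Available state stays unused until a claim requests it. In this lab the claim comes from the template, but the shape of such a claim can be sketched by hand. The function below prints a PersistentVolumeClaim manifest; the function name `render_pvc` and the claim name `review-claim` are hypothetical and do not come from the lab files.

```shell
# Print a PersistentVolumeClaim manifest requesting ReadWriteMany storage.
# $1 is the claim name, $2 is the requested size (for example 3Gi).
render_pvc() {
    cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: $2
EOF
}

# Example (creates the claim in the current project):
#   render_pvc review-claim 3Gi | oc create -f -
```

A claim with the same access mode and a request no larger than 3Gi would be a candidate to bind to review-pv, provided no other available volume is a closer match.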

Deploying the template

[[email protected] ~]$ less -FiX /home/student/DO280/labs/storage-review/instructor-template.yaml

[[email protected] ~]$ oc create -n openshift -f /home/student/DO280/labs/storage-review/instructor-template.yaml

# Create the template in the openshift namespace

Creating the project

[[email protected] ~]$ oc login -u developer -p redhat https://master.lab.example.com

[[email protected] ~]$ oc new-project instructor

Deploying the application from the web console

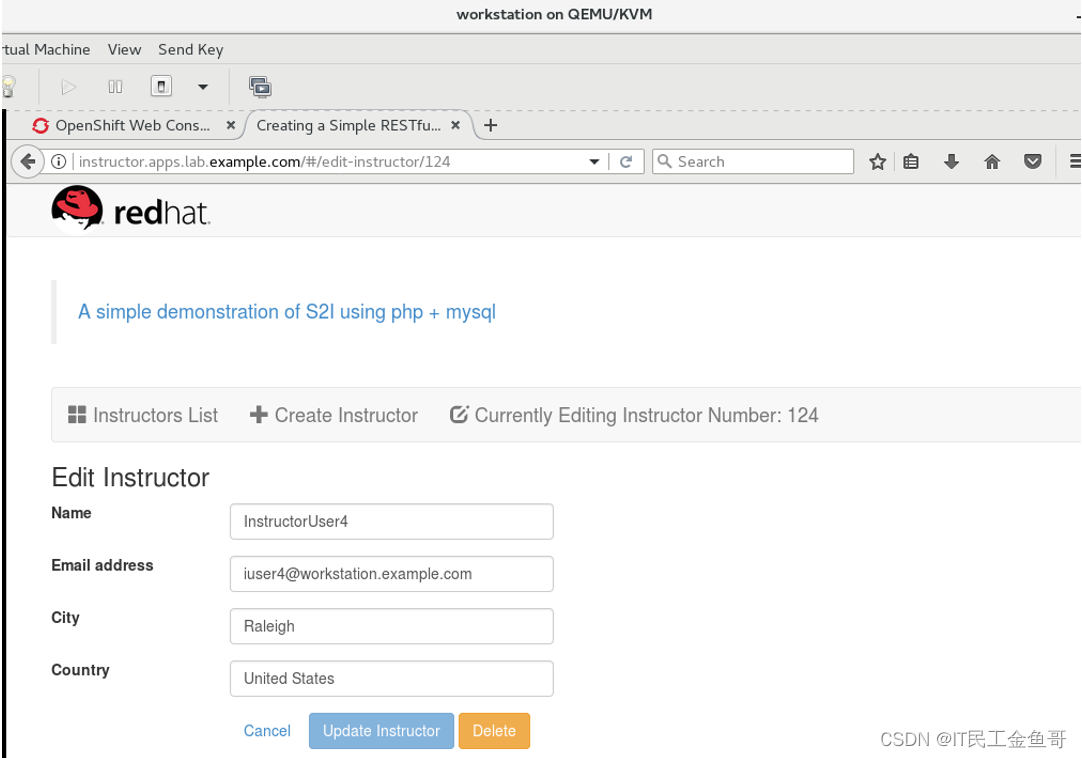

Open https://master.lab.example.com in a browser.

Select Catalog.

Select PHP, then choose instructor-template.

Set the Application Hostname, then continue to the next step. The template also creates a database server.

Click Continue to project overview to monitor the application's build process. In the Provided Services frame, click instructor. Click the drop-down arrow next to the deployment configuration #1 entry to open the deployment panel. When the build completes, a green check mark should appear next to Complete in the build section.

Port forwarding

[[email protected] ~]$ oc login -u developer -p redhat https://master.lab.example.com

[[email protected] ~]$ oc get pod

NAME READY STATUS RESTARTS AGE

instructor-1-build 0/1 Completed 0 15m

instructor-1-zqtwp 1/1 Running 0 14m

mysql-1-2k8kb 1/1 Running 0 15m

[[email protected] ~]$ oc port-forward mysql-1-2k8kb 3306:3306
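Pod names carry a generated suffix, so the name used above changes on every deployment. One way to avoid copying it by hand is to pick the running pod from the `oc get pod` listing. This is a sketch that assumes the column layout shown above (NAME first, STATUS third); `running_pod` is a hypothetical helper name.

```shell
# Print the name of the first Running pod whose name starts with "<prefix>-".
# $1 is the name prefix, for example "mysql"; input is `oc get pod` text on stdin.
running_pod() {
    awk -v prefix="$1-" 'index($1, prefix) == 1 && $3 == "Running" { print $1; exit }'
}

# Example:
#   oc port-forward "$(oc get pod | running_pod mysql)" 3306:3306
```

Matching on the STATUS column skips build pods such as instructor-1-build, which share the application's prefix but have already completed.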

Populating the database

[[email protected] ~]$ mysql -h127.0.0.1 -u instructor -ppassword \

instructor < /home/student/DO280/labs/storage-review/instructor.sql

[[email protected] ~]$ mysql -h127.0.0.1 -u instructor -ppassword instructor -e "select * from instructors;"

+------------------+-----------------+--------------------------------+----------------+-------+------------+---------------+
| instructorNumber | instructorName  | email                          | city           | state | postalCode | country       |
+------------------+-----------------+--------------------------------+----------------+-------+------------+---------------+
|              103 | DemoUser1       | [email protected]              | Raleigh        | NC    | 27601      | United States |
|              112 | InstructorUser1 | [email protected]              | Rio de Janeiro | RJ    | 22021-000  | Brasil        |
|              114 | InstructorUser2 | [email protected]              | Raleigh        | NC    | 27605      | United States |
|              123 | InstructorUser3 | [email protected]              | Sao Paulo      | SP    | 01310-000  | Brasil        |
+------------------+-----------------+--------------------------------+----------------+-------+------------+---------------+

Testing access

From a browser on workstation, open http://instructor.apps.lab.example.com

Try adding a record.

Verification

[[email protected] ~]$ lab storage-review grade # Grade the lab with the classroom script

Cleaning up the lab

[[email protected] ~]$ oc login -uadmin -predhat

[[email protected] ~]$ oc delete project instructor

[[email protected] ~]$ oc delete pv review-pv

[[email protected] ~]$ ssh [email protected]

[[email protected] ~]# rm -rf /var/export/review-dbvol

[[email protected] ~]# rm -f /etc/exports.d/review-dbvol.exports

Summary

- Red Hat OpenShift Container Platform uses PersistentVolumes (PVs) to provide persistent storage for pods.

- OpenShift projects use PersistentVolumeClaim (PVC) resources to request that a PV be assigned to the project.

- The OpenShift installer configures and starts a default registry that uses an NFS share exported from the OpenShift master.

- A set of Ansible variables allows the default OpenShift registry to be configured with external NFS storage. This creates a persistent volume and a persistent volume claim.

RHCA certification requires studying for and passing five exams, and preparing for them takes a lot of time. Keep going, you can do it 🤪.

That concludes Brother Goldfish's brief introduction to Chapter 6 of DO280: persistent storage for the internal registry and the chapter lab. I hope it helps everyone who reads this article.

This article is part of the RHCA column: RHCA memoir

边栏推荐

猜你喜欢

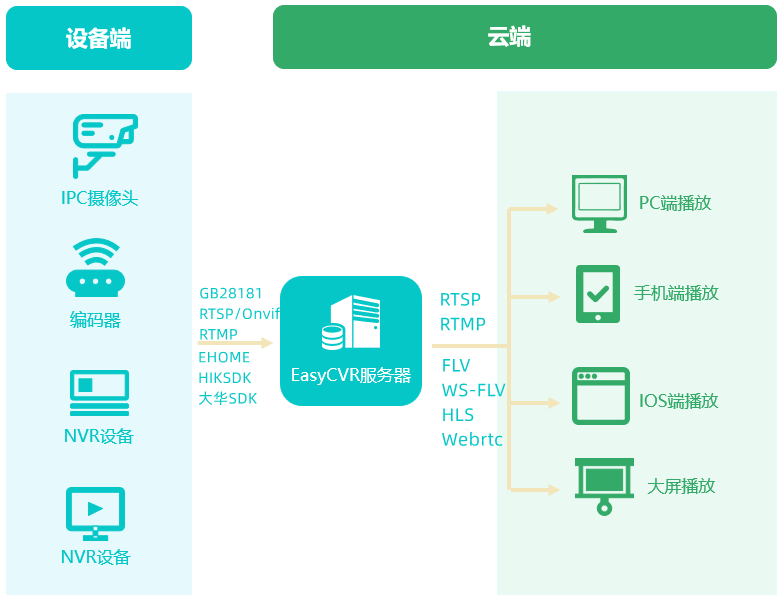

如何通过进程启动来分析和解决EasyCVR内核端口报错问题?

Geometric objects in shapely

Number of students from junior college to Senior College (4)

![[summary of skimming questions] database questions are summarized by knowledge points (continuous update / simple and medium questions have been completed)](/img/89/fc02ce355c99031623175c9f351790.jpg)

[summary of skimming questions] database questions are summarized by knowledge points (continuous update / simple and medium questions have been completed)

Tidb 6.0: rendre les GRT plus efficaces 丨 tidb Book Rush

11: I came out at 11:04 after the interview. What I asked was really too

NER中BiLSTM-CRF解读score_sentence

【筆記】AB測試和方差分析

Hash design and memory saving data structure design in redis

TiDB 6.0:讓 TSO 更高效丨TiDB Book Rush

随机推荐

NER中BiLSTM-CRF解读score_sentence

2021-07-05

DO280私有仓库持久存储与章节实验

How do college students make money by programming| My way to make money in College

Vscode+anaconda+jupyter reports an error: kernel did with exit code

How to use Jersey to get the complete rest request body- How to get full REST request body using Jersey?

Usage record of unity input system (instance version)

【作业】2022.5.24 MySQL 查操作

Simple theoretical derivation of SVM (notes)

[FAQ] page cross domain and interface Cross Domain

[punch in - Blue Bridge Cup] day 2 --- format output format, ASCII

[qt] qmap usage details

1151_ Makefile learning_ Static matching pattern rules in makefile

声网自研传输层协议 AUT 的落地实践丨Dev for Dev 专栏

UML图与List集合

C [advanced] C interface

December2020 - true questions and analysis of C language (Level 2) in the youth level examination of the Electronic Society

SDS understanding in redis

yarn的安装和使用

DRF -- nested serializer (multi table joint query)