
From 5 seconds to 1 second: notes on a performance optimization with a "very" significant effect

2022-07-23 17:35:00 【Fat technology house】

Performance optimization sometimes looks like a rather abstract technical requirement. Unless the code is unbearably slow, few companies are willing to invest resources in it. Even with performance data in hand, it is hard to persuade management to approve work that brings an operation from 300ms down to 150ms, because it has no business value.

It's sad, but that is the reality.

Performance optimization is usually initiated by people who care about technical quality and who optimize proactively based on observed metrics. They tend to have a craftsman's spirit, picking at every millisecond and striving for perfection. If they have the time, of course.

1. Background and objectives of the optimization

Our optimization happened because things had become unbearable. It was an after-the-fact remedy, driven by a problem. There is usually nothing wrong with that: business comes first, and iteration happens while filling in the pits.

Let's start with the background. The service optimized this time had very unstable response times. As the amount of data grew, most requests took around 5-6 seconds, well beyond what an ordinary user will put up with.

So of course it had to be optimized.

To illustrate the optimization target, I sketched its topology. As shown in the figure, this is a set of services in a microservice architecture.

Our optimization target is a relatively upstream service. It calls many downstream service providers through Feign interfaces, aggregates and splices the data it gets back, and finally sends the result to the browser client through the zuul gateway and nginx.

To observe the call relationships between services and the monitoring data, we connected the Skywalking call-chain platform and the Prometheus monitoring platform, which collect the data that optimization decisions are based on. Before optimizing, we first need to look at the two technical indicators used as a reference.

- Throughput: the number of events handled per unit of time, such as QPS, TPS, or HPS.

- Average response time: the average time taken per request.

The smaller the average response time the better; the smaller it is, the higher the throughput. Throughput can also be raised by making good use of multiple cores, handling more requests per unit of time through parallelism.

The goal of this optimization is to bring the average response time of certain interfaces down to within 1 second, and to raise throughput, that is QPS, so that a single instance can handle more concurrent requests.

2. Greatly reducing time consumption through compression

Let me first introduce the single most important optimization that made the system fly: compression.

Looking at the request data in Chrome's inspect tool, we found that one key interface transferred about 10MB of data on every call. That is a lot of stuff crammed in.

With that much data, the download alone takes a long time. As shown in the figure below, for a request I made to the juejin home page, content download represents the time the data spends in transit over the network. If the user's bandwidth is low, this request will take a very long time.

To reduce the transfer time over the network, enable gzip compression. gzip compression trades time for space: CPU time is spent compressing in exchange for fewer bytes on the wire. For most services the last hop is nginx, and most people compress at the nginx layer. The main configuration is as follows:

gzip on;
gzip_vary on;
gzip_min_length 10240;
gzip_proxied expired no-cache no-store private auth;
gzip_types text/plain text/css text/xml text/javascript application/x-javascript application/xml;
gzip_disable "MSIE [1-6]\.";

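How impressive is the compression ratio? Take a look at this screenshot: after compression, the data shrank from 8.95MB to 368KB, which a browser can download in an instant. Keep in mind that nginx only compresses responses whose MIME type is listed in gzip_types (text/html is always compressed), so a JSON API also needs application/json in that list.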
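To get a feel for why API responses compress so well, here is a minimal sketch that gzips a synthetic JSON list with the JDK's own GZIPOutputStream. The payload shape and the class name GzipRatioDemo are made up for illustration; the article's real response and its exact ratio are not reproduced here.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPOutputStream;

class GzipRatioDemo {

    // Compress a byte array with gzip and return the compressed bytes.
    static byte[] gzip(byte[] raw) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (GZIPOutputStream out = new GZIPOutputStream(buffer)) {
            out.write(raw);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buffer.toByteArray();
    }

    // Build a hypothetical JSON array of similar records,
    // the kind of repetitive structure list-style APIs tend to return.
    static byte[] samplePayload(int records) {
        StringBuilder sb = new StringBuilder("[");
        for (int i = 0; i < records; i++) {
            if (i > 0) {
                sb.append(',');
            }
            sb.append("{\"id\":").append(i)
              .append(",\"status\":\"ACTIVE\",\"category\":\"default\",\"remark\":null}");
        }
        return sb.append(']').toString().getBytes(StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] raw = samplePayload(100_000);
        byte[] packed = gzip(raw);
        System.out.printf("raw=%d bytes, gzip=%d bytes, ratio=%.1f%%%n",
                raw.length, packed.length, 100.0 * packed.length / raw.length);
    }
}
```

Repeated field names and values are exactly what gzip's dictionary matching exploits, which is why large JSON result sets tend to shrink dramatically.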

But wait: nginx is only the outermost hop. We are not done yet, and the request can be made faster still.

Look at the request path below. Because we use microservices, the flow of a request becomes more complicated: nginx does not call the relevant service directly; it calls the zuul gateway, the zuul gateway calls the actual target service, and the target service in turn calls other services. Intranet bandwidth is still bandwidth, and network latency also affects call speed, so these hops should be compressed too.

nginx -> zuul -> service A -> service E

If you want all calls between Feign clients to go through a compressed channel, additional configuration is required. Ours are Spring Boot services, so we can rely on okhttp's transparent compression.

Add its dependency:

<dependency>
    <groupId>io.github.openfeign</groupId>
    <artifactId>feign-okhttp</artifactId>
</dependency>

Enable compression in the server configuration:

server:
  port: 8888
  compression:
    enabled: true
    min-response-size: 1024
    mime-types: ["text/html","text/xml","application/xml","application/json","application/octet-stream"]

Enable okhttp in the client configuration:

feign:
  httpclient:
    enabled: false
  okhttp:
    enabled: true

After these compression steps, the average response time of our interface dropped directly from 5-6 seconds to 2-3 seconds. The effect is very significant.

Of course, we also worked on the result set: objects and fields that the front end did not use were trimmed from the returned data. In general, though, such changes are invasive and require adjusting a lot of code, so the effort we put into this was limited, and so, naturally, was the effect.

3. Fetching data in parallel for a faster response

Next, we need to dig into the code logic. As mentioned above, the user-facing interface is really a data aggregation interface. For every request, it calls dozens of other service interfaces through Feign, fetches the data, and then splices the result set together.

Why is it slow? Because these requests are all serial! A Feign call is a remote call, a network I/O-intensive operation that spends most of its time waiting. If the data dependencies allow it, it is very well suited to parallel invocation.

First, we need to analyze the dependencies among these dozens of sub-interfaces to see whether they have strict ordering requirements. If most of them do not, so much the better.

The analysis gave mixed results. According to the call logic, these interfaces roughly fall into two classes, A and B. The class A interfaces must be requested first; once their data has been spliced, it can be used by class B. Within class A and within class B, however, there is no ordering requirement.

In other words, we can split this interface into two parts that execute sequentially, and within each part fetch the data in parallel.

We then reworked it according to this analysis. With CountDownLatch from the concurrent package, the merge step is easy to implement.

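CountDownLatch latch = new CountDownLatch(jobSize);
// submit jobs
executor.execute(() -> {
    try {
        // job code
    } finally {
        latch.countDown(); // always count down, even if the job throws
    }
});
executor.execute(() -> {
    try {
        // job code
    } finally {
        latch.countDown();
    }
});
...
// end submit
// await returns false if the timeout elapses before all jobs finish
boolean finished = latch.await(timeout, TimeUnit.MILLISECONDS);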

The results were very satisfying: the interface's time consumption dropped by nearly half! At this point, the interface time was down to under 2 seconds.
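The two-phase split described above (class A in parallel, then class B in parallel) can be sketched as a small self-contained program. This is only an illustration under assumptions: slowCall stands in for a Feign client call, and the keys "user", "org", "orders", and "quota" are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

class TwoPhaseFetch {

    // Stand-in for a remote Feign call (hypothetical): waits 100 ms, then echoes the key.
    static String slowCall(String key) {
        try {
            Thread.sleep(100);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return key;
    }

    // Runs every job of one phase in parallel and blocks until all have finished.
    // Jobs must not return null, because ConcurrentHashMap rejects null values.
    static Map<String, String> runPhase(ExecutorService executor,
                                        Map<String, Supplier<String>> jobs,
                                        long timeoutMillis) {
        Map<String, String> results = new ConcurrentHashMap<>();
        CountDownLatch latch = new CountDownLatch(jobs.size());
        jobs.forEach((name, job) -> executor.execute(() -> {
            try {
                results.put(name, job.get());
            } finally {
                latch.countDown(); // count down even if the job throws
            }
        }));
        try {
            if (!latch.await(timeoutMillis, TimeUnit.MILLISECONDS)) {
                throw new IllegalStateException("phase did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
        return results;
    }

    static Map<String, String> aggregate(ExecutorService executor) {
        // Class A: the calls do not depend on each other.
        Map<String, Supplier<String>> classA = new HashMap<>();
        classA.put("user", () -> slowCall("user-42"));
        classA.put("org", () -> slowCall("org-7"));
        Map<String, String> a = runPhase(executor, classA, 2000);

        // Class B: each call needs class A data, but not the other class B results.
        Map<String, Supplier<String>> classB = new HashMap<>();
        classB.put("orders", () -> slowCall("orders-of-" + a.get("user")));
        classB.put("quota", () -> slowCall("quota-of-" + a.get("org")));
        Map<String, String> merged = new HashMap<>(a);
        merged.putAll(runPhase(executor, classB, 2000));
        return merged;
    }

    public static void main(String[] args) {
        ExecutorService executor = Executors.newFixedThreadPool(4);
        try {
            System.out.println(aggregate(executor));
        } finally {
            executor.shutdown();
        }
    }
}
```

With four simulated calls of 100 ms each, the serial version would need roughly 400 ms, while the two parallel phases need roughly 200 ms, which matches the "nearly half" pattern when the work splits evenly into two dependent stages.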

You may ask: why not use Java's parallel streams?

Concurrent programming calls for caution, especially in business code. By default, parallel streams run on the JVM-wide common ForkJoinPool, so blocking network calls there would compete with everything else that uses it. Instead, we constructed a dedicated thread pool to support concurrent fetching.

final ThreadPoolExecutor executor = new ThreadPoolExecutor(100, 200, 1,
        TimeUnit.HOURS, new ArrayBlockingQueue<>(100));
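One thing worth knowing about a bounded pool like the one above: once all threads are busy and the queue is full, the default AbortPolicy rejects new tasks with a RejectedExecutionException. The sketch below is one possible variation, not the article's actual setup. The thread-name prefix "aggregation-" and the choice of CallerRunsPolicy are assumptions made for illustration.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class AggregationPool {

    // Builds a dedicated pool with named daemon threads.
    // When the pool is saturated, CallerRunsPolicy makes the submitting thread
    // run the task itself instead of throwing RejectedExecutionException.
    static ThreadPoolExecutor create(int core, int max, int queueCapacity) {
        AtomicInteger seq = new AtomicInteger();
        ThreadFactory factory = r -> {
            Thread t = new Thread(r, "aggregation-" + seq.incrementAndGet());
            t.setDaemon(true);
            return t;
        };
        return new ThreadPoolExecutor(core, max, 1, TimeUnit.HOURS,
                new ArrayBlockingQueue<>(queueCapacity),
                factory,
                new ThreadPoolExecutor.CallerRunsPolicy());
    }

    // Submits a burst of trivial tasks and returns how many of them completed.
    static int submitBurst(ThreadPoolExecutor pool, int tasks) {
        AtomicInteger done = new AtomicInteger();
        CountDownLatch latch = new CountDownLatch(tasks);
        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> {
                done.incrementAndGet();
                latch.countDown();
            });
        }
        try {
            latch.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return done.get();
    }

    public static void main(String[] args) {
        // Deliberately tiny pool so that the burst overflows the queue.
        ThreadPoolExecutor pool = create(1, 1, 1);
        System.out.println("completed: " + submitBurst(pool, 50));
        pool.shutdown();
    }
}
```

CallerRunsPolicy degrades gracefully toward serial execution under overload, which is often acceptable for an aggregation endpoint; whether it fits depends on how the calling thread is used.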

Compression and parallelization were the most effective measures in our optimization. They directly cut away most of the request's time consumption. But we were still not satisfied, because each request still took more than 1 second.

4. Tiered caching for further acceleration

We found that some data was being fetched inside loops, producing many redundant requests. That could not be tolerated.

for (Item item : items) {
    // the same remote call is repeated on every iteration
    client.getData();
}

If these frequently used results are cached, the number of network I/O requests drops sharply and the program runs more efficiently.
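The simplest form of this idea is to remember results for the duration of a single request, so that each distinct key triggers at most one remote call. A minimal sketch follows; the class name LoopMemo and the remoteLookup parameter are hypothetical stand-ins for the real client call.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

class LoopMemo {

    // Resolves every key, but invokes the (slow) remote lookup at most once per distinct key.
    // Note: if the lookup returns null, computeIfAbsent stores nothing and the key is looked up again.
    static <K, V> List<V> fetchAll(List<K> keys, Function<K, V> remoteLookup) {
        Map<K, V> seen = new HashMap<>();
        List<V> out = new ArrayList<>(keys.size());
        for (K key : keys) {
            out.add(seen.computeIfAbsent(key, remoteLookup));
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> keys = new ArrayList<>();
        keys.add("a");
        keys.add("b");
        keys.add("a");
        // The lambda stands in for a remote call; it runs twice, not three times.
        System.out.println(fetchAll(keys, k -> k.toUpperCase()));
    }
}
```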

Caching is very important in the optimization of most applications. In our scenario, though, compared with compression and parallelization, its effect was not very pronounced; it still cut the request time by about thirty to forty milliseconds.

Here is what we did.

First, for data whose code logic is simple and fits the Cache Aside Pattern, we put it in the distributed cache, Redis. Specifically: on read, read the cache first, and if the cache misses, read the database; on update, update the database first and then delete the cache (delayed double delete). This handles most caching scenarios with simple business logic and takes care of data consistency.
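The read and update paths of Cache Aside can be sketched in a few lines. In this illustration two in-memory maps stand in for Redis and MySQL, and the class name CacheAsideStore is hypothetical. The delayed second delete is only indicated in a comment, since its delay and scheduling depend on the real system.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

class CacheAsideStore {

    // Stand-ins for illustration: in the article the cache is Redis and the store is MySQL.
    private final Map<String, String> cache = new ConcurrentHashMap<>();
    private final Map<String, String> database = new ConcurrentHashMap<>();
    private final AtomicInteger databaseReads = new AtomicInteger();

    // Read path: cache first, database on a miss, then populate the cache.
    String read(String key) {
        String cached = cache.get(key);
        if (cached != null) {
            return cached;
        }
        databaseReads.incrementAndGet();
        String value = database.get(key);
        if (value != null) {
            cache.put(key, value);
        }
        return value;
    }

    // Update path: write the database first, then delete (not update) the cache entry.
    // A delayed double delete would schedule a second cache.remove(key) shortly afterwards.
    void update(String key, String value) {
        database.put(key, value);
        cache.remove(key);
    }

    int databaseReads() {
        return databaseReads.get();
    }

    public static void main(String[] args) {
        CacheAsideStore store = new CacheAsideStore();
        store.update("k", "v1");
        store.read("k"); // miss, goes to the database
        store.read("k"); // hit, served from the cache
        System.out.println("database reads: " + store.databaseReads());
    }
}
```

Deleting the entry rather than writing the new value into the cache keeps the update path simple: the next read repopulates the cache from the database, which remains the source of truth.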

However, that alone is not enough. Some business logic is very complex and the update code is scattered, which makes it unsuitable for conversion to the Cache Aside Pattern. We learned that some data has the following characteristics:

- After an expensive fetch, the data is used again within a very short time

- The consistency requirement that the business places on the data can be relaxed to within seconds

- The data is used across code paths and across threads, in a variety of ways

For this case, we designed an in-heap memory cache with a very short lifetime: the data expires after 1 second and is then read again from the source. Suppose a node calls a server interface 1k times per second; with this cache we reduced that directly to 1 call.

Here we used Guava's LoadingCache, which cut the number of Feign interface calls by orders of magnitude.

LoadingCache<String, String> lc = CacheBuilder
        .newBuilder()
        .expireAfterWrite(1, TimeUnit.SECONDS)
        .build(new CacheLoader<String, String>() {
            @Override
            public String load(String key) throws Exception {
                return slowMethod(key);
            }
        });
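To see how a 1-second lifetime collapses a burst of calls into one load, here is a dependency-free sketch of the same idea built on ConcurrentHashMap. It is not Guava's implementation and lacks its size limits and statistics; the class name ShortLivedCache and the injectable nanoClock (used so that expiry can be demonstrated without sleeping) are assumptions for illustration.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;
import java.util.function.LongSupplier;

class ShortLivedCache<K, V> {

    private static final class Entry<V> {
        final V value;
        final long loadedAtNanos;

        Entry(V value, long loadedAtNanos) {
            this.value = value;
            this.loadedAtNanos = loadedAtNanos;
        }
    }

    private final Map<K, Entry<V>> entries = new ConcurrentHashMap<>();
    private final Function<K, V> loader;
    private final long ttlNanos;
    private final LongSupplier nanoClock;

    ShortLivedCache(Function<K, V> loader, long ttlMillis, LongSupplier nanoClock) {
        this.loader = loader;
        this.ttlNanos = ttlMillis * 1_000_000L;
        this.nanoClock = nanoClock;
    }

    // Returns the cached value while it is younger than the TTL, otherwise reloads it.
    // compute() is atomic per key, so concurrent callers trigger a single load.
    V get(K key) {
        long now = nanoClock.getAsLong();
        return entries.compute(key, (k, old) ->
                old != null && now - old.loadedAtNanos < ttlNanos
                        ? old
                        : new Entry<V>(loader.apply(k), now)).value;
    }

    public static void main(String[] args) {
        ShortLivedCache<String, String> cache =
                new ShortLivedCache<String, String>(k -> "value-of-" + k, 1000, System::nanoTime);
        System.out.println(cache.get("node-1"));
    }
}
```

The trade-off is the one the article describes: callers may see data up to one TTL old, in exchange for the slow source being hit at most once per key per second.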

5. MySQL index optimization

Our business system uses a MySQL database. Because no professional DBA was involved and the tables were generated by JPA, we found a large number of unreasonable indexes during optimization, which of course had to be fixed.

Because SQL is highly sensitive, I will only cover some index optimization rules we ran into during the process; I believe you can draw analogies to your own business systems.

Indexes are very useful, but be careful: if you apply a function to an indexed column, the index will not be used. There are two other common cases where an index fails:

- The type of the indexed column differs from the type of the value passed in, so an implicit conversion is applied. For example, an int parameter is passed for a column of type varchar

- The two tables in the query use different character sets, so the join column cannot be used as an index

The most basic rule of MySQL index optimization is the leftmost prefix principle. Given three columns a, b, and c, if the query conditions use a, or a and b, or a, b, and c, then a single index (a,b,c) is enough, because it also covers a and ab. Strings can also be indexed by prefix, but this is less common in ordinary applications.

Sometimes the MySQL optimizer picks the wrong index, and we need force index to specify which one to use. In JPA this means using nativeQuery to write SQL bound to the MySQL database, so we try to avoid this situation.

Another optimization is reducing back-to-table lookups. InnoDB uses B+ trees, and when a query uses a non-primary-key index, it first goes through the secondary index to find the primary key, then through the clustered index to locate the row. That extra step is the back-to-table lookup. Using a covering index avoids it to some extent and is a common optimization. Concretely, put the queried columns together with the indexed columns into a composite index; it trades space for time.

6. JVM optimization

I usually leave JVM optimization to the last step. And unless the system has serious stalls or OOM problems, I do not go out of my way to over-tune it.

Unfortunately, because our application was given a large heap (8GB+), it stalled regularly under the default parallel collector of JDK 1.8. The pauses were not very frequent, but they ran to a few seconds, which seriously affected the smoothness of some requests.

At first the program ran bare on the JVM: no GC information, no OOM dumps, nothing was retained. To record GC information, we made the following changes.

Step one: add the various parameters for GC troubleshooting.

-XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/opt/xxx.hprof -DlogPath=/opt/logs/ -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintGCApplicationStoppedTime -XX:+PrintTenuringDistribution -Xloggc:/opt/logs/gc_%p.log -XX:ErrorFile=/opt/logs/hs_error_pid%p.log

With these, we can take the generated GC log file and upload it to a platform such as gceasy for analysis, where the JVM's throughput and the latency of each phase can be inspected.

Step two: turn on Spring Boot's GC information and connect it to Prometheus monitoring.

Add the dependency to the pom.

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-actuator</artifactId>
</dependency>

Then configure the exposed endpoints, which gives us real-time data to analyze and a basis for optimization. The prometheus endpoint additionally needs the micrometer-registry-prometheus dependency on the classpath.

management.endpoints.web.exposure.include=health,info,prometheus
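For a quick look without any monitoring stack, the JVM's cumulative GC counters can also be read in-process through the standard java.lang.management API. This is a supplementary sketch rather than part of the setup described here; the class name GcSnapshot is made up for illustration.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

class GcSnapshot {

    // Returns one line per collector with its cumulative collection count and time.
    // Collector names depend on the garbage collector the JVM was started with.
    static List<String> describe() {
        List<String> lines = new ArrayList<>();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            lines.add(String.format("%s: collections=%d, totalTimeMs=%d",
                    gc.getName(), gc.getCollectionCount(), gc.getCollectionTime()));
        }
        return lines;
    }

    public static void main(String[] args) {
        describe().forEach(System.out::println);
    }
}
```

Sampling these counters periodically and diffing them gives a rough picture of how often collections happen and how much time they take, which is useful for a sanity check against what the dashboards show.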


After observing the JVM's behavior, we switched to the G1 garbage collector. G1 has a maximum pause-time goal, which makes our GC times smoother. Its main tuning parameters are:

- -XX:MaxGCPauseMillis sets the target pause time; G1 will do its best to meet it.

- -XX:G1HeapRegionSize sets the region size. The value must be a power of 2, neither too large nor too small. If you do not know what to set, keep the default.

- -XX:InitiatingHeapOccupancyPercent starts the concurrent marking phase when overall heap usage reaches a given percentage (45% by default).

- -XX:ConcGCThreads sets the number of threads used by the concurrent collector. The default depends on the platform the JVM runs on. Changing it is not recommended.

After switching to G1, these recurring pauses miraculously disappeared! Along the way there were several memory overflow problems, but with the help of MAT they were all resolved easily in the end.

7. Other optimizations

If the engineering structure or the architecture has a fundamental flaw, then the effect of code optimization is actually limited, as in our case.

But the main code still needed adjusting. We gave special attention to critical code in time-consuming logic, and did a unified cleanup of the code according to the development conventions. A few points left a deep impression.

To reuse map collections, some colleagues call clear to clean them up after every use.

map1.clear();
map2.clear();
map3.clear();
map4.clear();

These maps hold a very large amount of data, and the clear method is a bit special: its time complexity is O(n), because it walks the entire bucket array and the array does not shrink afterwards. The result is high time consumption.

public void clear() {
    Node<K,V>[] tab;
    modCount++;
    if ((tab = table) != null && size > 0) {
        size = 0;
        for (int i = 0; i < tab.length; ++i)
            tab[i] = null;
    }
}

The same goes for the thread-safe queue ConcurrentLinkedQueue: its size() method has high time complexity because it traverses the whole queue, yet somehow my colleagues were using it. These are all performance killers.

public int size() {
    restartFromHead: for (;;) {
        int count = 0;
        for (Node<E> p = first(); p != null;) {
            if (p.item != null)
                if (++count == Integer.MAX_VALUE)
                    break;  // @see Collection.size()
            if (p == (p = p.next))
                continue restartFromHead;
        }
        return count;
    }
}
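When code only needs to know whether the queue has elements, isEmpty() avoids the traversal. When an actual count is needed on a hot path, one common workaround is to keep a separate counter next to the queue. The wrapper below is a sketch of that idea, not something taken from the article's code base; the class name CountedQueue is hypothetical.

```java
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.atomic.AtomicInteger;

class CountedQueue<E> {

    private final ConcurrentLinkedQueue<E> queue = new ConcurrentLinkedQueue<>();
    private final AtomicInteger count = new AtomicInteger();

    // Increment before offering so the counter never drops below zero.
    void offer(E item) {
        count.incrementAndGet();
        queue.offer(item);
    }

    // Decrement only when an element was actually removed.
    E poll() {
        E item = queue.poll();
        if (item != null) {
            count.decrementAndGet();
        }
        return item;
    }

    // O(1); under concurrent modification the value is a momentary approximation.
    int size() {
        return count.get();
    }

    // Cheap emptiness check that does not walk the queue.
    boolean isEmpty() {
        return queue.isEmpty();
    }

    public static void main(String[] args) {
        CountedQueue<String> q = new CountedQueue<>();
        q.offer("a");
        q.offer("b");
        q.poll();
        System.out.println("size: " + q.size());
    }
}
```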

In addition, some of the service's web pages respond slowly in themselves, because the business logic is complex and the front-end JavaScript itself executes slowly. Optimizing this code is up to our front-end colleagues. As shown in the figure, the performance tab in Chrome or Firefox makes it easy to find the time-consuming front-end code.

8. Summary

Performance optimization actually follows a pattern, but most teams wait for problems before optimizing and rarely plan ahead. With monitoring and APM it is different: we can get data at any time and use it to drive the optimization process.

Some performance problems can be solved at the level of business requirements, or at the architecture level. By the time a problem reaches the code layer and needs programmers to step in, it is because neither the requirements side nor the architecture side can, or wants to, change any more.

Performance optimization starts with gathering information and finding the bottleneck, balancing CPU, memory, network, I/O, and other resources, then reducing the average response time as far as possible and increasing throughput.

Caching, buffering, pooling, reducing lock contention, asynchrony, parallelism, and compression are all common optimization methods. In our scenario, data compression and parallel requests played the greatest role. With the help of the other optimizations, our business interface went from 5-6 seconds directly to within 1 second, which is a very impressive result. I expect it will not be optimized again for a long time to come.

边栏推荐

- True title of Blue Bridge Cup: Card [easy to understand]

- [ pytorch ] 基本使用丨7. GPU分配丨

- Log slimming operation: from 5g to 1g!

- Explication détaillée de l'injection aveugle d'erreur SQL

- 不掌握这些坑,你敢用BigDecimal吗?

- Major upgrade of openim - group chat reading diffusion model release group management function upgrade

- keras——accuracy_ Score formula

- Pymoo学习 (3):使用多目标优化找到最优解的集合

- 封玩家IP和机器码以及解开被封的教程

- 场景小小记

猜你喜欢

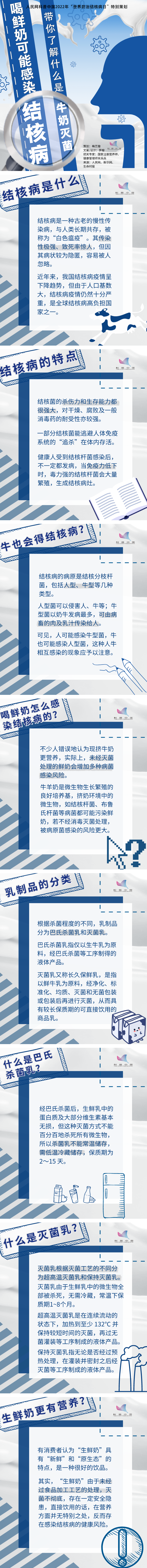

食品安全|喝鲜奶可能感染结核病?带你了解什么是牛奶灭菌

USB通信协议深入理解

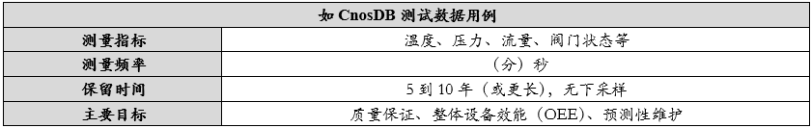

Time series data in industrial Internet of things

isEmpty 和 isBlank 的用法区别,至少一半的人答不上来...

【作业】研一(互联网新技术作业)

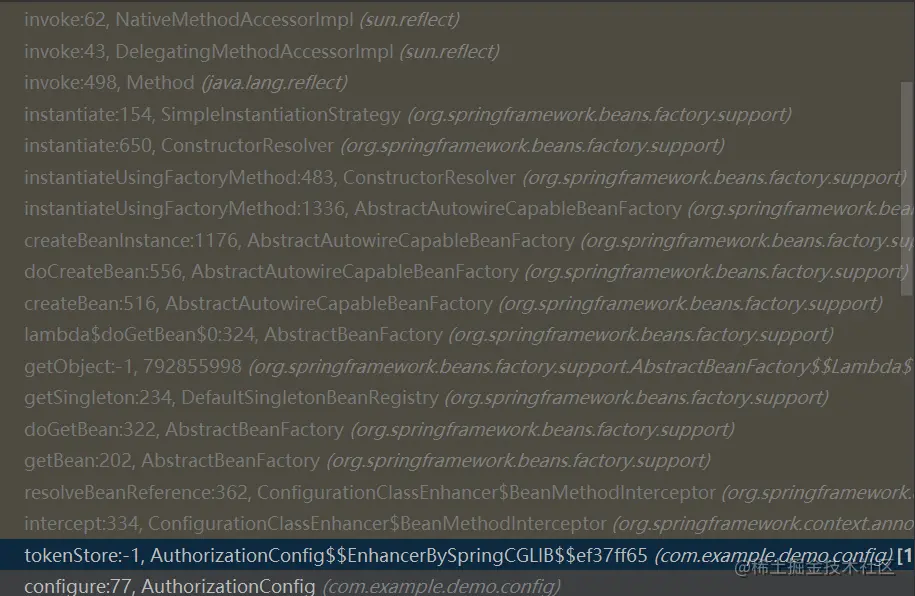

@Bean 注解的方法调用多次会创建多个bean 实例吗

PPPoE协议讲解以及拨号过程Wireshark抓包解析

Major upgrade of openim - group chat reading diffusion model release group management function upgrade

Description and usage of Axi interconnect IP core

食品安全|巧克力也有真假?关于它你了解多少

随机推荐

Kubernetes kubelet 硬核知识 架构

ride the wind and waves! Digital transformation in the era of financial technology

为啥一问 JVM 就 懵B ???

封玩家IP和机器码以及解开被封的教程

日志瘦身骚操作:从5G优化到1G!

When does MySQL use table locks and row locks?

程序环境和预处理

食品安全|火腿肠午餐肉,真有说的那么不堪?

日期格式化

xlinx pcie xvc

Encapsulate the general connection and query of the project with pymysql

OpenCV求两个区域的交集

使用 Preparedstatement 选择和显示记录的 JDBC 程序

July training (day 23) - dictionary tree

工業物聯網中的時序數據

【flask高级】从源码深入理解flask路由之endpoint

OpenIM重大优化-消息按需加载 一致性缓存 uniapp发布

乘风破浪!金融科技时代下的数字化转型之路

KV260单板PS控制设置IIC开关芯片

Could not load dynamic library ‘cudnn64_ 8.dll‘; dlerror: cudnn64_ 8.dll not found