Exploring the Native Incremental Import Feature in Sqoop 1.4.4

2022-07-03 11:55:00 【星哥玩云】

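The Basic Approach

Incremental import can be implemented without Sqoop's native incremental feature at all: a shell script generates a fixed time range relative to the current time and splices it into the Sqoop command.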
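The script-based approach can be sketched roughly as follows. This is only an illustration under stated assumptions: the one-hour window, the function name build_window_import, and the use of -P instead of an inline password are my choices, while the connection string, table, and column names are borrowed from the experiment later in this article. The script prints the command rather than running it.

```shell
#!/bin/sh
# Sketch only: build (not run) a Sqoop import restricted to a fixed time window.
# build_window_import and the one-hour window are assumptions for illustration.

build_window_import() {
  local start="$1" end="$2"
  # Row filter that stands in for the incremental range.
  local where="LASTMODIFIED >= TO_DATE('${start}', 'YYYY-MM-DD HH24:MI:SS') AND LASTMODIFIED < TO_DATE('${end}', 'YYYY-MM-DD HH24:MI:SS')"
  # --where is a standard argument of the Sqoop import tool.
  echo "sqoop import --connect jdbc:oracle:thin:@192.168.0.138:1521:orcl --username HIVE -P --table FBI_SQOOPTEST --hive-import --hive-table INCRETEST --where \"${where}\""
}

# Window: from one hour ago up to now (GNU date first, BSD date as a fallback).
end="$(date '+%Y-%m-%d %H:%M:%S')"
start="$(date -d '1 hour ago' '+%Y-%m-%d %H:%M:%S' 2>/dev/null || date -v-1H '+%Y-%m-%d %H:%M:%S')"

build_window_import "$start" "$end"
```

The weakness of this approach is that the window is computed from the clock alone: if a scheduled run is skipped or delayed, rows modified in the gap are never imported. The native feature described next avoids this by remembering the last bound.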

Overview of the Native Incremental Import Feature

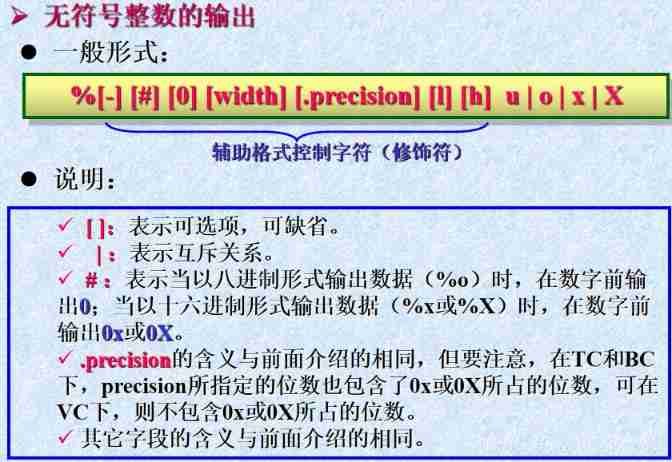

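Sqoop provides native incremental import, controlled by the following three key arguments:

| Argument | Description |
|---|---|
| --check-column (col) | Specifies the "check column" used to decide which rows fall within the incremental import range. The column must not be a character type; a numeric or date type is best. |
| --incremental (mode) | Specifies the incremental mode: append, or lastmodified (the latter fits common requirements better). |
| --last-value (value) | Specifies the upper bound of the check column from the previous import. If the check column is a last-modified timestamp, --last-value is the time the import was last run. |

Combined with the Saved Jobs mechanism, the --last-value field is updated automatically each time the incremental job is rerun. Add scheduled execution through cron or Oozie and you get true incremental updates.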

Experiment: Creating and Running an Incremental Job

Create the incremental job:

[email protected]:~/Sqoop/sqoop-1.4.4/bin$ sqoop job --create incretest -- import --connect jdbc:oracle:thin:@192.168.0.138:1521:orcl --username HIVE --password hivefbi --table FBI_SQOOPTEST --hive-import --hive-table INCRETEST --incremental lastmodified --check-column LASTMODIFIED --last-value '2014/8/27 13:00:00'

14/08/27 17:29:37 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

14/08/27 17:29:37 INFO tool.BaseSqoopTool: Using Hive-specific delimiters for output. You can override

14/08/27 17:29:37 INFO tool.BaseSqoopTool: delimiters with --fields-terminated-by, etc.

14/08/27 17:29:37 WARN tool.BaseSqoopTool: It seems that you've specified at least one of following:

14/08/27 17:29:37 WARN tool.BaseSqoopTool: --hive-home

14/08/27 17:29:37 WARN tool.BaseSqoopTool: --hive-overwrite

14/08/27 17:29:37 WARN tool.BaseSqoopTool: --create-hive-table

14/08/27 17:29:37 WARN tool.BaseSqoopTool: --hive-table

14/08/27 17:29:37 WARN tool.BaseSqoopTool: --hive-partition-key

14/08/27 17:29:37 WARN tool.BaseSqoopTool: --hive-partition-value

14/08/27 17:29:37 WARN tool.BaseSqoopTool: --map-column-hive

14/08/27 17:29:37 WARN tool.BaseSqoopTool: Without specifying parameter --hive-import. Please note that

14/08/27 17:29:37 WARN tool.BaseSqoopTool: those arguments will not be used in this session. Either

14/08/27 17:29:37 WARN tool.BaseSqoopTool: specify --hive-import to apply them correctly or remove them

14/08/27 17:29:37 WARN tool.BaseSqoopTool: from command line to remove this warning.

14/08/27 17:29:37 INFO tool.BaseSqoopTool: Please note that --hive-home, --hive-partition-key,

14/08/27 17:29:37 INFO tool.BaseSqoopTool: hive-partition-value and --map-column-hive options are

14/08/27 17:29:37 INFO tool.BaseSqoopTool: are also valid for HCatalog imports and exports

Run the job:

[email protected]:~/Sqoop/sqoop-1.4.4/bin$ ./sqoop job --exec incretest

Note the SQL statement shown in the log:

14/08/27 17:36:23 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(ID), MAX(ID) FROM FBI_SQOOPTEST WHERE ( LASTMODIFIED >= TO_DATE('2014/8/27 13:00:00', 'YYYY-MM-DD HH24:MI:SS') AND LASTMODIFIED < TO_DATE('2014-08-27 17:36:23', 'YYYY-MM-DD HH24:MI:SS') )

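Here the lower bound of LASTMODIFIED is the value given in the statement that created the job, and the upper bound is the current time, 2014-08-27 17:36:23.

Verify: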

hive> select * from incretest;

OK

2 lion 2014-08-27

Time taken: 0.085 seconds, Fetched: 1 row(s)

Next, I inserted one row into the Oracle table.

Then I ran the job again:

[email protected]:~/Sqoop/sqoop-1.4.4/bin$ ./sqoop job --exec incretest

The SQL statement shown in the log:

14/08/27 17:47:19 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(ID), MAX(ID) FROM FBI_SQOOPTEST WHERE ( LASTMODIFIED >= TO_DATE('2014-08-27 17:36:23', 'YYYY-MM-DD HH24:MI:SS') AND LASTMODIFIED < TO_DATE('2014-08-27 17:47:19', 'YYYY-MM-DD HH24:MI:SS') )

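Here the lower bound of LASTMODIFIED is the upper bound from the previous run. In other words, for incremental import jobs the Saved Jobs mechanism automatically records the time of the last execution and assigns it to --last-value for the next run. All we need to do is schedule the job with crontab; the Saved Jobs mechanism keeps --last-value up to date, which gives us true incremental import.

The row newly added to the Oracle table was successfully imported into the Hive table.

I then added another row to the Oracle table and ran the job once more. The behavior was the same: the log shows that the previous upper bound automatically became the lower bound of this import: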
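Scheduling the saved job from crontab could look roughly like the wrapper below. It is a minimal sketch, not a tested deployment: SQOOP_BIN, LOG_FILE, and run_saved_job are hypothetical names, the Sqoop path is taken from the shell prompt in this experiment, and the log location is an arbitrary choice.

```shell
#!/bin/sh
# Sketch only: wrapper that cron could call to run the saved job and keep a log.
# SQOOP_BIN, LOG_FILE and run_saved_job are hypothetical names for illustration.

SQOOP_BIN="${SQOOP_BIN:-$HOME/Sqoop/sqoop-1.4.4/bin/sqoop}"
LOG_FILE="${LOG_FILE:-/tmp/sqoop-incretest.log}"

run_saved_job() {
  local job="$1"
  echo "$(date '+%Y-%m-%d %H:%M:%S') starting job ${job}" >> "$LOG_FILE"
  # Executes the saved job; the stored --last-value is maintained by Sqoop itself.
  "$SQOOP_BIN" job --exec "$job" >> "$LOG_FILE" 2>&1
}

# Run only when a job name is passed, e.g. ./run_incremental.sh incretest
if [ "$#" -gt 0 ]; then
  run_saved_job "$1"
fi
```

Assuming the script is saved as /home/hadoop/run_incremental.sh (a made-up path), a crontab entry such as `0 * * * * /home/hadoop/run_incremental.sh incretest` would run it at the top of every hour. Note that by default Sqoop does not store the database password with a saved job and prompts for it on execution, so unattended runs need the sqoop.metastore.client.record.password property enabled or another non-interactive way of supplying the password.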

14/08/27 17:59:34 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(ID), MAX(ID) FROM FBI_SQOOPTEST WHERE ( LASTMODIFIED >= TO_DATE('2014-08-27 17:47:19', 'YYYY-MM-DD HH24:MI:SS') AND LASTMODIFIED < TO_DATE('2014-08-27 17:59:34', 'YYYY-MM-DD HH24:MI:SS') )
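Besides reading the log, the stored bound can also be checked by inspecting the saved job definition. The helper below is a small sketch that assumes `sqoop job --show` prints one "key = value" property per line and that the bound is kept under the key incremental.last.value; the function name extract_last_value is made up for this example, and the key should be checked against the output of your own installation.

```shell
#!/bin/sh
# Sketch only: pull the stored lower bound out of a saved job's property listing.
# Assumes "key = value" lines and the key incremental.last.value (verify locally).

extract_last_value() {
  # Reads job properties on stdin and prints only the value of incremental.last.value.
  sed -n 's/^incremental\.last\.value *= *//p'
}

# Usage:
#   sqoop job --show incretest | extract_last_value
```

If the assumption holds, the printed value after each run should match the upper bound of the preceding BoundingValsQuery in the log.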

边栏推荐

猜你喜欢

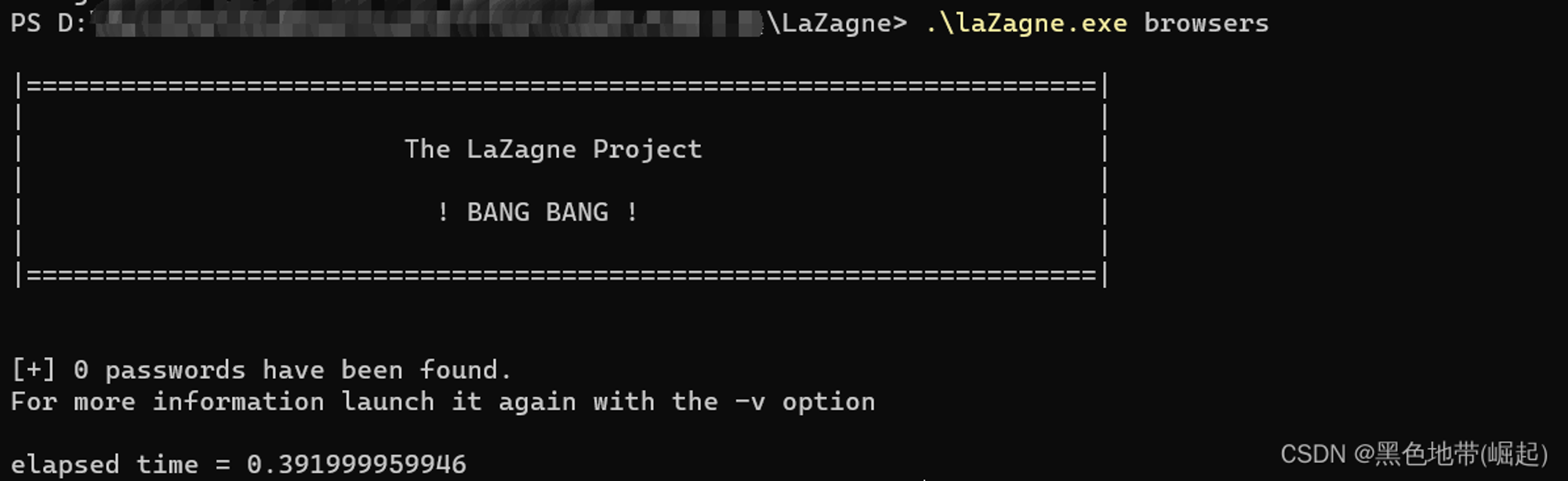

【附下载】密码获取工具LaZagne安装及使用

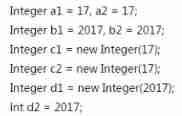

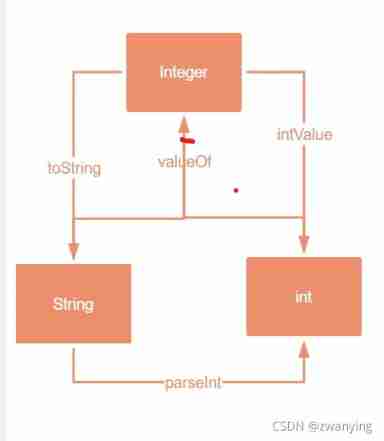

Integer int compare size

(构造笔记)ADT与OOP

Summary of development issues

Integer string int mutual conversion

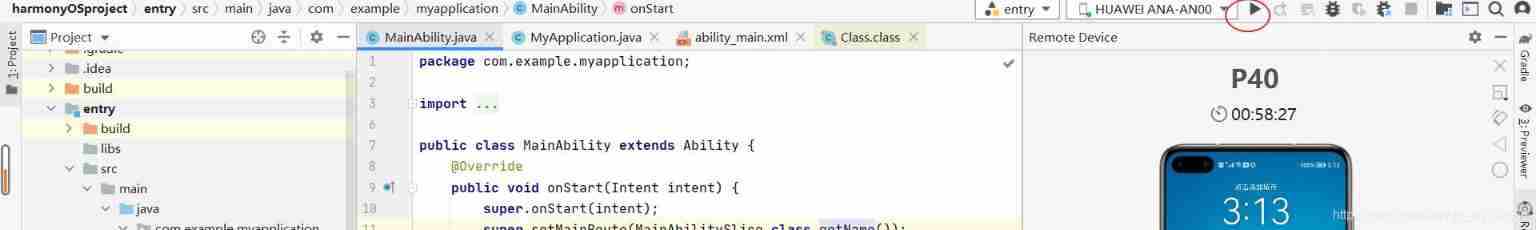

Take you to the installation and simple use tutorial of the deveco studio compiler of harmonyos to create and run Hello world?

With pictures and texts, summarize the basic review of C language in detail, so that all kinds of knowledge points are clear at a glance?

LeetCode 0556. Next bigger element III - end of step 4

Shutter: add gradient stroke to font

OpenGL draws colored triangles

随机推荐

Flutter 退出登录二次确认怎么做才更优雅?

Apprendre à concevoir des entités logicielles réutilisables à partir de la classe, de l'API et du cadre

Socket TCP for network communication (I)

Kubectl_ Command experience set

(construction notes) grasp learning experience

SystemVerilog -- OOP -- copy of object

PHP get the file list and folder list under the folder

023 ([template] minimum spanning tree) (minimum spanning tree)

实现验证码验证

Sword finger offer05 Replace spaces

elastic_ L01_ summary

Wechat applet pages always report errors when sending values to the background. It turned out to be this pit!

Sword finger offer03 Repeated numbers in the array [simple]

adb push apk

(构造笔记)GRASP学习心得

[official MySQL document] deadlock

雲計算未來 — 雲原生

双链笔记·思源笔记综合评测:优点、缺点、评价

Flutter Widget : Flow

[ManageEngine] the role of IP address scanning