
Reproducing Recommendation Models (III): Recall Models YoutubeDNN and DSSM

2022-06-29 12:40:00 【GoAI】

This is the third installment of the recommendation-model reproduction series. It uses the torch_rechub framework to build the models and introduces two recall models used in recommender systems, YoutubeDNN and DSSM, covering both their structure and hands-on code, with reference to other articles.


1. DSSM

1.1 DSSM Model principle

DSSM (Deep Structured Semantic Model), proposed by Microsoft Research, is a text-similarity matching algorithm that uses a deep neural network to represent text as low-dimensional vectors. It is not limited to text: it applies to any scenario where a similarity can be computed, such as recommender systems. Using log data of user search behavior, namely queries (the search text) and docs (the text to be matched), a deep network maps the query and the doc into a semantic space of the same dimension, producing a query-side embedding and a doc-side embedding. This yields a low-dimensional semantic vector for each sentence (a sentence embedding), which is used to predict the semantic similarity of two sentences.
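To make the matching step concrete, here is a minimal stdlib-only sketch of how a query is scored against candidate docs once both are embedded: cosine similarity serves as the relevance R(Q, D), and a softmax over the candidates, scaled by a smoothing factor gamma, turns the scores into P(D|Q), as in the original DSSM training objective. The embedding networks are omitted, and the toy vectors and gamma = 10 are illustrative assumptions.

```python
import math


def cosine(u, v):
    """Cosine similarity R(Q, D) between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def doc_posterior(query_vec, doc_vecs, gamma=10.0):
    """Softmax over candidate docs: P(D|Q) = exp(gamma*R) / sum over D' of exp(gamma*R')."""
    scores = [gamma * cosine(query_vec, d) for d in doc_vecs]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Toy embeddings in a shared 3-d semantic space (illustrative values only)
query = [0.9, 0.1, 0.0]
docs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print([round(p, 3) for p in doc_posterior(query, docs)])
```

The doc pointing in nearly the same direction as the query receives almost all of the probability mass.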

1.2 DSSM structure

Model structure: the user-side tower and the item-side tower each pass their inputs through their own DNN to obtain an embedding, and then the similarity between the two embeddings is computed.

Characteristics:

- The final embeddings on the user side and the item side must have the same dimension

- Computing the similarity against every item in the item pool is approximated with negative sampling

- Recall is fast even over massive candidate sets
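Because the item tower does not depend on the user, item embeddings can be computed offline, and only the user vector has to be computed per request; this is what makes two-tower recall fast. A minimal stdlib sketch, assuming the embeddings already exist: the helper names `build_item_index` and `recall_top_k` are hypothetical, and the brute-force loop stands in for the approximate nearest-neighbor index (for example Faiss) that a production system would typically use.

```python
import heapq
import math


def l2_normalize(v):
    """Scale a vector to unit length so the dot product equals cosine similarity."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def build_item_index(item_vectors):
    """Offline step: normalize every item embedding once and keep it by item id."""
    return {item_id: l2_normalize(vec) for item_id, vec in item_vectors.items()}


def recall_top_k(user_vec, item_index, k):
    """Online step: one dot product per item, then return the k best item ids."""
    u = l2_normalize(user_vec)
    scores = ((sum(a * b for a, b in zip(u, vec)), item_id) for item_id, vec in item_index.items())
    return [item_id for _, item_id in heapq.nlargest(k, scores)]


# Usage with toy 2-d item embeddings
index = build_item_index({"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]})
print(recall_top_k([1.0, 0.2], index, 2))  # → ['a', 'c']
```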

**Shortcoming:** The two-tower structure cannot model interactions between features on the two sides, which sacrifices some of the model's accuracy.

1.3 Positive and negative sample construction

Positive samples: taking content recommendation as an example, items the user clicked are chosen as positive samples. In addition, the user's dwell time can be taken into account to rule out accidental clicks.
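A minimal sketch of the dwell-time filter for positives. The `Click` record and the 5-second threshold are hypothetical choices for illustration; a suitable threshold depends on the content type.

```python
from dataclasses import dataclass


@dataclass
class Click:
    user_id: str
    item_id: str
    dwell_seconds: float  # time the user stayed on the item after clicking


def positive_samples(clicks, min_dwell_seconds=5.0):
    """Keep clicks with enough dwell time as (user, item) positives; drop likely accidental clicks."""
    return [(c.user_id, c.item_id) for c in clicks if c.dwell_seconds >= min_dwell_seconds]
```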

Negative samples: samples where the user and the item do not match are negative samples.

- Global random sampling: a number of items are drawn at random from the global candidate pool as negatives for the recall model, but this may lead to a long-tail problem.

- Global random sampling + popularity suppression: popular items are sampled as negatives at an appropriately adjusted rate, which reduces the influence of popularity on retrieval and improves the model's ability to distinguish similar items.

- Hard-negative augmentation: select some items with a moderate degree of match as negatives to make training harder for the model.

- In-batch random negatives: use the positives of other samples in the same batch, drawn at random, as a sample's own negatives.
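The sampling strategies above can be sketched with the standard library. This is an illustration under stated assumptions, not torch_rechub's sampler: `popularity_negatives` weights items by click count raised to 0.75 (the exponent word2vec uses for its negative-sampling distribution), which is one common way to implement popularity suppression. All function names are hypothetical, and hard-negative mining is omitted because it needs a trained model to find "moderately matching" items.

```python
import random
from collections import Counter


def uniform_negatives(all_items, positives, n, rng):
    """Global random sampling: draw n items uniformly, skipping the user's positives."""
    candidates = [i for i in all_items if i not in positives]
    return [rng.choice(candidates) for _ in range(n)]


def popularity_negatives(click_log, positives, n, rng, power=0.75):
    """Popularity-weighted sampling: weight = count ** power, so hot items appear more often as negatives."""
    counts = Counter(click_log)
    items = [i for i in counts if i not in positives]
    weights = [counts[i] ** power for i in items]
    return rng.choices(items, weights=weights, k=n)


def in_batch_negatives(batch_items):
    """In-batch negatives: for row j, every other row's positive item is a negative.

    Duplicate items inside one batch are not filtered out in this sketch.
    """
    return [[item for k, item in enumerate(batch_items) if k != j] for j in range(len(batch_items))]


# Usage: a seeded generator keeps the draws reproducible
rng = random.Random(0)
print(uniform_negatives(["a", "b", "c"], {"a"}, 3, rng))
print(in_batch_negatives(["x", "y", "z"]))  # → [['y', 'z'], ['x', 'z'], ['x', 'y']]
```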

1.4 DSSM code

```python
import torch
import torch.nn.functional as F
from torch_rechub.basic.layers import MLP, EmbeddingLayer


class DSSM(torch.nn.Module):
    """Two-tower DSSM: user and item features pass through separate MLP towers."""

    def __init__(self, user_features, item_features, user_params, item_params, temperature=1.0):
        super().__init__()
        self.user_features = user_features
        self.item_features = item_features
        self.temperature = temperature
        # Input width of each tower = sum of the embedding dims of its features
        self.user_dims = sum([fea.embed_dim for fea in user_features])
        self.item_dims = sum([fea.embed_dim for fea in item_features])
        # One embedding layer holds the tables for both sides
        self.embedding = EmbeddingLayer(user_features + item_features)
        self.user_mlp = MLP(self.user_dims, output_layer=False, **user_params)
        self.item_mlp = MLP(self.item_dims, output_layer=False, **item_params)
        # None during training; "user" or "item" to export one tower's embeddings
        self.mode = None

    def forward(self, x):
        user_embedding = self.user_tower(x)
        item_embedding = self.item_tower(x)
        if self.mode == "user":
            return user_embedding
        if self.mode == "item":
            return item_embedding
        # Both embeddings are L2-normalized, so this dot product is the cosine similarity
        y = torch.mul(user_embedding, item_embedding).sum(dim=1)
        # Note: self.temperature is stored in __init__ but not applied to y here
        return torch.sigmoid(y)

    def user_tower(self, x):
        if self.mode == "item":
            return None
        input_user = self.embedding(x, self.user_features, squeeze_dim=True)
        # user DNN
        user_embedding = self.user_mlp(input_user)
        user_embedding = F.normalize(user_embedding, p=2, dim=1)
        return user_embedding

    def item_tower(self, x):
        if self.mode == "user":
            return None
        input_item = self.embedding(x, self.item_features, squeeze_dim=True)
        # item DNN
        item_embedding = self.item_mlp(input_item)
        item_embedding = F.normalize(item_embedding, p=2, dim=1)
        return item_embedding
```
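`DSSM.forward` feeds the dot product of two L2-normalized vectors (their cosine similarity, which lies in [-1, 1]) into a sigmoid, so training is point-wise binary classification on (user, item, label) rows. The stdlib sketch below shows the resulting loss. One consequence worth noting: without any scaling, the predicted probability is confined to [sigmoid(-1), sigmoid(1)]; dividing by a temperature below 1 widens that range. The temperature division here is an assumption for illustration, since the DSSM code stores `self.temperature` without applying it; the function names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dssm_pointwise_loss(cos_sim, label, temperature=1.0):
    """Binary cross-entropy on sigmoid(cos_sim / temperature); label 1 = clicked, 0 = negative."""
    p = sigmoid(cos_sim / temperature)
    return -(label * math.log(p) + (1 - label) * math.log(1 - p))


# With temperature=1 the predicted probability can only lie in [sigmoid(-1), sigmoid(1)]
print(round(sigmoid(-1.0), 3), round(sigmoid(1.0), 3))  # → 0.269 0.731
```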

2. YoutubeDNN

2.1 YoutubeDNN Model principle

YoutubeDNN is YouTube's production model for video recommendation and a classic in recommender systems. The overall idea is to use simple models in the recall stage to filter out the large number of weakly relevant candidates, and then use a more complex model in the ranking stage to obtain accurate recommendations.
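The recall-then-rank funnel can be expressed as two successive top-k selections. In this hypothetical sketch, `cheap_score` stands for a fast recall model scored over every candidate and `expensive_score` for a heavier ranking model applied only to the survivors; both are placeholder callables, not part of any library.

```python
import heapq


def two_stage_recommend(candidates, cheap_score, expensive_score, n_recall, k):
    """Recall n_recall items with a cheap scorer, then rank those survivors with an expensive one."""
    recalled = heapq.nlargest(n_recall, candidates, key=cheap_score)
    return heapq.nlargest(k, recalled, key=expensive_score)


# Toy usage: recall the 3 largest ids, then let the "ranker" prefer smaller ids
print(two_stage_recommend(range(10), lambda x: x, lambda x: -x, n_recall=3, k=2))  # → [7, 8]
```

The expensive scorer only ever sees `n_recall` items, which is why the ranking model can afford to be much heavier than the recall model.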

2.2 YoutubeDNN structure

Recall (candidate generation): the main input is the user's watch history, and the output is a set of candidate videos relevant to the user;

Ranking: the focus is on feature engineering, model design, and training methods;

Evaluation: commonly used offline metrics are computed, and A/B experiments are then used to observe real user behavior;
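The article does not name the offline metrics. Recall@k and hit rate@k are two commonly used ones for recall models, so the sketch below is an assumption about what the "commonly used metrics" might include; the function names are hypothetical.

```python
def recall_at_k(recommended, relevant, k):
    """Fraction of the user's relevant items that appear in the top-k recommendations."""
    if not relevant:
        return 0.0
    hits = len(set(recommended[:k]) & set(relevant))
    return hits / len(relevant)


def hit_rate_at_k(recommended_lists, held_out_items, k):
    """Share of users whose single held-out item appears in their top-k list."""
    hits = sum(1 for recs, item in zip(recommended_lists, held_out_items) if item in recs[:k])
    return hits / len(held_out_items)


print(recall_at_k(["a", "b", "c"], ["a", "d"], 2))  # → 0.5
```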

2.2.1 YoutubeDNN Recall model

- The input layer consists of the mean-pooled embedding of the user's watched-video sequence, the mean-pooled embedding of search terms, the geographic embedding, and other user features;

- The input layer feeds three fully connected layers with ReLU activations, which produce the user vector;

- Finally, a softmax layer gives the probability of each video being watched.
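The recall network described above can be sketched in plain Python to show the data flow: mean pooling, concatenation, stacked ReLU layers, then a softmax over videos. The weights are toy values passed in by hand (a real model learns them), the function names are hypothetical, and the sketch accepts any number of layers rather than hard-coding three. In the original paper the full softmax is used for training; at serving time the user vector is matched against video vectors with a nearest-neighbor search in the dot-product space.

```python
import math


def mean_pool(vectors):
    """Average a non-empty list of equal-length embeddings (watch history, search tokens)."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def relu_layer(x, weights, bias):
    """Fully connected layer with ReLU; weights is a list of rows (out_dim x in_dim)."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(weights, bias)]


def user_vector(watch_embs, search_embs, geo_emb, other_feats, layers):
    """Concatenate the pooled inputs with the other features, then apply the ReLU layers."""
    x = mean_pool(watch_embs) + mean_pool(search_embs) + geo_emb + other_feats
    for weights, bias in layers:
        x = relu_layer(x, weights, bias)
    return x


def softmax_over_videos(user_vec, video_embs):
    """Watch probability of each video: softmax of the user-video dot products."""
    logits = [sum(u * v for u, v in zip(user_vec, emb)) for emb in video_embs]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]
```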

2.2.2 Training data selection

- Sampling method: negative sampling (similar to the sampling used in skip-gram)

- Sample source: the watch records of all YouTube users, including videos they watched through other channels
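Negative sampling turns the softmax over the whole video corpus into a softmax over the positive video plus a handful of sampled negatives. The sketch below mirrors the layout used by the `YoutubeDNN.forward` code in section 2.3 (positive item first, logits divided by a temperature), so it can be trained as cross-entropy with target index 0. It is a stdlib illustration with a hypothetical function name, not the torch_rechub training loop.

```python
import math


def sampled_softmax_loss(user_vec, pos_vec, neg_vecs, temperature=1.0):
    """Cross-entropy over [positive] + sampled negatives, with the positive at index 0."""
    vecs = [pos_vec] + list(neg_vecs)
    logits = [sum(u * v for u, v in zip(user_vec, vec)) / temperature for vec in vecs]
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[0]  # equals -log(softmax(logits)[0])
```

The more the user vector prefers the positive over the negatives, the closer the loss gets to 0.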

Notes:

- Generate the same number of training samples for each user, so that every user carries equal weight in the loss function;

- Do not let the model see information it should not know, i.e., avoid information leakage (for example, behavior that happens after the target video).
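Both notes can be enforced when building training examples. A minimal sketch, assuming each user's history is a chronologically ordered list of item ids: every example uses only the items before its target as input, and users with many examples are capped at `max_per_user` by random subsampling. This is one simple way to approximate a fixed number of examples per user (users with short histories still contribute fewer); the names are hypothetical.

```python
import random


def build_examples(user_histories, max_per_user, rng):
    """Make (user, history_before_t, target_t) examples, keeping at most max_per_user per user."""
    examples = []
    for user_id, items in user_histories.items():
        # The input history is only what was watched before the target, so no future behavior leaks in
        pairs = [(user_id, items[:t], items[t]) for t in range(1, len(items))]
        if len(pairs) > max_per_user:
            pairs = rng.sample(pairs, max_per_user)
        examples.extend(pairs)
    return examples
```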

2.2.3 Example Age feature

- What: Example Age is a video-age feature, i.e., it reflects when the video was released.

- Background: users tend to prefer watching new videos, so without this feature the model's expected play predictions for videos are inaccurate.

- Effect: it captures the life cycle of a video, lets the model learn users' bias toward fresh content, and removes the popularity bias.

- Operation: at online prediction time, example age is set to 0 or a small negative value for all videos, so it does not depend on each video's upload time.

- Benefits: because example age is set to a constant, the user vector only needs to be computed once; and for different videos, example age falls in the same range, depending only on the time span chosen for the training data, which makes normalization convenient.
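One common way to implement this, consistent with the "benefits" point above, is to measure example age relative to the end of the training window and scale it by the window length, so every value falls in [0, 1]. The sketch assumes times in days and uses hypothetical names; it is one interpretation, not a definitive implementation.

```python
def example_age_feature(log_time, window_end, window_span):
    """Training-time feature: how long before the end of the training window the sample was logged, scaled to [0, 1]."""
    age = window_end - log_time
    return min(max(age / window_span, 0.0), 1.0)


# At serving time the feature is fixed to 0 (a small negative value is the other option)
SERVING_EXAMPLE_AGE = 0.0

print(example_age_feature(log_time=10.0, window_end=30.0, window_span=30.0))
```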

2.3 YoutubeDNN Code

```python
import torch
import torch.nn.functional as F
from torch_rechub.basic.layers import MLP, EmbeddingLayer


class YoutubeDNN(torch.nn.Module):
    """YoutubeDNN recall: only the user side has an MLP tower; items use their embeddings directly."""

    def __init__(self, user_features, item_features, neg_item_feature, user_params, temperature=1.0):
        super().__init__()
        self.user_features = user_features
        self.item_features = item_features
        self.neg_item_feature = neg_item_feature
        self.temperature = temperature
        self.user_dims = sum([fea.embed_dim for fea in user_features])
        self.embedding = EmbeddingLayer(user_features + item_features)
        self.user_mlp = MLP(self.user_dims, output_layer=False, **user_params)
        self.mode = None

    def forward(self, x):
        user_embedding = self.user_tower(x)  # (batch, 1, dim)
        item_embedding = self.item_tower(x)  # (batch, 1 + n_neg, dim)
        if self.mode == "user":
            return user_embedding
        if self.mode == "item":
            return item_embedding
        # Similarity of the user with the positive item and each negative: (batch, 1 + n_neg)
        y = torch.mul(user_embedding, item_embedding).sum(dim=2)
        # Temperature-scaled logits; the positive item sits at index 0
        y = y / self.temperature
        return y

    def user_tower(self, x):
        # Used in the inference_embedding stage: skip the user side when exporting item embeddings
        if self.mode == "item":
            return None
        input_user = self.embedding(x, self.user_features, squeeze_dim=True)
        user_embedding = self.user_mlp(input_user).unsqueeze(1)  # (batch, 1, dim)
        user_embedding = F.normalize(user_embedding, p=2, dim=2)
        if self.mode == "user":
            return user_embedding.squeeze(1)  # (batch, dim)
        return user_embedding

    def item_tower(self, x):
        if self.mode == "user":
            return None
        # (batch, 1, dim), assuming a single item-id feature
        pos_embedding = self.embedding(x, self.item_features, squeeze_dim=False)
        pos_embedding = F.normalize(pos_embedding, p=2, dim=2)
        if self.mode == "item":
            return pos_embedding.squeeze(1)  # (batch, dim)
        # Embeddings of the sampled negative items: (batch, n_neg, dim)
        neg_embeddings = self.embedding(x, self.neg_item_feature, squeeze_dim=False).squeeze(1)
        neg_embeddings = F.normalize(neg_embeddings, p=2, dim=2)
        # Positive first, then negatives, along the candidate axis
        return torch.cat((pos_embedding, neg_embeddings), dim=1)
```

3. Summary

- DSSM is a two-tower model: the user and the item each pass through their own DNN to obtain an embedding, and then the similarity between the two is computed. In the training samples, positives are the correct search targets, and negatives come from global random sampling plus popularity-weighted (hot-item) negatives.

- YoutubeDNN builds on the two-tower model: in the recall stage, simple models filter out the large number of weakly relevant samples, and in the ranking stage a more complex model produces accurate recommendations.


边栏推荐

- 【LeetCode】14、最长公共前缀

- Baidu cloud disk downloads large files without speed limit (valid for 2021-11 personal test)

- Paper reproduction - ac-fpn:attention-guided context feature pyramid network for object detection

- Ttchat x Zadig open source co creates helm access scenarios, and environmental governance can be done!

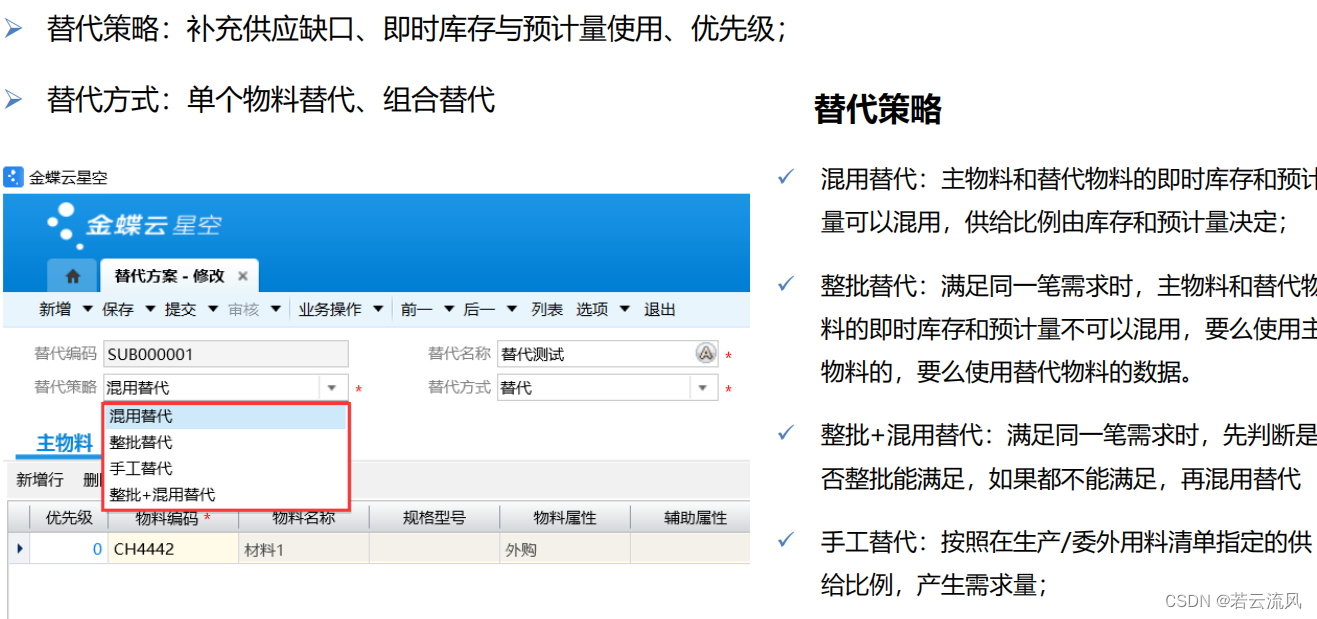

- ERP编制物料清单 金蝶

- 《高难度谈话》突破谈话瓶颈,实现完美沟通

- 一种可解释的几何深度学习模型,用于基于结构的蛋白质结合位点预测

- GBase8s数据库在组合查询中的集合运算符

- Syntax of gbase8s database incompatible with for update clause

- Go Senior Engineer required course | I sincerely suggest you listen to it. Don't miss it~

猜你喜欢

随机推荐

go 学习-搭建开发环境vscode开发环境golang

Bison uses error loop records

Titanium dynamic technology: our Zadig landing Road

Principle and process of MySQL master-slave replication

GBase8s数据库INTO TEMP 子句创建临时表来保存查询结果。

Mysql database master-slave synchronization, consistency solution

Unexpected ‘debugger‘ statement no-debugger

墨菲安全入选中关村科学城24个重点项目签约

Introduction to multi project development - business scenario Association basic introduction test payroll

Interview shock 61: tell me about MySQL transaction isolation level?

Gbase8s database into table clause

推荐模型复现(一):熟悉Torch-RecHub框架与使用

NvtBack

LeetCode_双指针_中等_328.奇偶链表

Pangolin compilation error: 'numeric_ limits’ is not a member of ‘std’

Unexpected ‘debugger‘ statement no-debugger

GBase8s数据库select有ORDER BY 子句1

一种可解释的几何深度学习模型,用于基于结构的蛋白质结合位点预测

535. encryption and decryption of tinyurl: design a URL simplification system

Cache consistency, delete cache, write cache, cache breakdown, cache penetration, cache avalanche