SVM + SURF + k-means flower classification (MATLAB)

2022-07-27 17:17:00 【Bald head whining devil】

For the detailed algorithm and steps, see the Python edition:

https://editor.csdn.net/md/?articleId=103732613

Training file

clc

clear all

train_path = "g:/flowers/train/";

test_path = "g:/flowers/test/";

K = 100;

build_centers(train_path,K);

% Build the feature vectors of the training set

[x_train,y_train] = cal_vec(train_path,K);

% Feed the feature vectors and labels to the SVM classifier

Train(x_train,y_train);

% Calculate the accuracy of the test set

[predicted_label, accuracy, decision_values] = Test(test_path,K);

function f1 = calcSurfFeature(img)

% Convert to grayscale only when the image has three color channels

if size(img,3) == 3

gray = rgb2gray(img);

else

gray = img;

end

% Detect SURF keypoints

points = detectSURFFeatures(gray);

% Extract a descriptor for each keypoint

[f1, ~] = extractFeatures(gray, points);

end

%% Learn the bag-of-words vocabulary

function centers = learnVocabulary(features,K)

% K is the number of visual words (clusters)

% Use k-means to cluster the pooled descriptors

% [cluster index of each point, cluster centers] = kmeans(data, number of clusters, 'Replicates', number of restarts)

opts = statset('Display','final','MaxIter',1000);

[~, centers] = kmeans(features, K, 'Replicates', 10, 'Options', opts);

end

%% Compute the bag-of-words feature vector of an image from the dictionary

function featVec = calcFeatVec(features, centers,K)

featVec = zeros(1,K);

% m is the number of feature points, n is the descriptor dimension

[m,n] = size(features);

for i = 1:m

fi = features(i,:);

% Each descriptor is 1*n (n = 64 for default SURF) and the dictionary is K*n;

% find the visual word closest to this descriptor

% Replicate the descriptor vertically into K*n

res = repmat(fi, K, 1);

% Euclidean distance to every center

distance = sum((res - centers).^2,2);

distance = sqrt(distance);

% Index of the nearest center

[~,y] = min(distance);

featVec(y) = featVec(y)+1;

end

end

%% Build the dictionary

function build_centers(path,K)

% Collect the class subdirectories of path into cate

sub = dir(path);

cate = [];

features = [];

for i = 1:numel(sub)

if sub(i).name ~= "." && sub(i).name ~= ".." && sub(i).isdir

s = strcat(strcat(path ,sub(i).name),"/");

cate = [cate s];

end

end

for i = 1:length(cate)

imgDir = dir( strcat(cate(i),'*.jpg'));

for j = 1:length(imgDir)

img = imread(strcat(cate(i),imgDir(j).name));

% Compute SURF features

img_f = calcSurfFeature(img);

% Append the descriptors to the feature set

features = [features;img_f];

end

end

[m,n] = size(features);

fprintf("Training set feature matrix: [%d, %d]\n",m,n);

centers = learnVocabulary(features,K);

filename = "g:/flowers/svm/svm_centers.mat";

save(filename,'centers');

end

%% Compute the image feature vectors from the saved centers

function [data_vec,labels] = cal_vec(path,K)

% Load into a struct so that centers is assigned explicitly

dict = load("g:/flowers/svm/svm_centers.mat");

centers = dict.centers;

% Stores the image feature vectors

data_vec = [];

% Stores the image labels (class indices)

labels = [];

% Collect the class subdirectories of path

sub = dir(path);

cate = [];

for i = 1:numel(sub)

if sub(i).name ~= "." && sub(i).name ~= ".." && sub(i).isdir

s = strcat(strcat(path ,sub(i).name),"/");

cate = [cate s];

end

end

for i = 1:length(cate)

imgDir = dir( strcat(cate(i),'*.jpg'));

for j = 1:length(imgDir)

img = imread(strcat(cate(i),imgDir(j).name));

% Compute SURF features

img_f = calcSurfFeature(img);

img_vec = calcFeatVec(img_f, centers,K);

data_vec = [data_vec;img_vec];

labels = [labels,i];

end

end

end

% Train the SVM classifier ('-t 0' selects LIBSVM's linear kernel)

function Train(data_vec,labels)

modle = libsvm_train(labels',data_vec,'-t 0');

save("g:/flowers/svm/svm_model.mat",'modle');

fprintf("Training Done!\n");

end

% Evaluate the SVM on the test set

function [predicted_label, accuracy, decision_values] = Test(path,K)

% Load the SVM model

load("g:/flowers/svm/svm_model.mat");

% Load the dictionary

load("g:/flowers/svm/svm_centers.mat");

% Compute the feature vector of each image

[data_vec,labels] = cal_vec(path,K);

[num_test , y] = size(data_vec);

[predicted_label, accuracy, decision_values] = libsvm_predict(labels',data_vec,modle);

fprintf("Testing Done!\n");

end
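
cal_vec labels every image with the index of its class subdirectory, so a predicted label can be mapped back to a flower name by listing the training subdirectories in the same order. The sketch below is not part of the original code. It builds a temporary directory tree with hypothetical class names (daisy, rose, tulip) in place of g:/flowers/train/, and it assumes that dir returns the subdirectories in the same order on every call.

```matlab
% Build a throwaway directory tree that mimics the training folder.
% The class names are hypothetical placeholders.
root = tempname;
mkdir(root);
names = {'daisy', 'rose', 'tulip'};
for k = 1:numel(names)
    mkdir(fullfile(root, names{k}));
end

% List the class subdirectories the same way cal_vec walks them,
% skipping the '.' and '..' entries.
sub = dir(root);
keep = [sub.isdir] & ~ismember({sub.name}, {'.', '..'});
class_names = {sub(keep).name};

% Pretend the classifier returned label 2 and look up its name.
res = 2;
fprintf('Predicted class: %s\n', class_names{res});

% Clean up the temporary tree.
rmdir(root, 's');
```

In the real pipeline, root would be the train_path used for training, and res would be the label returned by libsvm_predict.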

Prediction file

clc

clear all

img_path = "e:\minist\predict\";

class_flower = ["0","1","2","3","4","5","6","7","8","9"];

K = 100;

for i = 1:5

path = strcat(strcat(img_path,num2str(i)),".jpg");

img = imread(path);

figure(i);

imshow(img);

res = predict(img,K);

xlabel("Predicted result: " + class_flower(res));

end

% Predict the class of a single image

function res = predict(img,K)

load("g:/flowers/svm/svm_centers.mat");

load("g:/flowers/svm/svm_model.mat");

features = calcSurfFeature(img);

featVec = calcFeatVec(features, centers,K);

res = libsvm_predict([1],featVec,modle);

end

function f1 = calcSurfFeature(img)

% Convert to grayscale only when the image has three color channels

if size(img,3) == 3

gray = rgb2gray(img);

else

gray = img;

end

% Detect SURF keypoints

points = detectSURFFeatures(gray);

% Extract a descriptor for each keypoint

[f1, ~] = extractFeatures(gray, points);

end

%% Compute the bag-of-words feature vector of an image from the dictionary

function featVec = calcFeatVec(features, centers,K)

featVec = zeros(1,K);

% m is the number of feature points, n is the descriptor dimension

[m,n] = size(features);

for i = 1:m

fi = features(i,:);

% Each descriptor is 1*n (n = 64 for default SURF) and the dictionary is K*n;

% find the visual word closest to this descriptor

% Replicate the descriptor vertically into K*n

res = repmat(fi, K, 1);

% Euclidean distance to every center

distance = sum((res - centers).^2,2);

distance = sqrt(distance);

% Index of the nearest center

[~,y] = min(distance);

featVec(y) = featVec(y)+1;

end

end
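
The per-descriptor loop in calcFeatVec can also be written without a loop. The following is a minimal sketch of that idea, not the author's code: it uses small made-up 2-D points in place of 64-D SURF descriptors and relies on implicit expansion (MATLAB R2016b or later).

```matlab
% Toy data standing in for SURF descriptors (hypothetical values).
centers  = [0 0; 10 10; 0 10];               % K-by-d dictionary, K = 3, d = 2
features = [1 1; 9 9; 0.5 0; 1 9; 10 11];    % m-by-d descriptors of one image

K = size(centers, 1);

% Squared Euclidean distance between every descriptor and every center,
% using ||f - c||^2 = ||f||^2 - 2*f*c' + ||c||^2. The result is m-by-K.
d2 = sum(features.^2, 2) - 2 * (features * centers') + sum(centers.^2, 2)';

% Index of the nearest center for each descriptor.
[~, nearest] = min(d2, [], 2);

% Count how many descriptors fall on each visual word: a 1-by-K histogram.
featVec = accumarray(nearest, 1, [K 1])';
disp(featVec)    % prints 2 2 1
```

The square root is skipped because the nearest center is the same under squared and unsquared distances.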

边栏推荐

- AppStore 内购

- Project exercise: the function of checking and modifying tables

- Swift QQ authorized login pit set

- Dynamic memory allocation in C language

- Flex flex flex box layout

- 步 IE 后尘,Firefox 的衰落成必然?

- MySQL: 函数

- Two table joint query 1

- (2)融合cbam的two-stream项目搭建----数据准备

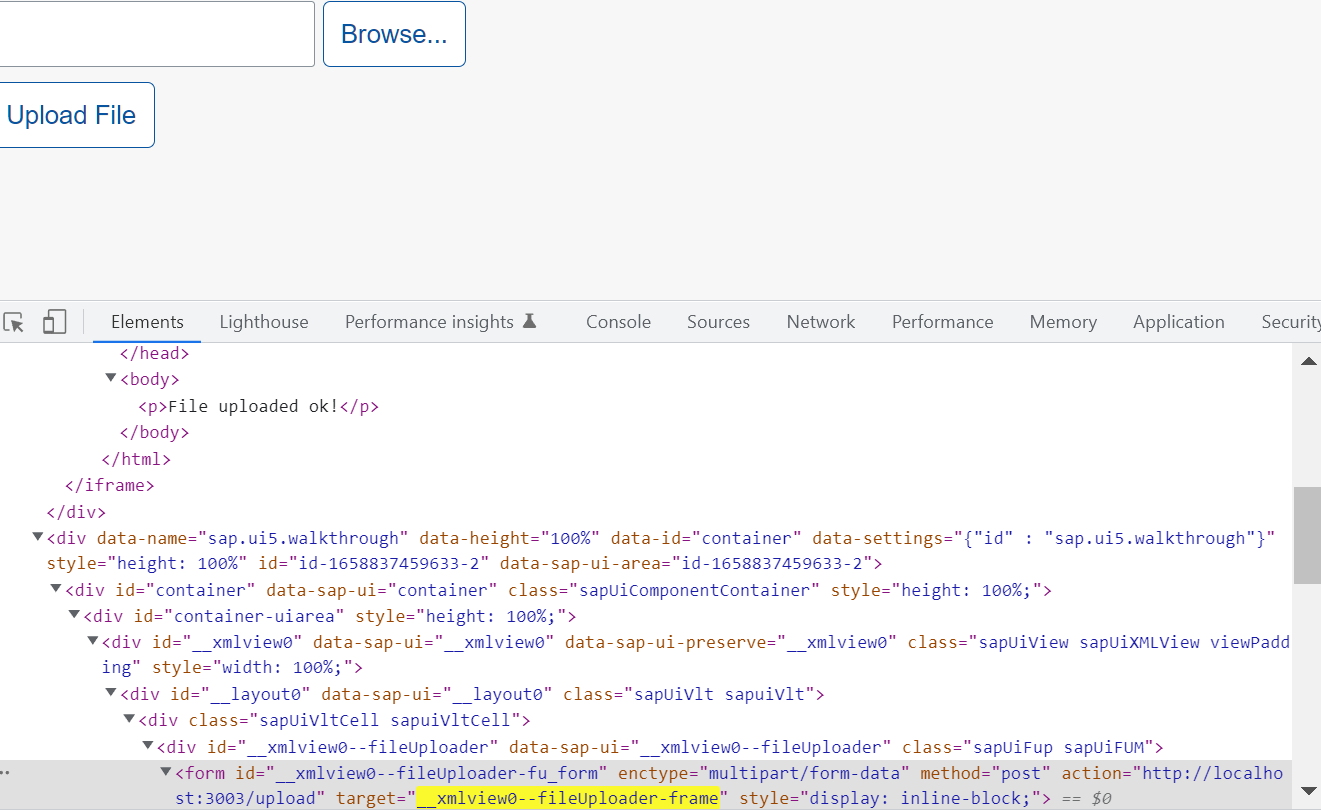

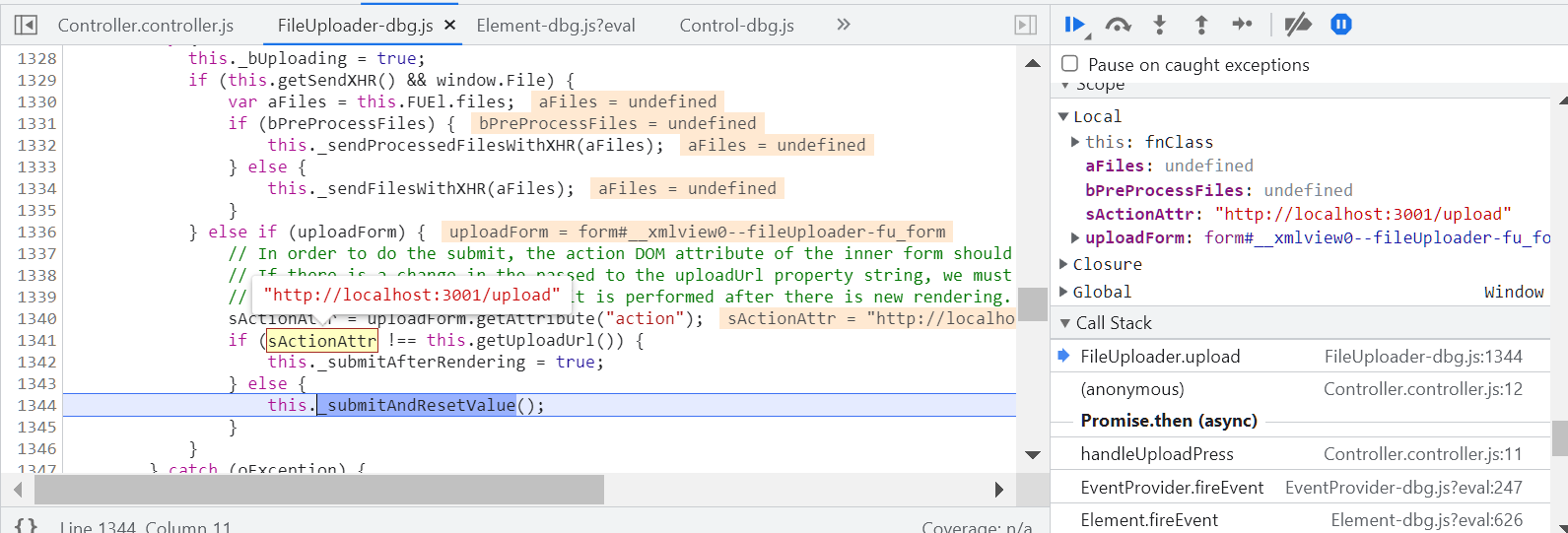

- Design details of hidden iframe and form elements used by SAP ui5 fileuploader

猜你喜欢

SAP UI5 FileUploader 使用的隐藏 iframe 和 form 元素的设计明细

Reference of meta data placeholder

Sharing of local file upload technology of SAP ui5 fileuploader

了解Bom与DOM的基本属性

关于 SAP UI5 应用 ui5.yaml 里的 paths 映射问题

VS2019 C语言如何同时运行多个项目,如何在一个项目中添加多个包含main函数的源文件并分别调试运行

微软默默给 curl 捐赠一万美元,半年后才通知

Three table joint query 1

Structure and bit segment of C language

第7天总结&作业

随机推荐

成本高、落地难、见效慢,开源安全怎么办?

三表联查3

Three table joint query 2

Unity 入门

Shell programming specifications and variables

JD Zhang Zheng: practice and exploration of content understanding in advertising scenes

WebView basic use

(2)融合cbam的two-stream项目搭建----数据准备

微软默默给 curl 捐赠一万美元,半年后才通知

Behind every piece of information you collect, you can't live without TA

腾讯云上传使用

Character stream read file

Kubernetes第八篇:使用kubernetes部署NFS系统完成数据库持久化(Kubernetes工作实践类)

如何通过C#/VB.NET从PDF中提取表格

Shell编程规范与变量

Select structure

Complete steps of JDBC program implementation

md 中超链接的解析问题:解析`this.$set()`,`$`前要加空格或转义符 `\`

Ten thousand words analysis ribbon core components and operation principle

苹果官网罕见打折,iPhone13全系优惠600元;国际象棋机器人弄伤对弈儿童手指;国内Go语言爱好者发起新编程语言|极客头条...