
Awk tools

2022-06-26 13:22:00 【C chord~】

Contents

Three. Common awk built-in variables

3. Calling shell commands through the pipe symbol and double quotes

Introduction

awk is a programming language for processing text and data on Linux/UNIX. It was designed specifically for text processing and works on its input line by line. It is typically used for scanning, filtering, and statistical summaries.

One. Principle

awk reads text line by line, splits each line into fields (by default on spaces or tabs), stores the fields in built-in variables, and runs the given actions on the lines that match the pattern or condition.

sed is usually used to process whole lines, whereas awk tends to split a line into multiple "fields" and then process them.

awk also reads its input line by line; results are displayed by printing field data with print.

In an awk command, the logical operators "&&", "||", and "!" mean "and", "or", and "not".

Simple arithmetic is also available: +, -, *, /, %, and ^ mean addition, subtraction, multiplication, division, remainder, and exponentiation.
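
The field splitting, logical operators, and arithmetic described above can be sketched as follows. The sample data (name, quantity, unit price) and the variable names are made up for illustration and are not from the original article.

```shell
# Hypothetical sample data: name, quantity, unit price (space-separated)
data=$(mktemp)
printf 'apple 3 2\npear 10 5\nplum 7 4\n' > "$data"

# Select lines where quantity > 2 AND price < 5, then print name and total cost
result=$(awk '($2 > 2) && ($3 < 5) {print $1, $2 * $3}' "$data")
echo "$result"

# Exponentiation and remainder, computed in a BEGIN block (no input file needed)
math=$(awk 'BEGIN {print 2^10, 17 % 5}')
echo "$math"

rm -f "$data"
```

Each line is split on whitespace, so $1, $2, and $3 hold the name, quantity, and price without any explicit parsing.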

Two. Command format

- awk options 'pattern or condition {action}' file1 file2 …

- awk -f script-file file1 file2 …
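
As a rough illustration of the second form, the awk program can be kept in its own file and loaded with -f. The file names count.awk and input.txt are hypothetical and are created in a temporary directory.

```shell
# Hypothetical file names, created in a temporary directory
dir=$(mktemp -d)
printf 'a b c\nd e\n' > "$dir/input.txt"

# The awk program lives in a script file instead of on the command line
cat > "$dir/count.awk" <<'EOF'
# Print the number of fields in each line
{ print NF }
EOF

fields=$(awk -f "$dir/count.awk" "$dir/input.txt")
echo "$fields"

rm -rf "$dir"
```

A script file avoids shell quoting problems once the program grows beyond a one-liner.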

Three. Common awk built-in variables

| Variable | Description |
| --- | --- |
| FS | Field separator: specifies how each line of text is split into fields. Defaults to spaces or tabs. Has the same effect as the -F option |
| NF | Number of fields in the line currently being processed |
| NR | Line number (ordinal) of the line currently being processed |
| $0 | The entire content of the line currently being processed |
| $n | The n-th field (n-th column) of the line currently being processed |
| FILENAME | Name of the file being processed |
| RS | Record separator: when reading a file, awk cuts the data into records according to RS and reads and processes one record at a time. The default is '\n' |
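
A minimal sketch of how these variables behave, using a made-up three-field sample rather than a real system file:

```shell
# Hypothetical colon-separated sample data
sample=$(mktemp)
printf 'root:x:0\nbin:x:1\n' > "$sample"

# FS is set in BEGIN; NR and NF are updated for every record
vars=$(awk 'BEGIN {FS=":"} {print NR, NF, $1}' "$sample")
echo "$vars"

# Changing RS makes awk split records on commas instead of newlines
records=$(printf 'a,b,c' | awk 'BEGIN {RS=","} {print NR ": " $0}')
echo "$records"

rm -f "$sample"
```

Setting FS in a BEGIN block is equivalent to passing -F ":" on the command line.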

Examples

1. Output text by line

awk '{print}' like.txt

# Print every line

awk '{print $0}' like.txt

# Print every line ($0 is the whole line)

awk 'NR==1,NR==3{print}' like.txt

# Print lines 1 through 3

awk '(NR>=1)&&(NR<=3){print}' like.txt

# Print lines 1 through 3

awk 'NR==1||NR==3{print}' like.txt

# Print line 1 and line 3

awk '(NR%2)==1{print}' 1.txt

# Print all odd-numbered lines

awk '(NR%2)==0{print}' 1.txt

# Print all even-numbered lines

awk '/^root/{print}' /etc/passwd

# Print the lines that begin with root

awk '/nologin$/{print}' /etc/passwd

# Print the lines that end with nologin

awk 'BEGIN {x=0};/\/bin\/bash$/{x++};END {print x}' /etc/passwd

# Count the lines that end with /bin/bash

# Equivalent to grep -c "/bin/bash$" /etc/passwd

# The actions of the BEGIN pattern run first, before any of the specified text is processed

# awk then processes the specified text, and finally runs the actions of the END pattern

# The END{} block usually holds statements such as printing the result
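
The BEGIN / main / END flow can be tried without touching the real /etc/passwd. The sketch below uses a small passwd-like file with invented accounts and compares the awk count with grep -c.

```shell
# Hypothetical passwd-like sample; the accounts are invented
passwd_like=$(mktemp)
cat > "$passwd_like" <<'EOF'
root:x:0:0:root:/root:/bin/bash
daemon:x:1:1:daemon:/usr/sbin:/usr/sbin/nologin
alice:x:1000:1000:Alice:/home/alice:/bin/bash
EOF

# BEGIN initializes the counter, the middle rule counts matches, END prints the total
count=$(awk 'BEGIN {x = 0} /\/bin\/bash$/ {x++} END {print x}' "$passwd_like")
echo "$count"

# The same count obtained with grep
grep_count=$(grep -c '/bin/bash$' "$passwd_like")
echo "$grep_count"

rm -f "$passwd_like"
```

The explicit x = 0 in BEGIN is optional when at least one line matches, but it guarantees that 0 is printed instead of an empty line when nothing matches.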

2. Output text by field

awk -F ":" '{print $3}' /etc/passwd

# Print the 3rd field of each line (fields separated by ":")

awk -F ":" '{print $1,$3}' /etc/passwd

# Print the 1st and 3rd fields of each line

awk -F ":" '$3<5{print $1,$3}' /etc/passwd

# Print the 1st and 3rd fields of the lines whose 3rd field is less than 5

awk -F ":" '!($3<200){print}' /etc/passwd

# Print the lines whose 3rd field is not less than 200

awk 'BEGIN {FS=":"};{if($3>=200){print}}' /etc/passwd

# Run the BEGIN block first (setting FS to ":"), then print the lines whose 3rd field is at least 200

awk -F ":" '{max=($3>$4)?$3:$4;{print max}}' /etc/passwd

# ($3>$4)?$3:$4 is the ternary operator: if the 3rd field is greater than the 4th field, the 3rd field is assigned to max; otherwise the 4th field is assigned to max

awk -F ":" '{print NR,$0}' /etc/passwd

# Print each line together with its line number; NR increases by 1 for every record processed
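
The field filters and the ternary operator above can be checked against a small file with known values. This is only a sketch; the accounts and IDs are invented.

```shell
# Hypothetical passwd-like sample with known UID ($3) and GID ($4) values
passwd_like=$(mktemp)
cat > "$passwd_like" <<'EOF'
root:x:0:0:root:/root:/bin/bash
games:x:5:60:games:/usr/games:/usr/sbin/nologin
alice:x:1000:100:Alice:/home/alice:/bin/bash
EOF

# Name and UID of the accounts whose UID is below 10
low=$(awk -F ":" '$3 < 10 {print $1, $3}' "$passwd_like")
echo "$low"

# Larger of UID and GID for every line, chosen with the ternary operator
larger=$(awk -F ":" '{max = ($3 > $4) ? $3 : $4; print $1, max}' "$passwd_like")
echo "$larger"

rm -f "$passwd_like"
```

Because the fields look like numbers, awk compares them numerically, so 1000 is treated as greater than 100 rather than being compared as text.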

3. Calling shell commands through the pipe symbol and double quotes

echo $PATH | awk 'BEGIN{RS=":"};END{print NR}'

# Count the colon-separated entries in $PATH; the END{} block usually holds statements such as printing the result

awk -F: '/bash$/{print | "wc -l"}' /etc/passwd

# Call wc -l to count the users whose shell is bash; equivalent to grep -c "bash$" /etc/passwd
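
Piping print into a quoted command works with any shell command, not only wc -l. A small sketch with made-up input (the tr call only strips the padding some wc implementations add):

```shell
# Send every printed line through sort; awk starts the command once and feeds it all lines
sorted=$(printf 'pear\napple\nplum\n' | awk '{print | "sort"}')
echo "$sorted"

# Count only the lines starting with "p" by piping them into wc -l
p_count=$(printf 'pear\napple\nplum\n' | awk '/^p/ {print | "wc -l"}' | tr -d ' ')
echo "$p_count"
```

The command string must be quoted inside the awk program; awk keeps the pipe open until it exits or until close() is called on the same string.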

free -m | awk '/Mem:/ {print int($3/($3+$4)*100)}'

# Show the current memory usage percentage, computed as used / (used + free)

top -b -n 1 | grep Cpu | awk -F ',' '{print $4}' | awk '{print $1}'

# Show the current CPU idle rate (-b -n 1 runs top in batch mode for a single iteration, so the output is produced only once)

date -d "$(awk -F "." '{print $1}' /proc/uptime) second ago" +"%F %H:%M:%S"

# Show the time of the last system boot, comparable to the information uptime provides; "second ago" means that many seconds in the past, and +"%F %H:%M:%S" is the same time format as +"%Y-%m-%d %H:%M:%S"

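awk 'BEGIN {while ("w" | getline) n++ ; {print n-2}}'

# Call the w command and count the online users (n-2 subtracts the two header lines of the w output)

awk 'BEGIN {"hostname" | getline ; {print $0}}'

# Call hostname and print the current host name

seq 10 | awk '{print $0; getline}'

# Print the current line, then use getline to consume the next one: prints the odd lines 1, 3, 5, 7, 9

seq 10 | awk '{getline; print $0}'

# Use getline to replace the current line with the next one, then print it: prints the even lines 2, 4, 6, 8, 10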
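
The getline behavior can be confirmed on a shorter sequence. The sketch below also shows a slightly more defensive loop for reading command output, which tests for a return value greater than 0 so that a read error (-1) does not cause an endless loop. The variable names are illustrative only.

```shell
# print first, then getline: the line fetched by getline is skipped
odd=$(seq 6 | awk '{print $0; getline}')
echo "$odd"

# getline first, then print: $0 has already been replaced by the next line
even=$(seq 6 | awk '{getline; print $0}')
echo "$even"

# Read the output of a command line by line into the variable "line"
n_lines=$(awk 'BEGIN {while (("seq 3" | getline line) > 0) n++; print n}')
echo "$n_lines"
```

Reading into a named variable (getline line) leaves $0 and the fields untouched, which is usually safer inside larger programs.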

边栏推荐

- Electron official docs series: Processes in Electron

- I - Dollar Dayz

- Electron official docs series: Examples

- Ubuntu installation and configuration PostgreSQL (18.04)

- D - skiing

- A collection of common tools for making we media videos

- 8. [STM32] timer (TIM) -- interrupt, PWM, input capture experiment (proficient in timer)

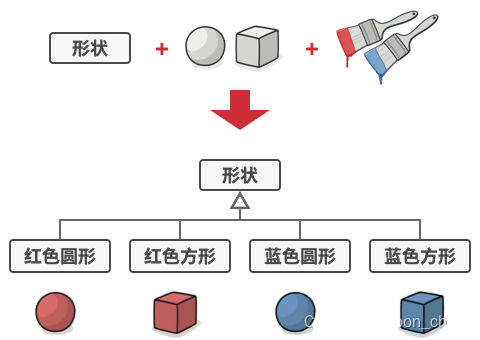

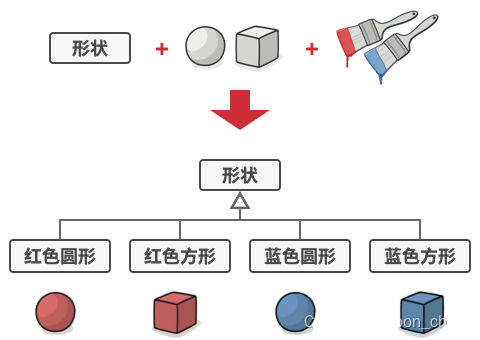

- Bridge mode

- mysql讲解(一)

- ES中索引别名(alias)的到底有什么用

猜你喜欢

Beifu twincat3 can read and write CSV and txt files

scrapy——爬取漫画自定义存储路径下载到本地

橋接模式(Bridge)

ES基于Snapshot(快照)的数据备份和还原

桥接模式(Bridge)

MediaPipe手势(Hands)

Bifu divides EtherCAT module into multiple synchronization units for operation -- use of sync units

8、【STM32】定时器(TIM)——中断、PWM、输入捕获实验(一文精通定时器)

ES6 module

Thinking caused by the error < note: candidate expectations 1 argument, 0 provided >

随机推荐

H5视频自动播放和循环播放

Processing function translate (mousex, mousey) learning

May product upgrade observation station

Processsing function random

What should the software test report include? Interview must ask

7-1 n queen problem

Electron official docs series: Get Started

MySQL数据库讲解(五)

计算两点之间的距离(二维、三维)

防火墙介绍

POJ 3070 Fibonacci

Bigint: handles large numbers (integers of any length)

tauri vs electron

Processing polyhedron change

NVM installation tutorial

J - Wooden Sticks poj 1065

[shell] generate strings between specified dates

MySQL数据库常见故障——遗忘数据库密码

7-2 picking peanuts

Do you know the limitations of automated testing?